Internet Outages Timeline

Dive into the most notable Internet outages. Explore the impact, the root causes, and the key lessons for keeping your Internet Stack resilient.

January

TikTok

What happened?

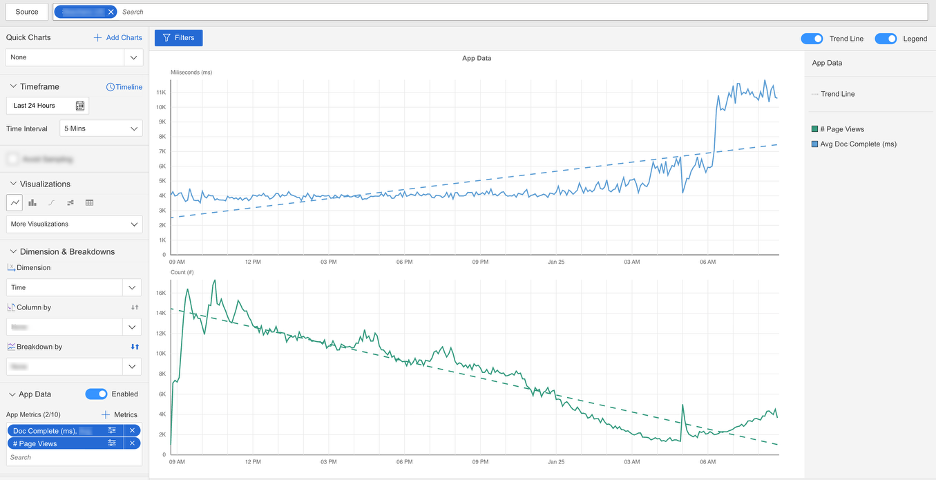

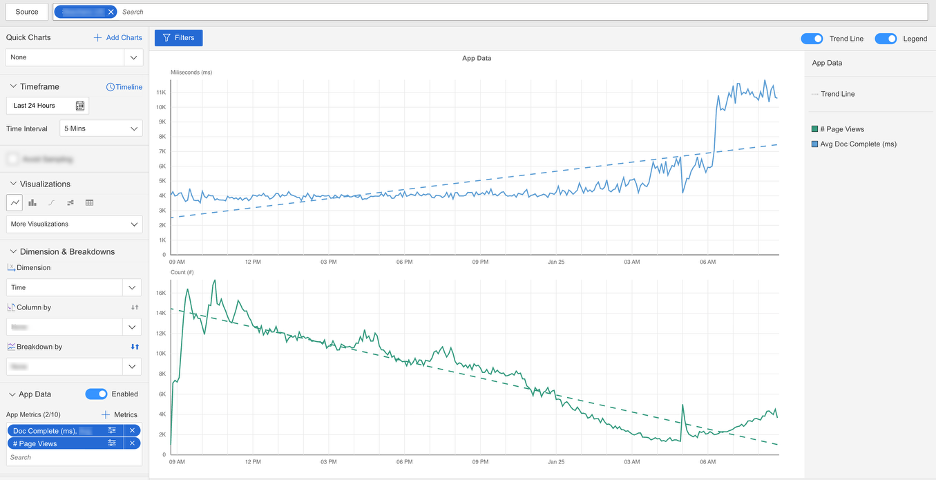

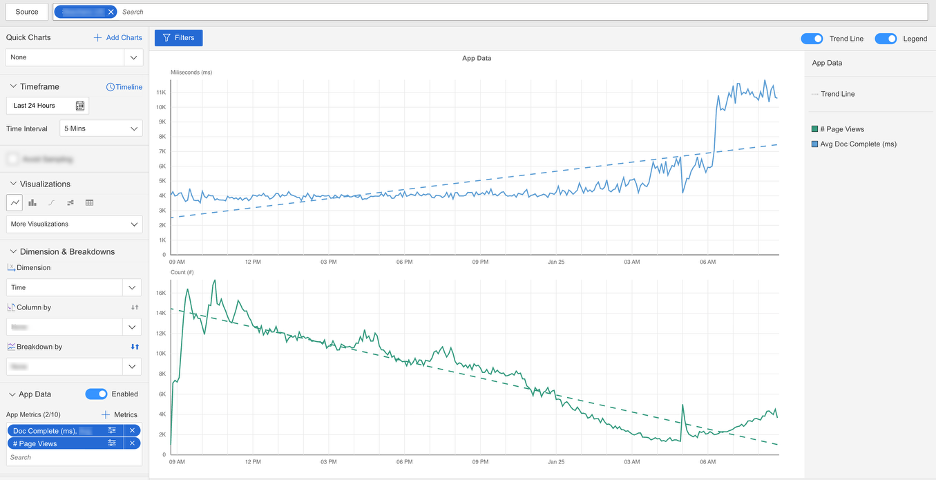

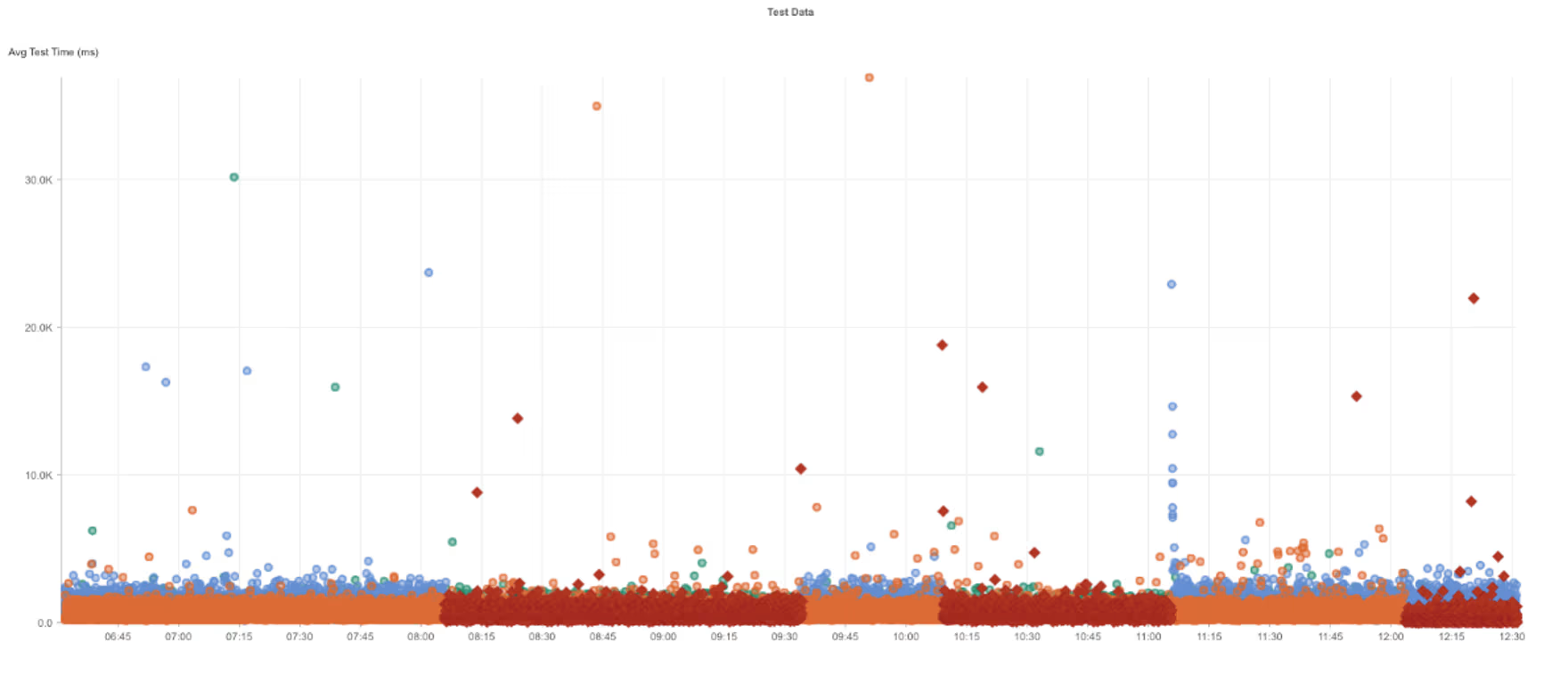

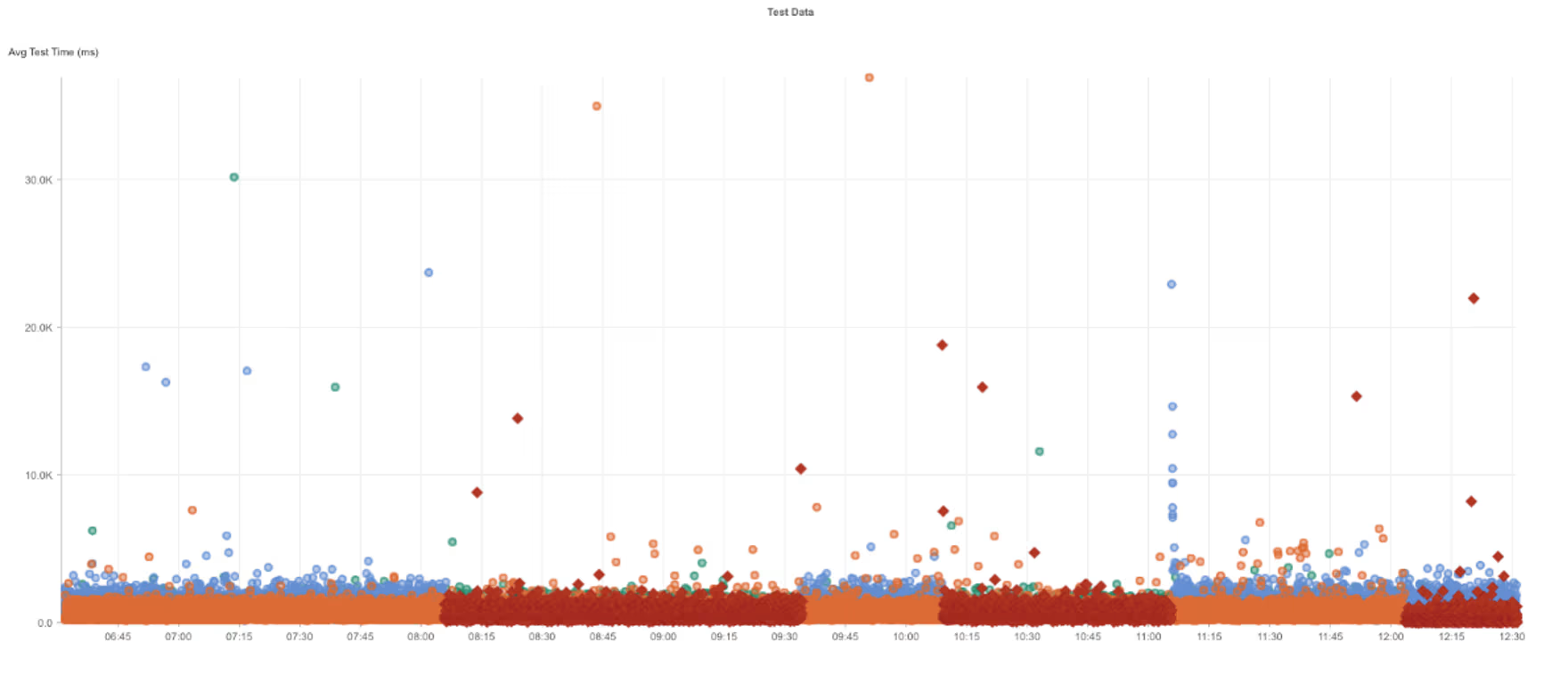

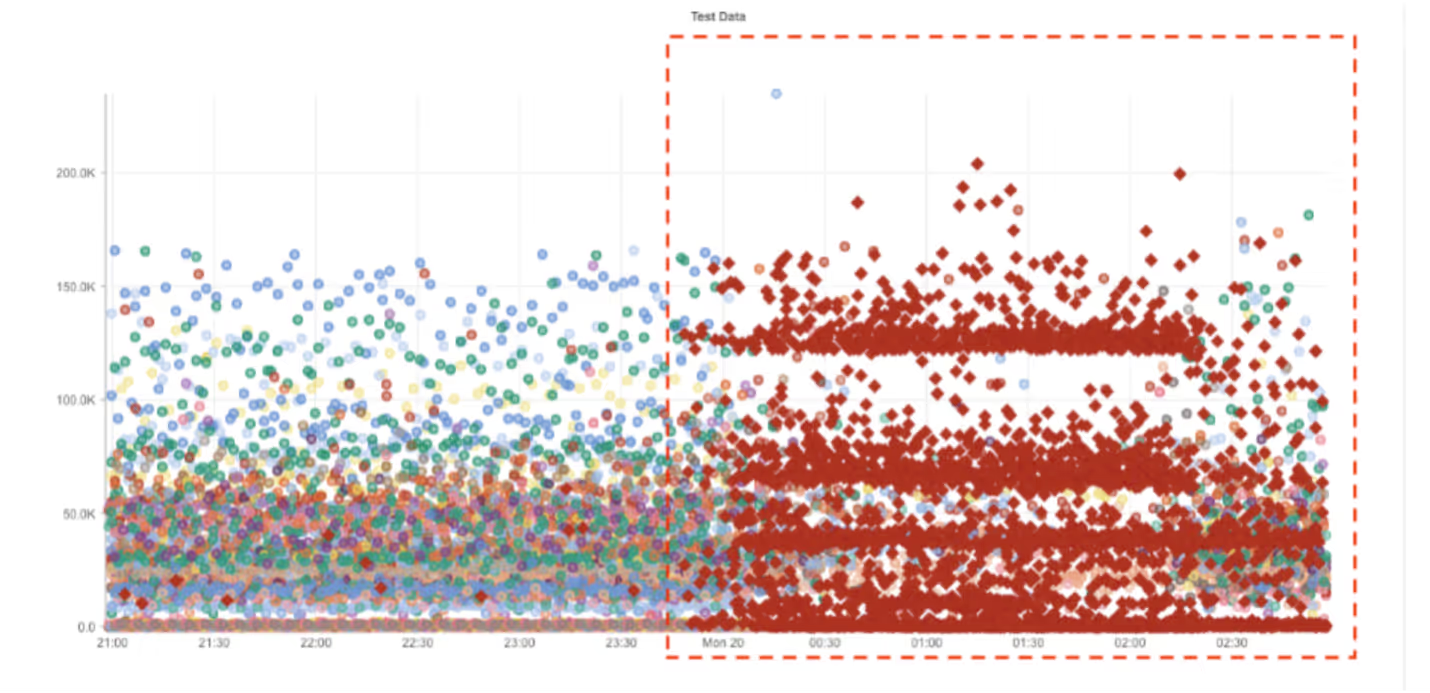

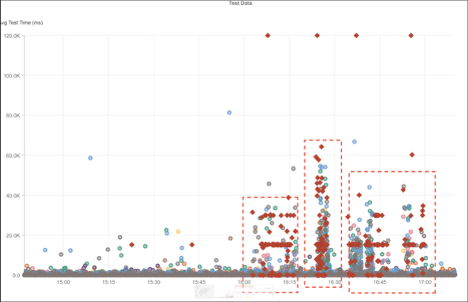

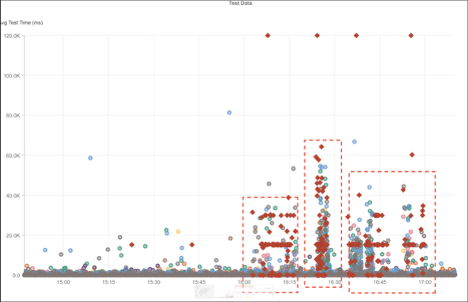

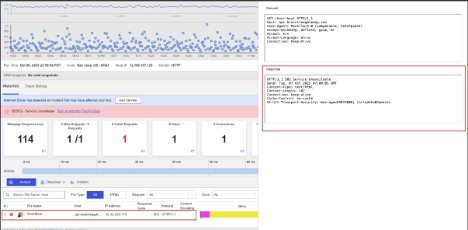

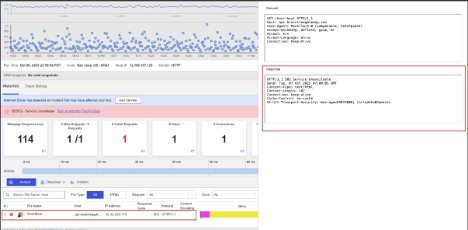

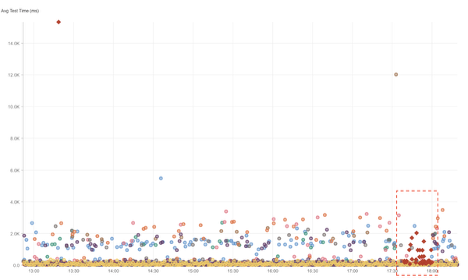

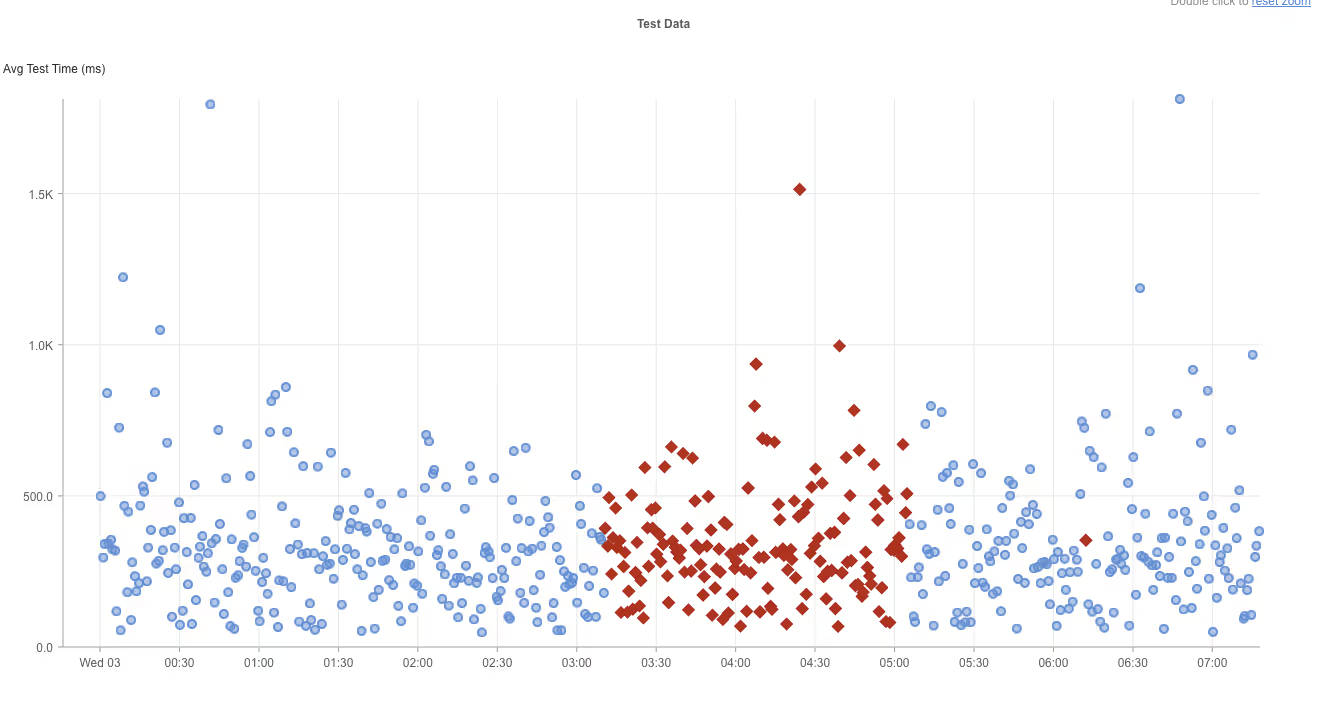

Catchpoint and browser timings showed that requests to TikTok's analytics service stalled during the connection phase (TCP connect), with connect times reaching 20 to 30 seconds in some cases. DNS resolution, SSL negotiation, server processing, and page rendering times were normal, which indicates that the delay occurred before a network connection was successfully established.

Because the TikTok scripts sat in the critical page load path, browsers waited on these third-party connections before finishing the page load. As a result, pages appeared slow or unresponsive to users even though the website's own application, backend services, and CDN were operating normally and availability remained high.

Catchpoint data clearly showed the real impact on users:

• 37% fewer page views

• 24% higher bounce rate

• Degraded Core Web Vitals, attributable solely to third-party behavior

Takeaways

• Third-party scripts can slow a website down even when everything else is working perfectly.

• Synthetic monitoring and RUM help distinguish third-party problems from application or infrastructure problems.

• Monitoring the entire Internet Stack shows where time is lost, for example in DNS, connection setup, or response delays.

• Internet resilience depends on limiting critical page-load dependencies on external services and isolating analytics or marketing scripts wherever possible.
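To make the phase breakdown above concrete, here is a minimal Python sketch that picks the slowest phase of a request and lists render-blocking third-party hosts that exceed a connect-time budget. The phase names, the record fields (`host`, `connect_ms`, `render_blocking`), the hostnames, and the 1000 ms budget are illustrative assumptions, not Catchpoint's data model.

```python
from typing import Dict, List


def slowest_phase(timings_ms: Dict[str, float]) -> str:
    """Return the name of the request phase that consumed the most time."""
    return max(timings_ms, key=lambda phase: timings_ms[phase])


def blocking_third_parties(
    resources: List[dict], page_host: str, connect_budget_ms: float = 1000.0
) -> List[str]:
    """List render-blocking third-party hosts whose TCP connect time exceeds the budget."""
    slow = []
    for res in resources:
        host = res["host"]
        # A host is first-party if it is the page host or one of its subdomains.
        is_third_party = host != page_host and not host.endswith("." + page_host)
        if is_third_party and res["render_blocking"] and res["connect_ms"] > connect_budget_ms:
            slow.append(host)
    return sorted(slow)


# Hypothetical timings resembling the incident: only the connect phase is slow.
sample = {"dns": 12.0, "connect": 24500.0, "tls": 80.0, "wait": 140.0, "render": 300.0}
print(slowest_phase(sample))
```

A check like this only flags the symptom. Loading such scripts asynchronously or deferring them is what removes them from the critical path.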

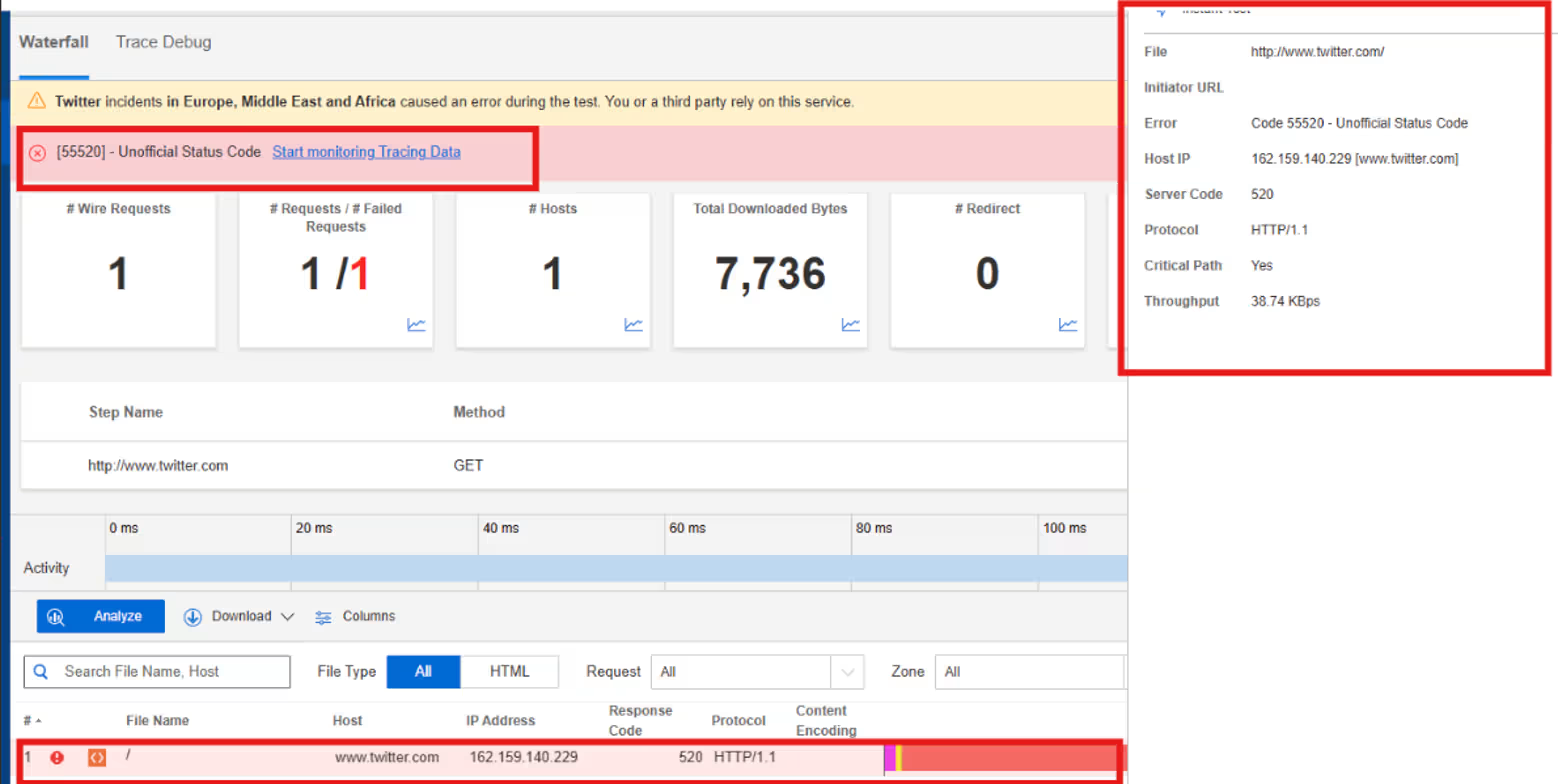

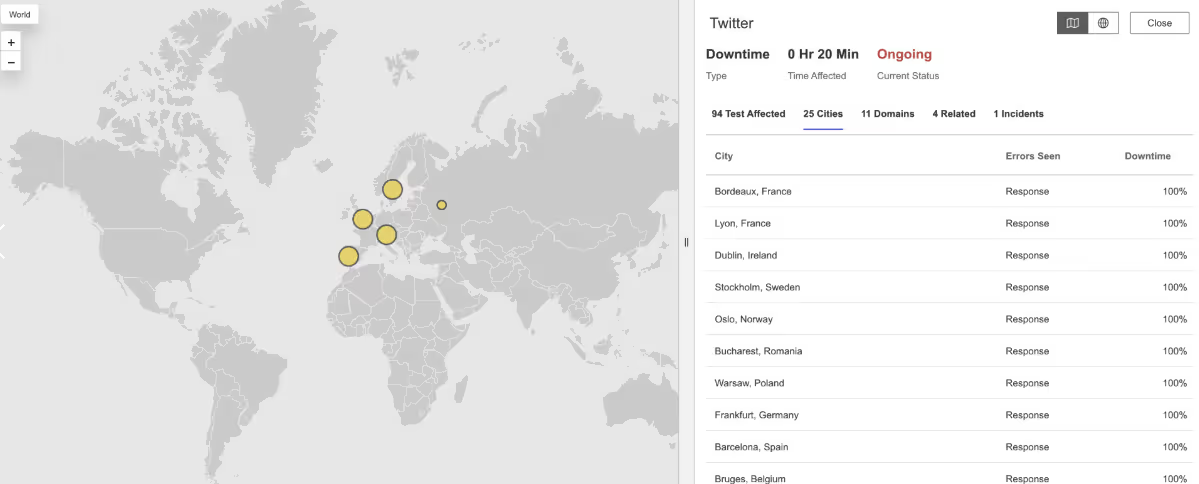

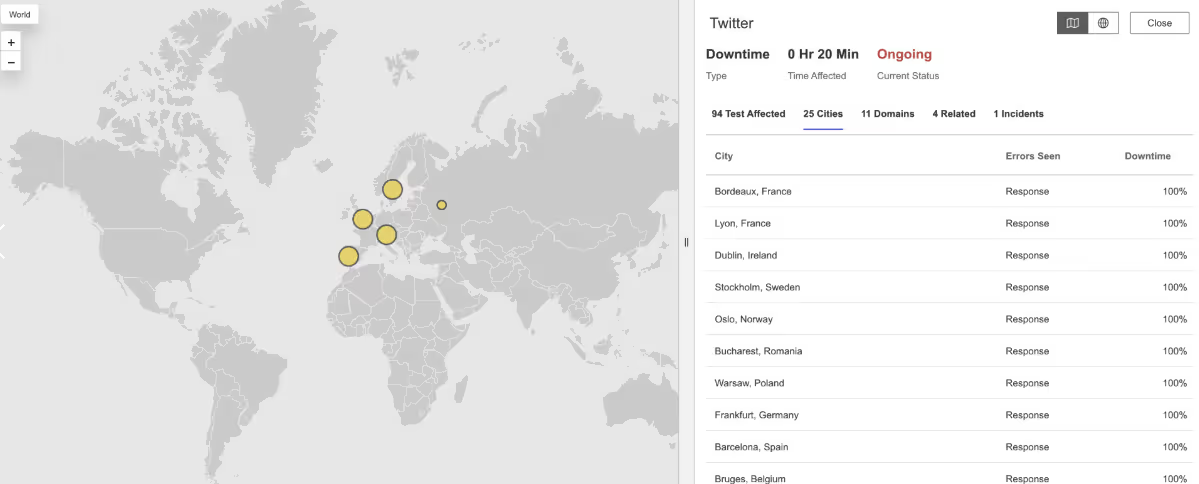

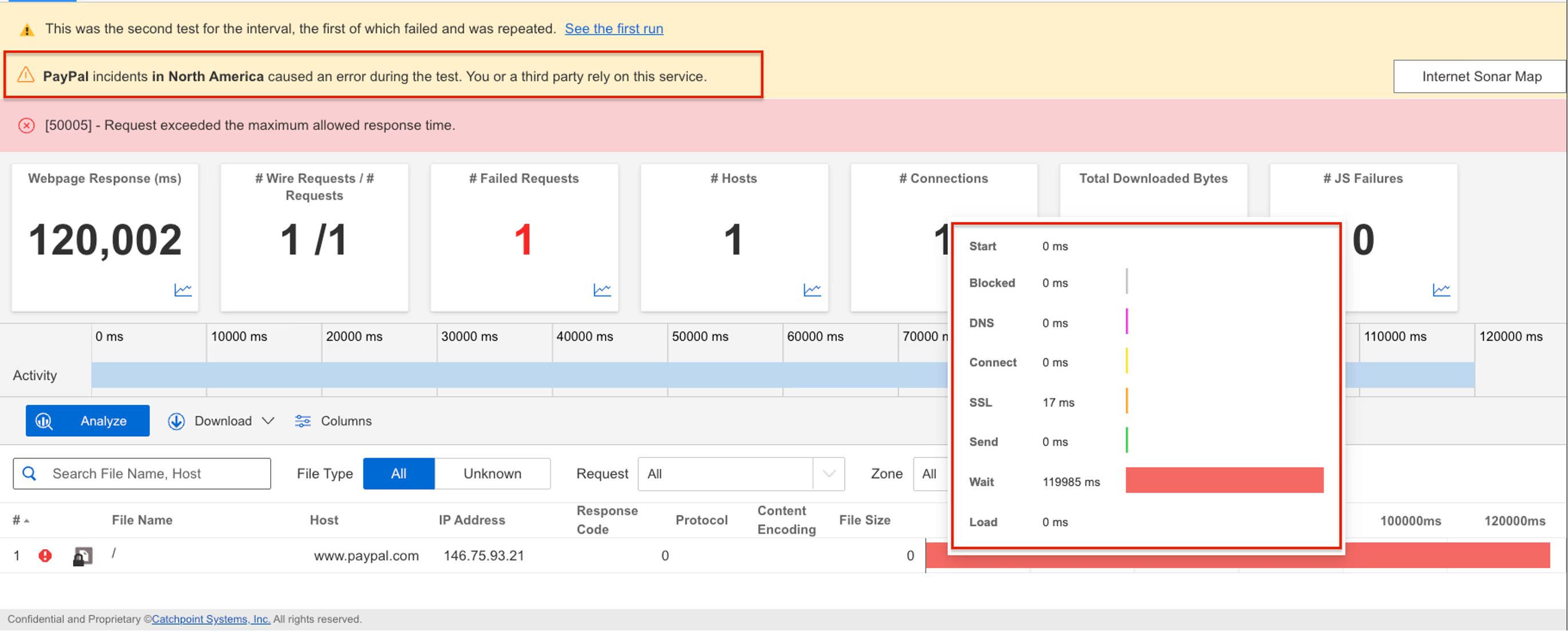

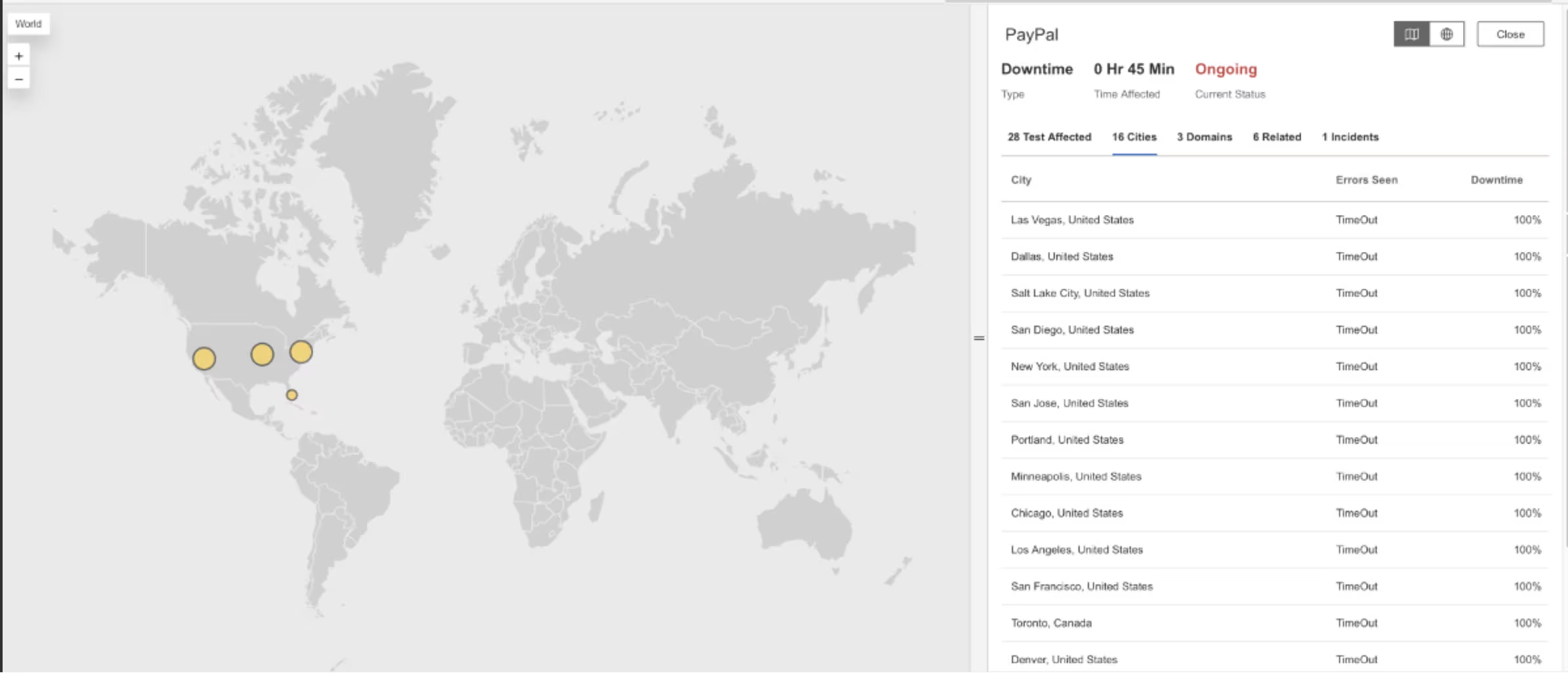

What happened?

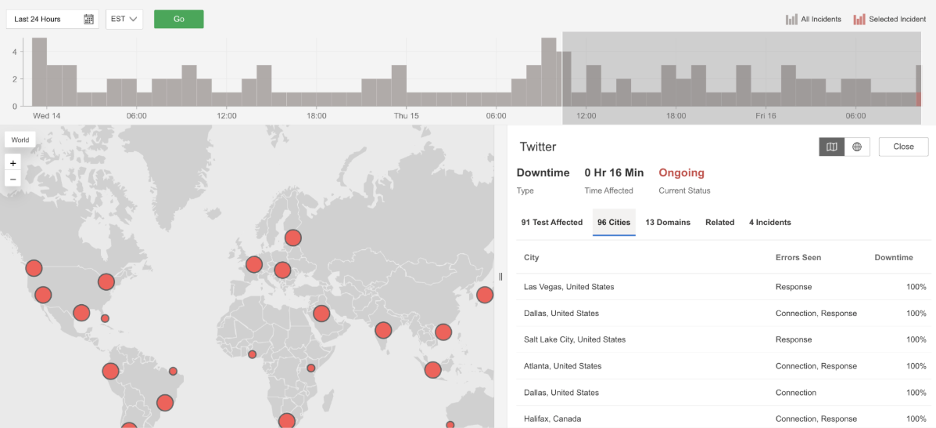

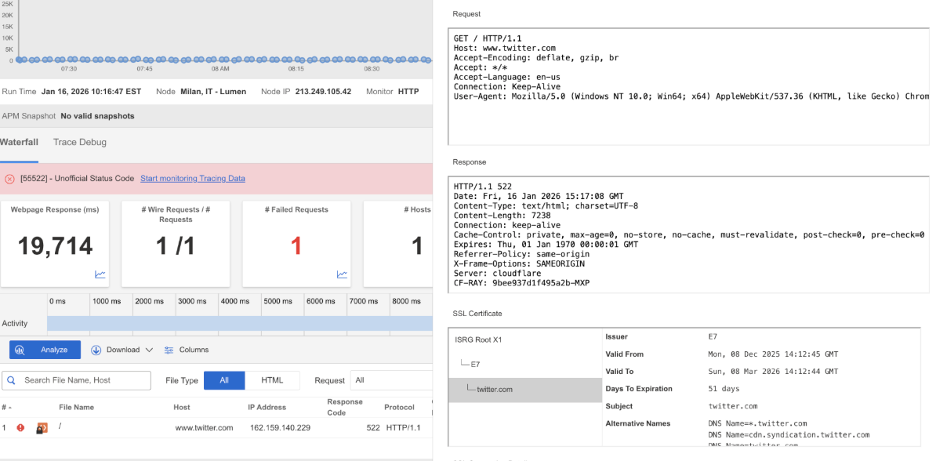

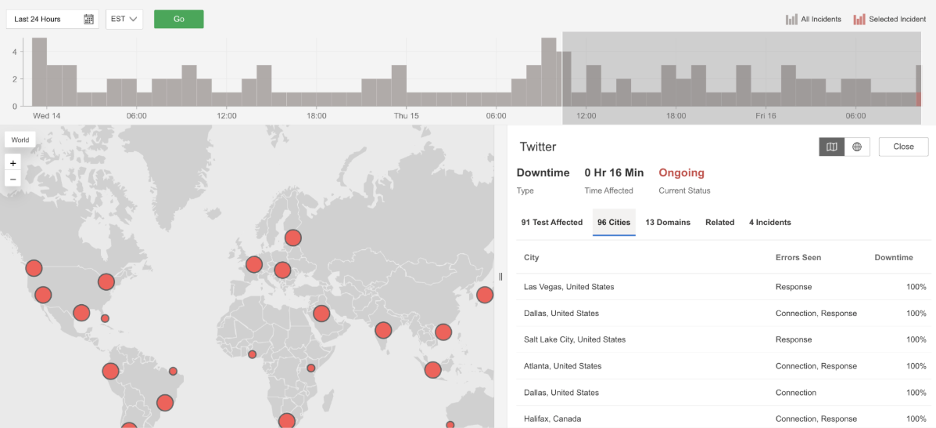

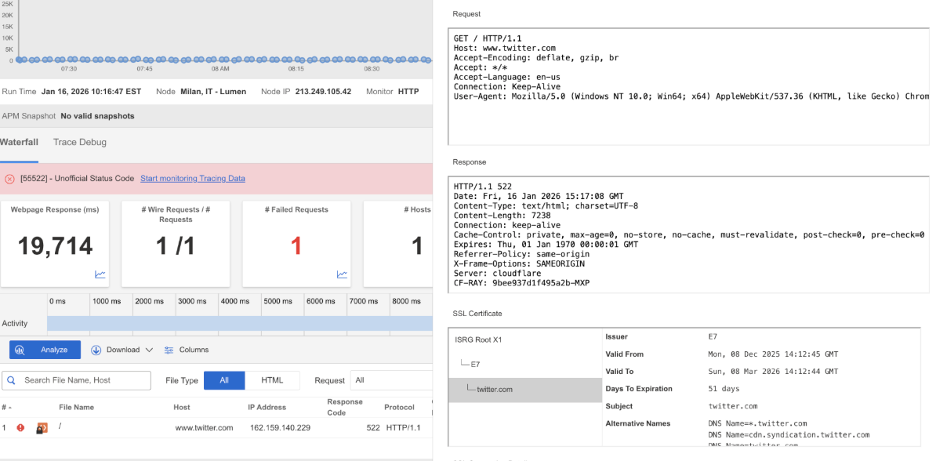

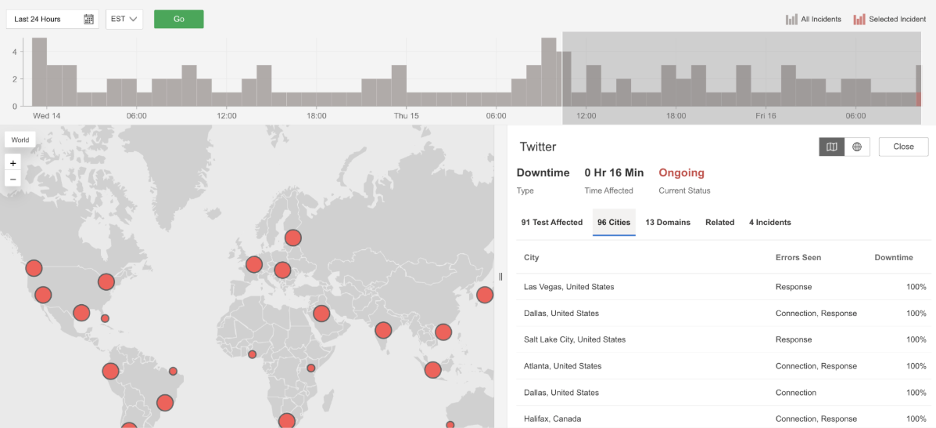

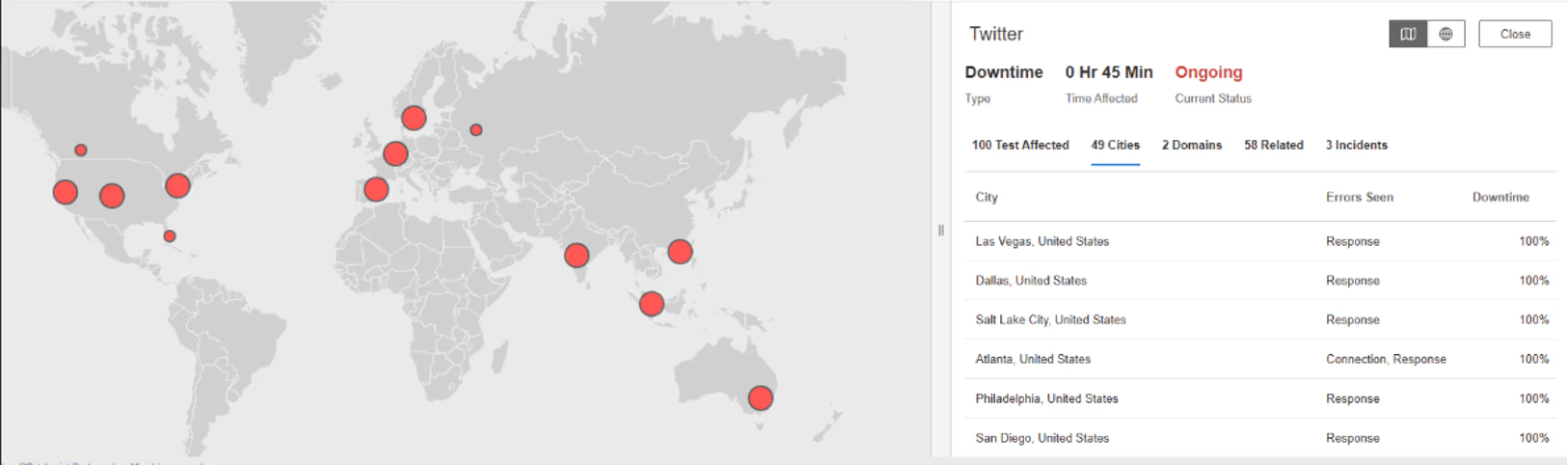

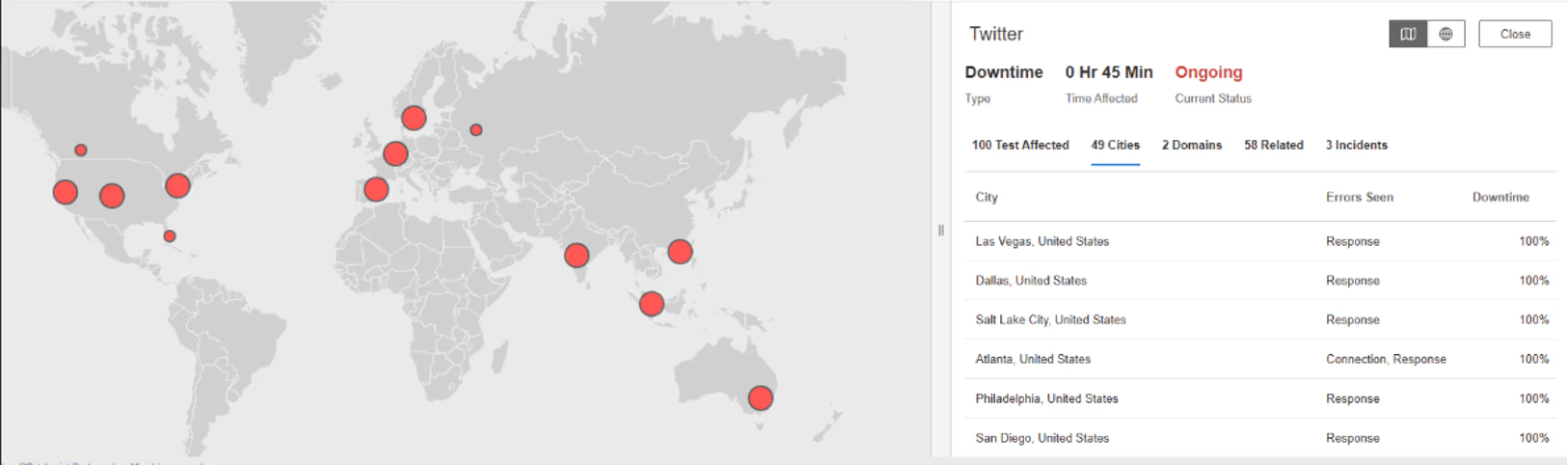

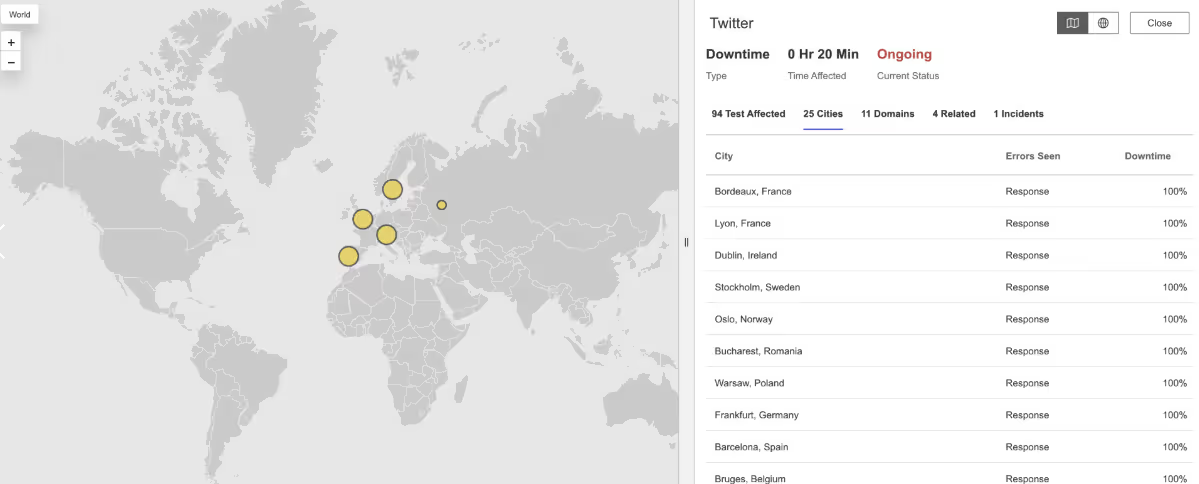

At 10:11 EDT, Twitter experienced a widespread service disruption affecting users in multiple regions. Many users could not load the platform or connect at all. Requests failed with HTTP 522 (Connection Timed Out) errors, which means that the network could not establish a successful connection to Twitter's servers within the expected time. This pointed to a global connectivity or server-side problem rather than one confined to a single country or network.

Takeaways

Because connections to Twitter's servers timed out, users could not access timelines, post content, or refresh the service during the outage. Large social platforms depend on reliable connectivity between users, networks, and backend infrastructure, and a failure anywhere along that path can quickly affect millions of people. Independent, external monitoring across regions helps determine whether an outage is truly global and whether the problem lies in network routing, connectivity, or the application layer itself.
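As a rough illustration of how multi-region probe results can be turned into a "global or regional" verdict, the following Python sketch classifies an outage by the share of failing regions. The region labels and the 80% cutoff for "global" are assumptions chosen for the example.

```python
from typing import Dict


def outage_scope(region_ok: Dict[str, bool], global_share: float = 0.8) -> str:
    """Classify probe results as 'healthy', 'regional', or 'global'.

    region_ok maps a region label to True when its probe succeeded.
    """
    if not region_ok:
        raise ValueError("at least one region result is required")
    failed = sum(1 for ok in region_ok.values() if not ok)
    share = failed / len(region_ok)
    if share == 0:
        return "healthy"
    if share >= global_share:
        return "global"
    return "regional"


# Hypothetical probe results: every vantage point fails to connect.
print(outage_scope({"us-east": False, "eu-west": False, "ap-south": False}))
```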

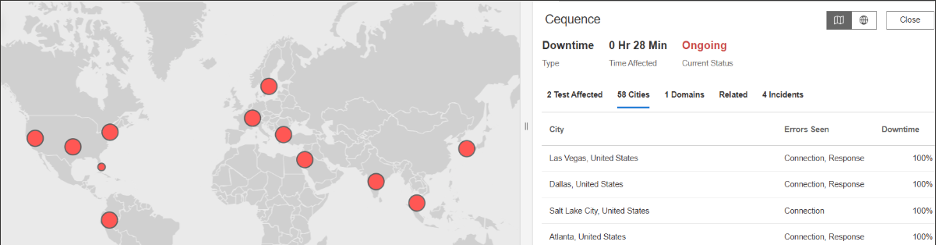

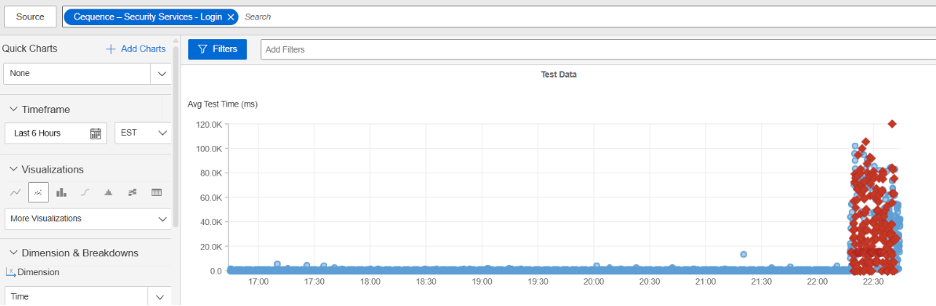

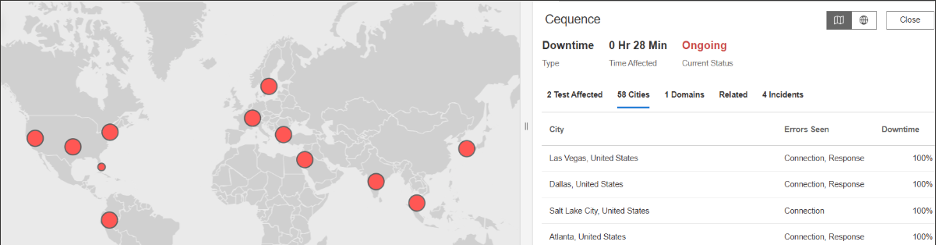

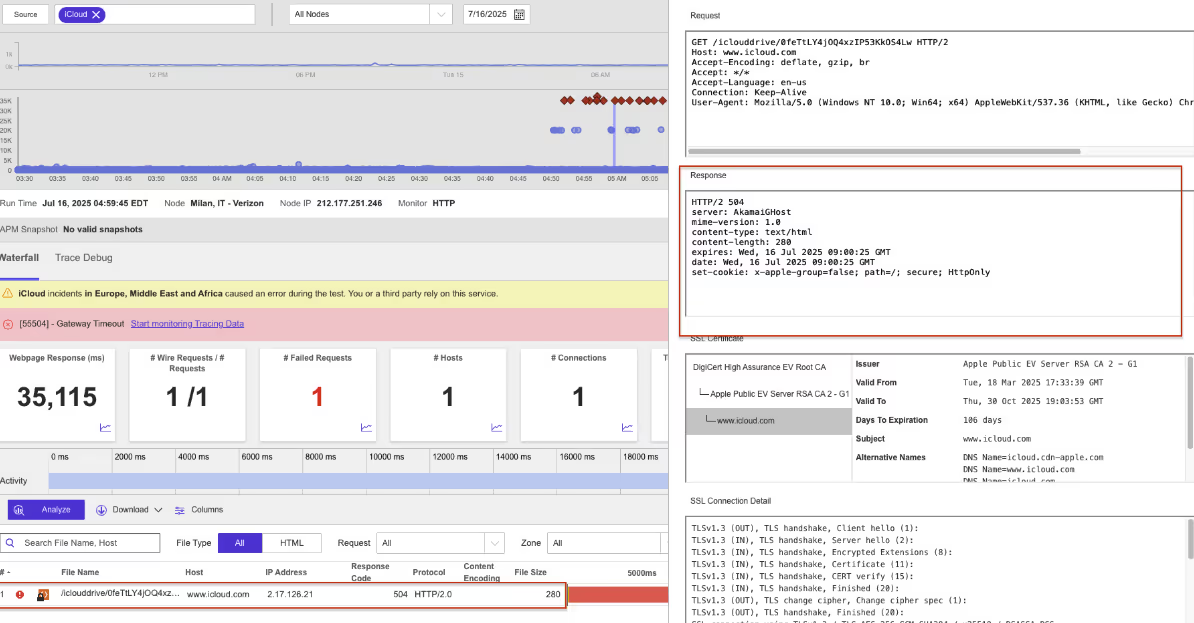

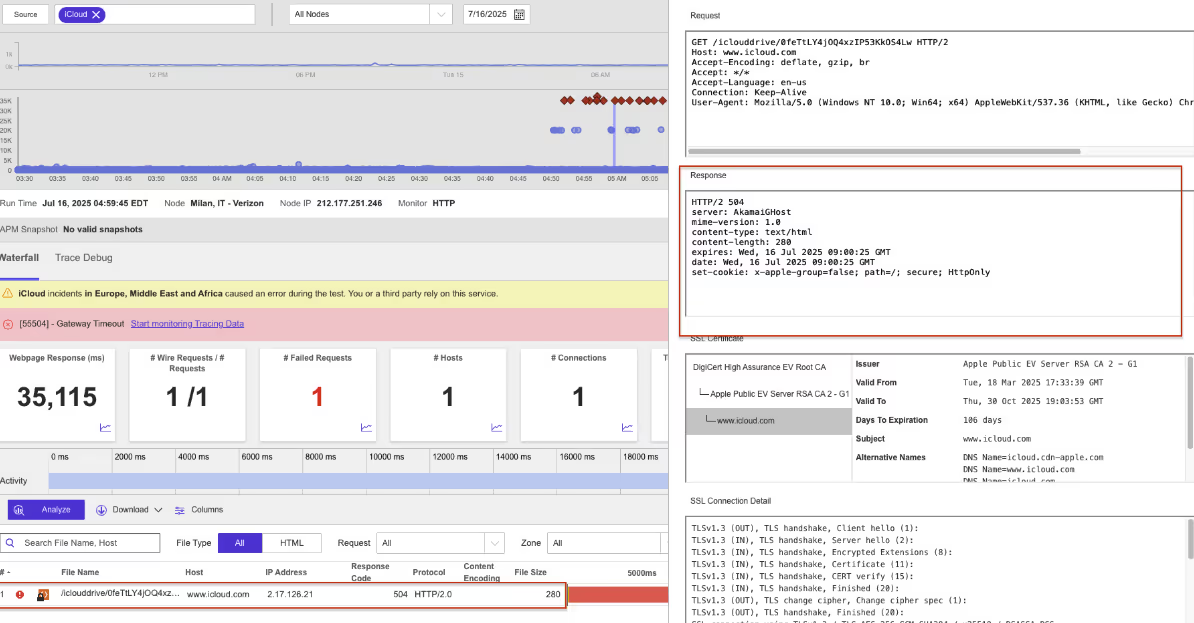

Cequence

What happened?

At around 22:17 EST, Cequence experienced a service disruption affecting users in multiple regions, including the United States, Poland, and France. Requests to Cequence services failed with HTTP 504 (Gateway Timeout) errors. This means the service did not receive a timely response from an upstream system, so requests failed and users saw unavailable or unresponsive services for the duration of the outage.

Takeaways

Because Cequence depends on upstream systems responding quickly, delays or failures in those dependencies can directly affect user access. Timeout failures illustrate how problems in backend infrastructure or network paths can surface as visible service outages. External, cross-region Internet Performance Monitoring (IPM) helps determine whether problems are localized or widespread and provides independent insight into availability when internal systems are impaired.

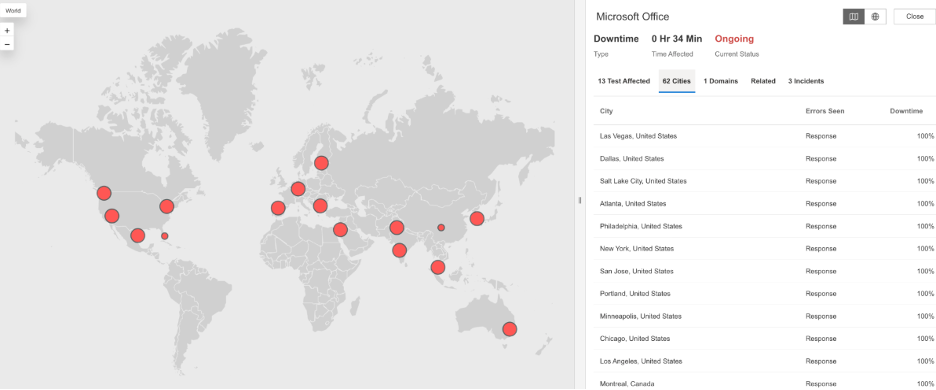

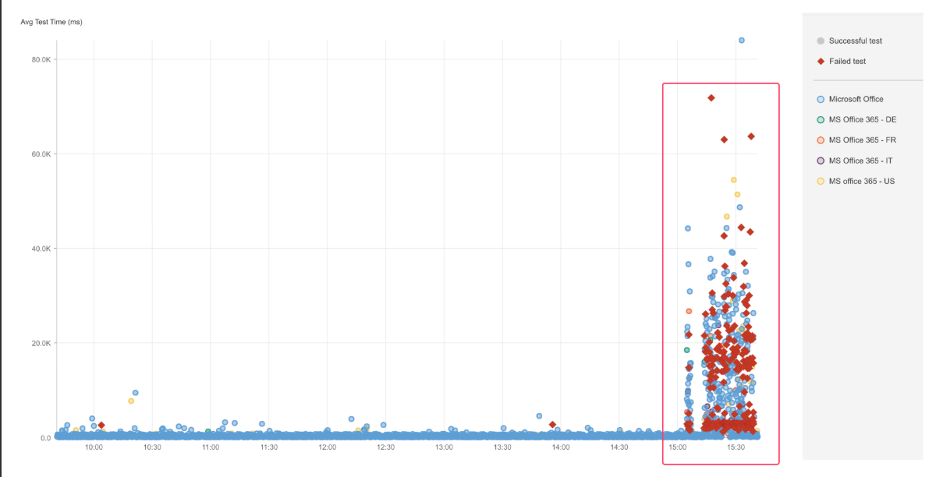

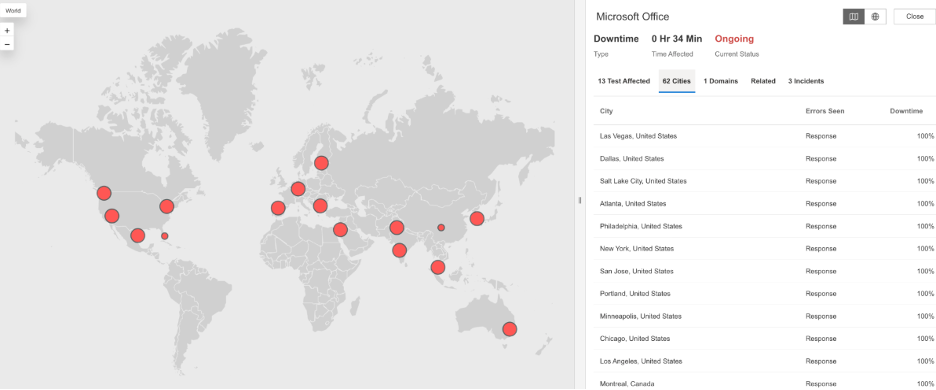

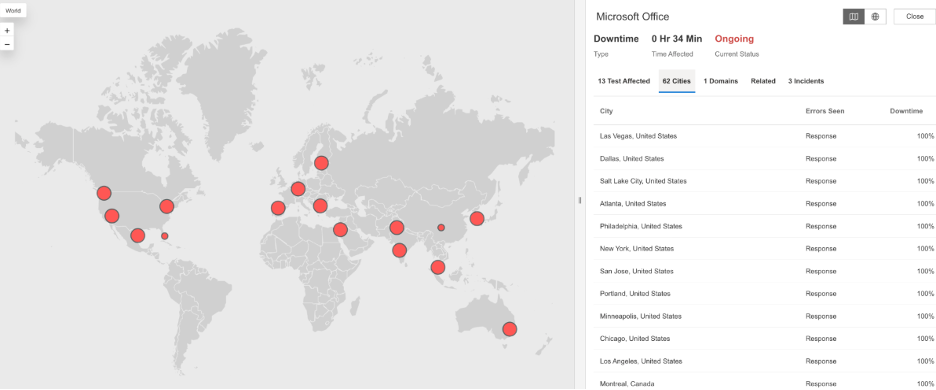

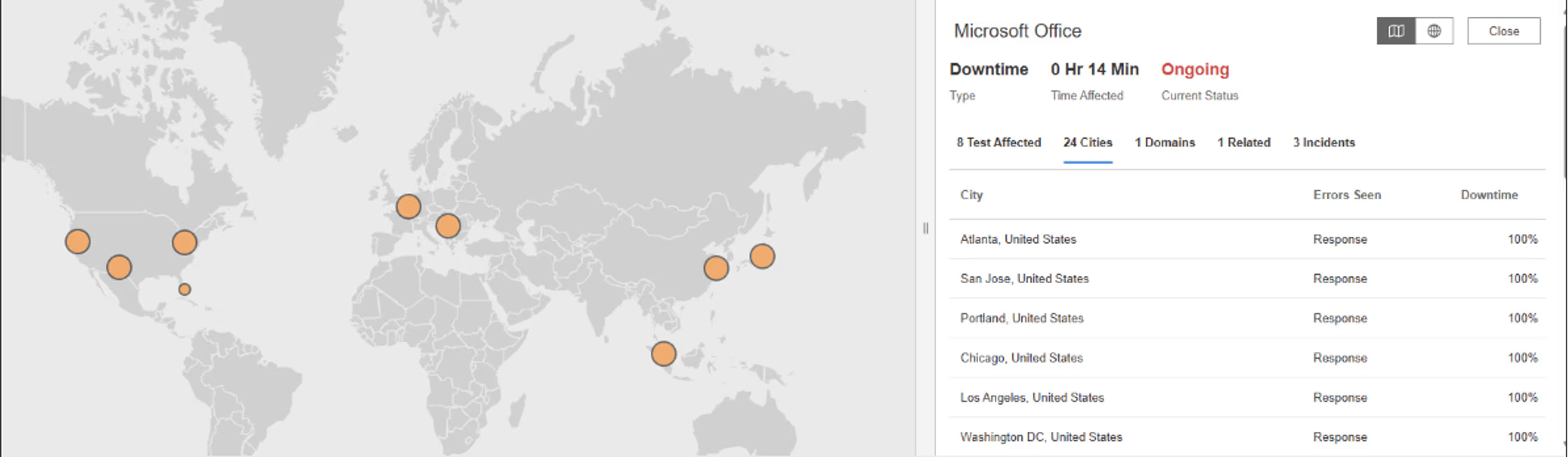

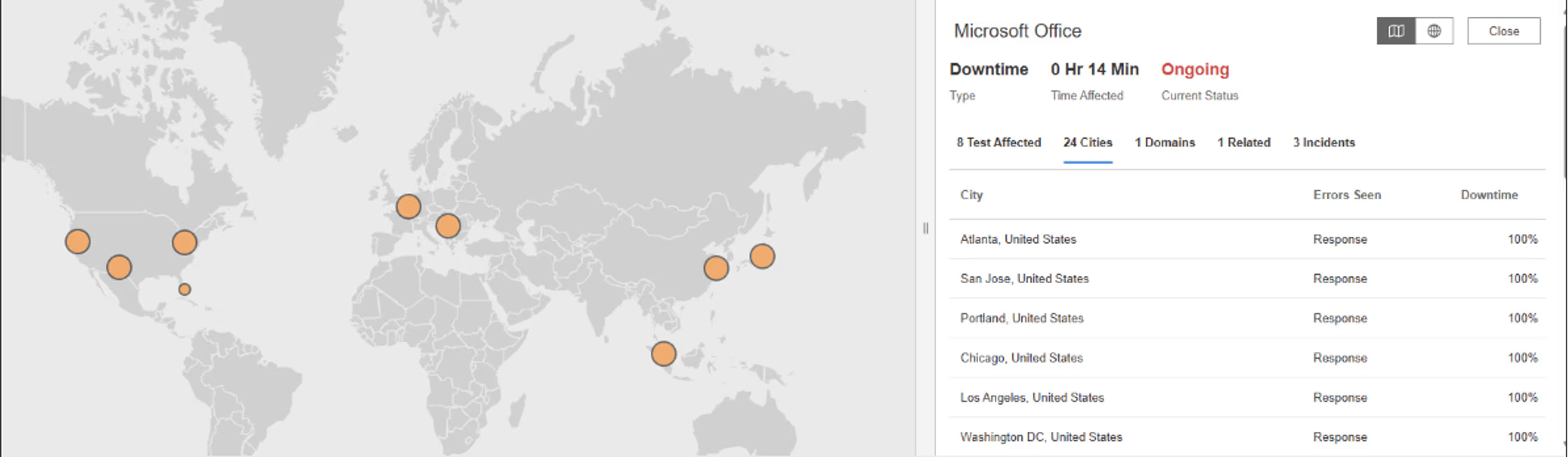

Microsoft Office

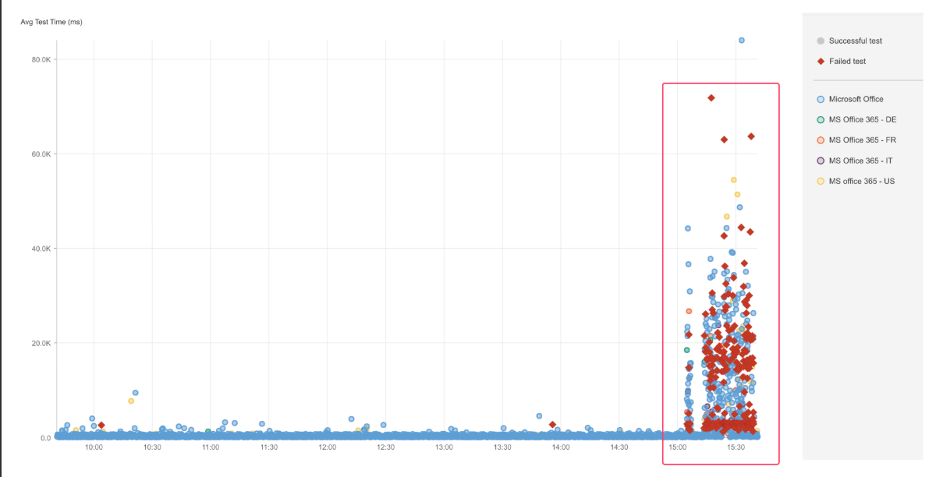

What happened?

At 15:04 EST, Microsoft Office experienced a service disruption affecting users in multiple regions. Users trying to reach Office services received HTTP 503 (Service Unavailable) errors, which means the service was temporarily unable to handle requests. This indicated that the Microsoft Office servers were either overloaded or unavailable during the outage, leaving users unable to access Office applications and services.

Takeaways

Because the Microsoft Office services were unavailable, users could not access the productivity tools they rely on for their daily work. Even short periods of downtime can have an outsized impact when widely used cloud applications are involved. Monitoring availability from multiple locations worldwide helps determine whether problems are regional or widespread, while visibility across the application and delivery layers helps teams understand whether failures stem from server capacity, upstream dependencies, or broader Internet connectivity problems.

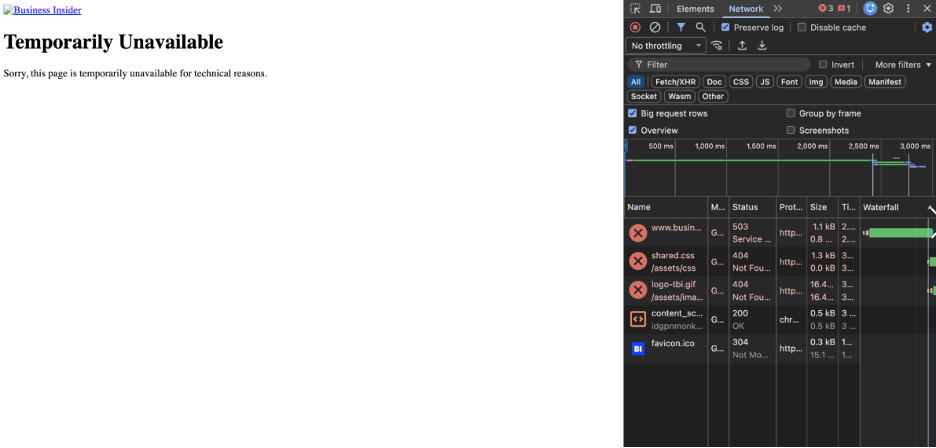

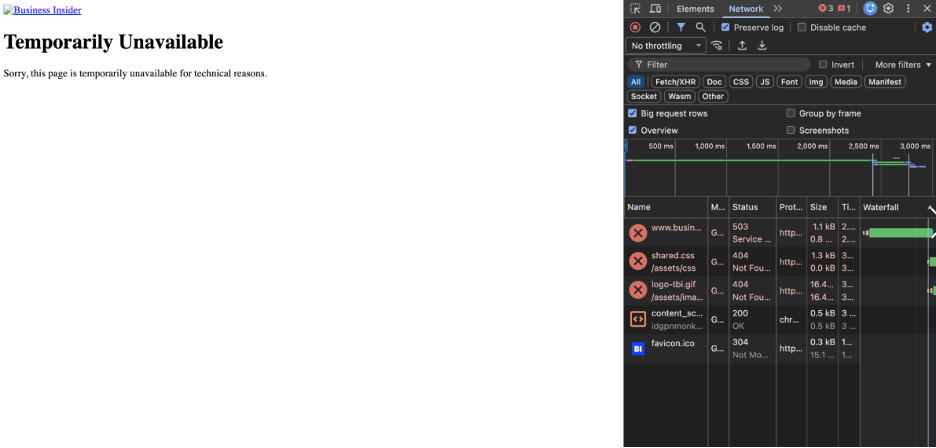

Business Insider

What happened?

At 02:20 EST, Business Insider experienced a service disruption affecting users in North America. Users trying to load articles received HTTP 503 (Service Unavailable) errors, which means the site's servers were temporarily unable to handle requests. As a result, pages failed to load and the service was unavailable for the duration of the outage.

Takeaways

Because Business Insider's servers could not respond to requests, readers could not access news content when they needed it. For media and publishing sites, availability is critical, especially during breaking news cycles. External monitoring from multiple locations helps identify when outages affect real users, while visibility into the application and delivery layers helps teams determine whether failures are caused by server capacity problems, backend dependencies, or broader connectivity issues.

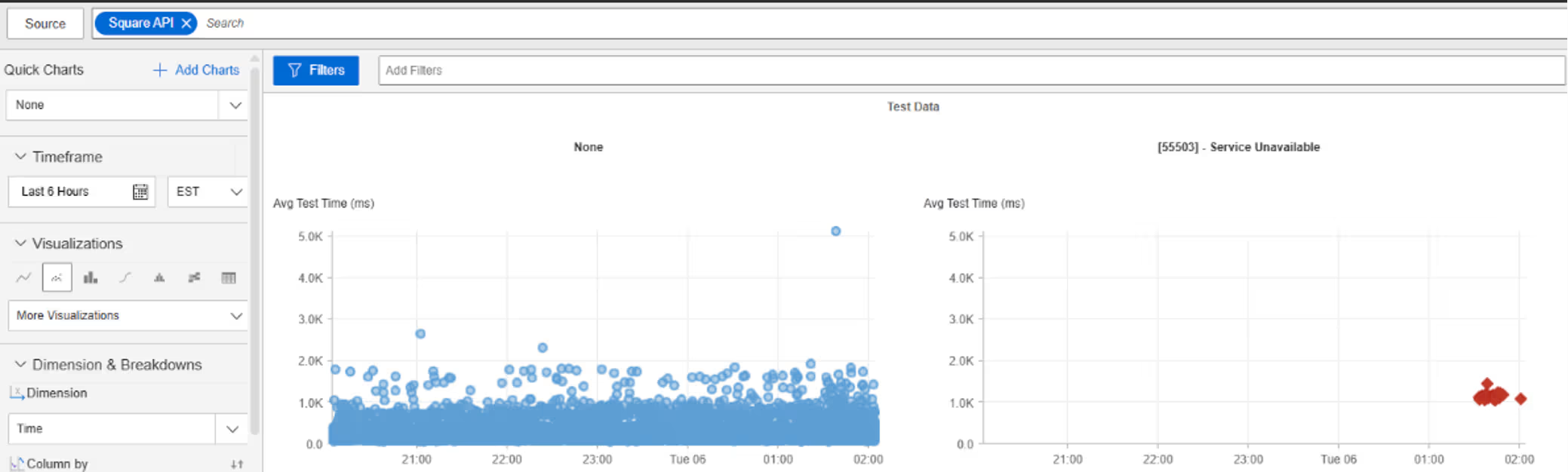

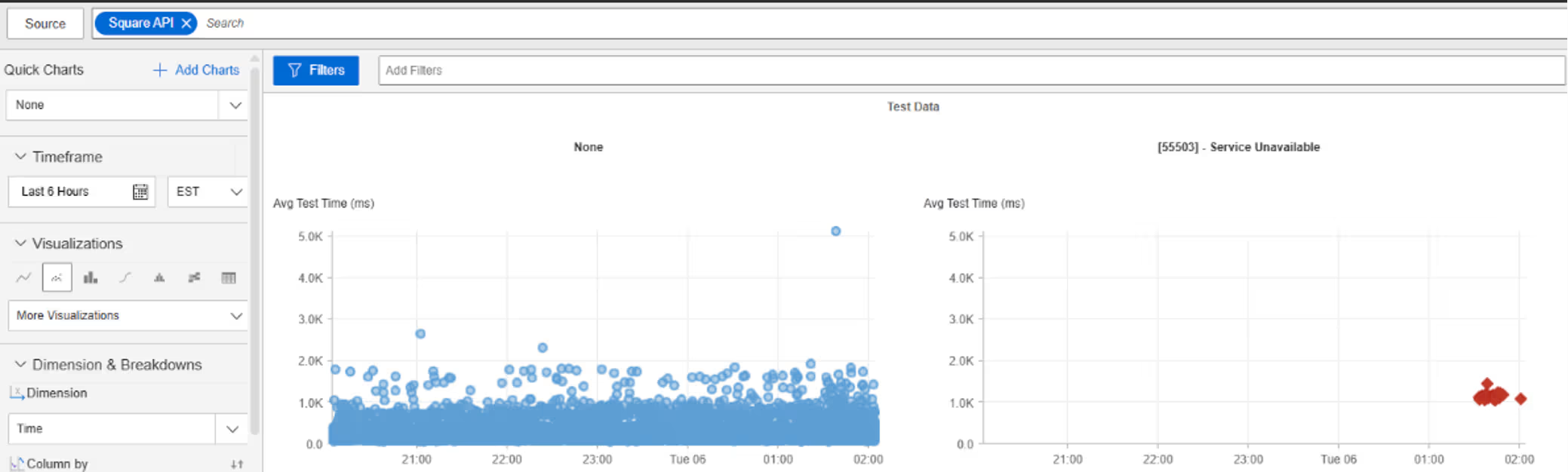

Square

What happened?

At around 01:33 EST, Square experienced a service disruption affecting users in several European countries. Users trying to reach Square services received HTTP 503 (Service Unavailable) errors, which means Square's servers were temporarily unable to handle incoming requests. As a result, services were unavailable or unresponsive for the duration of the outage.

Takeaways

Widespread HTTP 503 errors indicate that Square's application layer was reachable but unable to handle requests, which points to server-side capacity problems, internal failures, or upstream service dependencies rather than Internet reachability problems. For transaction-driven platforms, this underscores how failures within the application and dependency stack can translate immediately into user-visible downtime. From an SRE perspective, Internet Performance Monitoring (IPM) helps verify that the Internet path remains intact even as availability degrades, which narrows the blast radius to the service itself. By correlating regional error rates with latency and availability metrics, teams can determine whether failures are caused by backend overload, rollout problems, or cascading dependency failures, and spend less time chasing irrelevant network or routing signals.

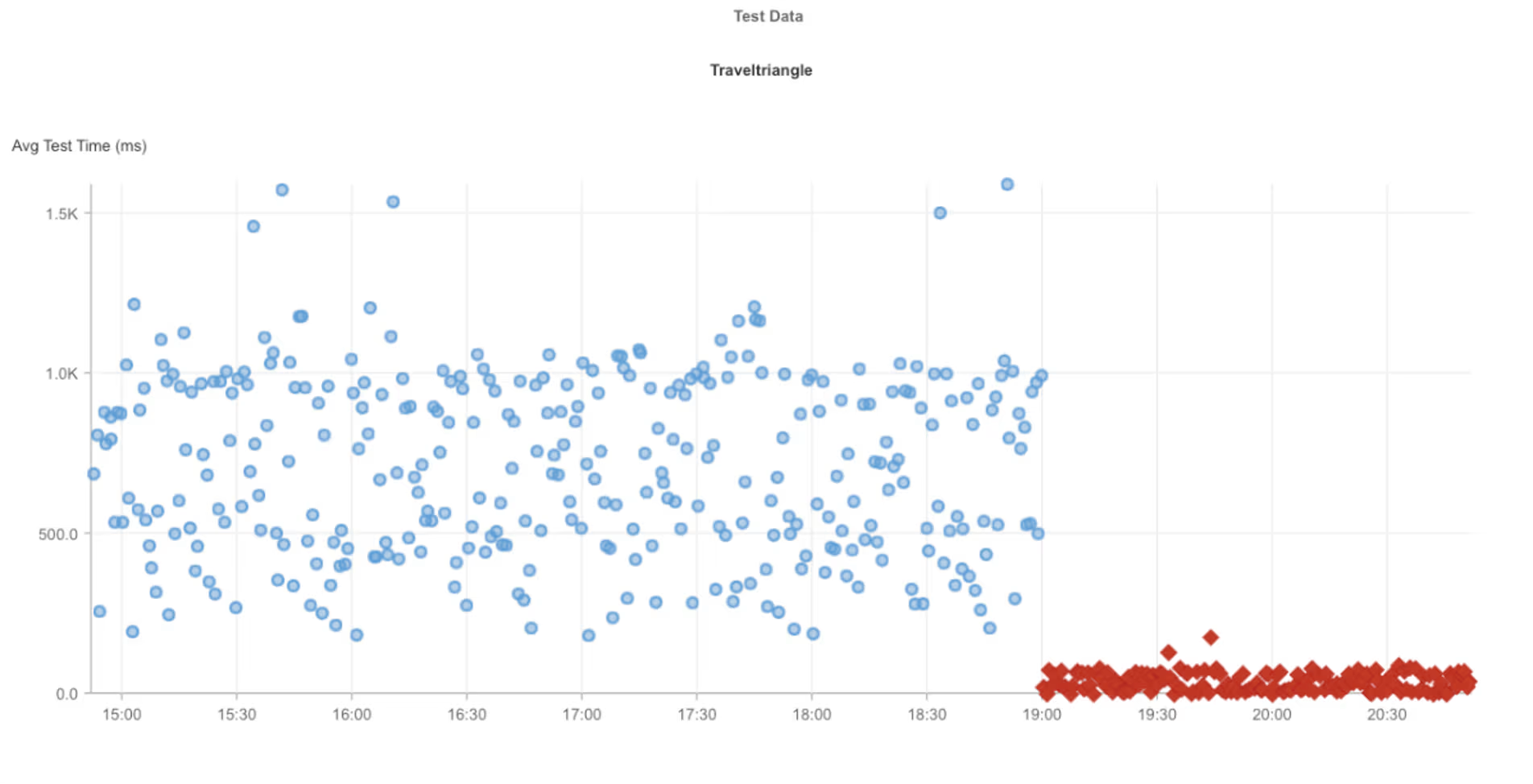

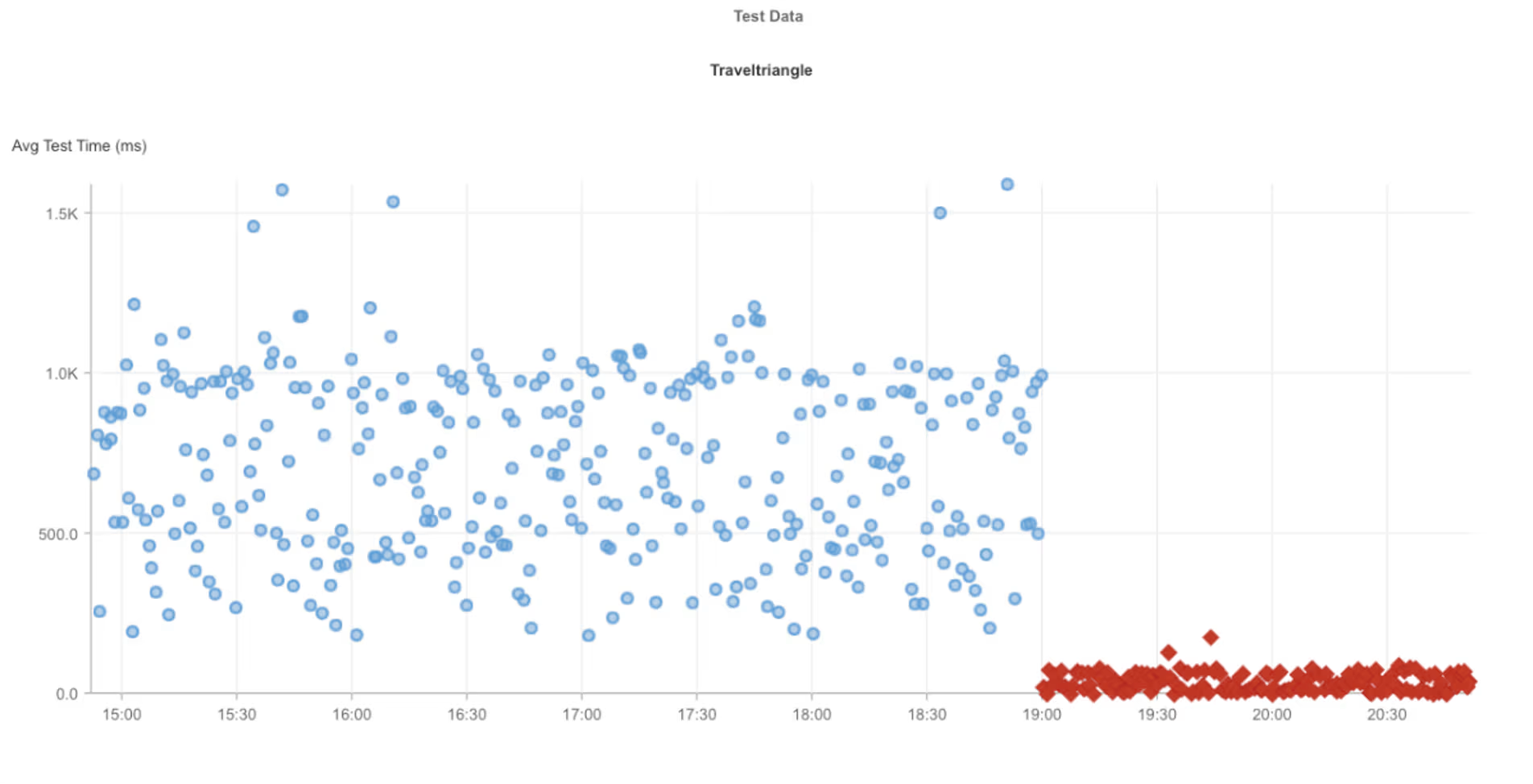

December

Traveltriangle

What happened?

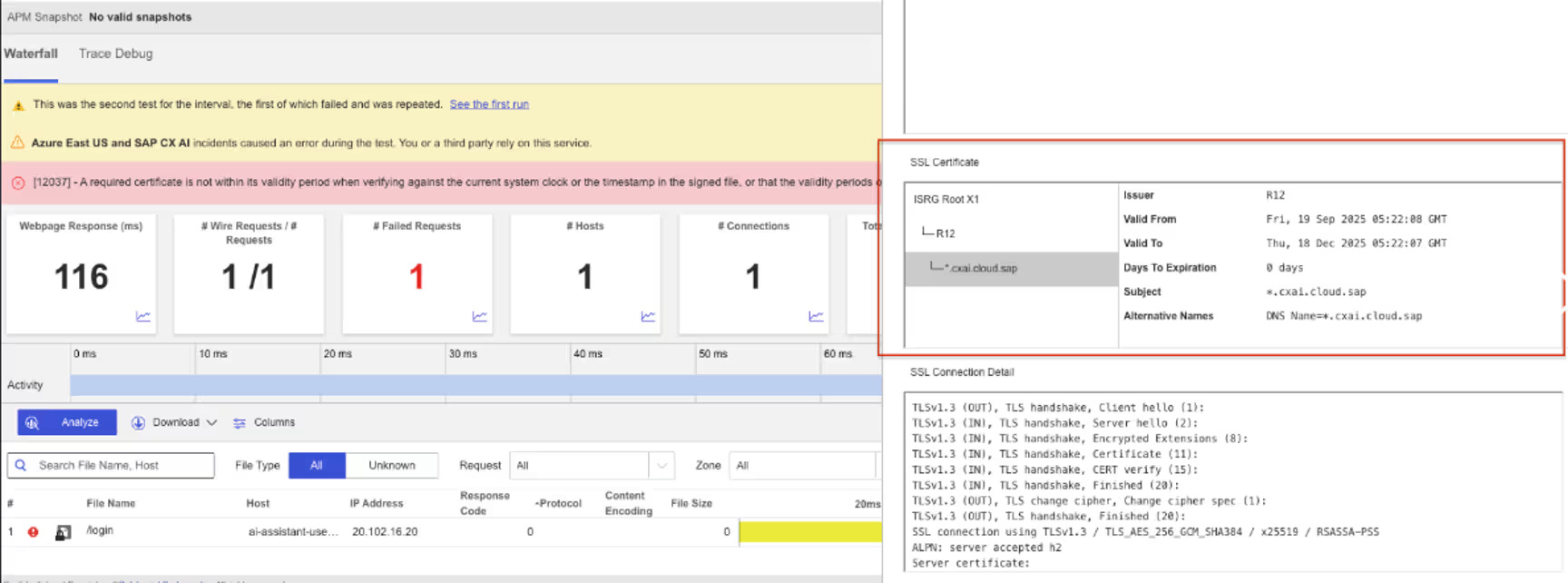

At around 19:00 EST, Traveltriangle experienced a service disruption affecting users in North America. Requests to the Traveltriangle website failed with SSL certificate validation errors caused by an expired SSL certificate. This means users' browsers could not establish a secure HTTPS connection, which blocked access and left the service unavailable for the duration of the outage.

Takeaways

An expired SSL certificate is a single point of failure that can make a service inaccessible immediately, even when the underlying application and infrastructure are otherwise working perfectly. From an SRE perspective, this underscores the importance of monitoring TLS/SSL certificate validity as part of external availability checks, not just application uptime. Internet Performance Monitoring (IPM) helps confirm that failures occur during connection setup rather than deeper in the request lifecycle, so teams can quickly rule out application failures or network path problems. Proactive certificate lifecycle monitoring and alerting are essential for preventing avoidable, user-visible outages caused by expiring security dependencies.
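A certificate expiry check of the kind described above can be sketched with Python's standard `ssl` module. The 30-day warning window and the function names are assumptions for illustration. `fetch_not_after` needs network access and is shown only to indicate where the `notAfter` string comes from.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Days between `now` and a certificate's notAfter string (e.g. 'Jan  5 09:34:43 2018 GMT')."""
    expiry_ts = ssl.cert_time_to_seconds(not_after)
    return (expiry_ts - now.timestamp()) / 86400.0


def needs_renewal(not_after: str, now: datetime, warn_days: float = 30.0) -> bool:
    """True when the certificate expires within the warning window (assumed: 30 days)."""
    return days_until_expiry(not_after, now) <= warn_days


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Read notAfter from a live server's certificate.

    With the default context, an already expired certificate makes the handshake
    raise ssl.SSLCertVerificationError, which is itself a signal worth alerting on.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


now = datetime(2017, 12, 26, 9, 34, 43, tzinfo=timezone.utc)
print(needs_renewal("Jan  5 09:34:43 2018 GMT", now))
```

Running the check from outside the provider's environment matters because it validates the certificate that users actually receive, not the one stored in a deployment system.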

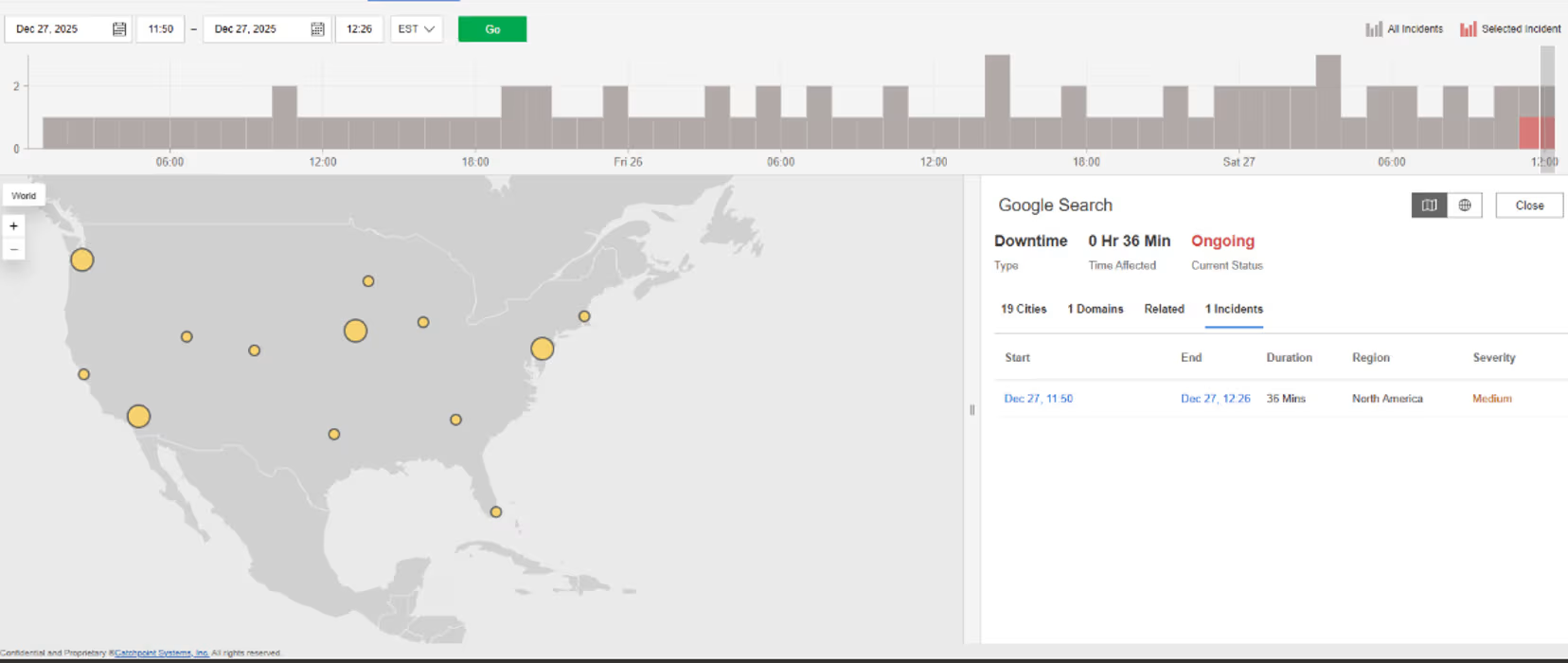

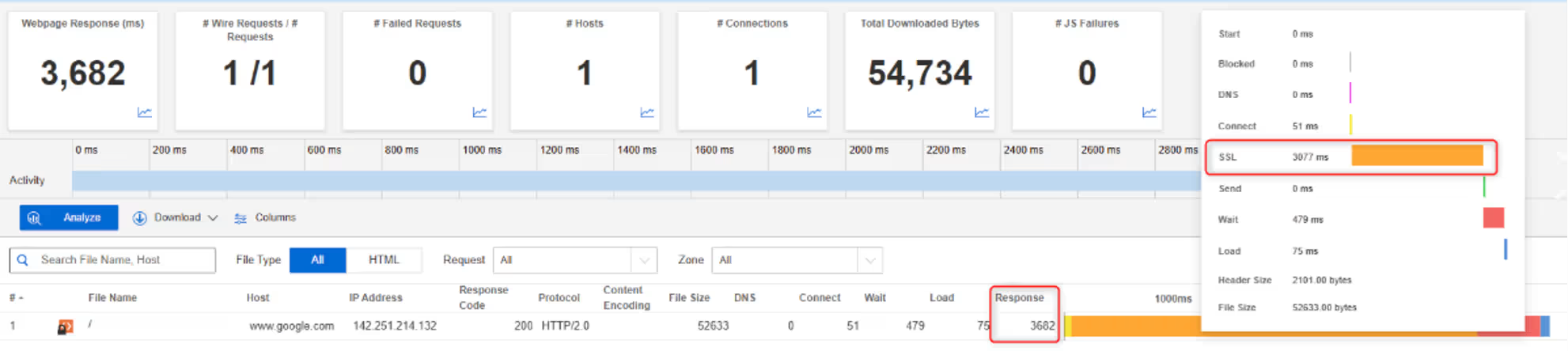

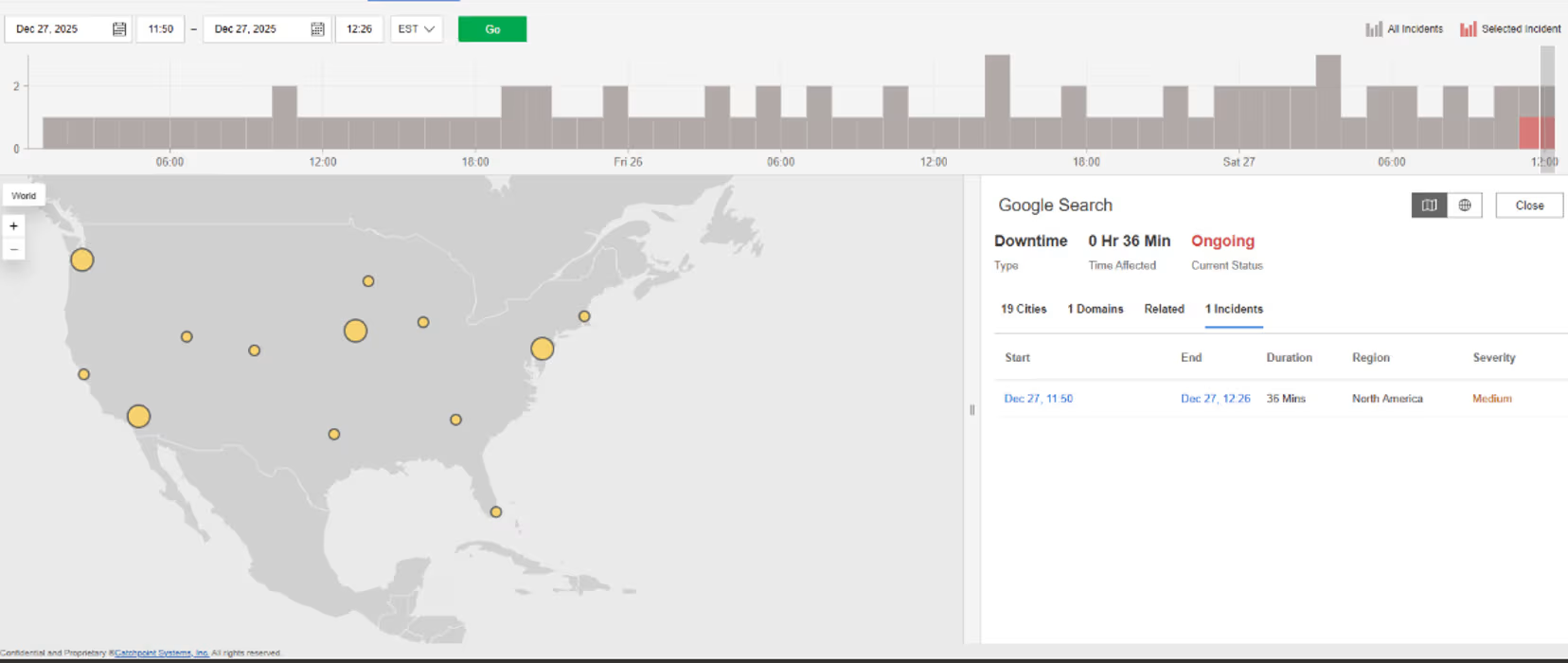

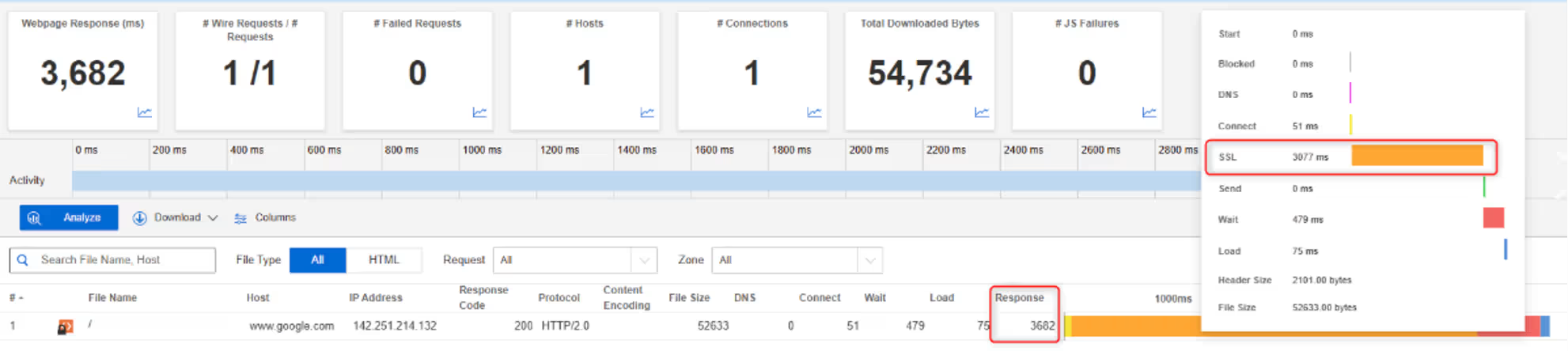

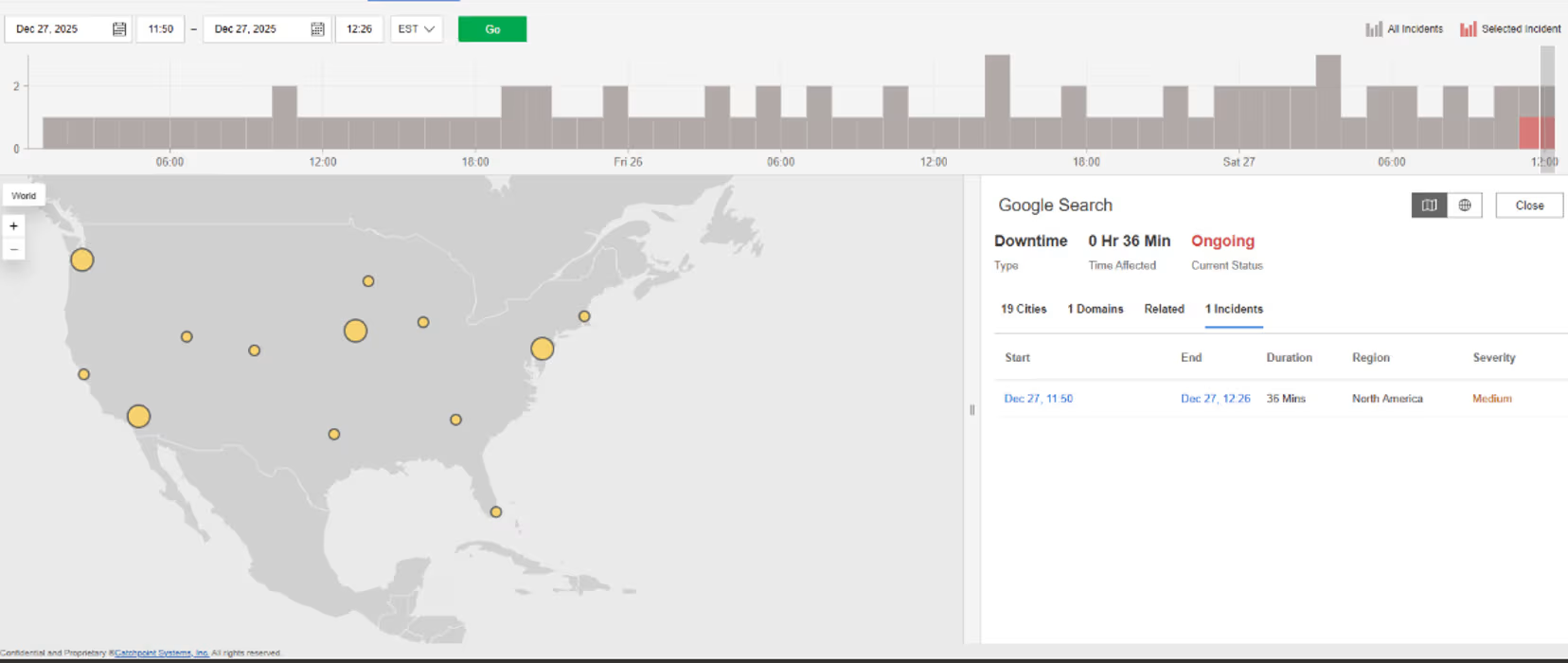

Google Search

What happened?

At around 11:50 EDT, Google Search experienced degraded performance affecting users across North America. Requests to Google Search did not fail outright, but response times were elevated, mainly because of SSL/TLS delays during connection setup. This means that establishing secure connections took significantly longer than normal, which led to slow search responses during the incident.

Takeaways

When SSL/TLS negotiation slows down, users experience degraded performance even though the service is technically still available. This kind of problem sits between network availability and application execution, so it is easy to misclassify without detailed visibility. From an SRE perspective, Internet Performance Monitoring (IPM) helps break down where in the request lifecycle time is lost by separating TLS handshake delays from server processing time. By monitoring SSL performance alongside overall availability, teams can detect security-layer bottlenecks early and avoid prolonged "brownout" states in which services are up but feel slow or unreliable to users.
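One possible way to encode a "brownout" as described above is a rule that fires when requests still succeed but the 95th percentile of TLS handshake time far exceeds a baseline. The thresholds (99% success, 3x baseline) and the sample values are assumptions for this Python sketch.

```python
from statistics import quantiles
from typing import Sequence


def p95(values: Sequence[float]) -> float:
    """95th percentile: the last of the 19 cut points that split the data into 20 groups."""
    return quantiles(values, n=20)[-1]


def is_brownout(
    success_rate: float,
    tls_ms: Sequence[float],
    baseline_tls_p95_ms: float,
    min_success: float = 0.99,
    slow_factor: float = 3.0,
) -> bool:
    """True when the service is up (high success rate) but TLS handshakes are far slower than baseline."""
    return success_rate >= min_success and p95(tls_ms) > slow_factor * baseline_tls_p95_ms


# Hypothetical handshake samples in milliseconds against a 100 ms baseline.
slow_handshakes = [900.0, 950.0, 1000.0, 1100.0, 1200.0] * 4
print(is_brownout(1.0, slow_handshakes, baseline_tls_p95_ms=100.0))
```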

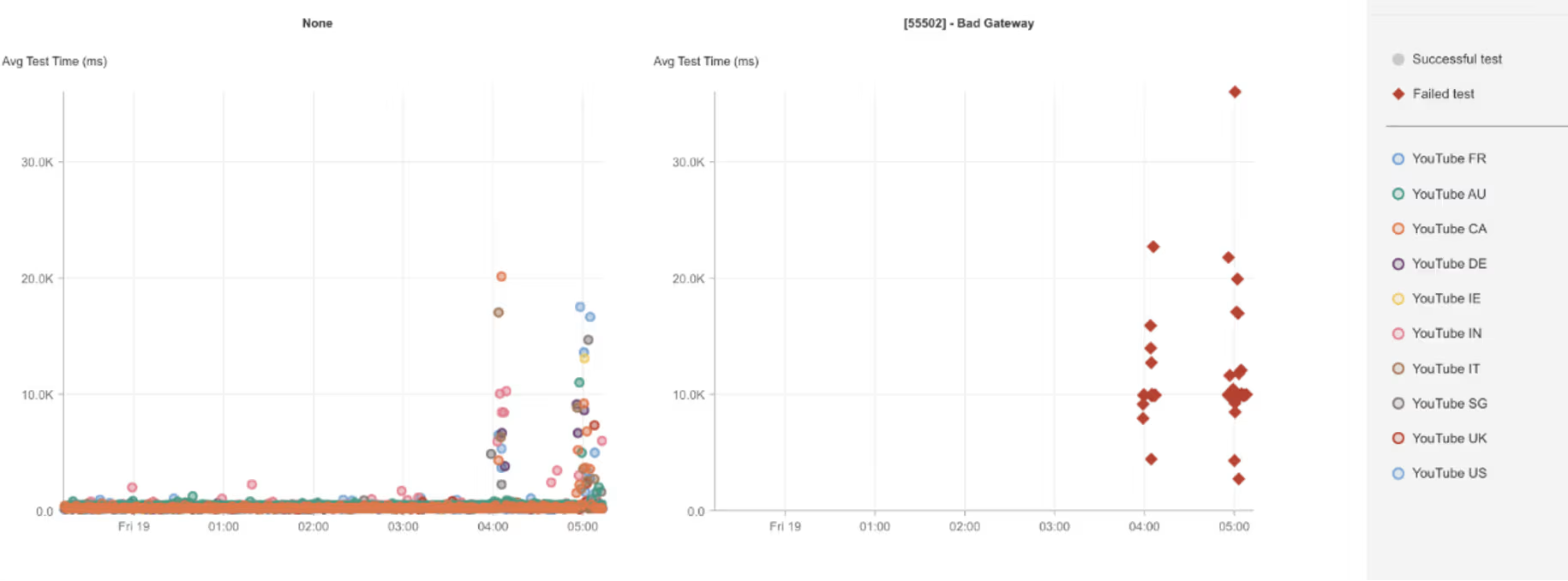

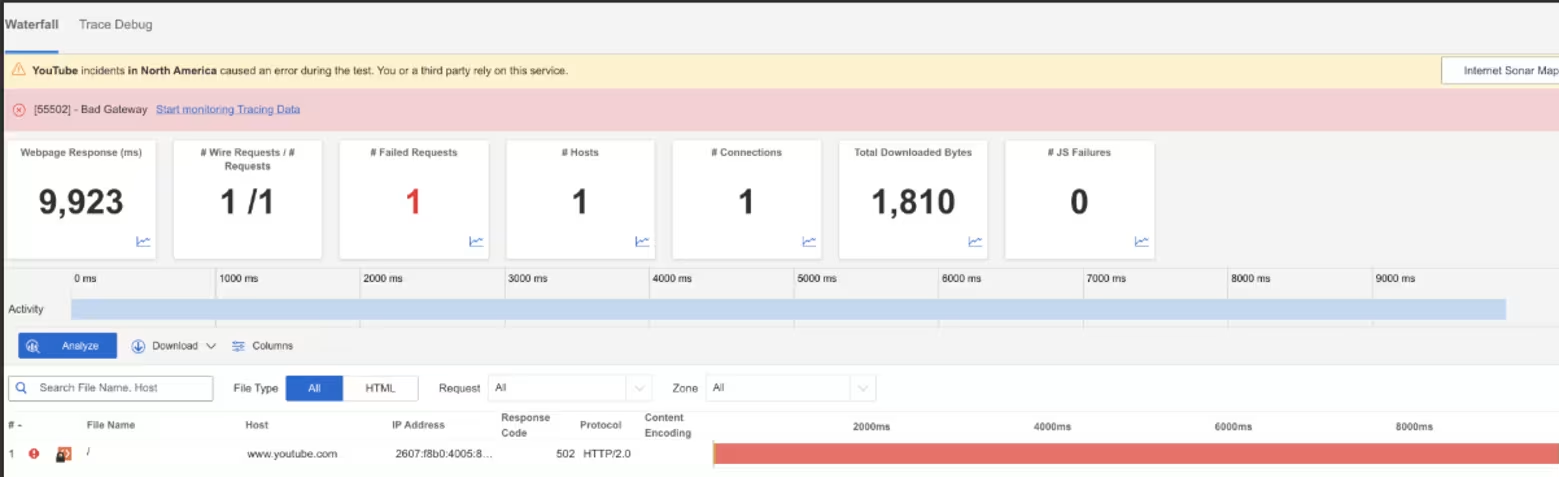

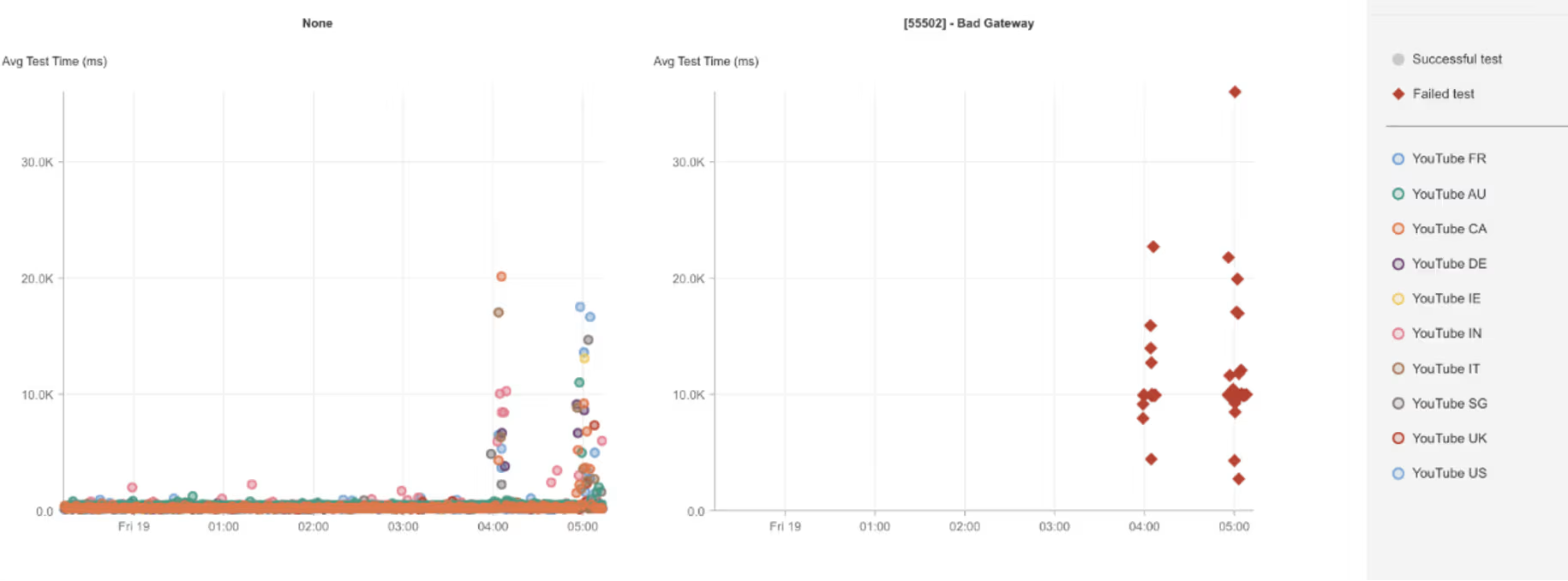

Google Maps and Google Search

What happened?

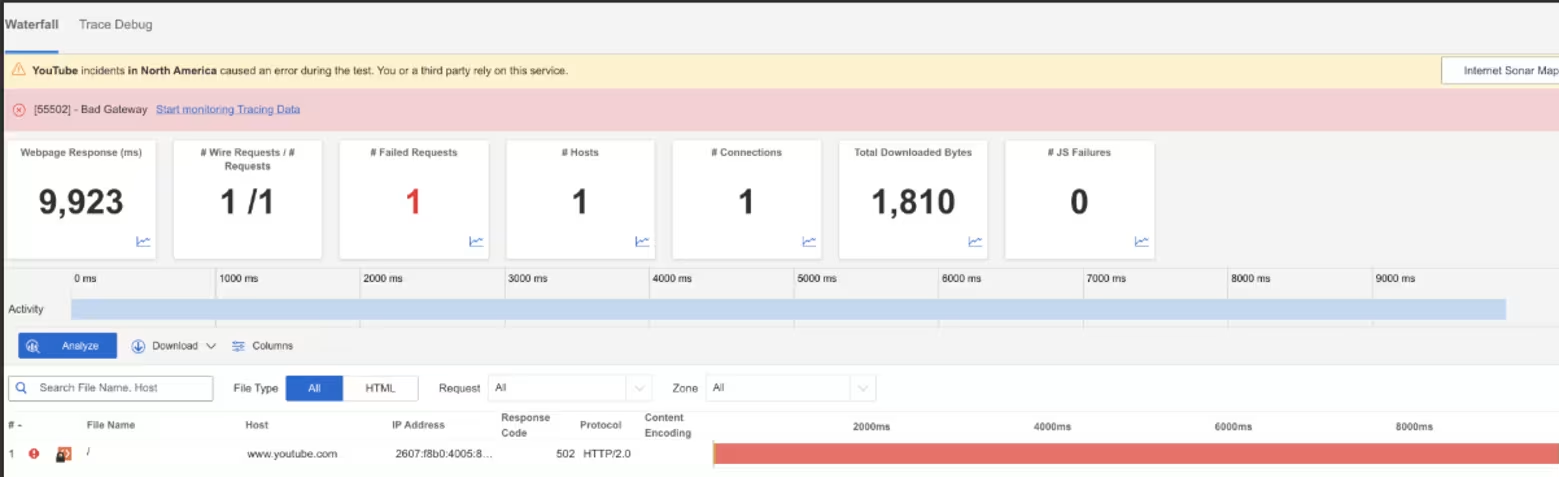

At around 07:11 EST, Google experienced service disruptions affecting several consumer services. In North America, users of Google Maps and Google Search ran into degraded performance and failed requests. Requests failed with HTTP 502 (Bad Gateway) errors, which means a service received an invalid response from an upstream system it depends on. This pointed to instability within Google's internal service chain rather than a complete loss of Internet connectivity.

Takeaways

HTTP 502 errors appearing across multiple Google services point to a fault in a shared backend dependency or control plane rather than isolated application failures. Even at Google, tight coupling between internal services can widen the blast radius when a central dependency becomes unstable. This incident illustrates how multi-tenant backend components can cause correlated failures across products that appear independent at the user level. Tracking error type, timing, and geographic scope together helps separate edge-level problems from deeper dependency failures and underscores the importance of understanding how shared internal services affect externally visible reliability.

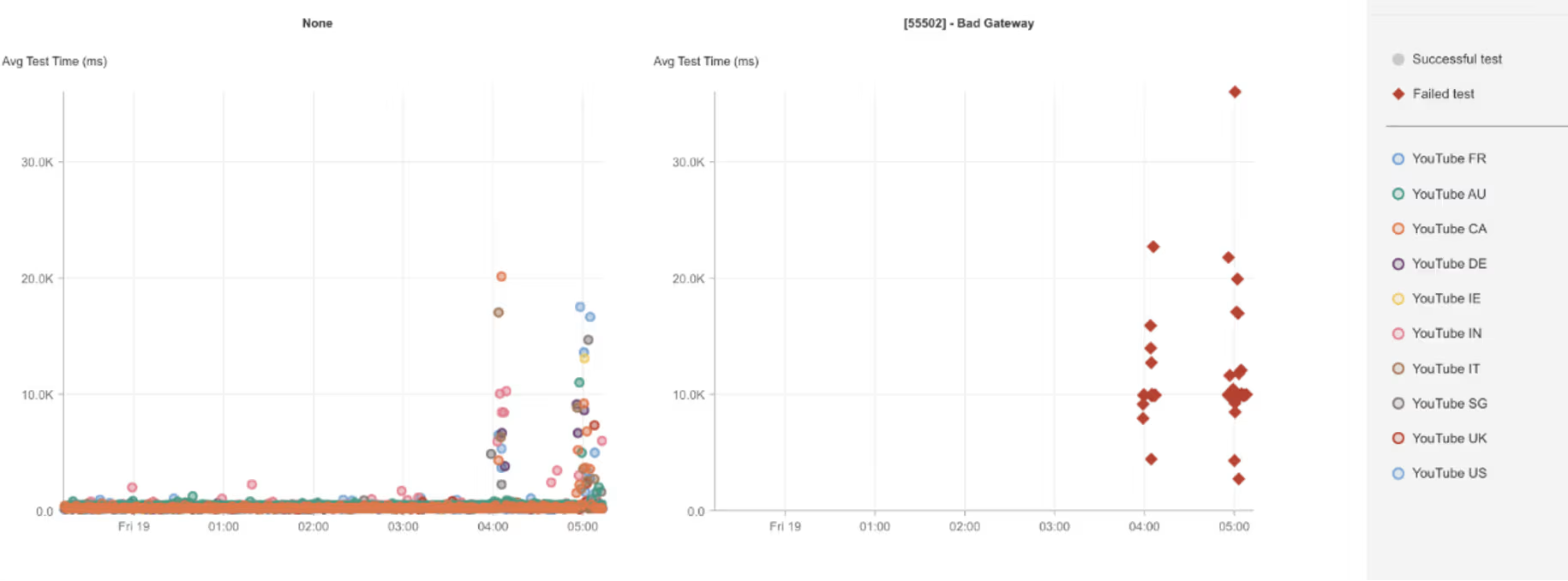

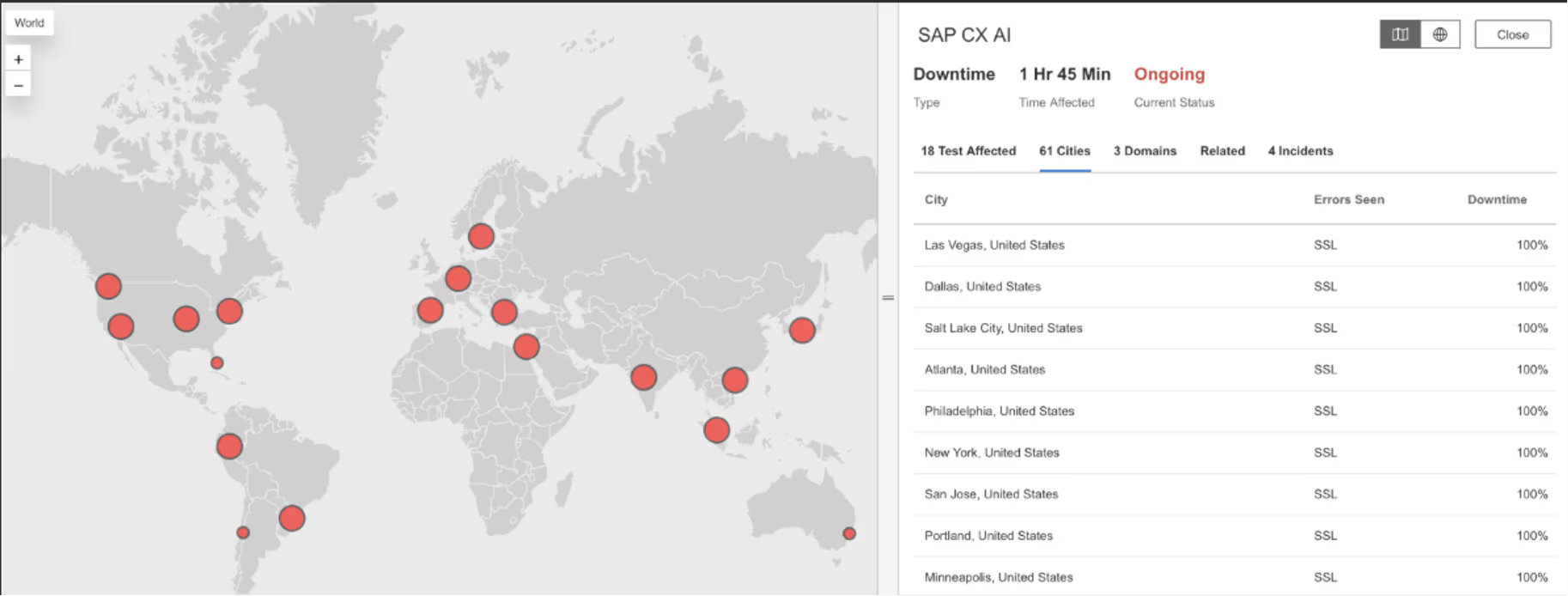

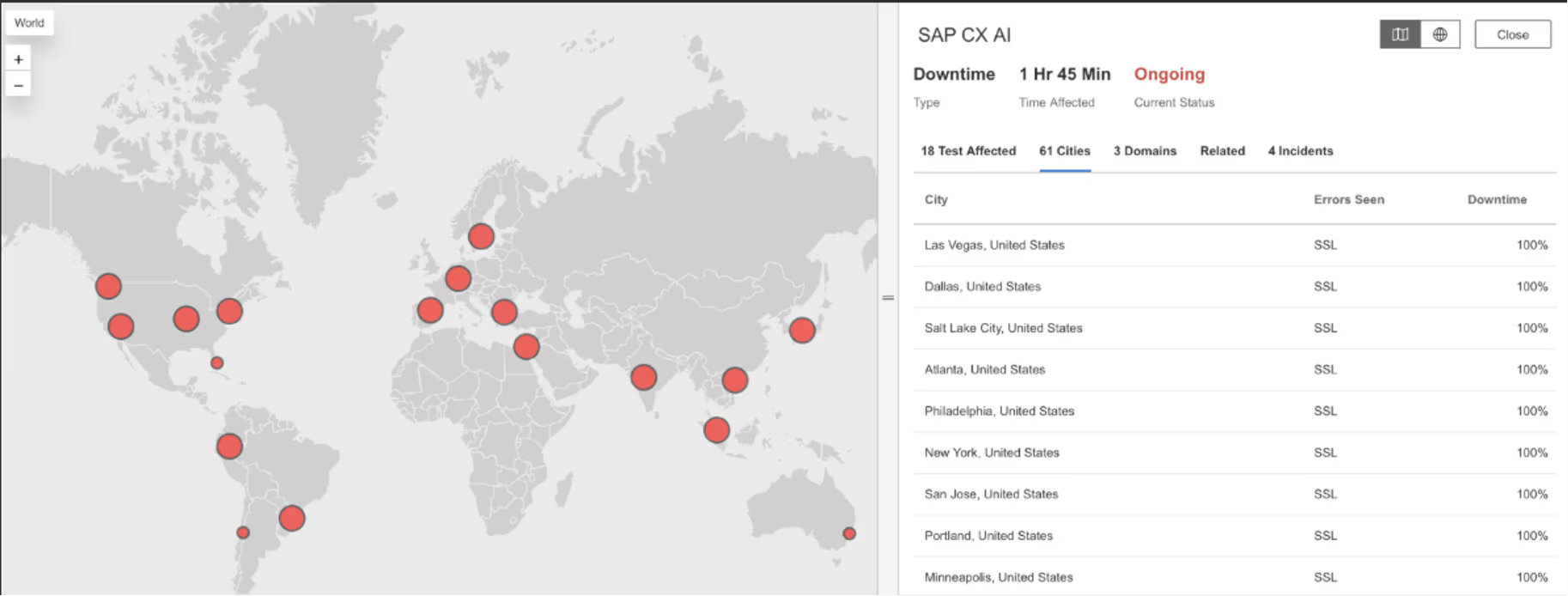

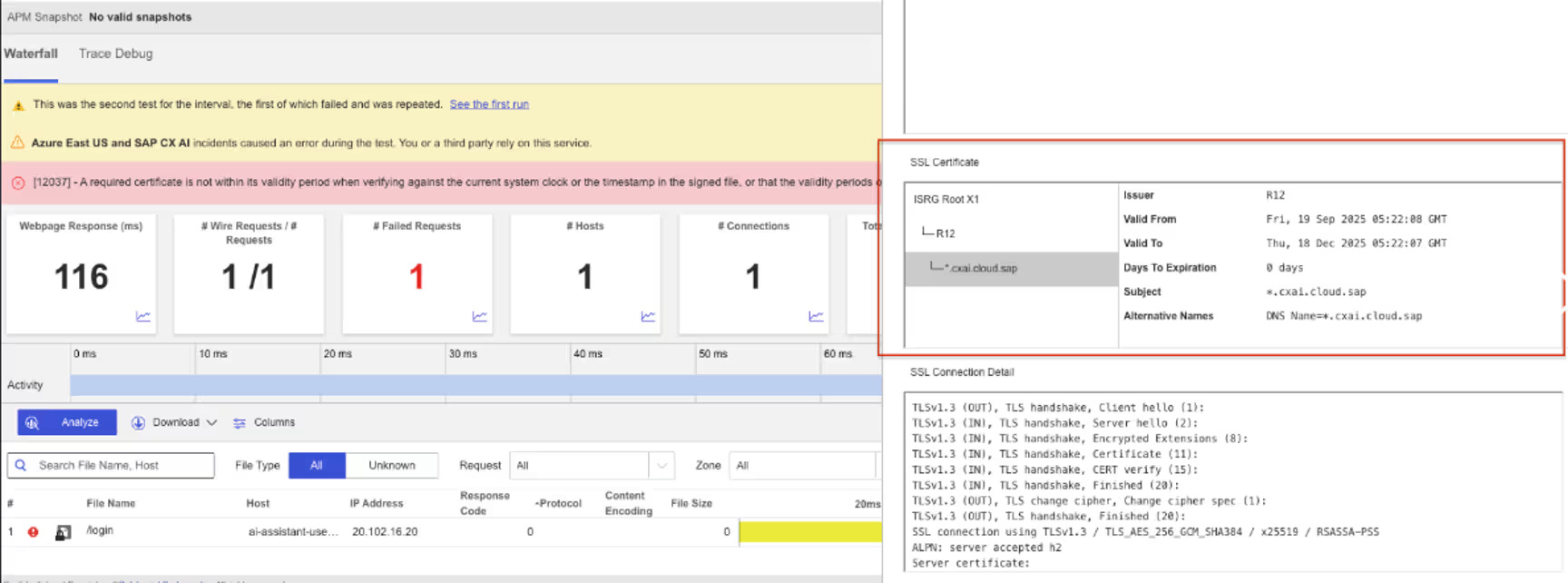

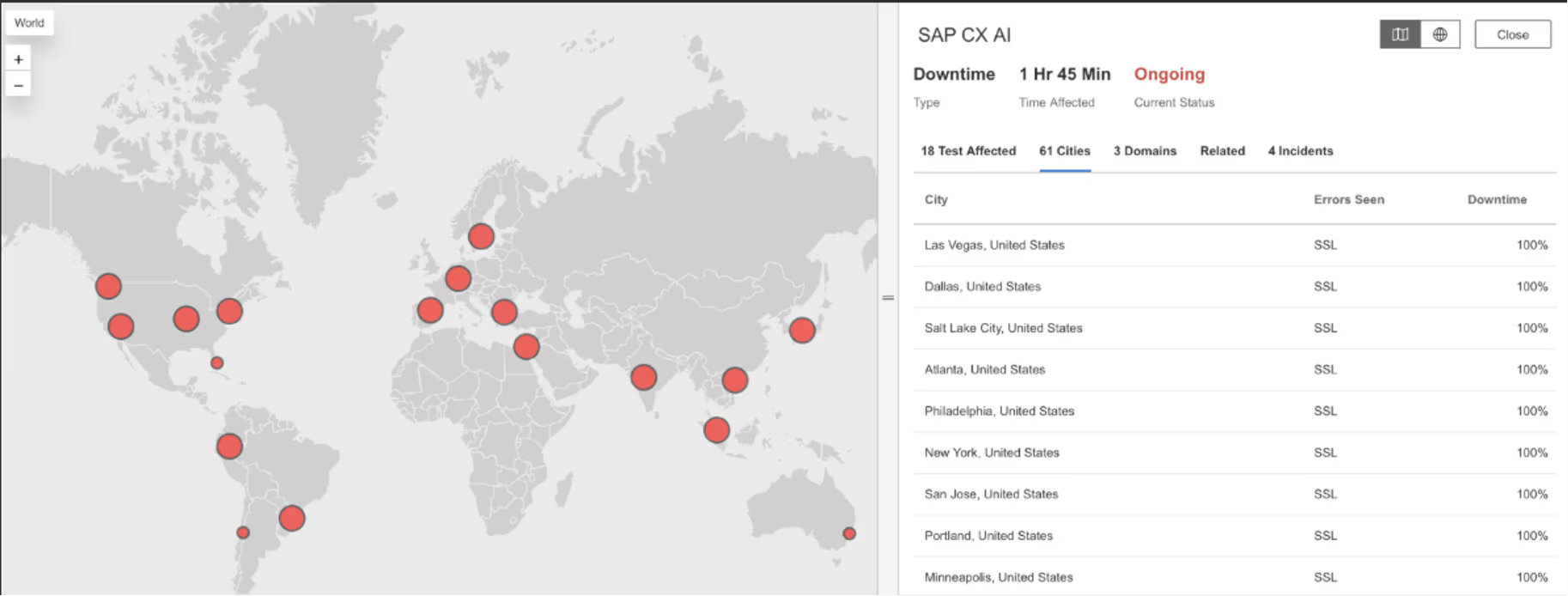

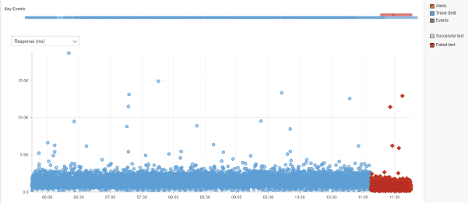

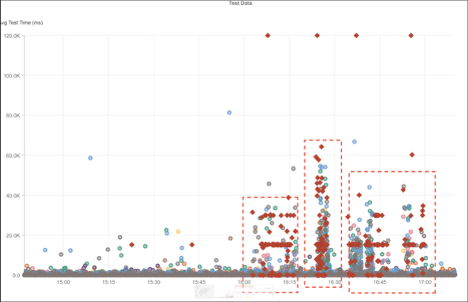

SAP CX AI

What happened?

Between around 00:22 and 02:09 EST, SAP CX AI experienced a service disruption affecting users in multiple regions worldwide. Requests to SAP CX AI services failed with SSL/TLS errors, so secure connections could not be established. The problem affected multiple SAP CX AI service endpoints, which led to widespread service unavailability for the duration of the outage.

Takeaways

SSL/TLS failures can take services down instantly and globally, regardless of application health, because they occur before a request ever reaches the application layer. This incident illustrates how certificate management and cryptographic dependencies can become high-impact, low-tolerance failure points in modern SaaS platforms. When multiple endpoints fail simultaneously with SSL errors, it often points to a shared certificate, trust chain, or configuration dependency, which widens the blast radius across regions and services. Continuously validating TLS handshakes and certificate expiry from outside the provider's environment helps surface these problems early, before they block all user access and escalate into long-running global outages.

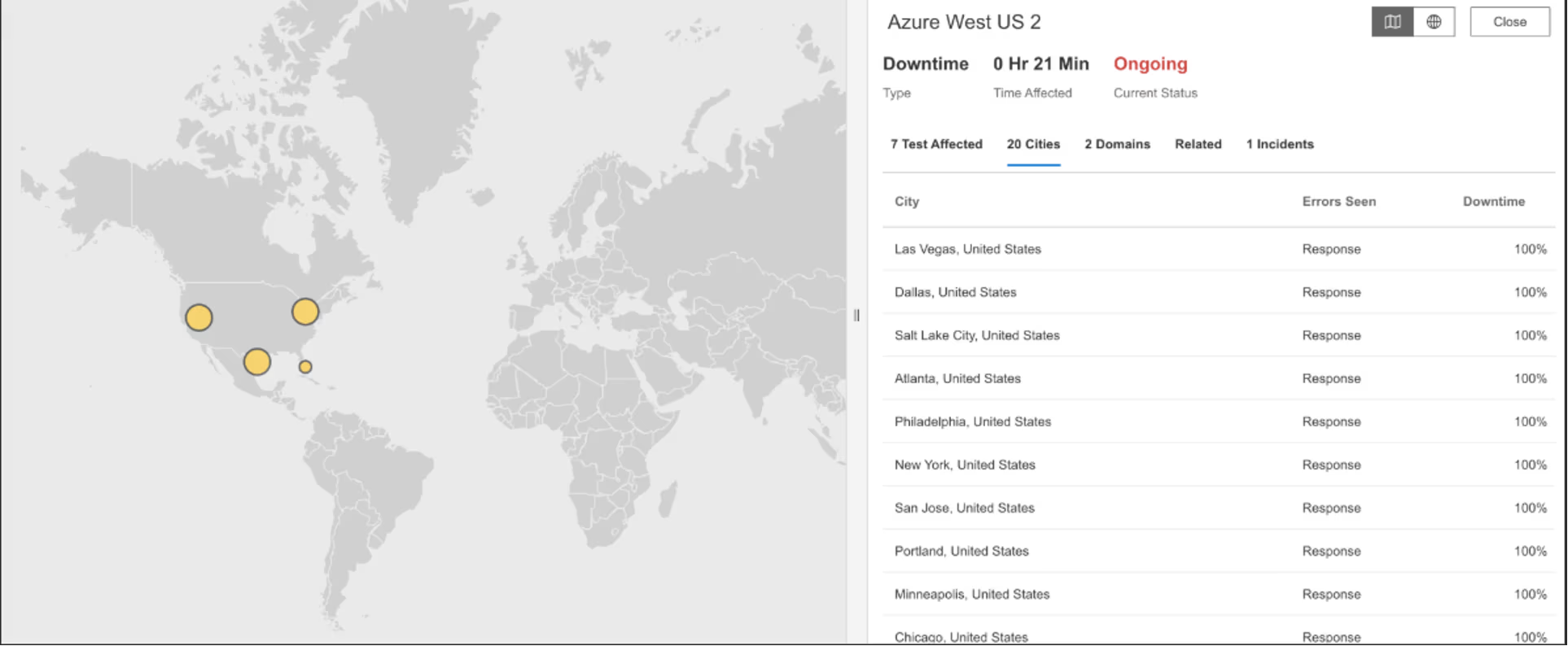

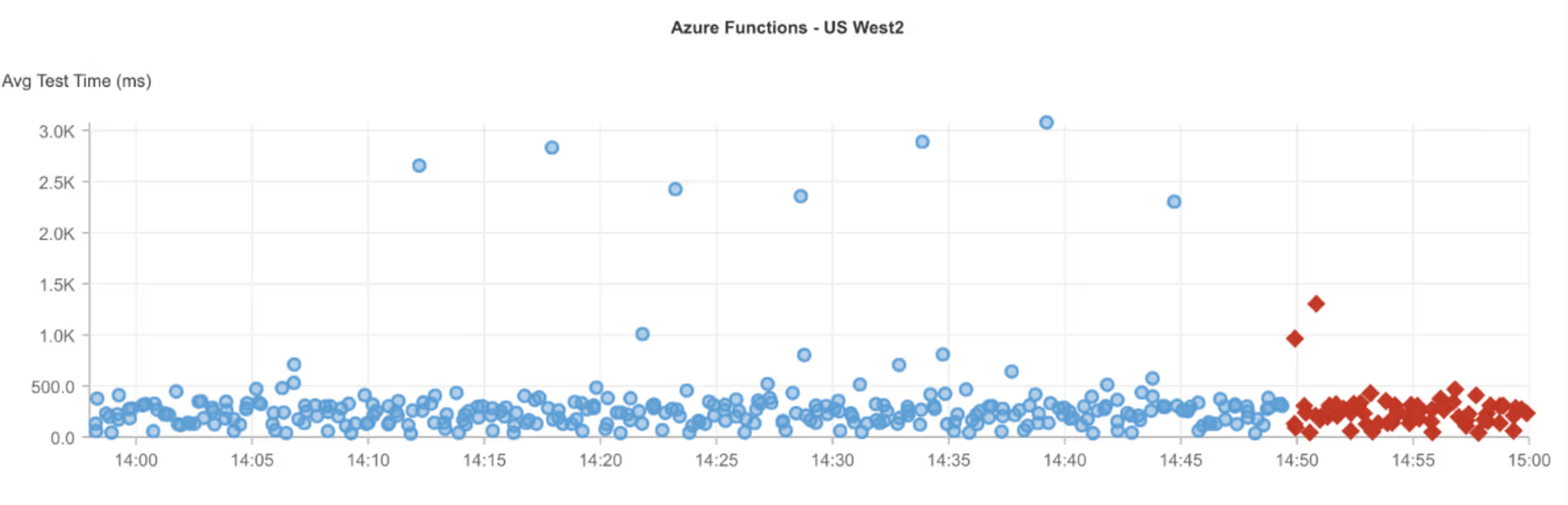

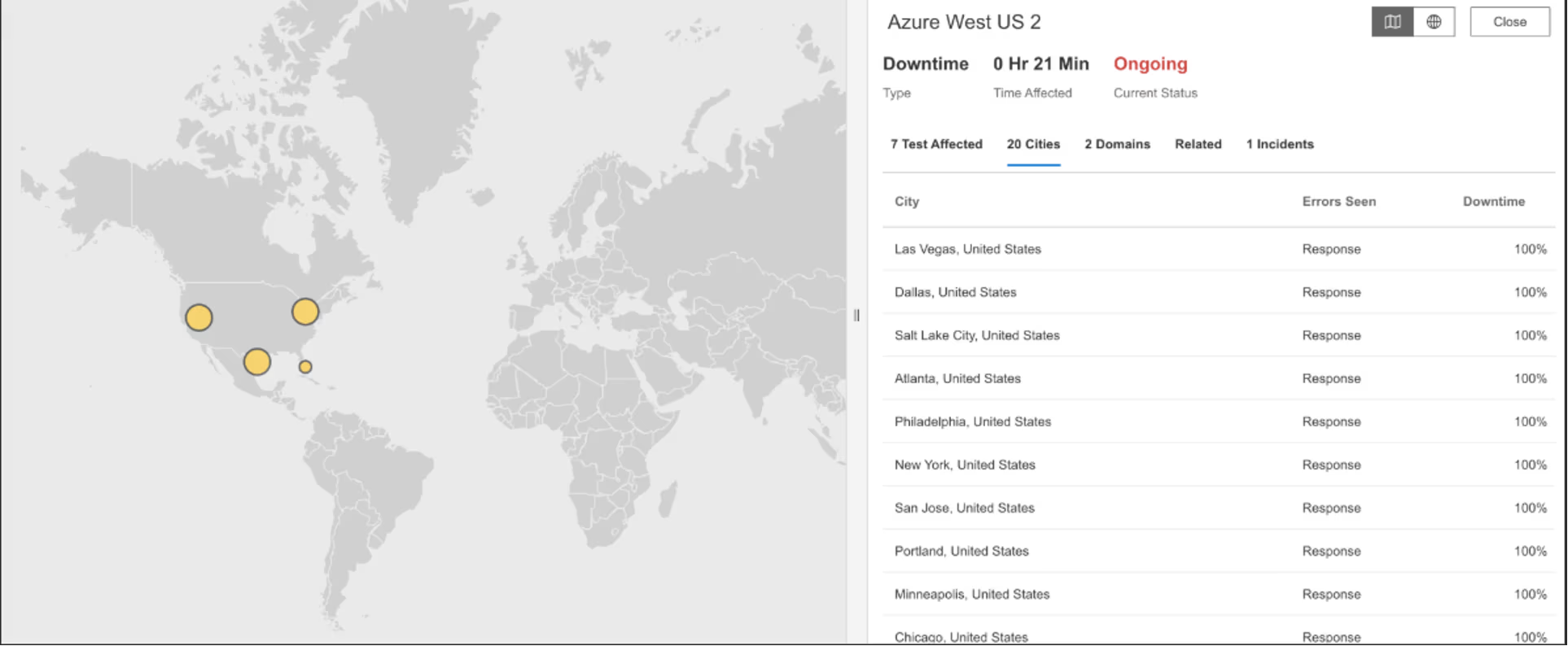

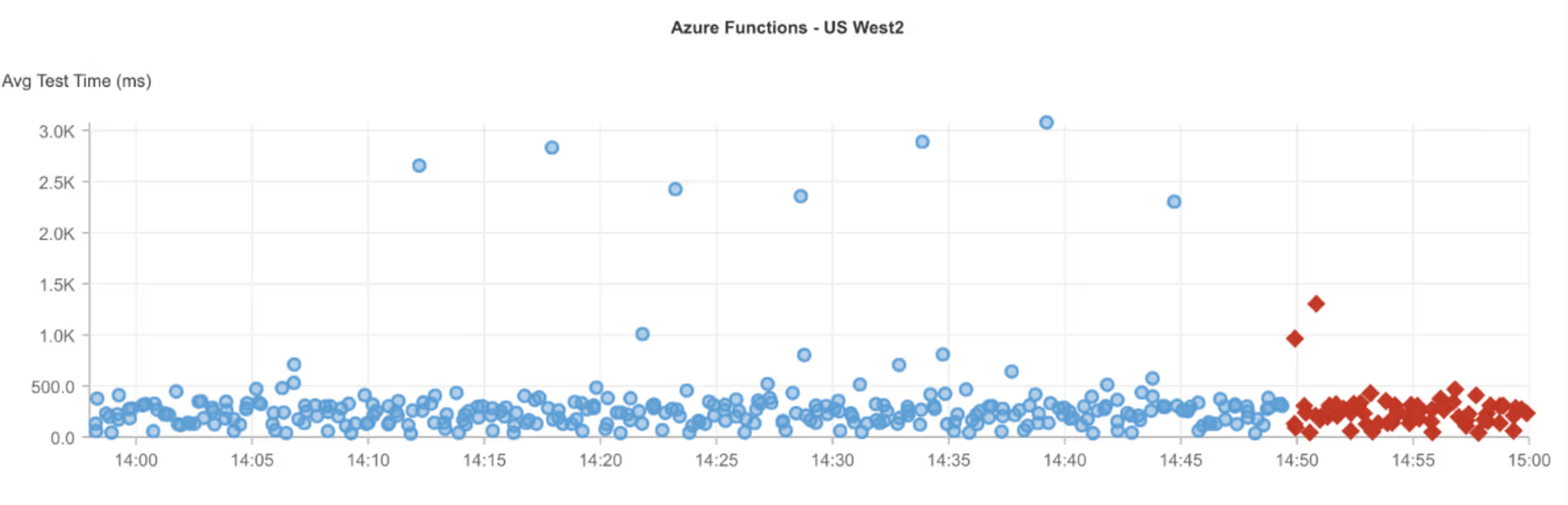

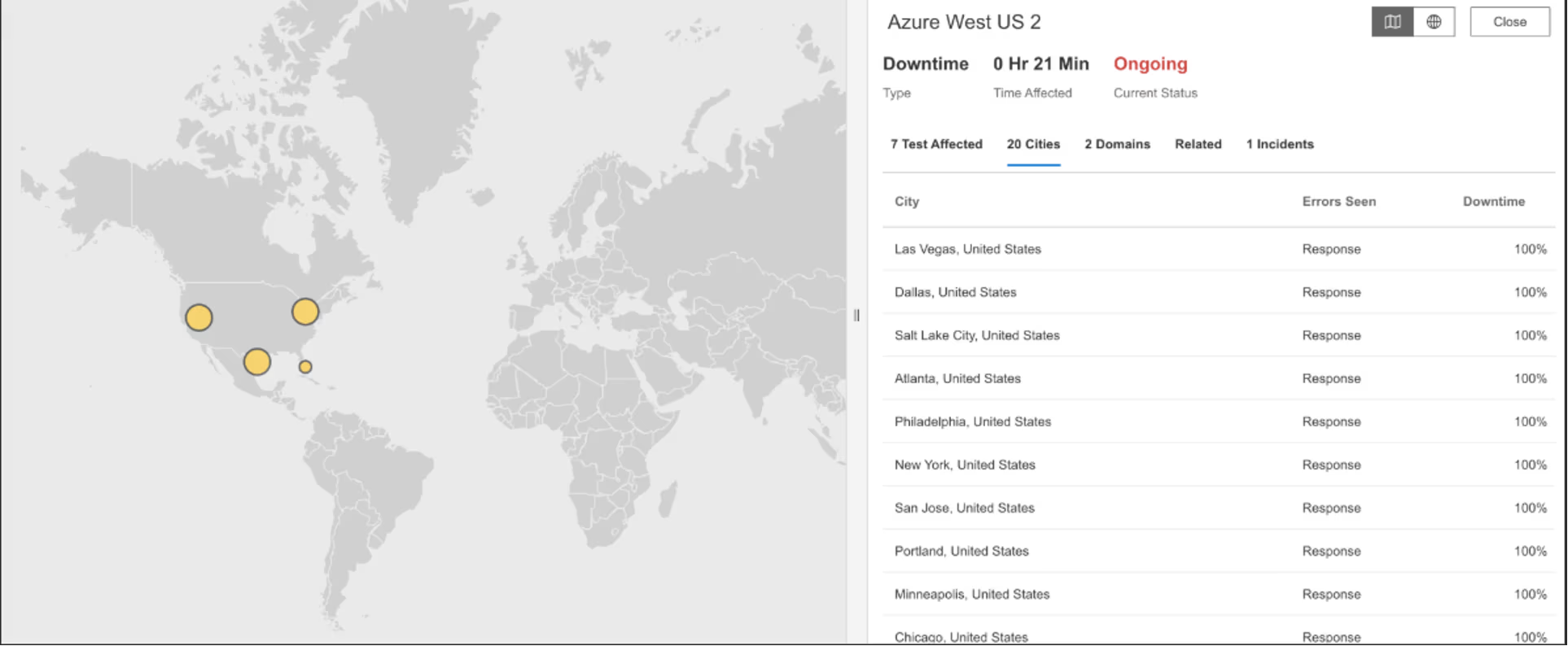

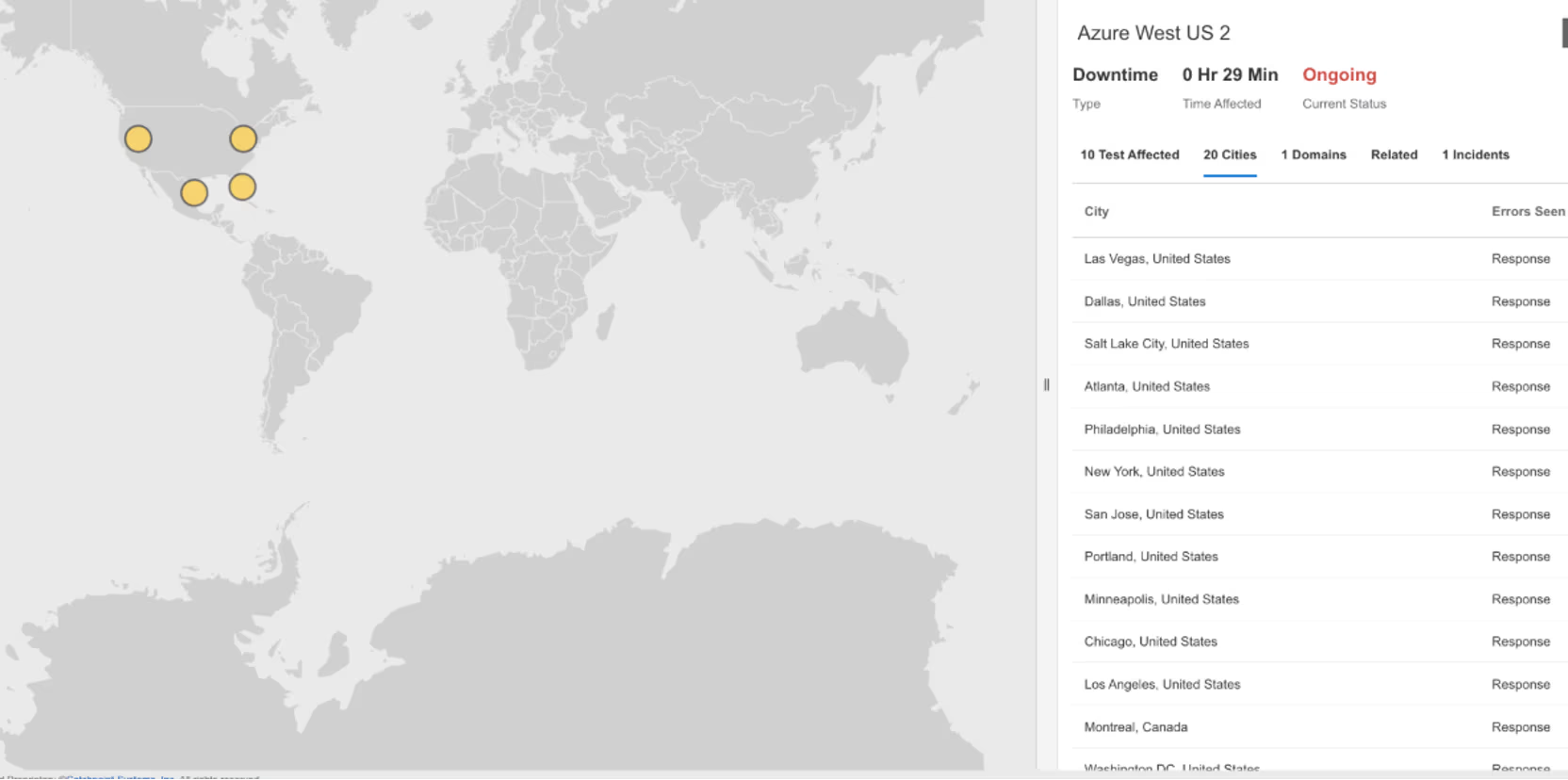

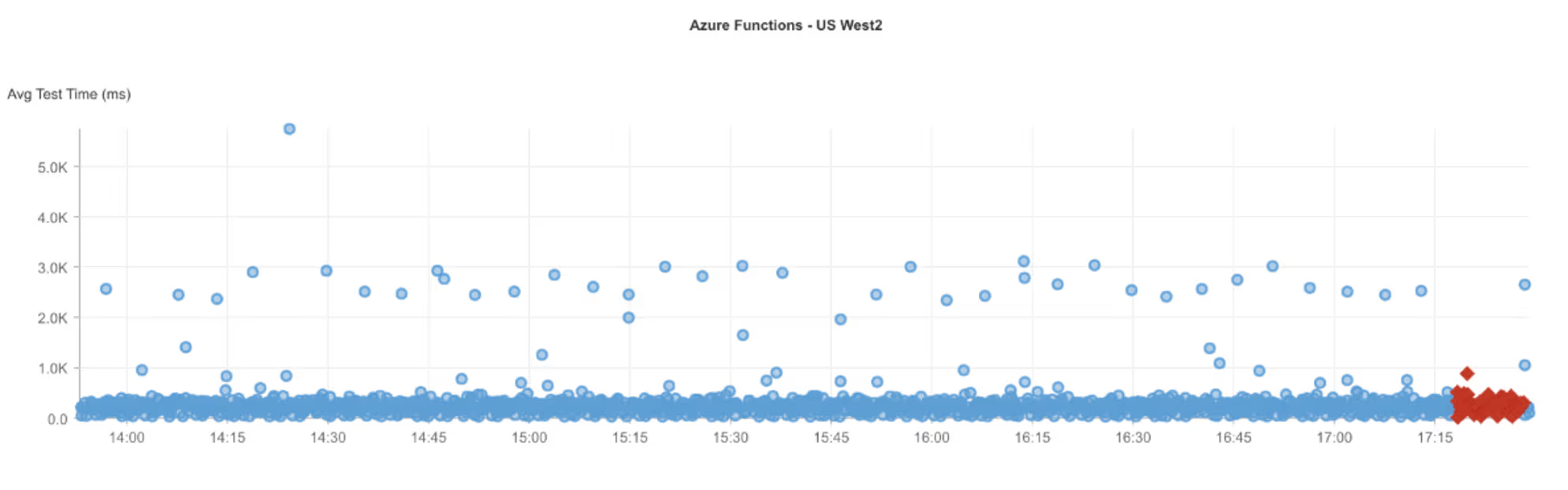

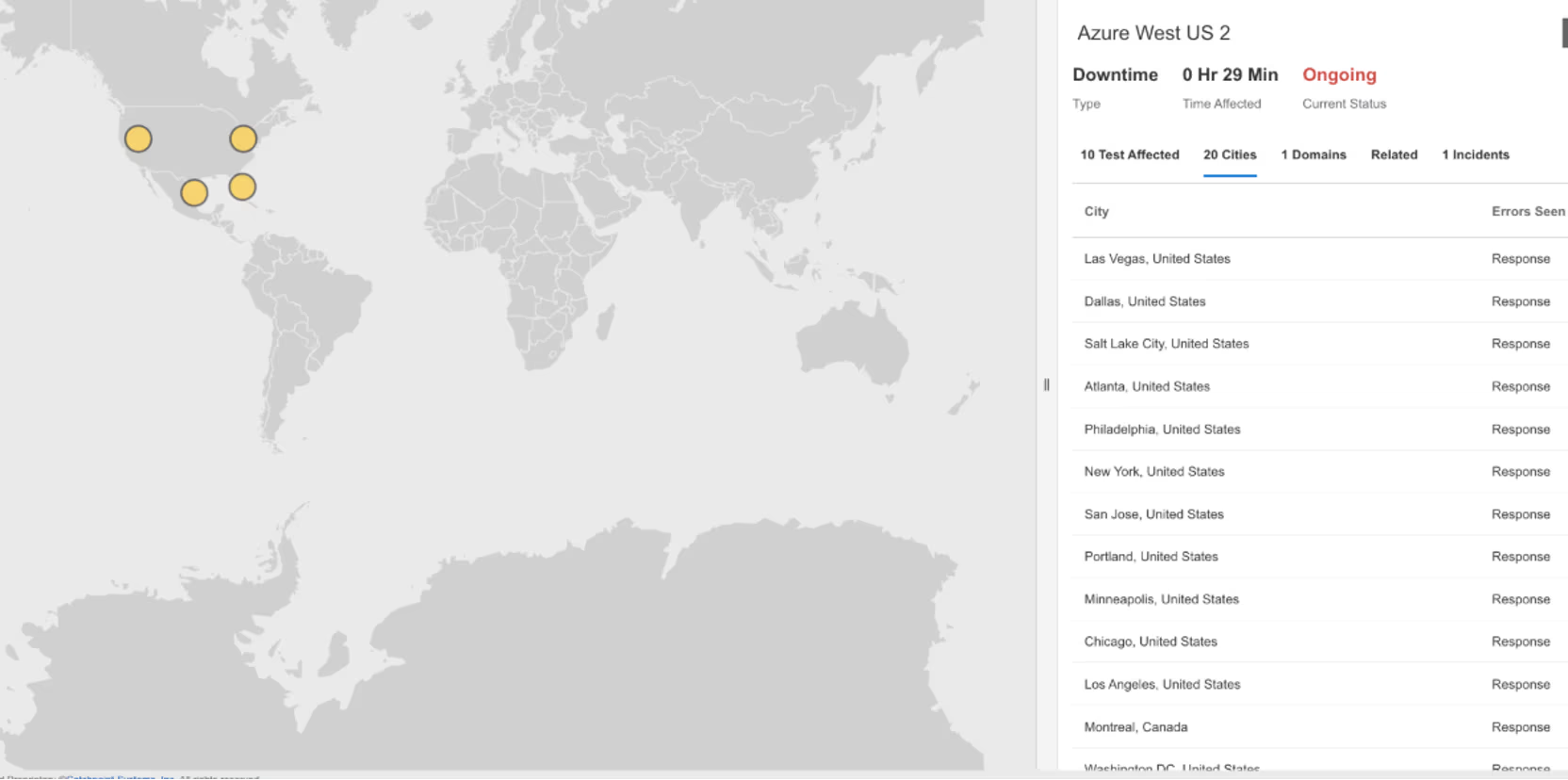

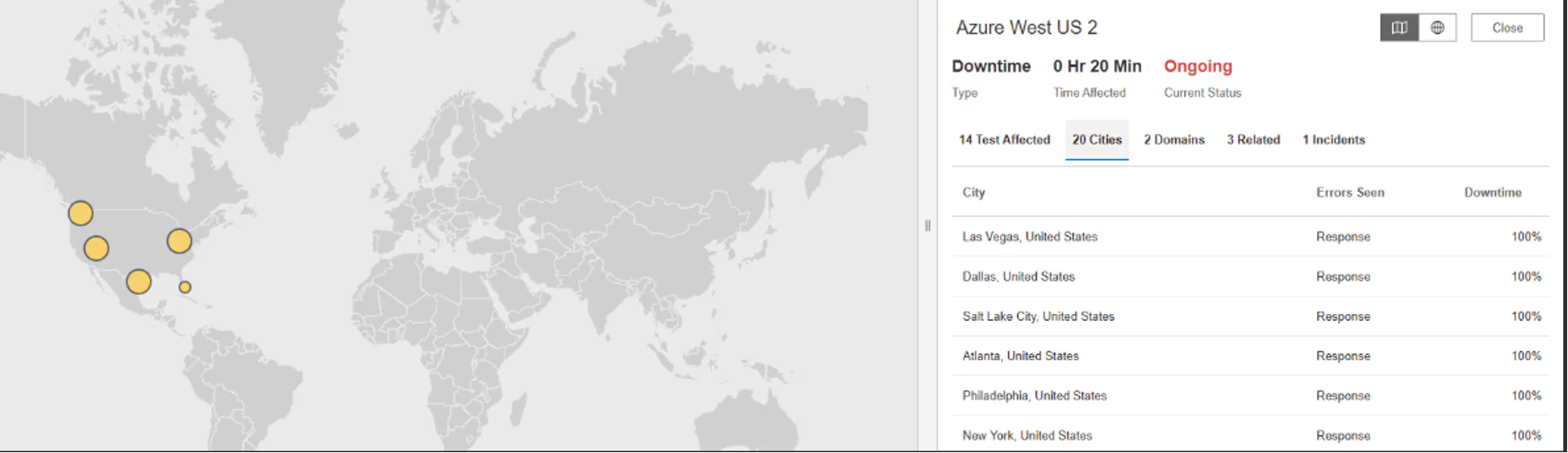

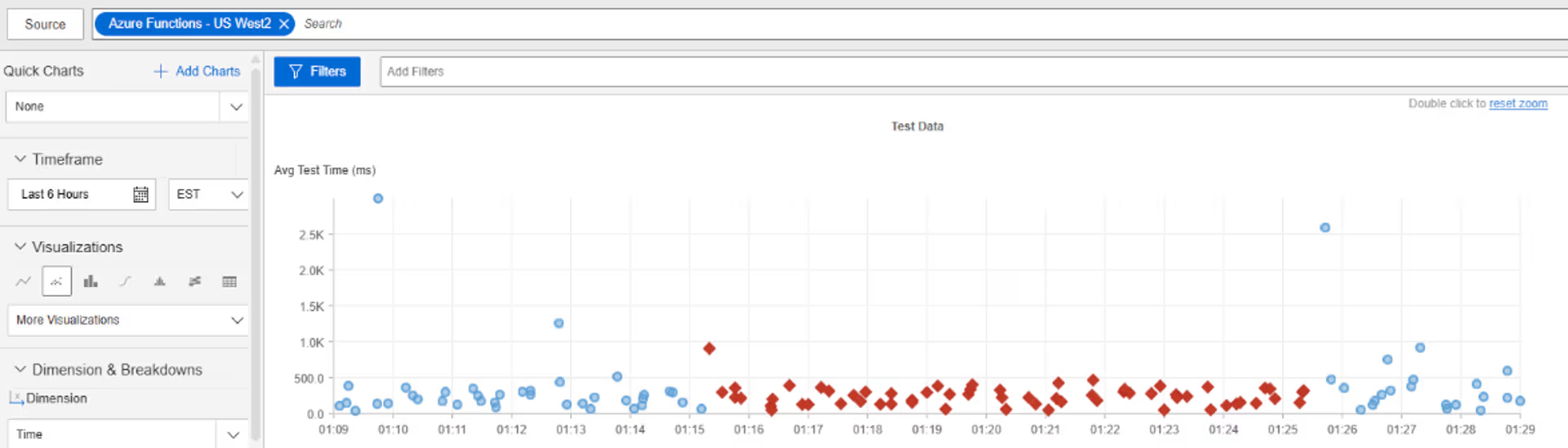

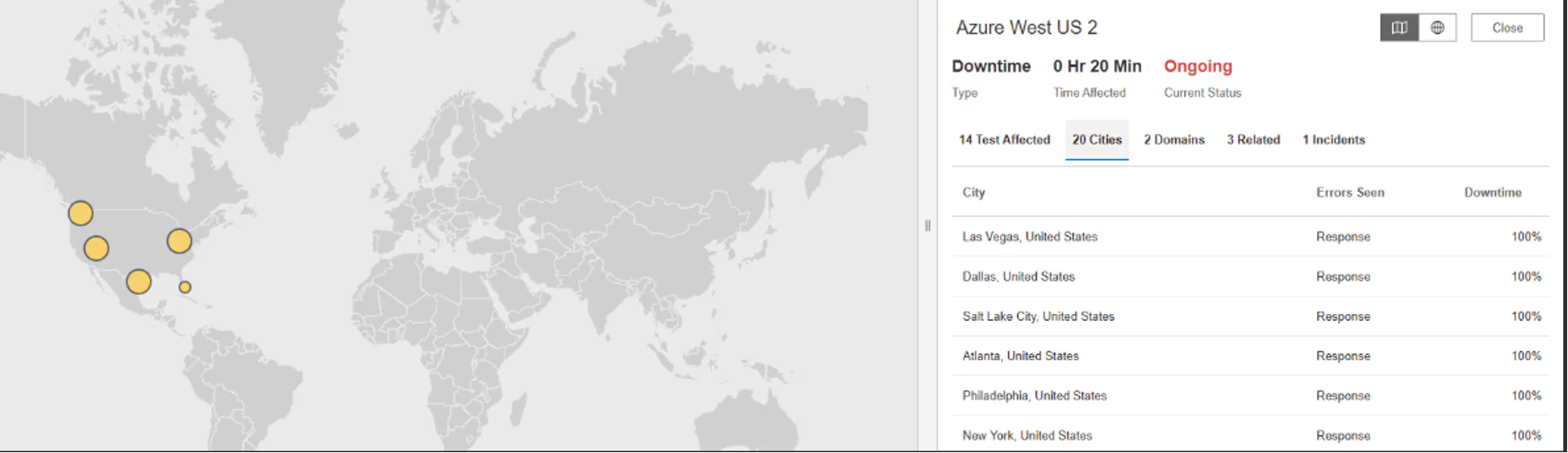

Azure West US 2

What happened?

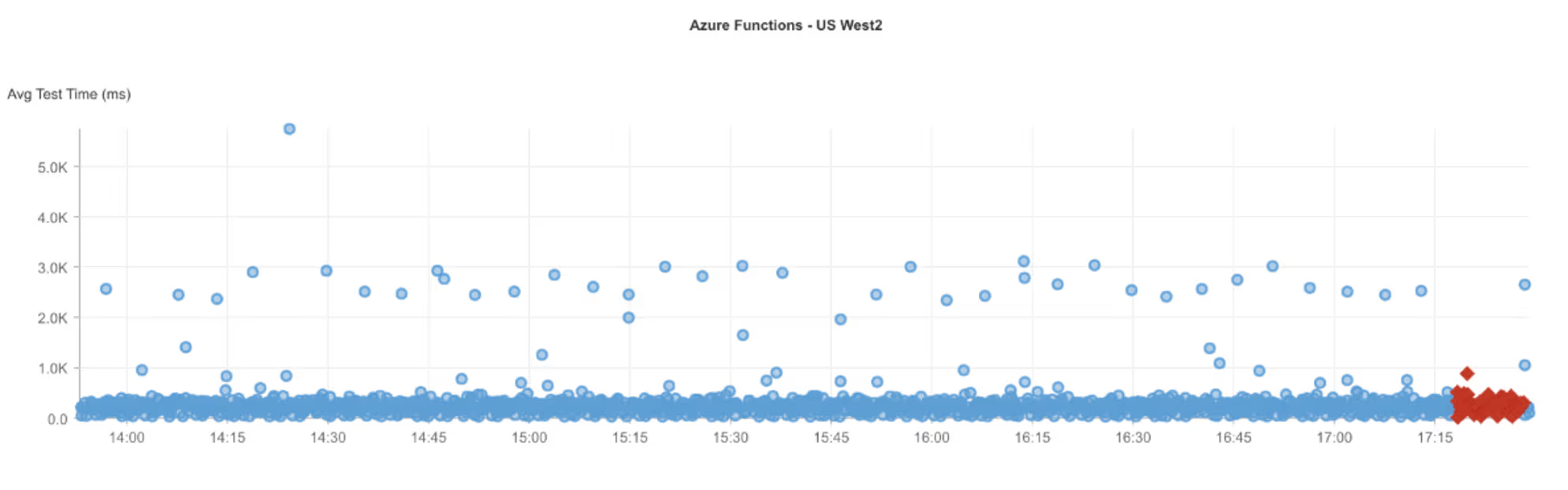

At around 14:49 EST, services hosted in the Azure West US 2 region experienced a disruption affecting users in North America. Requests to Azure-hosted applications returned HTTP 503 (Service Unavailable) errors, which means the servers were temporarily unable to process requests. This pointed to a regional service problem within Azure West US 2 that reduced availability for the duration of the outage.

Takeaways

Regional HTTP 503 errors illustrate the risks of depending on a single region, even within highly resilient cloud platforms. When a cloud region comes under capacity pressure or suffers internal service instability, workloads that are not actively distributed across multiple regions can become unavailable even though the platform as a whole remains functional. This event underscores the importance of regional isolation strategies such as multi-region deployments and failover testing for applications with availability requirements. Observing failures confined to a single cloud region helps separate regional infrastructure problems from application failures or Internet-wide problems, which speeds up scoping and response during cloud provider incidents.
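The multi-region failover idea above can be sketched as a function that walks an ordered list of regional endpoints and returns the first one that answers successfully. The region names and URLs are hypothetical, and `probe` stands in for whatever HTTP client or health check a team actually uses.

```python
from typing import Callable, Sequence, Tuple


def first_healthy_region(
    endpoints: Sequence[Tuple[str, str]], probe: Callable[[str], int]
) -> str:
    """Return the first region whose endpoint answers with a 2xx or 3xx status.

    endpoints is an ordered list of (region, url); probe(url) returns an HTTP status code.
    """
    for region, url in endpoints:
        try:
            status = probe(url)
        except OSError:
            # Connection-level failure: treat the region as unhealthy and move on.
            continue
        if 200 <= status < 400:
            return region
    raise RuntimeError("no healthy region available")


# Hypothetical endpoints and a fake probe that simulates a 503 in the primary region.
ENDPOINTS = [
    ("westus2", "https://app-westus2.example/health"),
    ("eastus", "https://app-eastus.example/health"),
]
fake_status = {ENDPOINTS[0][1]: 503, ENDPOINTS[1][1]: 200}
print(first_healthy_region(ENDPOINTS, lambda url: fake_status[url]))
```

Failover logic like this only helps if it is exercised regularly, which is why the text pairs multi-region deployments with failover testing.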

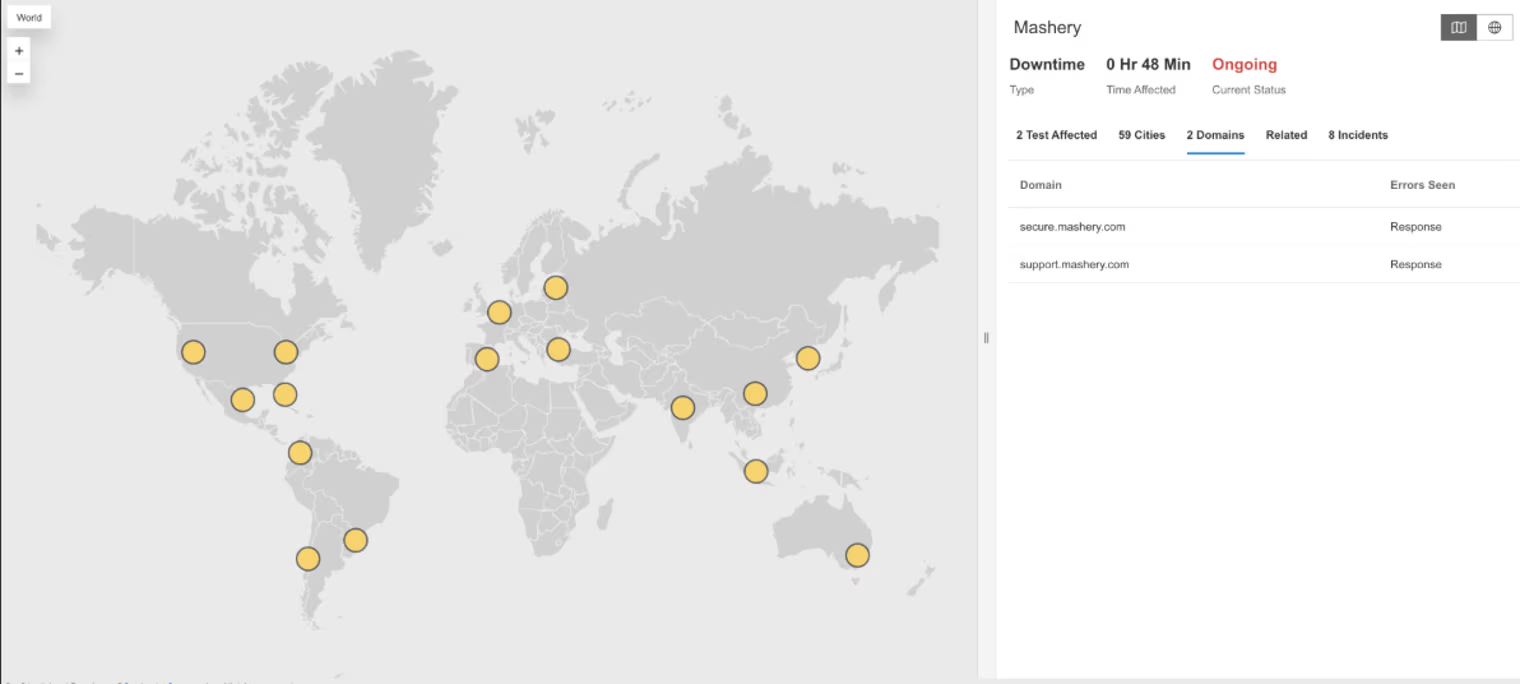

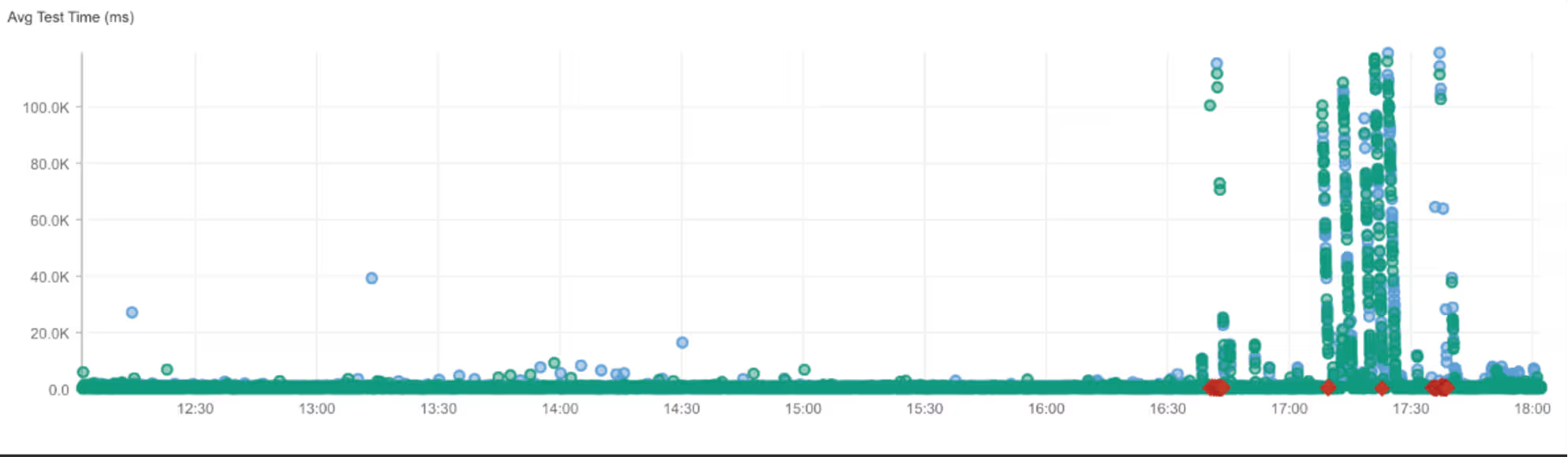

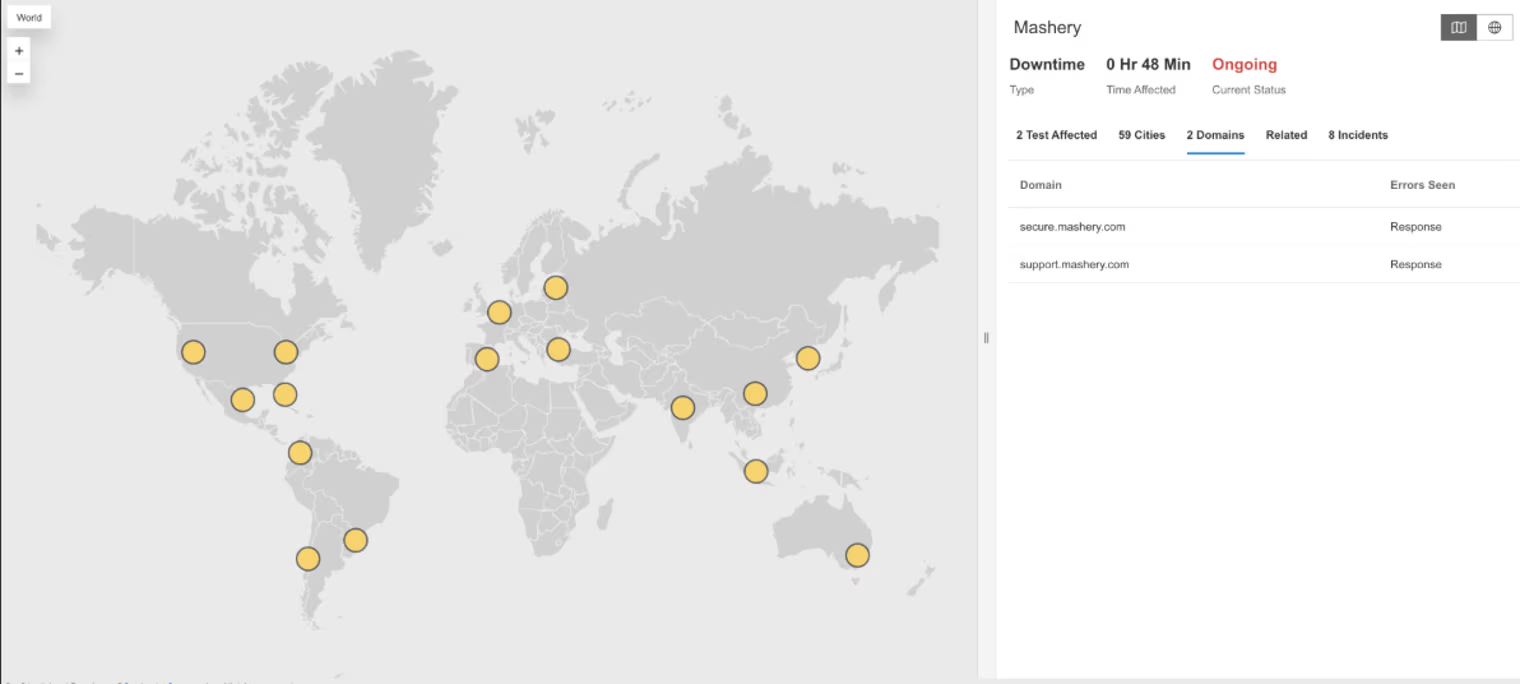

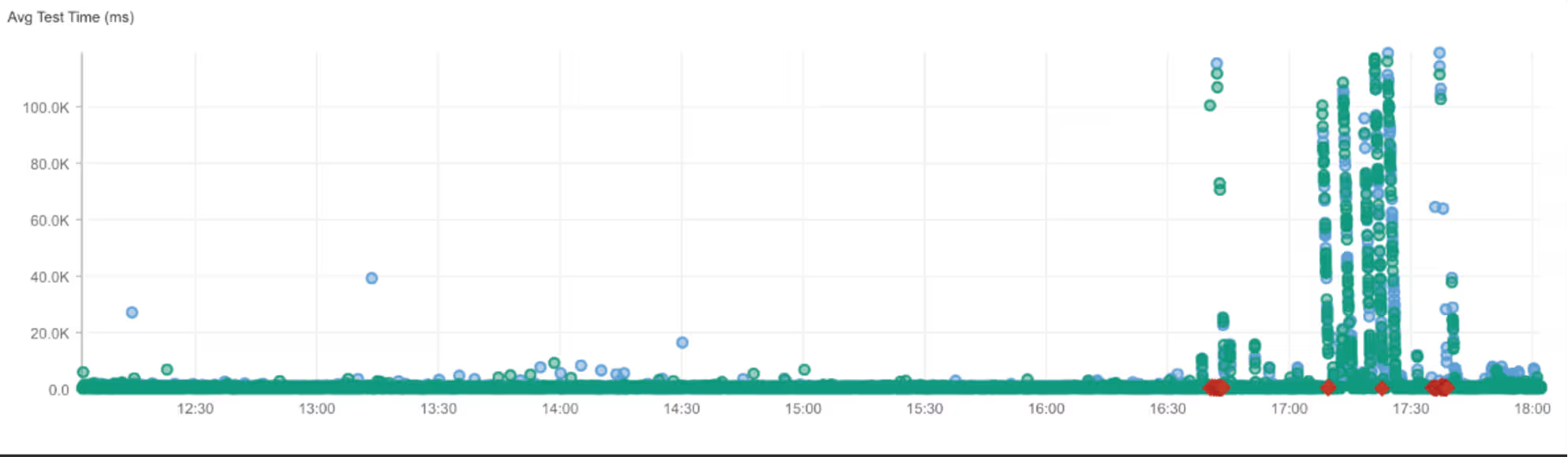

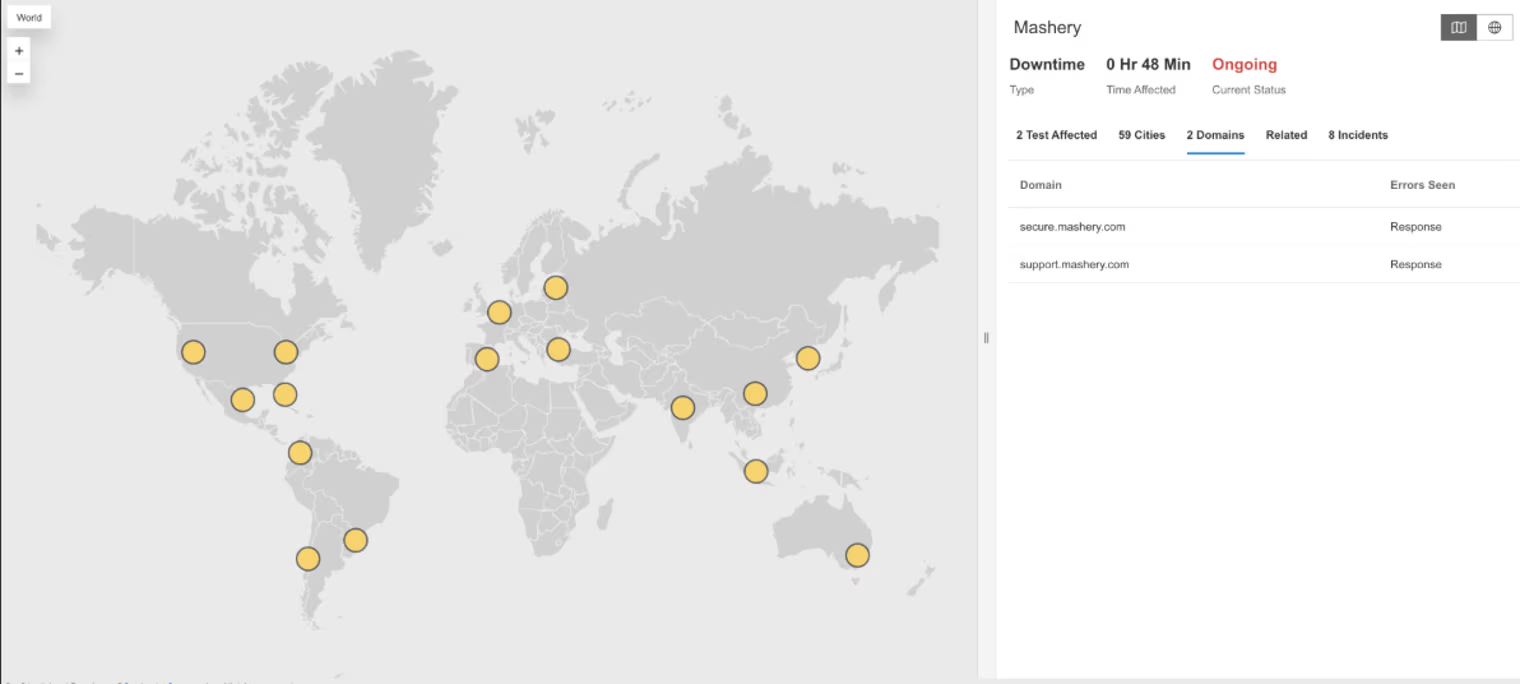

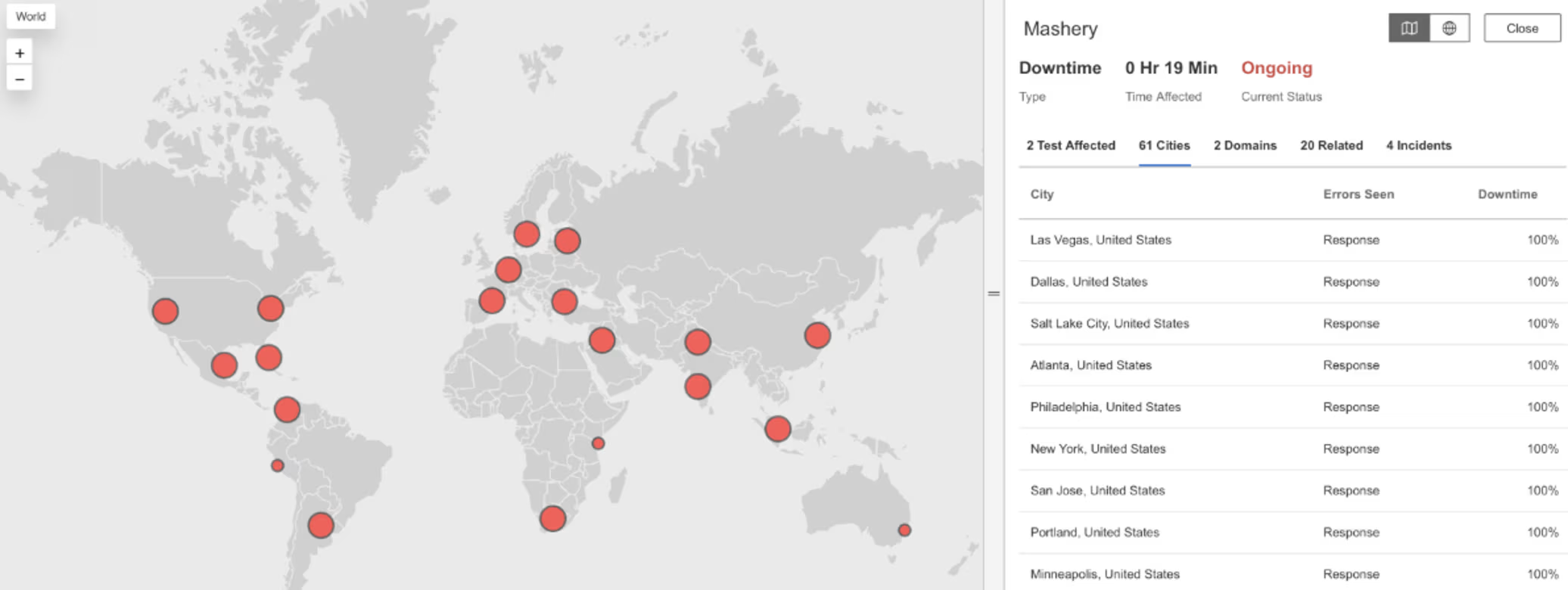

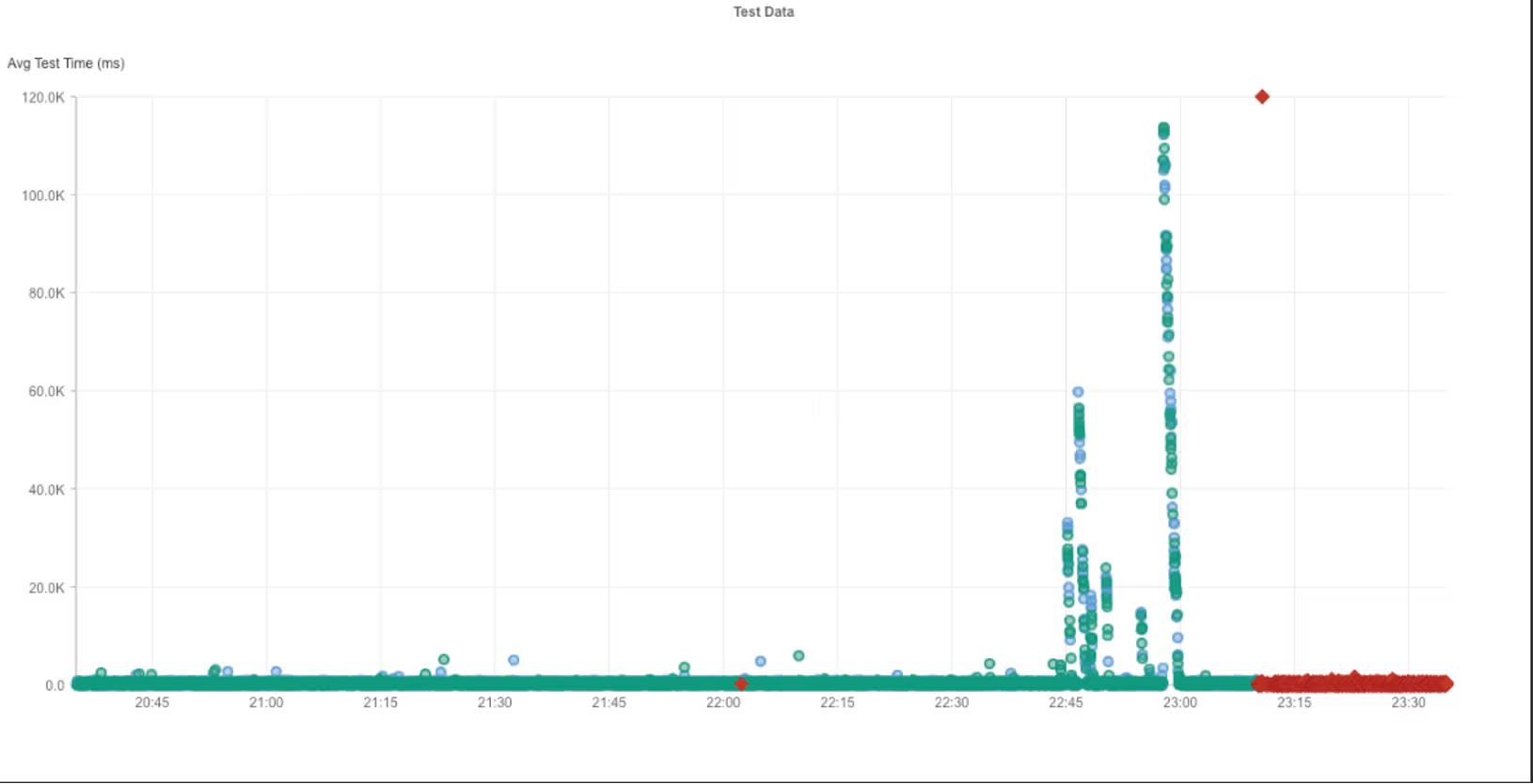

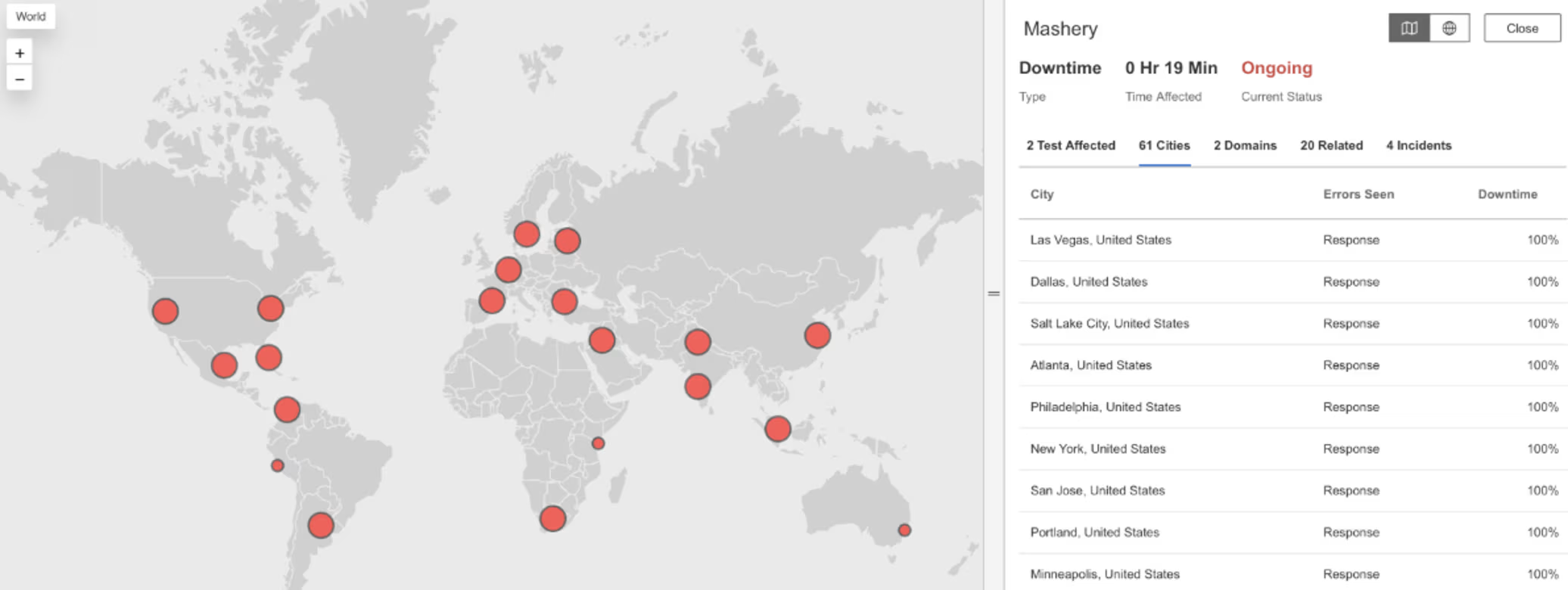

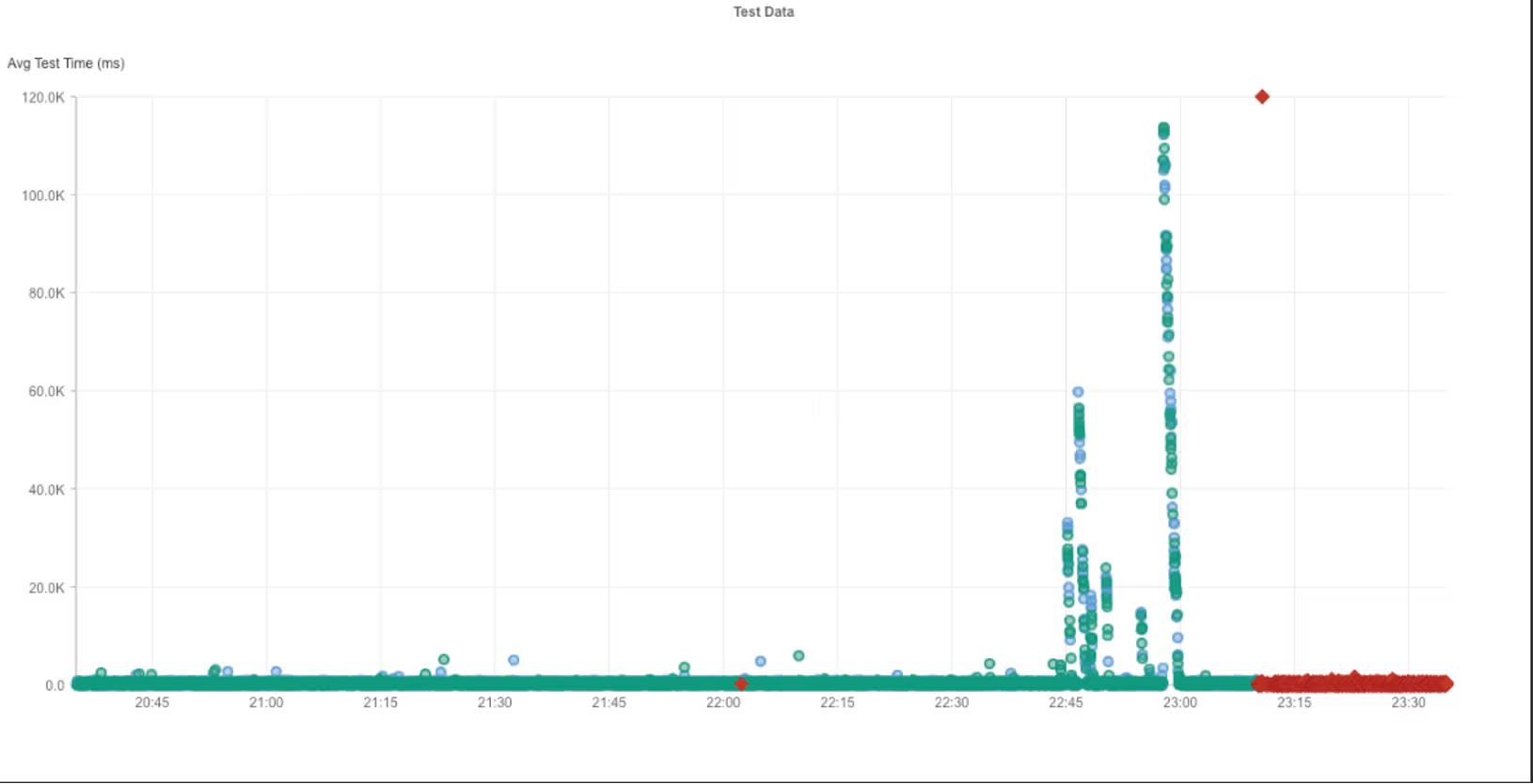

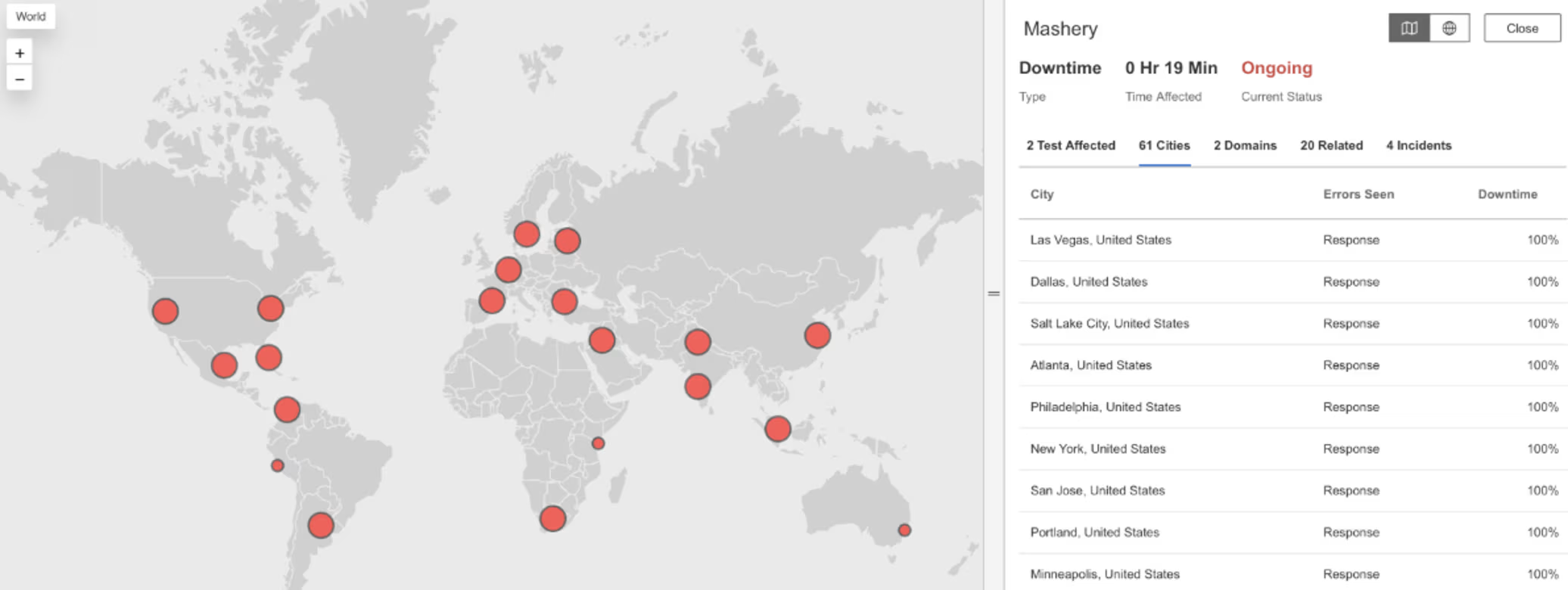

Mashery

What happened?

At around 16:40 EST, Mashery experienced a global service disruption affecting users in multiple regions. Requests to Mashery services returned HTTP 503 (Service Unavailable) errors, which means the servers were temporarily unable to handle incoming requests. Alongside these errors, request times rose sharply, so users faced long waits before requests failed. This combination pointed to both degraded performance and reduced availability for the duration of the outage.

Takeaways

On API management platforms, elevated latency followed by HTTP 503 errors often points to an overloaded control plane or gateway, where traffic reaches the service but cannot be processed quickly enough. The sharp rise in wait times shows how performance degradation can serve as an early warning before requests start failing broadly. Because API gateways sit directly in the request path for many downstream services, instability at this layer can quickly turn into customer-visible outages. Tracking latency distributions alongside error rates helps distinguish overload conditions from hard failures and provides early signals for mitigation before availability drops completely.
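To illustrate how latency can act as an early warning ahead of errors, this Python sketch measures by how many monitoring windows a latency breach precedes an error-rate breach. The window format and both thresholds are assumptions, and the sample series is invented for the example.

```python
from typing import List, Optional, Sequence, Tuple

# One monitoring window: (p95 latency in ms, error rate between 0 and 1).
Window = Tuple[float, float]


def first_breach(values: Sequence[float], threshold: float) -> Optional[int]:
    """Index of the first value above the threshold, or None if it is never exceeded."""
    for index, value in enumerate(values):
        if value > threshold:
            return index
    return None


def warning_lead(
    windows: List[Window], latency_limit_ms: float, error_limit: float
) -> Optional[int]:
    """Number of windows by which the latency breach precedes the error-rate breach.

    Returns None when either signal never crosses its limit.
    """
    latency_at = first_breach([w[0] for w in windows], latency_limit_ms)
    errors_at = first_breach([w[1] for w in windows], error_limit)
    if latency_at is None or errors_at is None:
        return None
    return errors_at - latency_at


# Hypothetical series: latency climbs two windows before errors spike.
series = [(200.0, 0.0), (220.0, 0.0), (1800.0, 0.01), (4000.0, 0.02), (6000.0, 0.4)]
print(warning_lead(series, latency_limit_ms=1000.0, error_limit=0.05))
```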

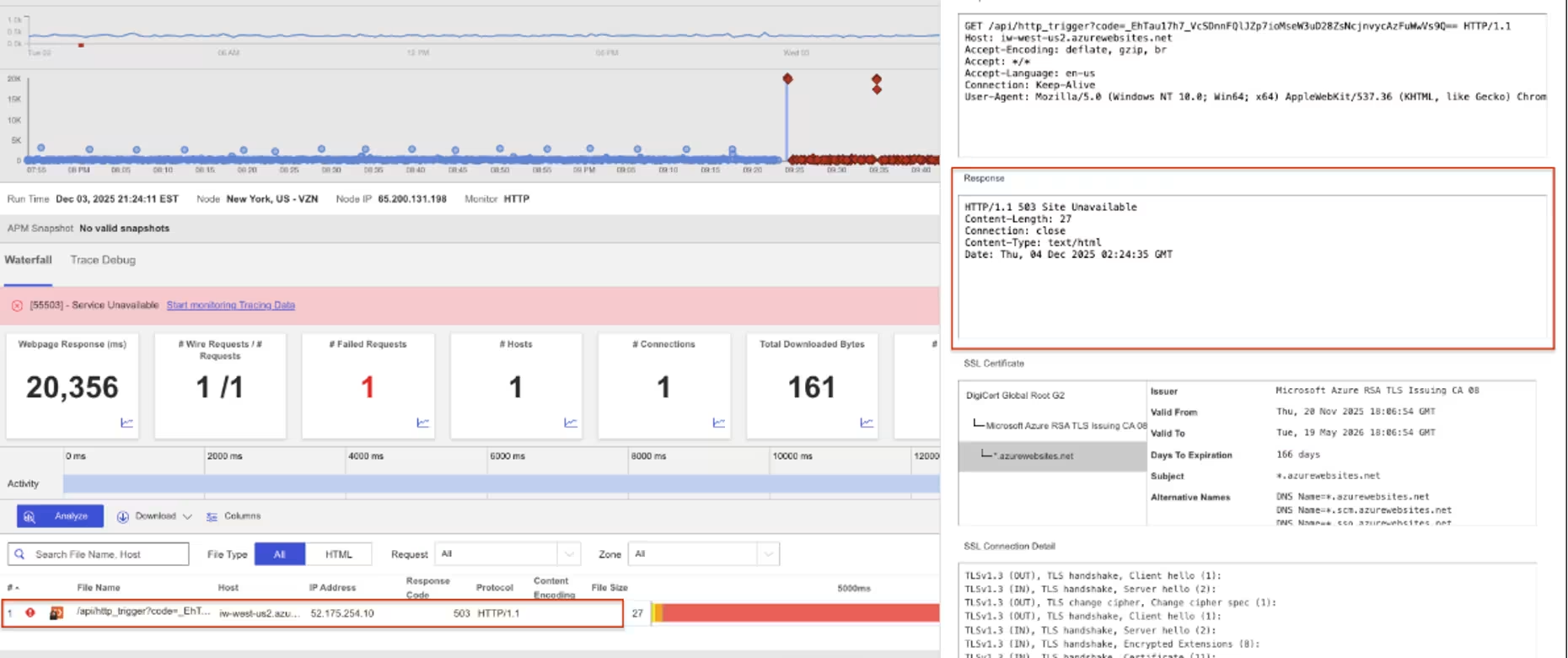

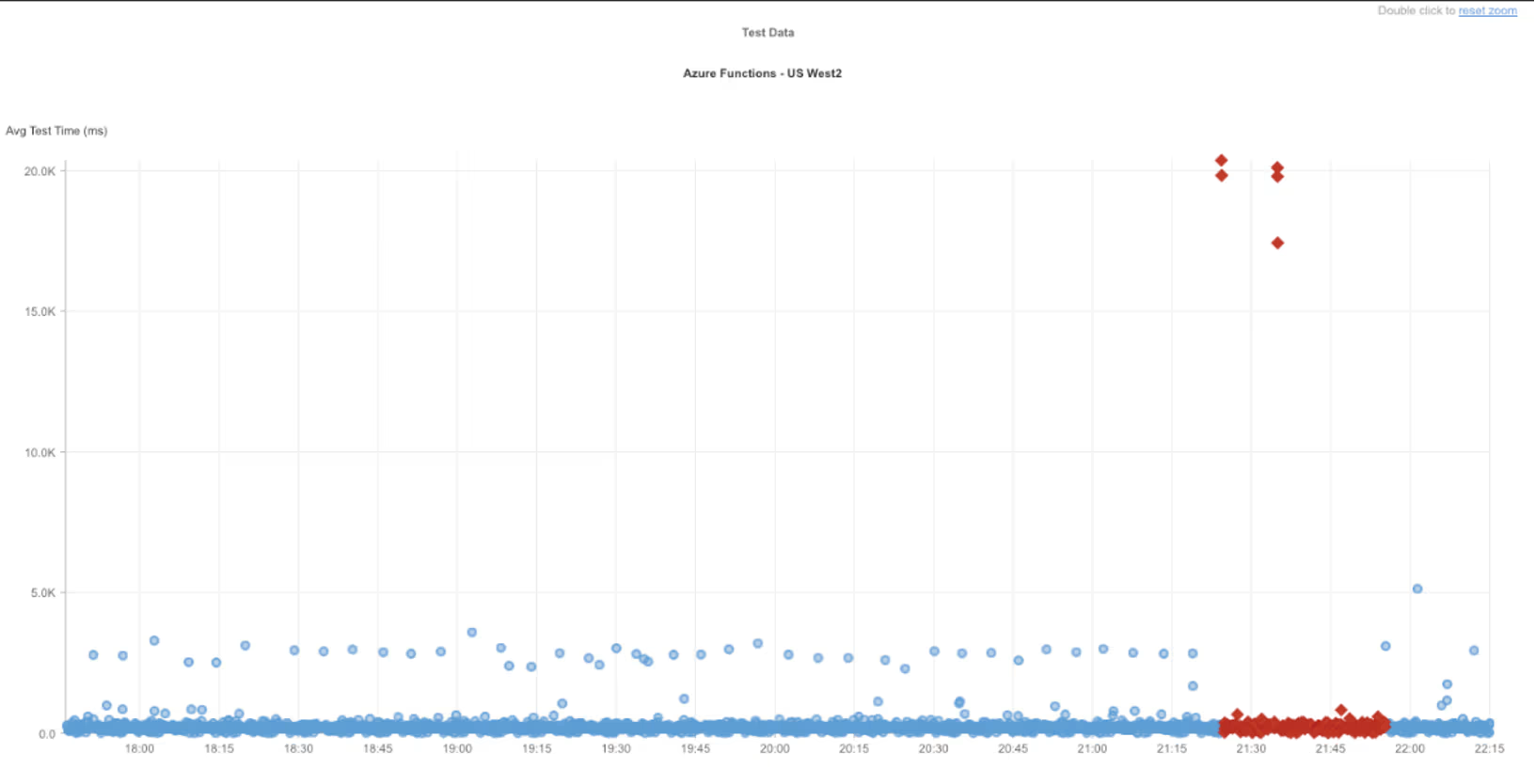

Azure West US 2

What happened?

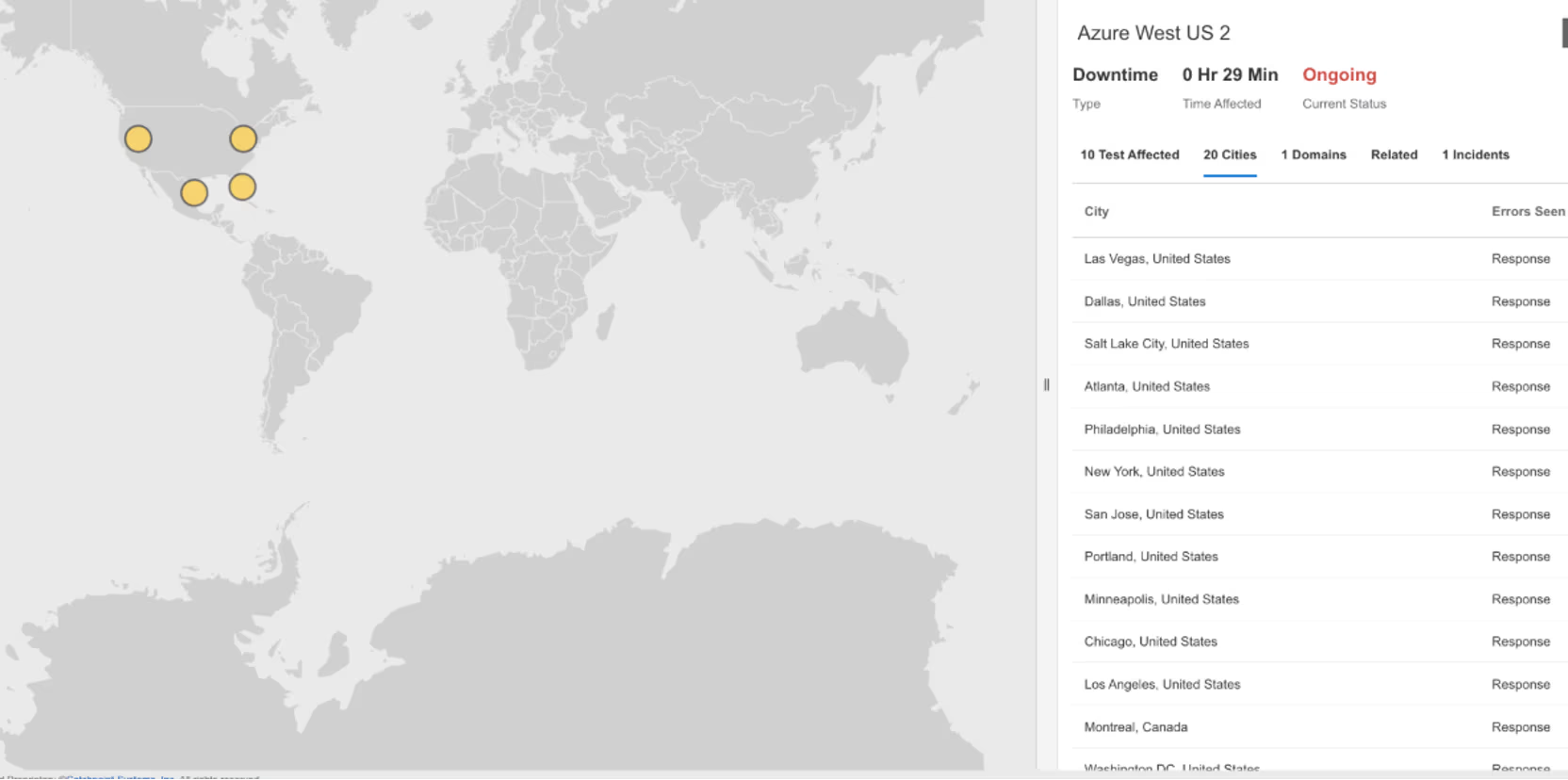

On December 11, 2025, at 17:18 EST, Internet Sonar detected an outage affecting Azure West US 2 across multiple regions of North America. Requests to iw-west-us2.azurewebsites.net returned HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests), indicating a service disruption in Azure West US 2.

Takeaways

Short bursts of HTTP 503 errors often reflect transient capacity constraints or internal service restarts and do not necessarily indicate a sustained regional outage. These events may be visible externally for only a brief period, but they can still affect workloads that have no retries or traffic buffering in place. This incident underscores the importance of graceful degradation patterns, such as client-side retries with backoff and queue-based processing, for absorbing brief platform-level disruptions. Even low-severity cloud incidents reinforce the need to design applications that tolerate transient service failures without cascading into user-facing outages.
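Client-side retries with backoff, as mentioned above, might look like the following Python sketch. It uses exponential backoff with full jitter. The attempt count, delays, and the `request` callable are placeholders for illustration rather than recommended values.

```python
import random
import time
from typing import Callable


def call_with_backoff(
    request: Callable[[], int],
    attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call request() and retry on HTTP 503 with exponential backoff and full jitter.

    request() returns an HTTP status code. At most `attempts` calls are made,
    and the last status is returned even if it is still 503.
    """
    status = request()
    for attempt in range(1, attempts):
        if status != 503:
            return status
        # The delay ceiling doubles per retry and is capped; jitter spreads out client retries.
        ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
        sleep(random.uniform(0, ceiling))
        status = request()
    return status


# Hypothetical service that recovers on the third call; sleeping is stubbed out here.
responses = iter([503, 503, 200])
print(call_with_backoff(lambda: next(responses), sleep=lambda seconds: None))
```

Jitter matters because many clients retrying on the same schedule can hit a recovering service all at once.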

Azure West US 2

What happened?

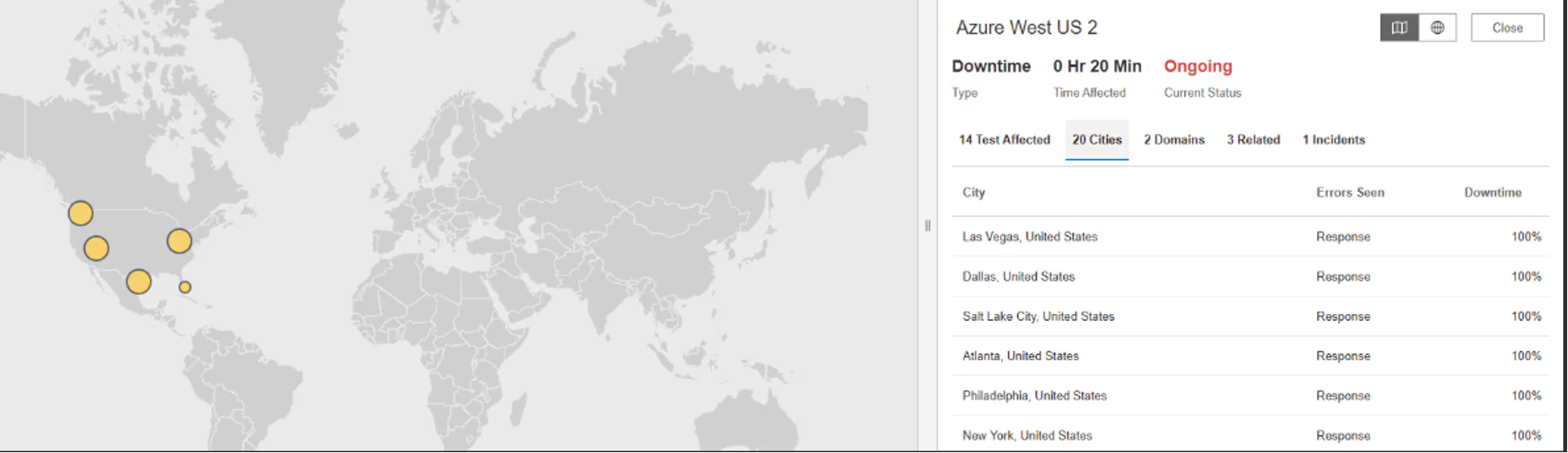

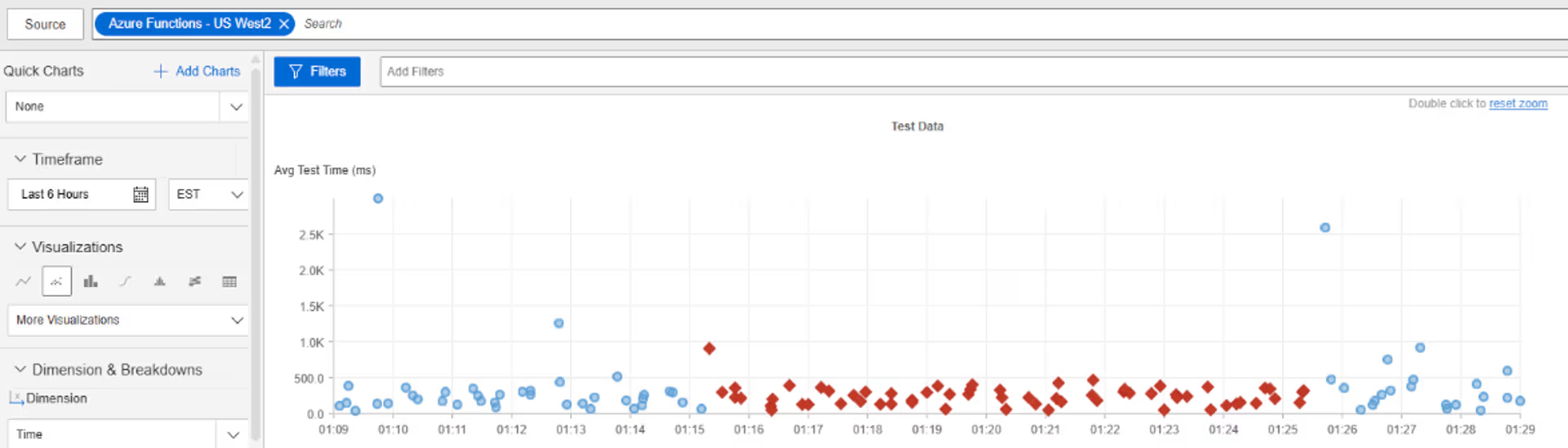

On December 10, 2025, from 01:15:20 to 01:25:21 EST, Internet Sonar detected an outage affecting Azure West US 2 in multiple regions, including the US and Canada. Requests to iw-west-us2.azurewebsites.net returned HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests), indicating a brief service disruption in Azure West US 2.

Takeaways

A short, clearly bounded window of HTTP 503 errors points to a transient loss of service readiness rather than a hard failure, for example components failing health checks during recovery or traffic being routed to instances that are not yet ready to serve. These events often occur during internal remediation, scaling actions, or dependency restarts, and they can be visible externally even when recovery is already underway. For cloud platform users, this underscores the importance of readiness checks, retries, and circuit breaking, because brief periods of partial unavailability can still produce user-visible errors when clients treat 503 responses as final failures.
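The circuit-breaking pattern named above can be sketched in a few lines of Python. This is a deliberately small version: it opens after a number of consecutive 503 responses and allows a trial request once a cool-down has elapsed. The thresholds and the class name are assumptions, and production libraries typically offer more states and metrics.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Minimal circuit breaker keyed on consecutive HTTP 503 responses."""

    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        """True if a request may be sent (closed, or open long enough to try again)."""
        if self.opened_at is None:
            return True
        return self.clock() - self.opened_at >= self.reset_after

    def record(self, status: int) -> None:
        """Update the breaker with the status code of a completed request."""
        if status == 503:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                # Open, or re-open after a failed trial request.
                self.opened_at = self.clock()
        else:
            self.failures = 0
            self.opened_at = None


breaker = CircuitBreaker(failure_threshold=2)
breaker.record(503)
breaker.record(503)
print(breaker.allow())
```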

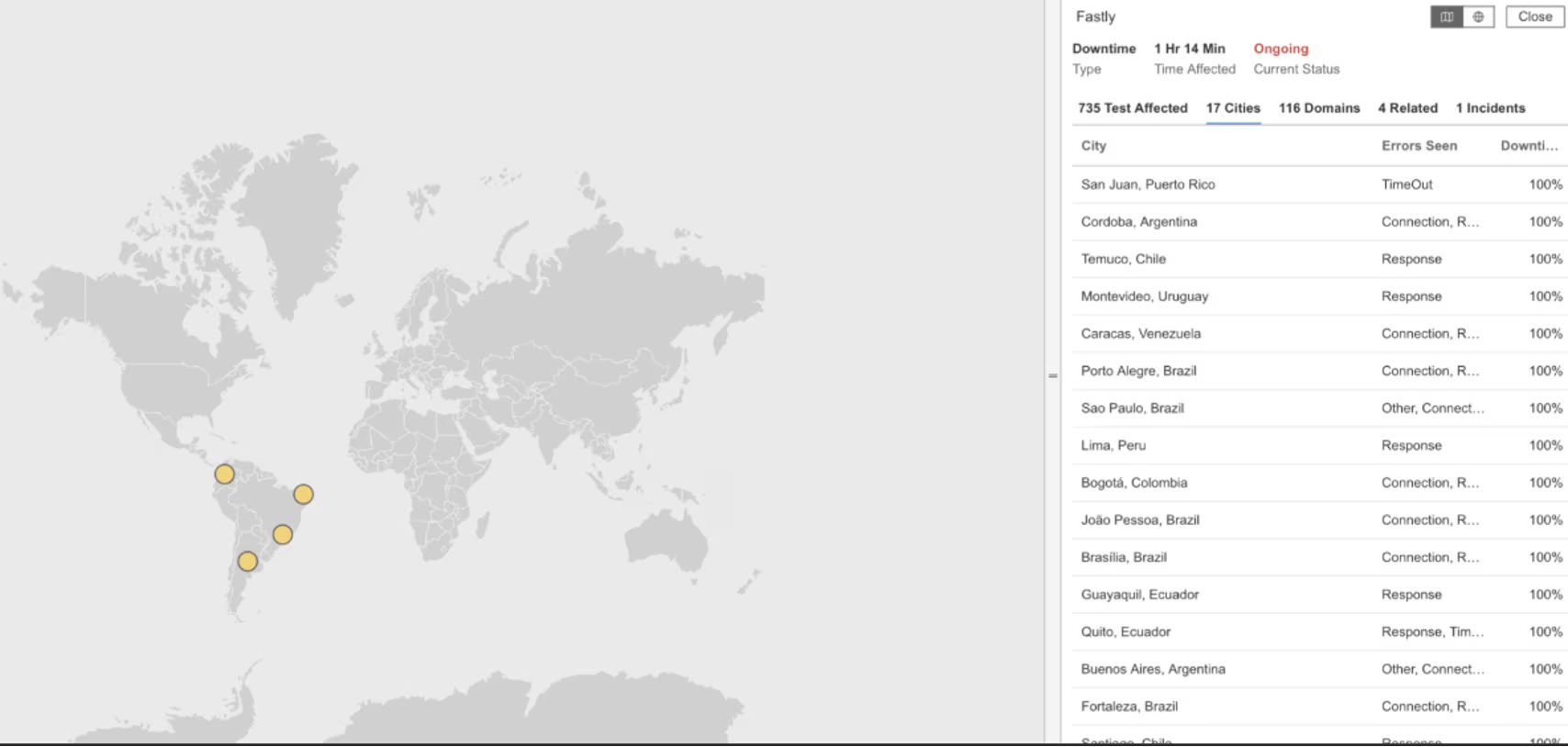

Fastly

What happened?

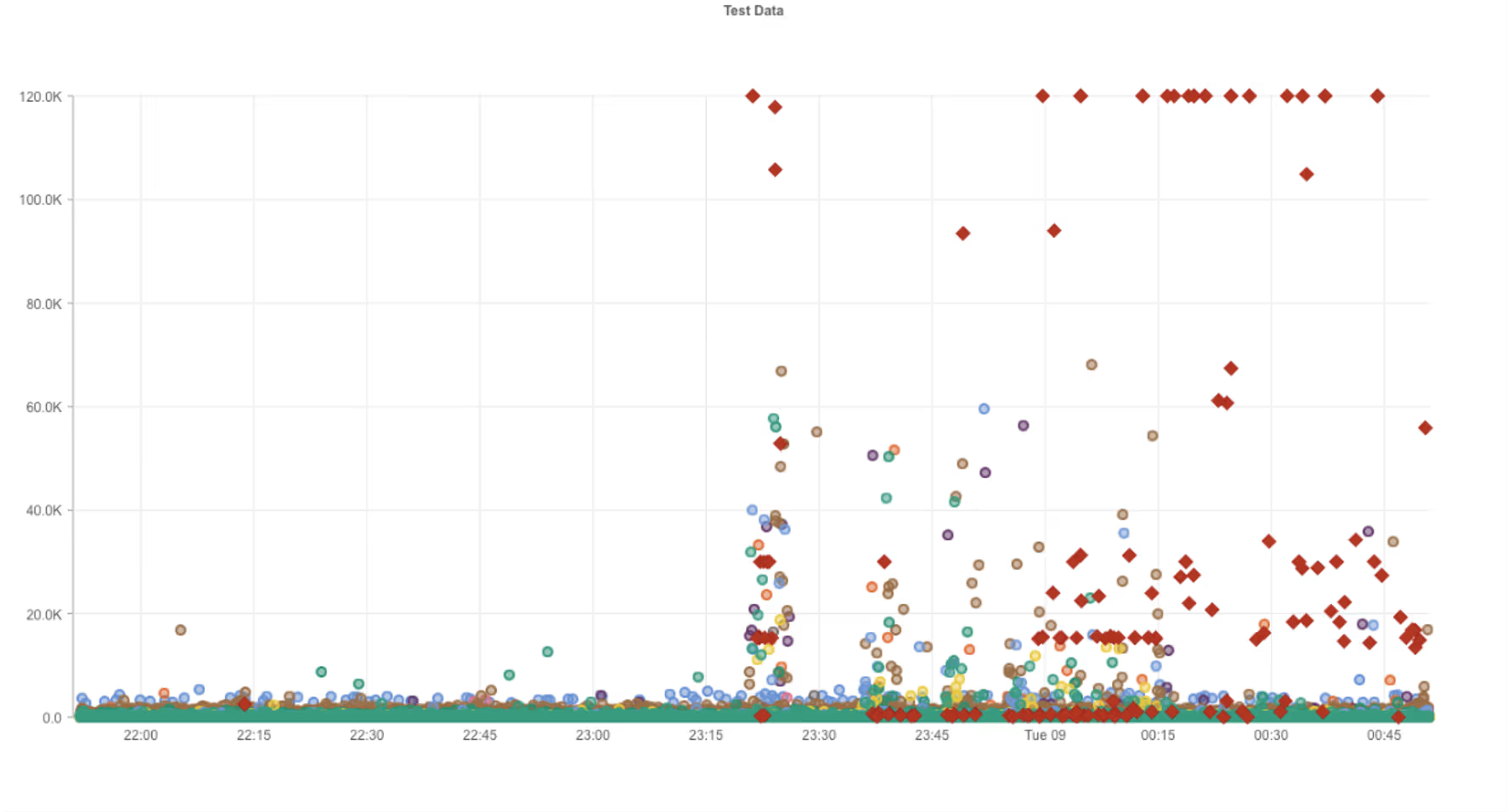

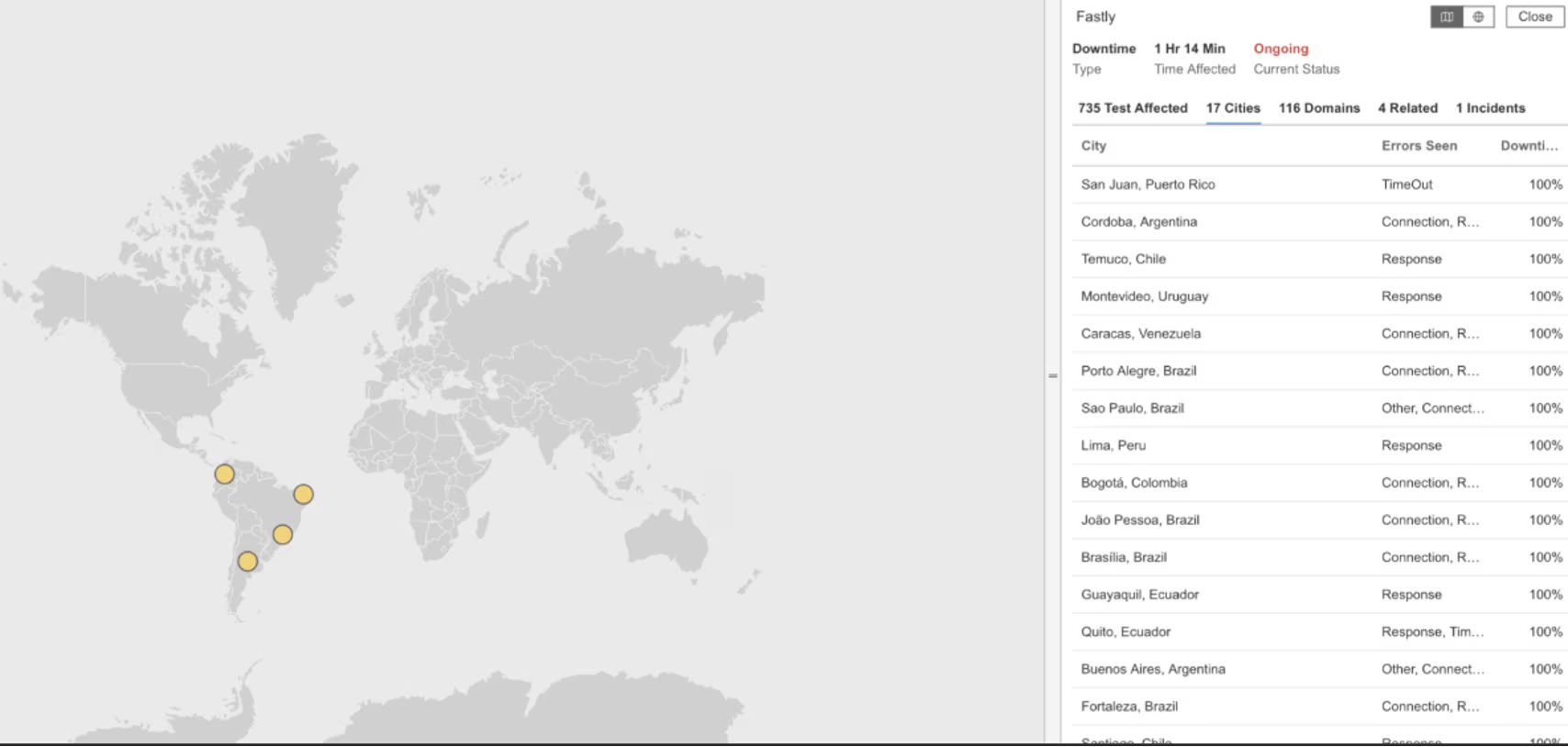

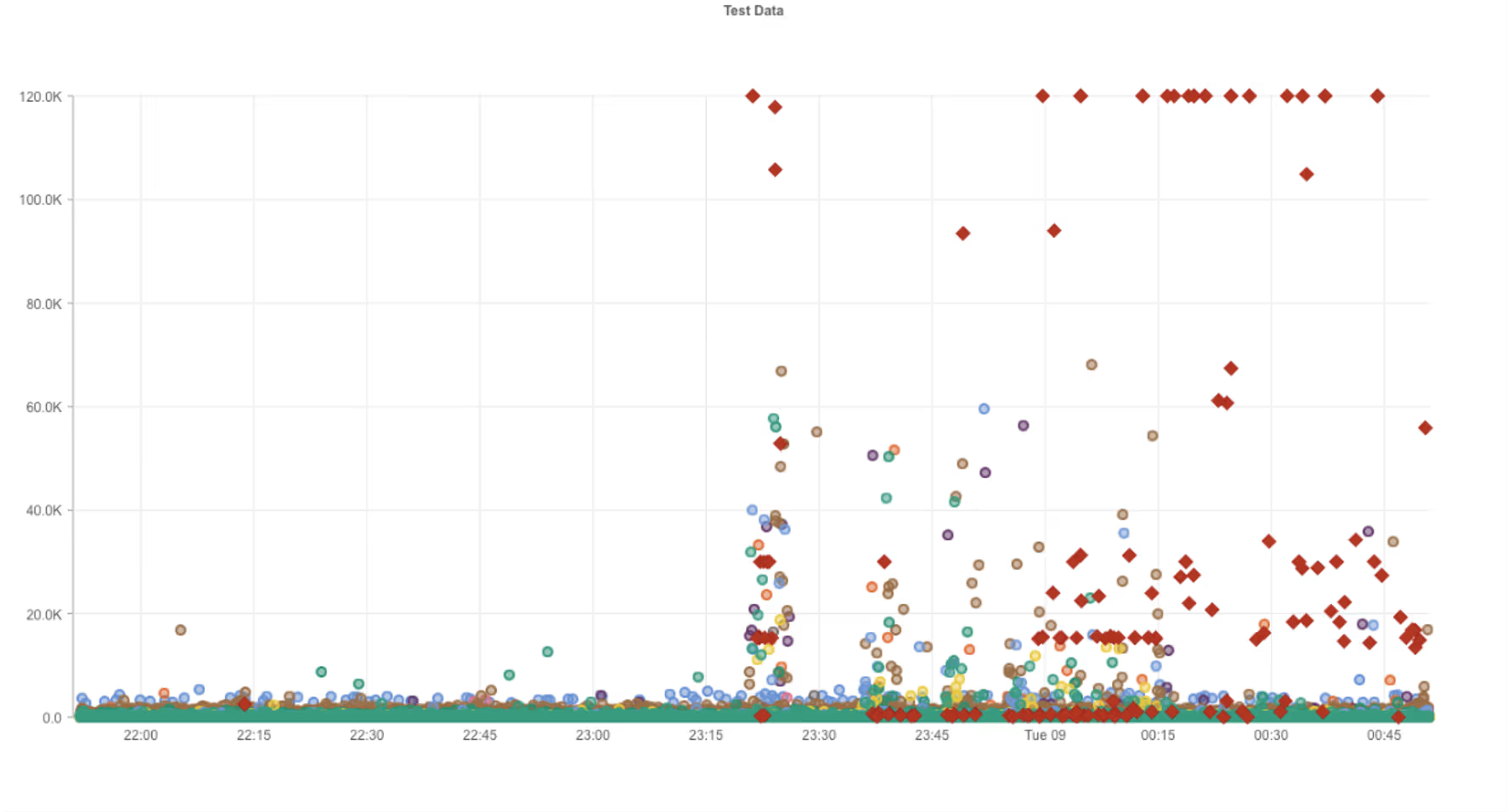

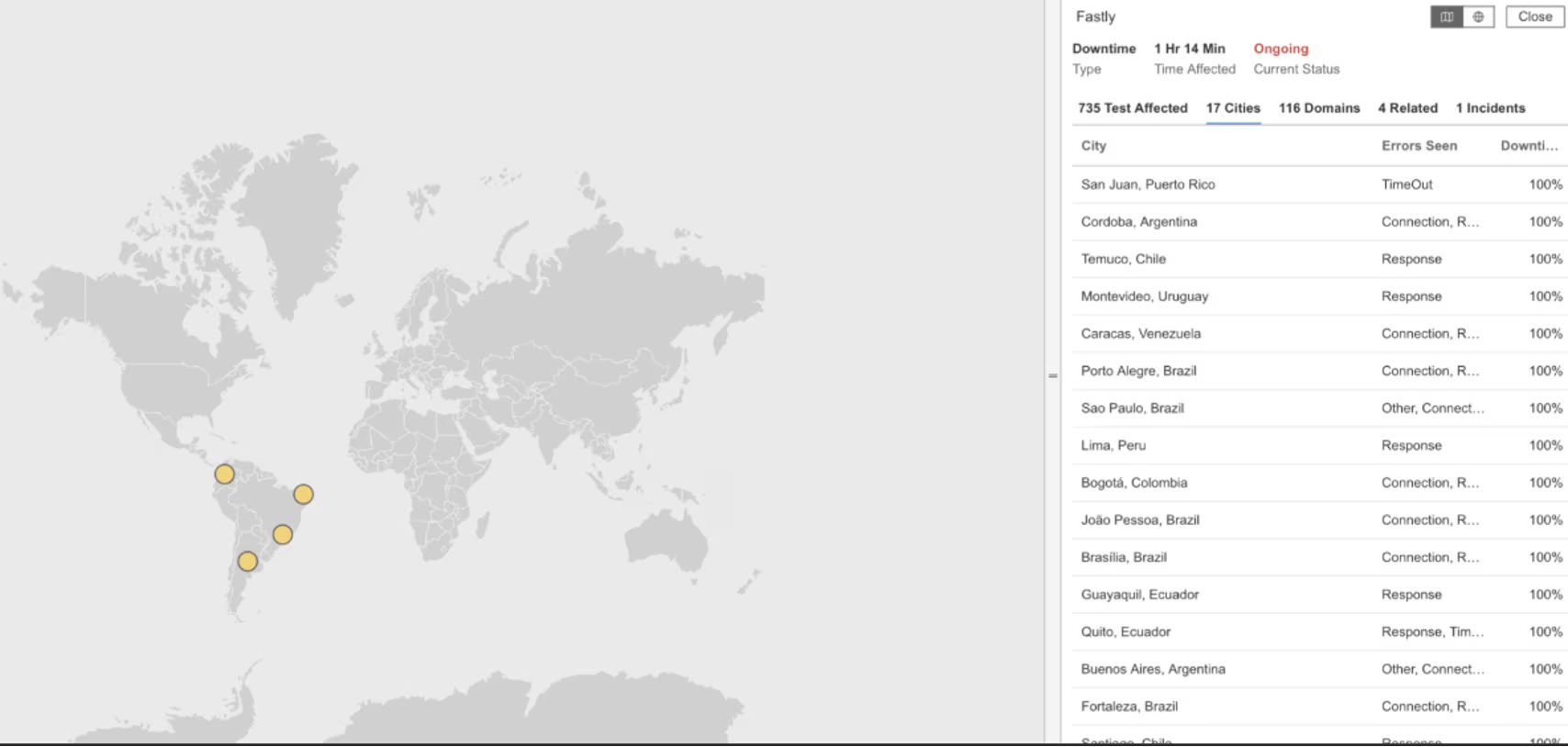

On December 8, 2025, at 23:21 EST, Internet Sonar detected an outage affecting Fastly in Latin America. Requests from multiple locations showed long connect times and returned HTTP 502 (Bad Gateway) for several domains, including fastly.cedexis-test.com, forms.gle, cdn.evgnet.com, api.paypalworld.com, www.paypalobjects.com, and help.venmo.com, indicating that Fastly-dependent services were affected.

Takeaways

The combination of slow connection establishment followed by HTTP 502 errors points to instability at the CDN edge or a regional point of presence rather than isolated application failures. When a CDN runs into problems in a particular region, the impact can quickly spread to many independent services that share the same delivery layer. This incident illustrates how regional CDN failures can widen the blast radius even when origin services continue to work perfectly elsewhere. Observing where latency increases before errors appear helps separate edge instability from origin failures and underscores the importance of regional visibility when relying on shared Internet delivery infrastructure.
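One simple way to spot a shared delivery layer behind a set of failing domains is to group them by provider and compare failure shares. In this Python sketch the domain names and the domain-to-provider mapping are entirely hypothetical. In practice such a mapping would have to come from an inventory or from DNS and header inspection.

```python
from collections import defaultdict
from typing import Dict, Iterable


def failure_share_by_provider(
    provider_of: Dict[str, str], failing: Iterable[str]
) -> Dict[str, float]:
    """For each provider, the fraction of its monitored domains that are currently failing."""
    failing_set = set(failing)
    total: Dict[str, int] = defaultdict(int)
    bad: Dict[str, int] = defaultdict(int)
    for domain, provider in provider_of.items():
        total[provider] += 1
        if domain in failing_set:
            bad[provider] += 1
    return {provider: bad[provider] / total[provider] for provider in total}


# Hypothetical inventory: three domains behind "cdn-a", one behind "cdn-b".
inventory = {
    "shop.example": "cdn-a",
    "forms.example": "cdn-a",
    "api.example": "cdn-a",
    "blog.example": "cdn-b",
}
print(failure_share_by_provider(inventory, ["shop.example", "forms.example", "api.example"]))
```

A provider whose share is near 1.0 while others stay near 0.0 is a strong hint that the delivery layer, not the individual applications, is the common factor.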

Mashery

What happened?

On December 4, 2025, at 23:10:14 EST, Internet Sonar detected an outage affecting Mashery in multiple regions. Requests to secure.mashery.com and support.mashery.com returned HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests), indicating a service disruption that affected Mashery's availability.

Takeaways

A near-simultaneous rise in HTTP 503 errors across regions points to an overloaded or failed shared control plane rather than independent regional overloads. On globally distributed API platforms, this kind of failure often indicates that centralized components such as authentication, configuration distribution, or traffic management have become unavailable. The sharp rise in response times before the failures points to queue buildup and backpressure, where requests are accepted but stall before being rejected. This pattern underscores the importance of monitoring latency distributions alongside error rates to catch early signs of systemic saturation before complete global unavailability sets in.

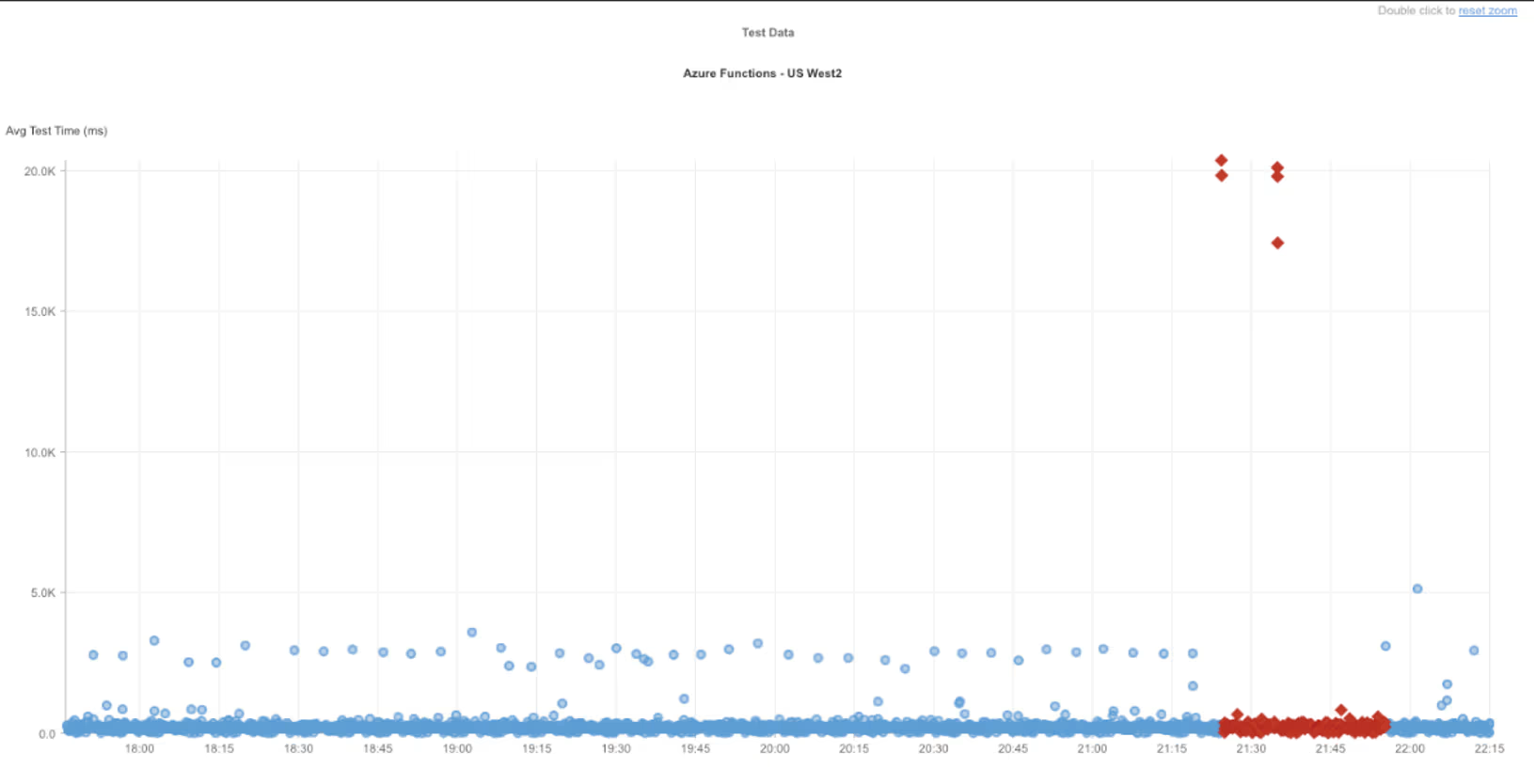

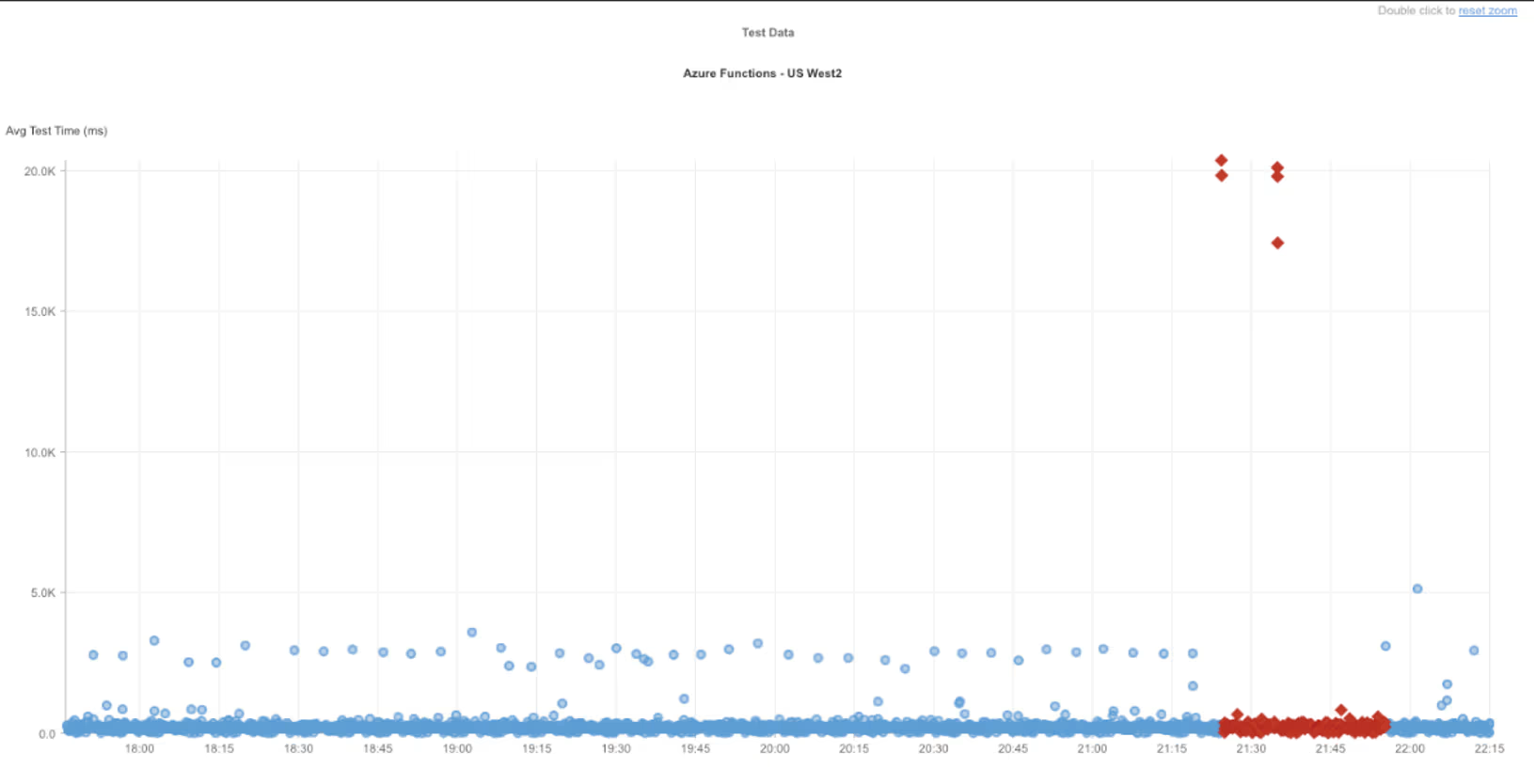

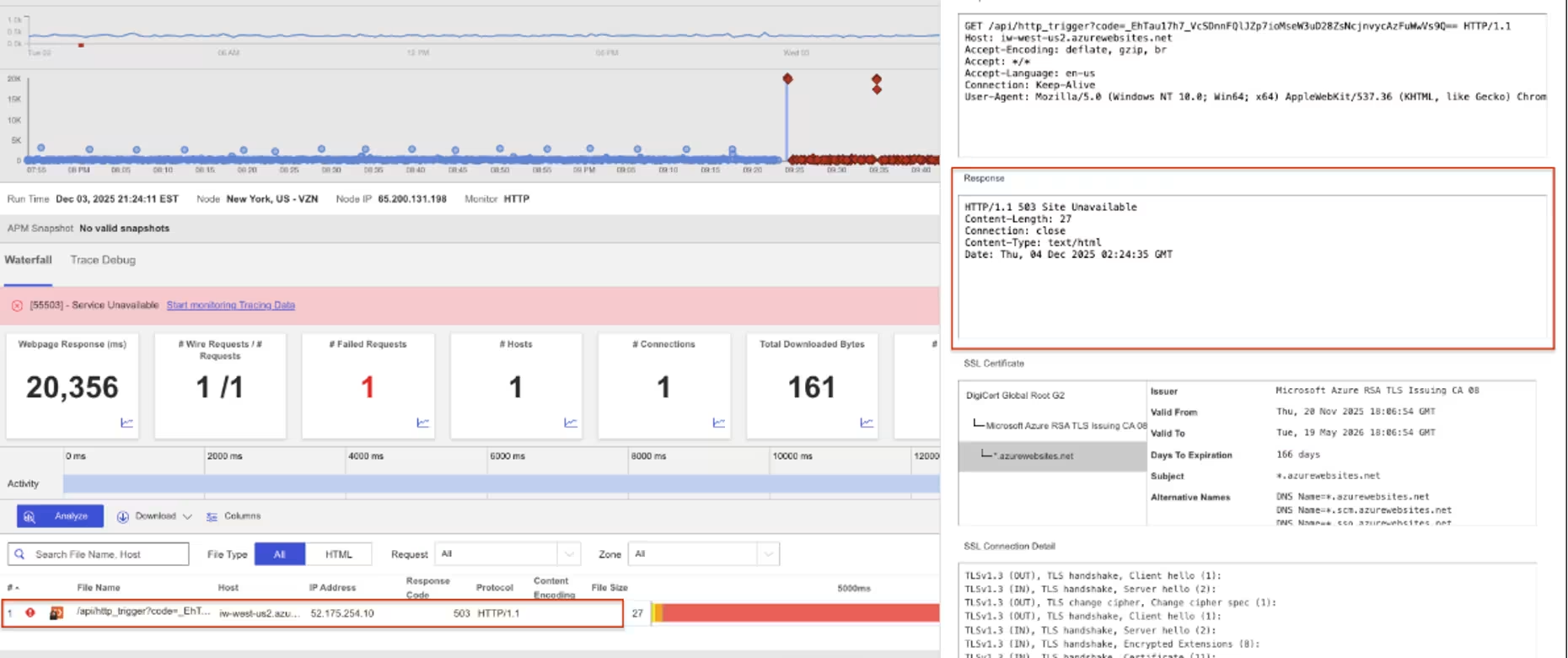

Azure West US 2

What happened?

On December 3, 2025, from 21:24:11 to 21:54:58 EST, Internet Sonar detected an outage affecting Azure West US 2 at multiple locations in North America, with requests to iw-west-us2.azurewebsites.net returning HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests).

Takeaways

A sustained window of HTTP 503 errors followed by a clean recovery points to a stabilization phase in which services gradually return to a healthy state after an internal fault, rather than failing and recovering abruptly. These patterns are often associated with dependency warm-up, traffic rebalancing, or the gradual restoration of service readiness within a region. Even when recovery is already underway, users may continue to see errors until all components are healthy again. Measuring time to stabilization, not just time to first success, is critical for understanding the real user impact of cloud region incidents and for verifying that recovery actions have fully restored the service.
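The difference between time to first success and time to stabilization can be shown with a short Python sketch over a probe history. Defining "stable" as three consecutive successful probes is an assumption for the example, as are the timestamps.

```python
from typing import List, Optional, Tuple

# One probe: (seconds since the outage started, whether the probe succeeded).
Probe = Tuple[float, bool]


def time_to_first_success(probes: List[Probe]) -> Optional[float]:
    """Timestamp of the first successful probe, or None if none succeeded."""
    for timestamp, ok in probes:
        if ok:
            return timestamp
    return None


def time_to_stabilization(probes: List[Probe], required_streak: int = 3) -> Optional[float]:
    """Timestamp at which the first run of `required_streak` consecutive successes began."""
    streak = 0
    streak_start: Optional[float] = None
    for timestamp, ok in probes:
        if ok:
            if streak == 0:
                streak_start = timestamp
            streak += 1
            if streak >= required_streak:
                return streak_start
        else:
            streak = 0
    return None


# Hypothetical recovery: one early success, a relapse, then a stable run.
history = [(0, False), (60, True), (120, False), (180, True), (240, True), (300, True)]
print(time_to_first_success(history), time_to_stabilization(history))
```

In this invented history the first success arrives two minutes before the service is actually stable, which is the gap users still experience as errors.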

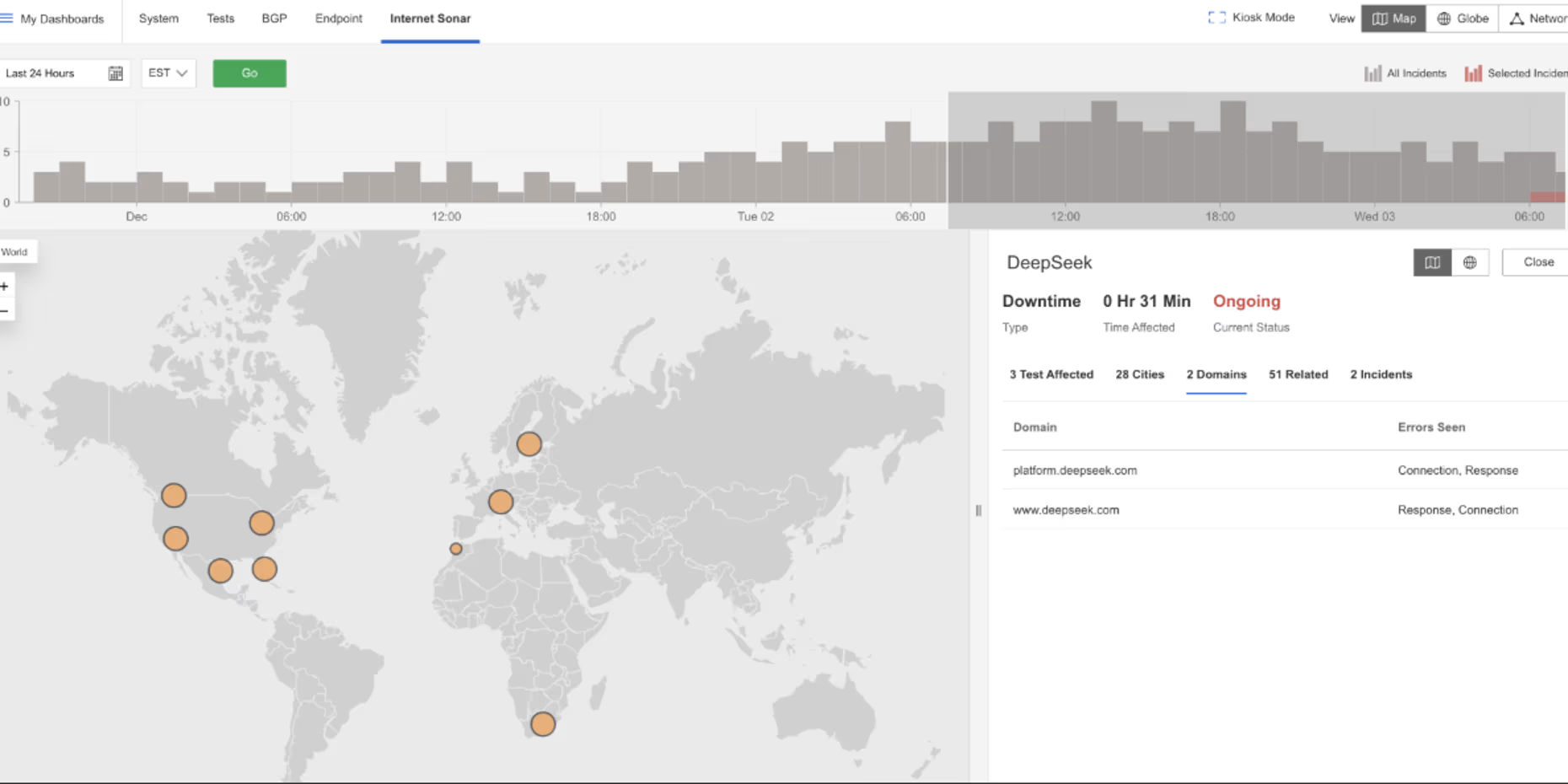

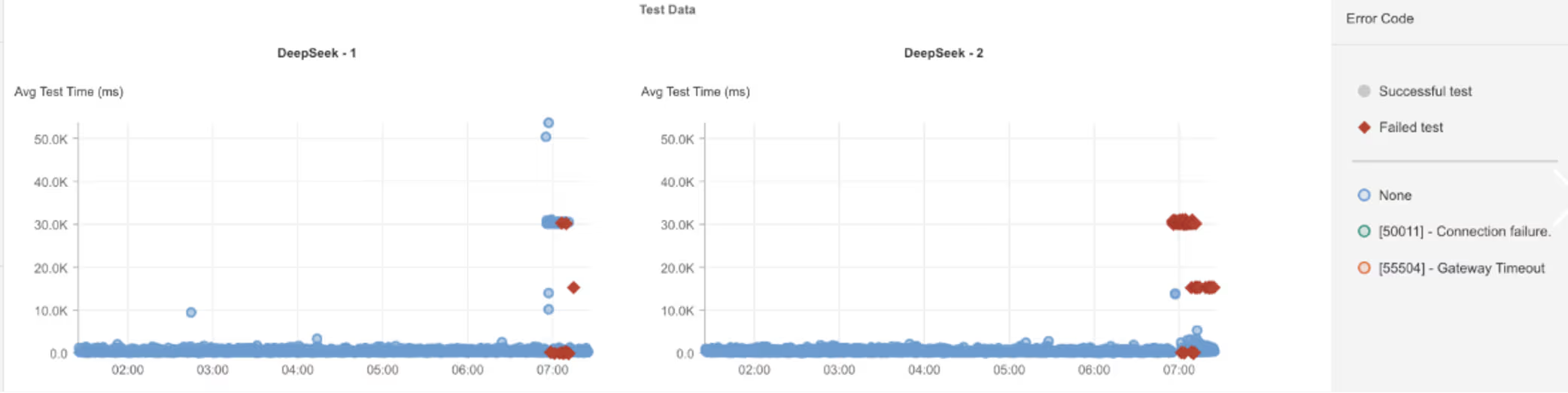

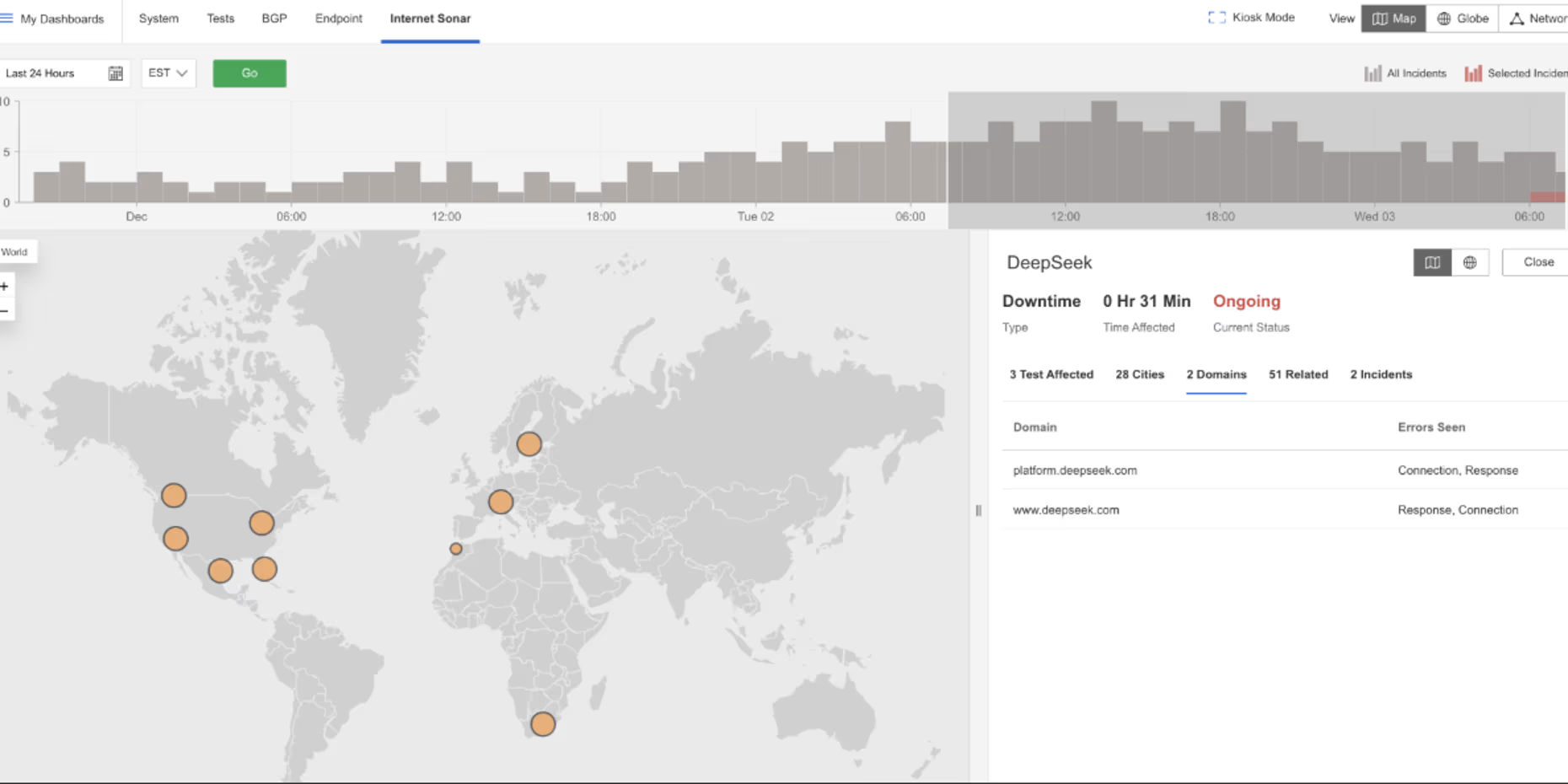

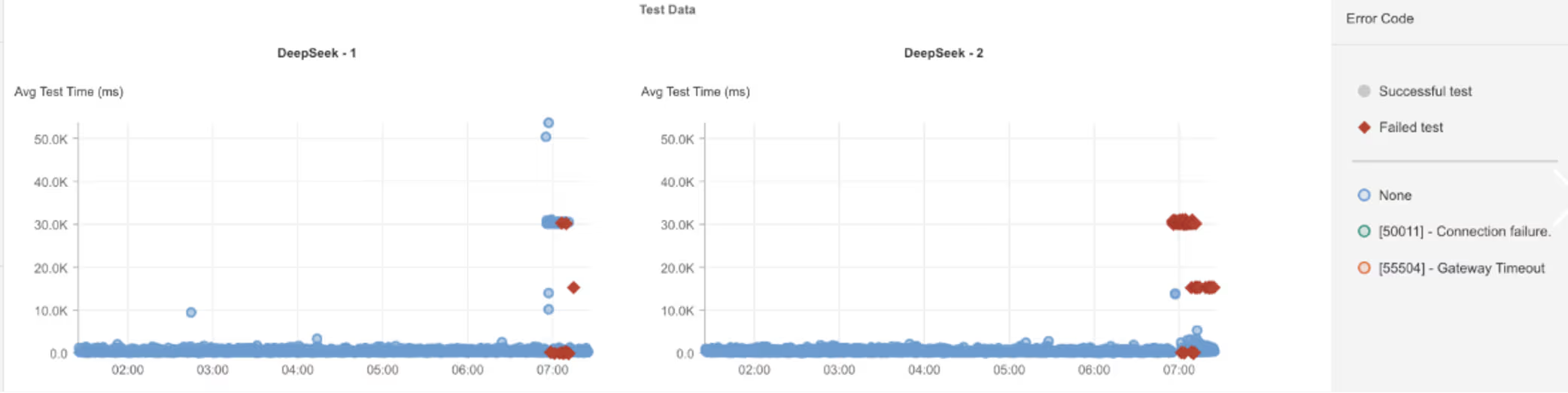

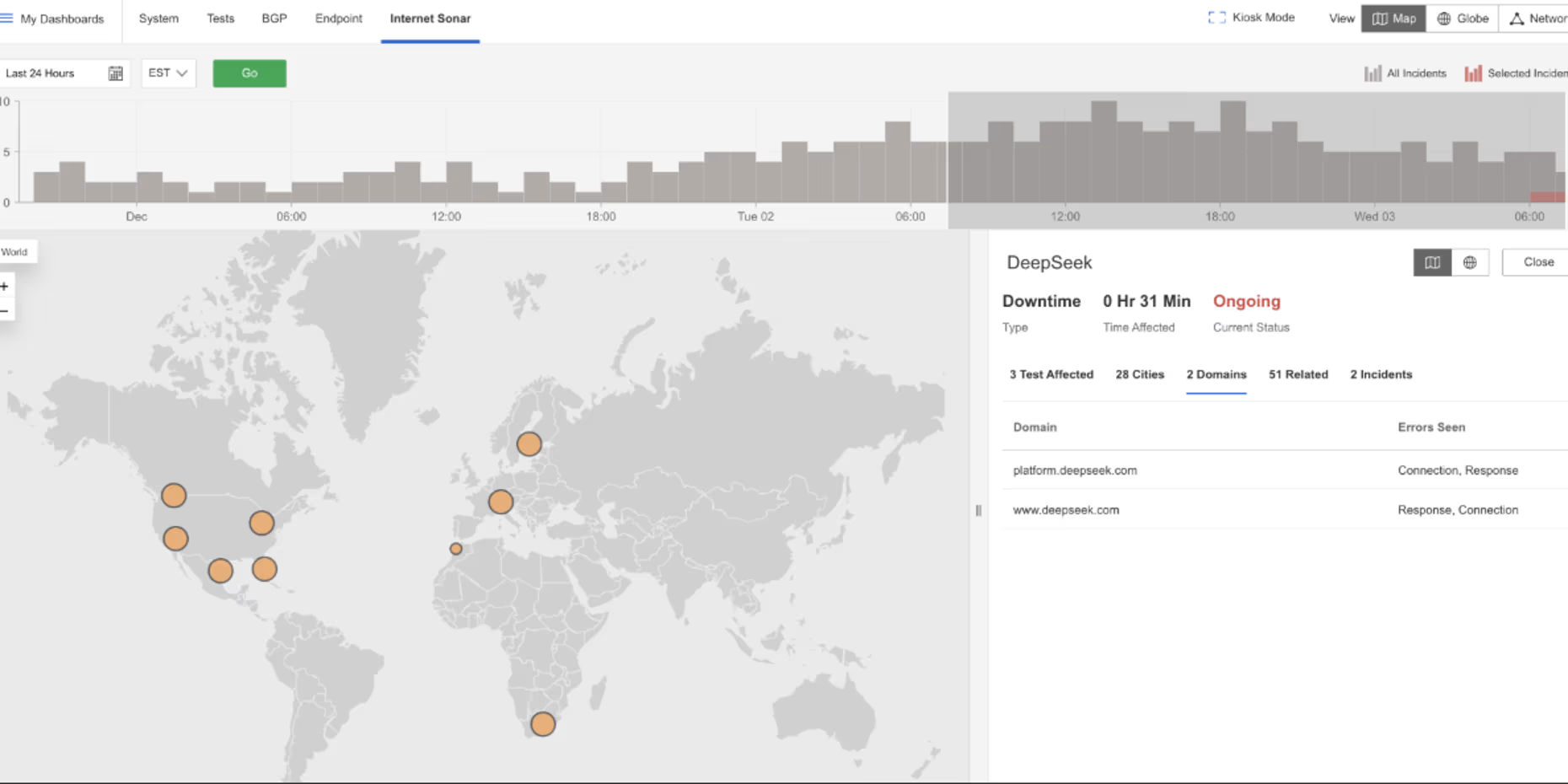

DeepSeek

What happened?

On December 3, 2025, at 06:55 EST, Internet Sonar detected an outage affecting DeepSeek services in multiple countries, including the US, Canada, India, and Germany. Requests to https://platform.deepseek.com/ and https://www.deepseek.com/ showed long wait times and returned HTTP 504 (Gateway Timeout: the server did not receive a timely response from an upstream server).

Takeaways

HTTP 504 errors combined with rising wait times usually point to saturated or unresponsive upstream dependencies rather than failures at the service edge. This pattern suggests that DeepSeek's frontend remained reachable while the components behind it, such as model inference backends, data services, or internal APIs, could not respond within the expected time limits. On AI-driven platforms, where request processing can be compute-intensive, these timeouts highlight the risk of queueing and cascading latency under load. Monitoring the distribution of request durations together with timeout errors helps distinguish capacity exhaustion from hard failures and provides early signals before dependency delays turn into a widespread loss of availability.

November

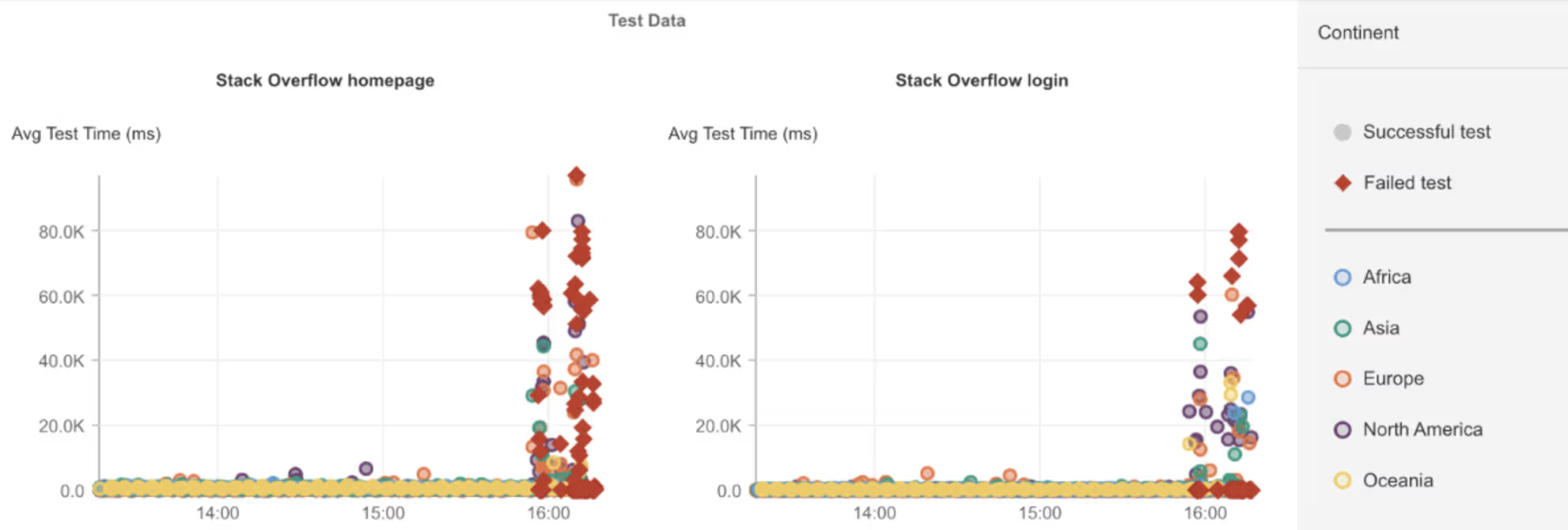

Stack Overflow

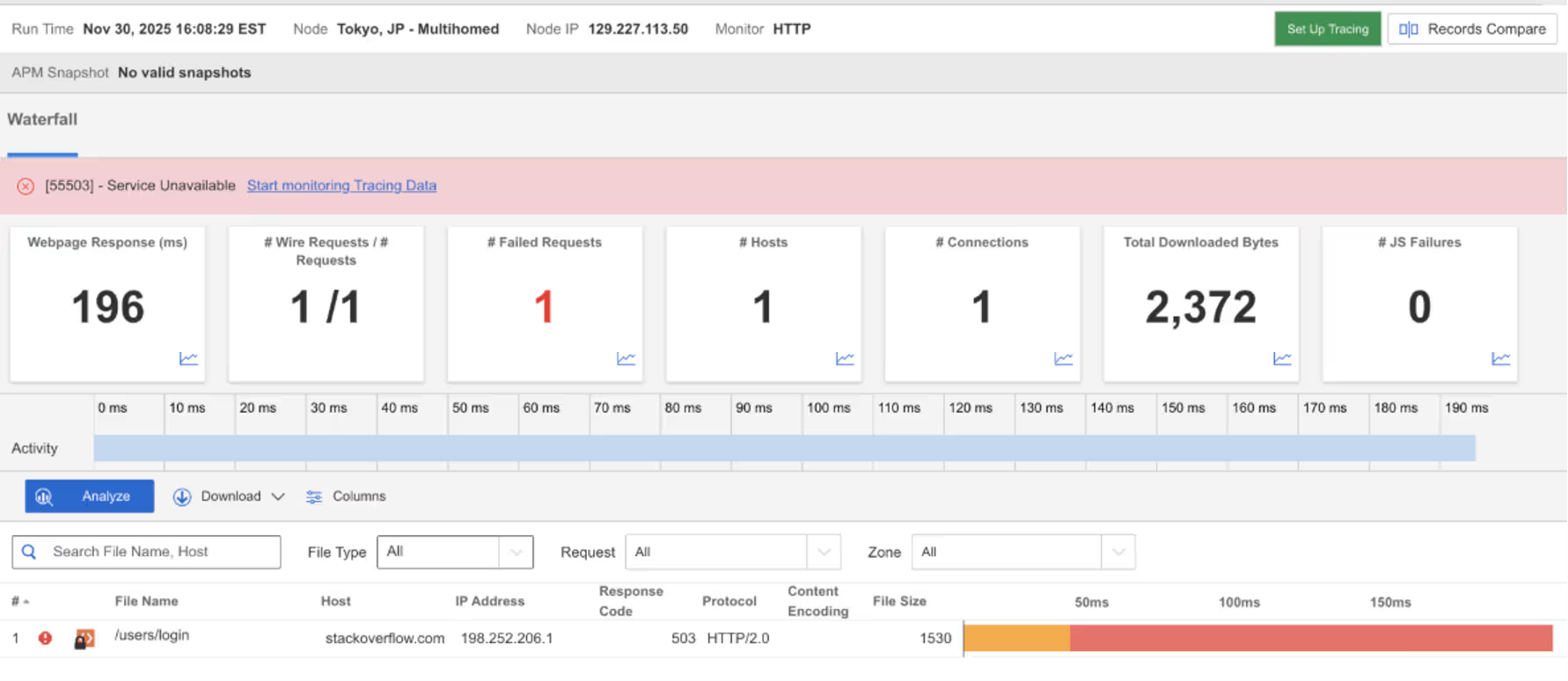

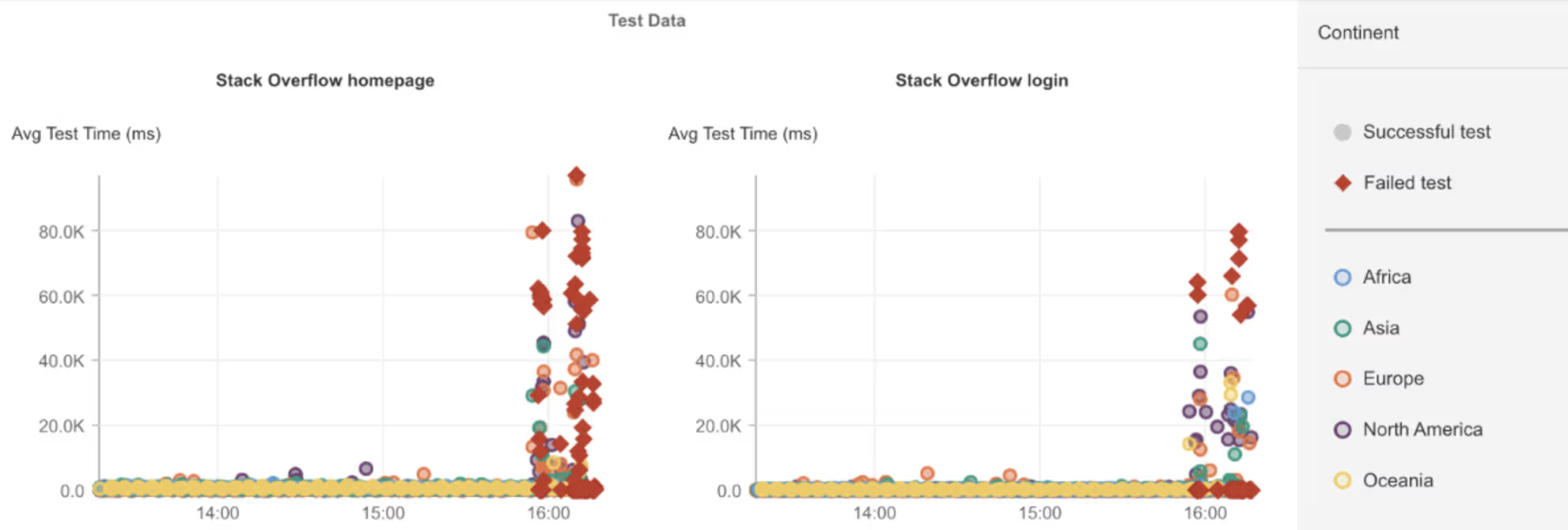

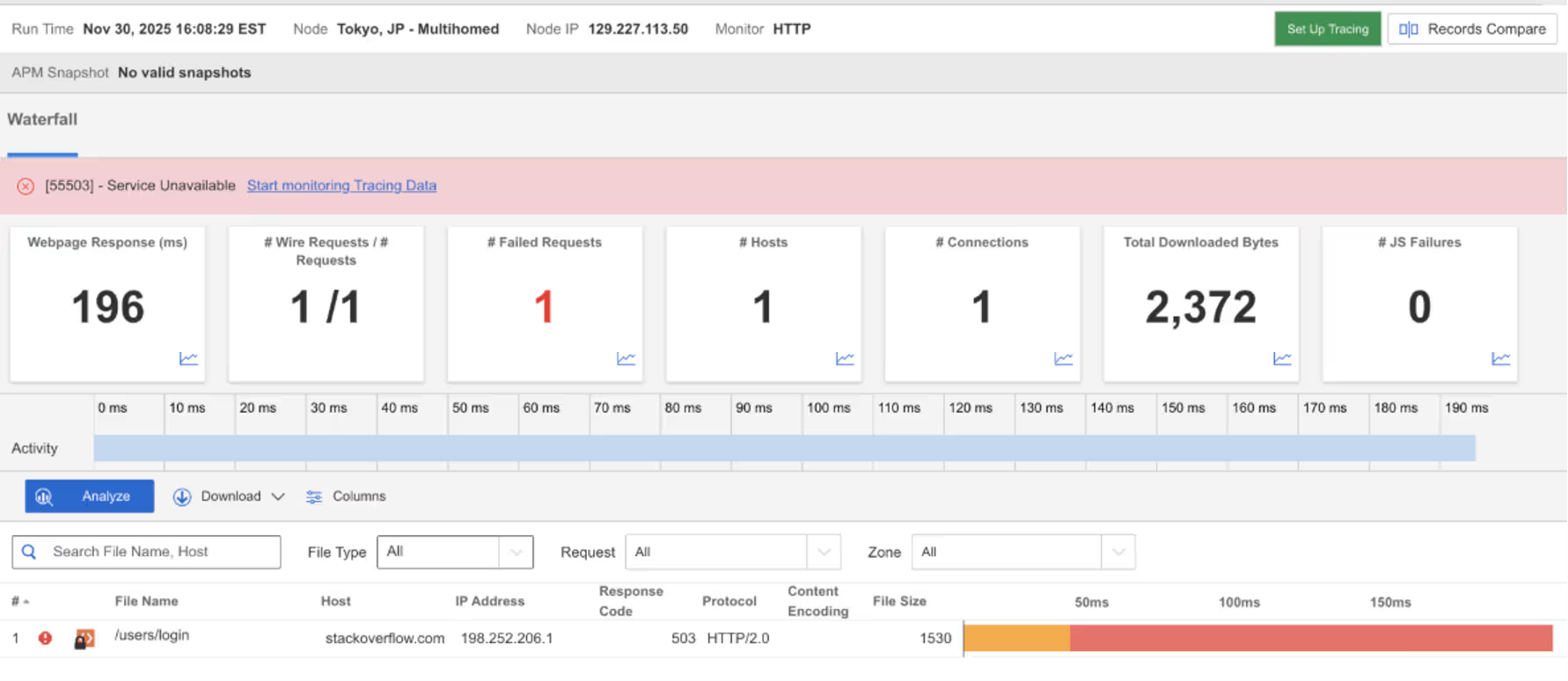

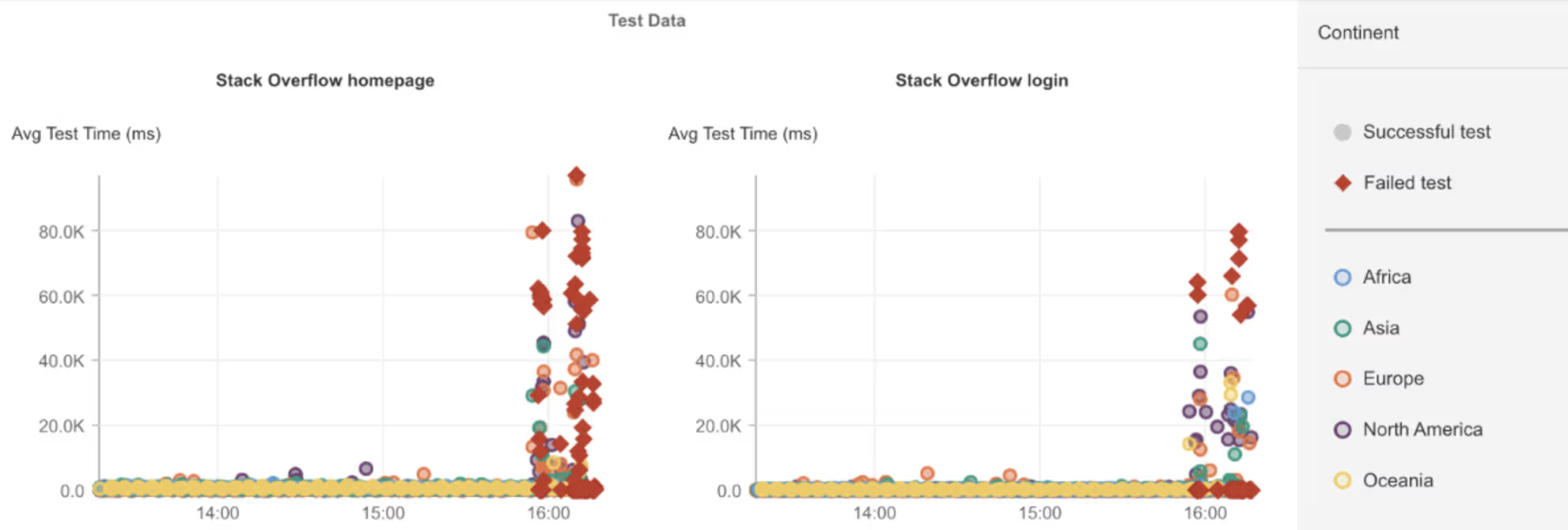

What happened?

On November 30, 2025, at 15:55 EST, Internet Sonar detected an outage affecting Stack Overflow in multiple countries. Requests to https://stackoverflow.com and https://stackoverflow.com/users/login returned HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests).

Takeaways

Simultaneous failures on the home page and the login path point to a problem in a shared application dependency rather than one limited to authentication or user sessions. When core services such as request routing, configuration, or internal APIs become unavailable, they can block read-only and authenticated traffic at the same time. This incident shows how foundational application components can become single points of failure even in otherwise modular architectures. Observing which user paths fail together helps narrow down the cause quickly and avoids wrongly attributing problems solely to authentication systems or user-specific services when the underlying fault is broader.

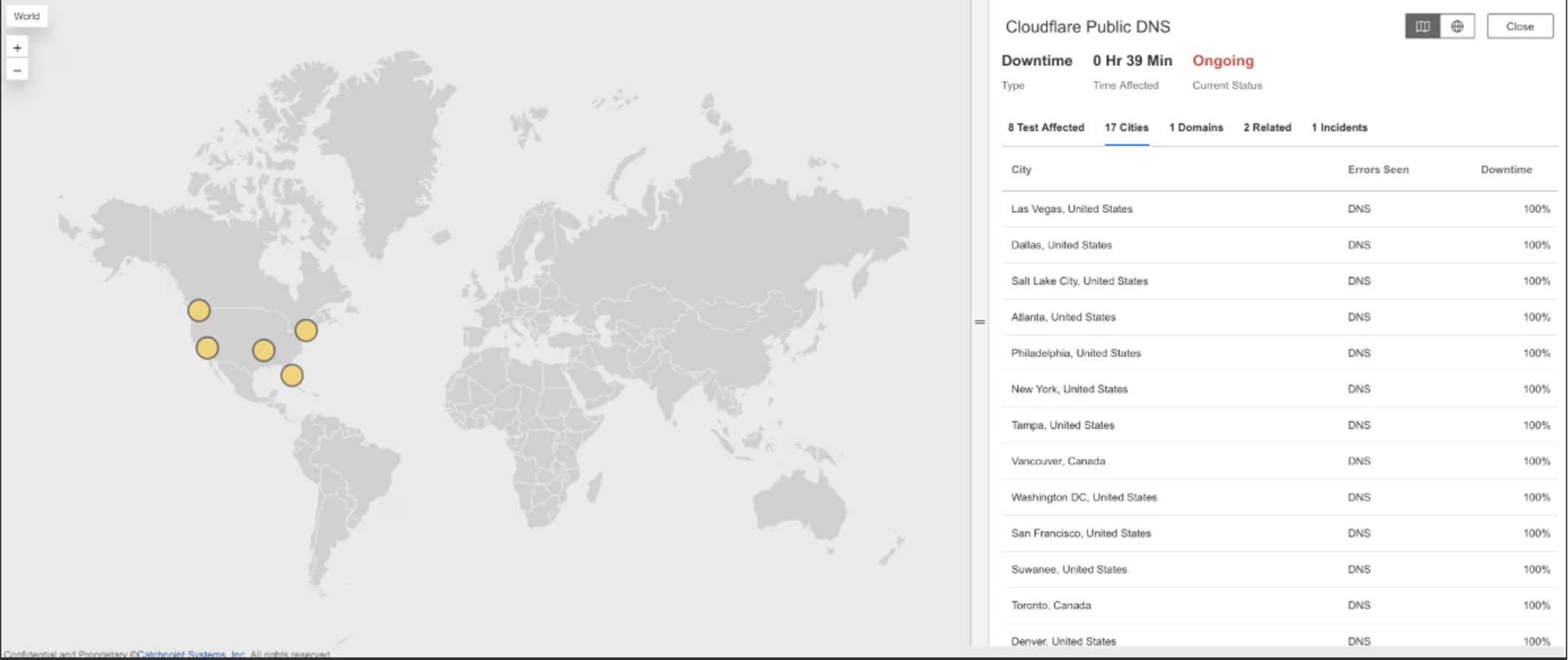

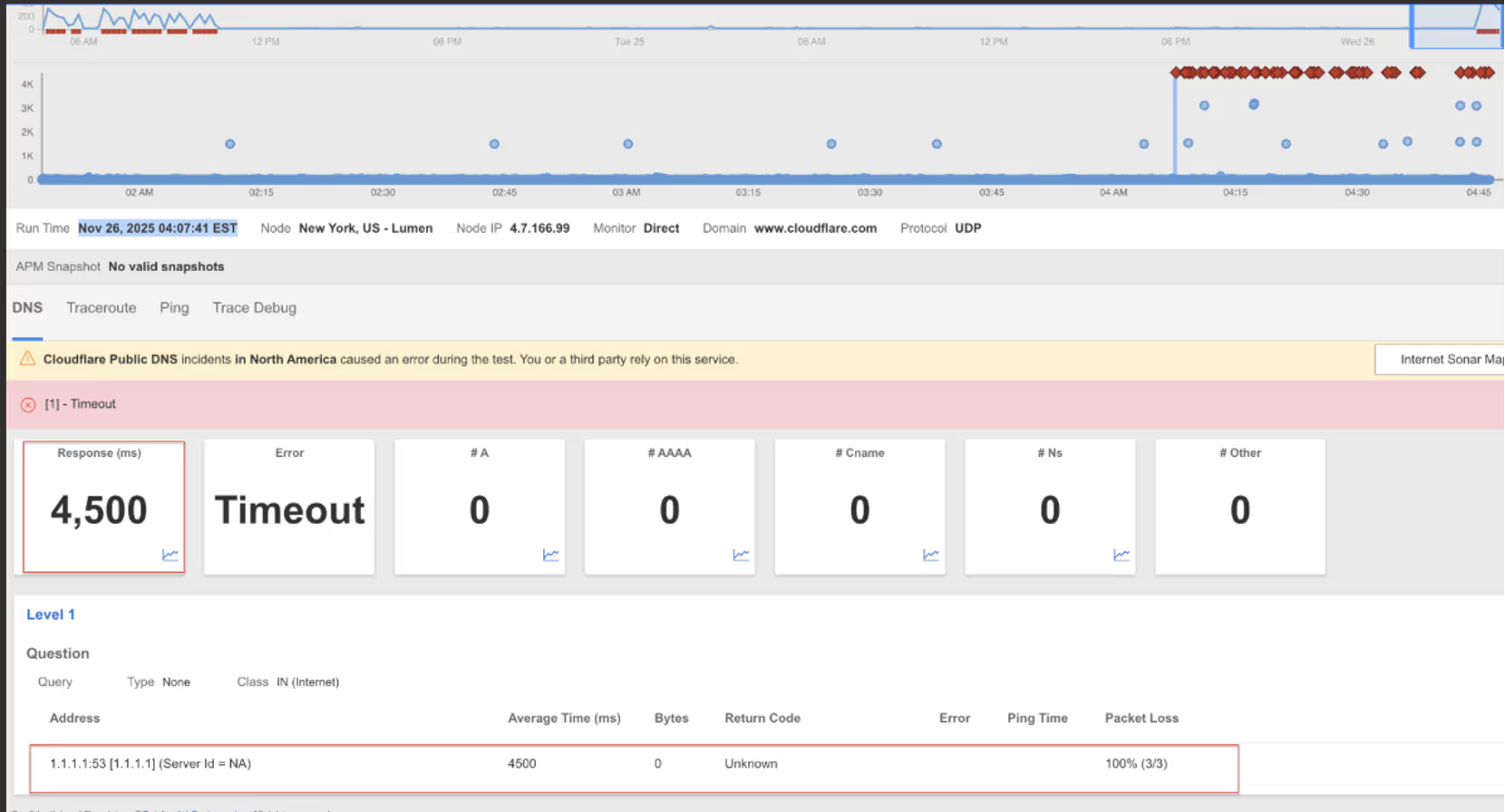

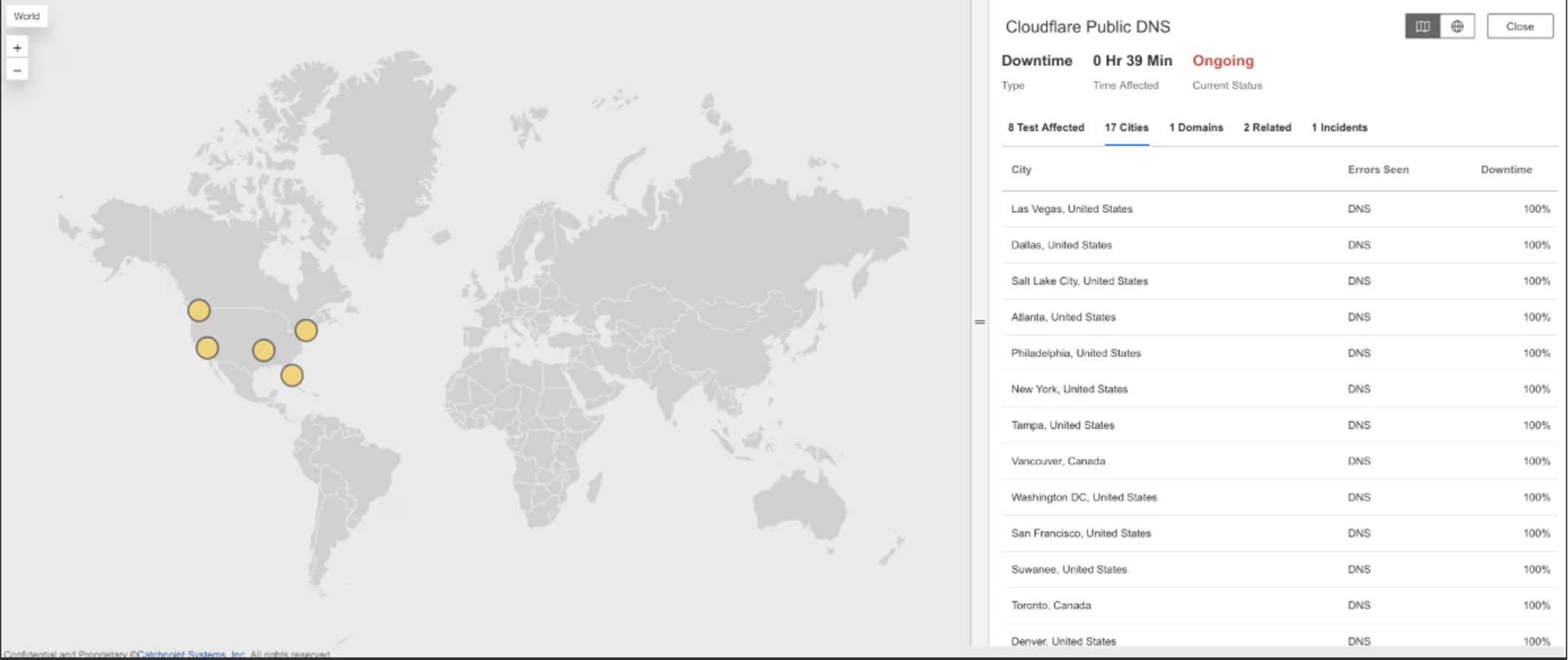

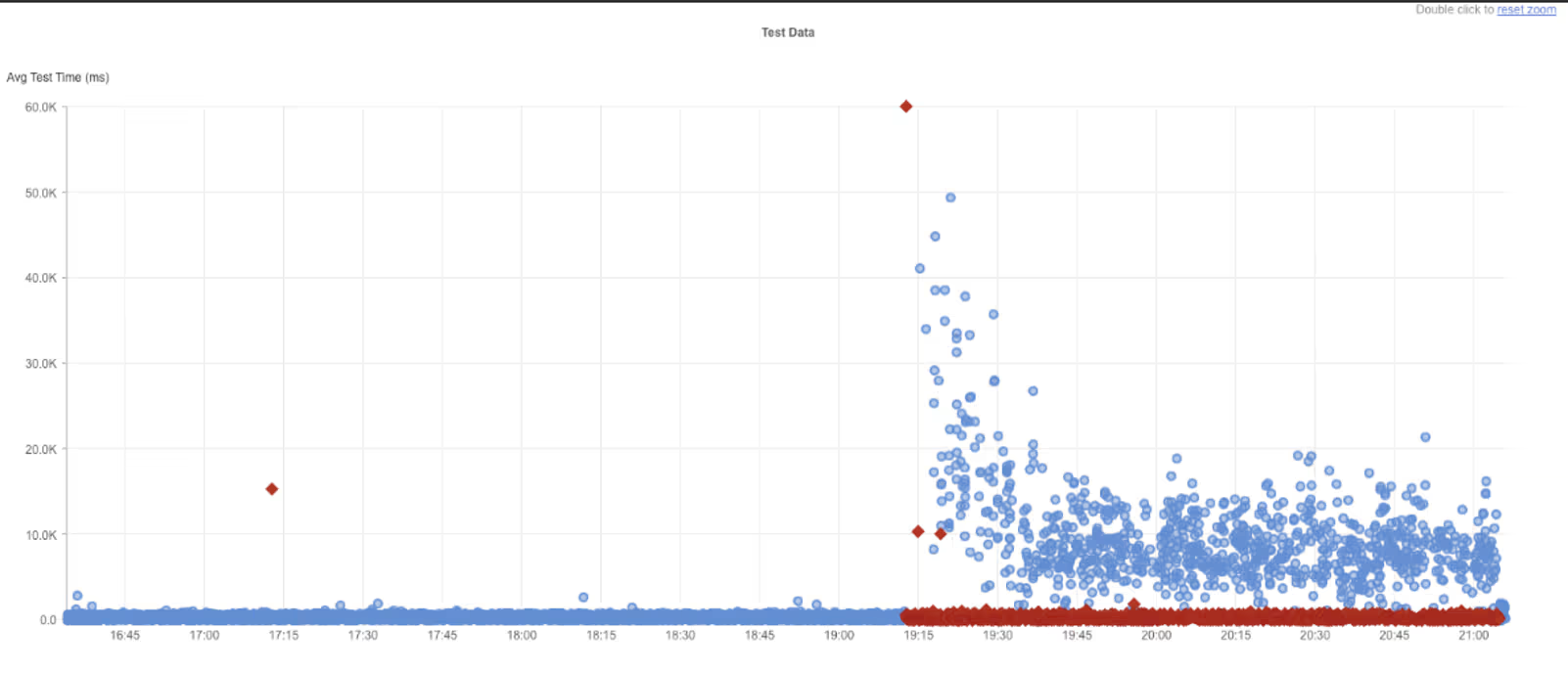

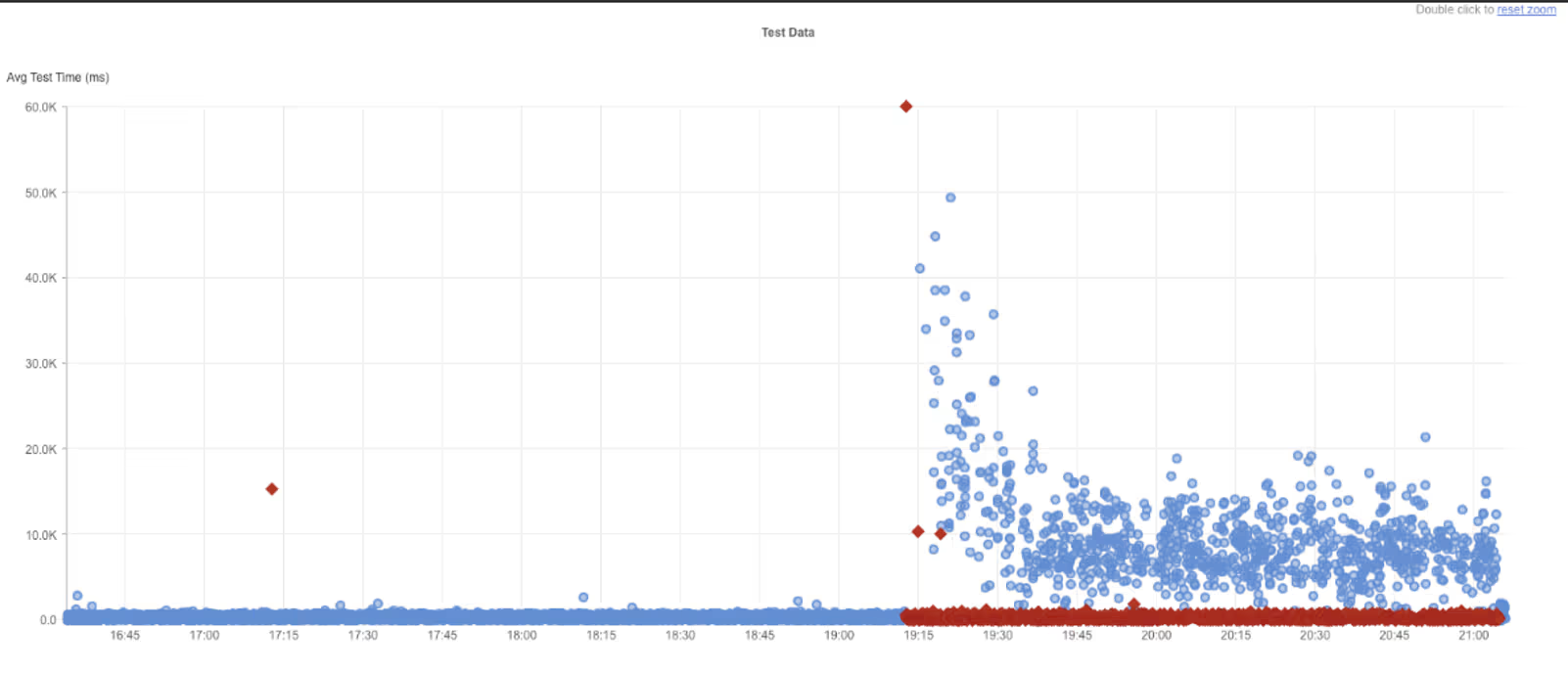

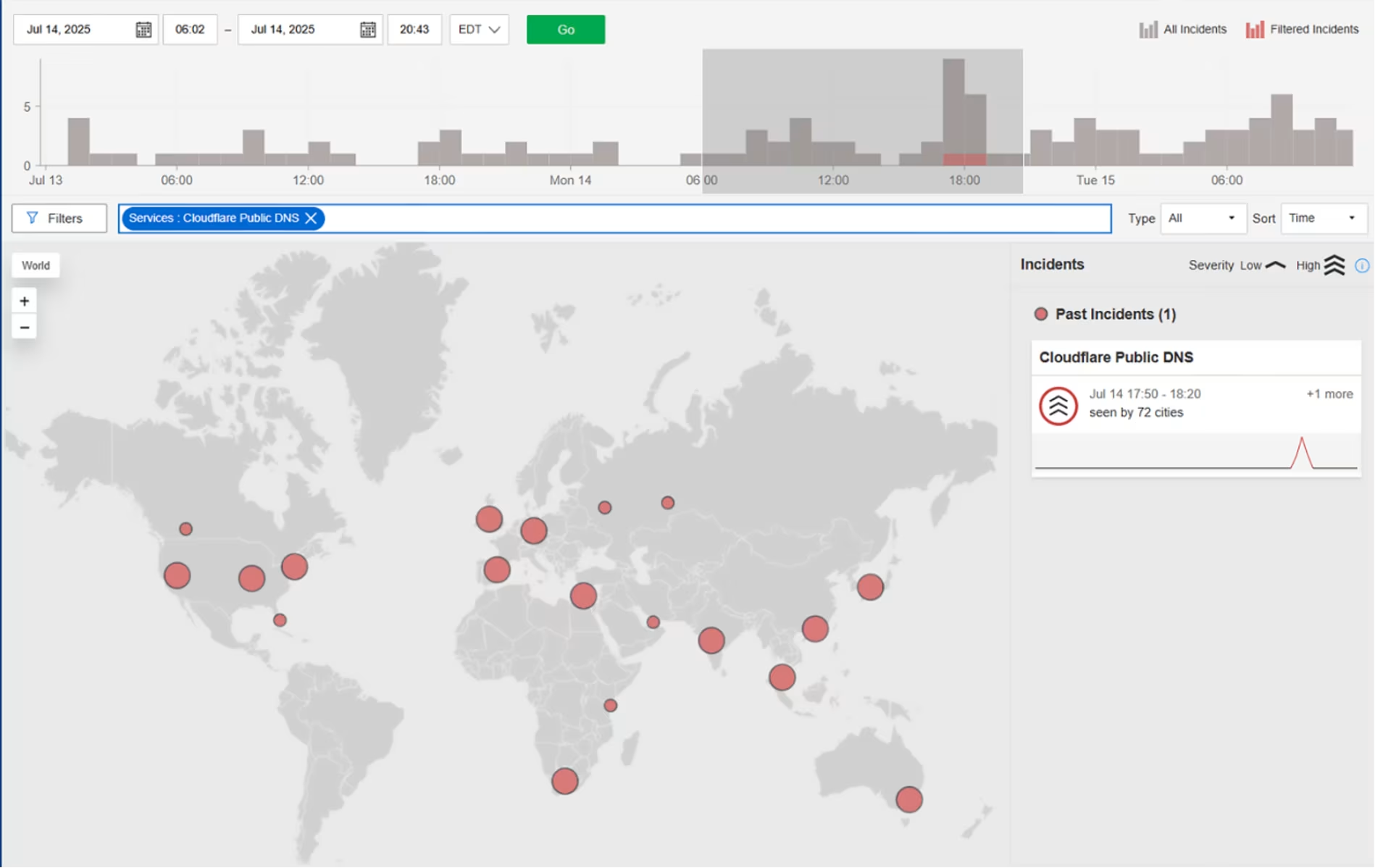

Cloudflare Public DNS 1.1.1.1

What happened?

On November 26, 2025, at 00:28:20 EST, Internet Sonar detected an outage affecting Cloudflare Public DNS 1.1.1.1 in multiple regions, including the US, Canada, Chile, Germany, Italy, the Netherlands, Peru, and Spain. Queries to the Cloudflare Public DNS 1.1.1.1 resolver returned timeout errors. End users who rely on Cloudflare's 1.1.1.1 resolver may have experienced slow or failed website and application lookups because of the DNS query timeouts.

Takeaways

DNS timeouts are particularly disruptive because they prevent connections from being established at all, even when networks and applications are otherwise working perfectly. Unlike application-level errors, DNS failures often show up as widespread, hard-to-attribute connectivity problems that affect many unrelated services at once. This incident illustrates how recursive resolvers act as a critical shared dependency, where localized resolver instability can lead to widespread, user-visible failures. Monitoring DNS query success rates and response times independently of application health is essential for quickly separating name resolution failures from routing, TLS, or server-side problems when large parts of the Internet appear unreachable at the same time.
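A DNS check that is independent of application health can be approximated with a timed lookup. This Python sketch uses `socket.getaddrinfo`, which queries whatever resolver the operating system is configured with. It does not target 1.1.1.1 specifically, and probing a particular resolver would need a dedicated DNS library. The injectable `resolve` and `clock` parameters are an illustrative design choice to keep the function testable.

```python
import socket
import time
from typing import Callable, Tuple


def timed_lookup(
    host: str,
    resolve: Callable[..., object] = socket.getaddrinfo,
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[bool, float]:
    """Resolve `host` and return (succeeded, elapsed seconds).

    socket.gaierror and timeouts are subclasses of OSError, so both count as failures.
    """
    start = clock()
    try:
        resolve(host, 443)
        ok = True
    except OSError:
        ok = False
    return ok, clock() - start


def failing_resolver(host: str, port: int) -> None:
    """Stand-in resolver that simulates a failed lookup (for demonstration only)."""
    raise socket.gaierror("simulated resolution failure")


ok, elapsed = timed_lookup("www.example.com", resolve=failing_resolver)
print(ok)
```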

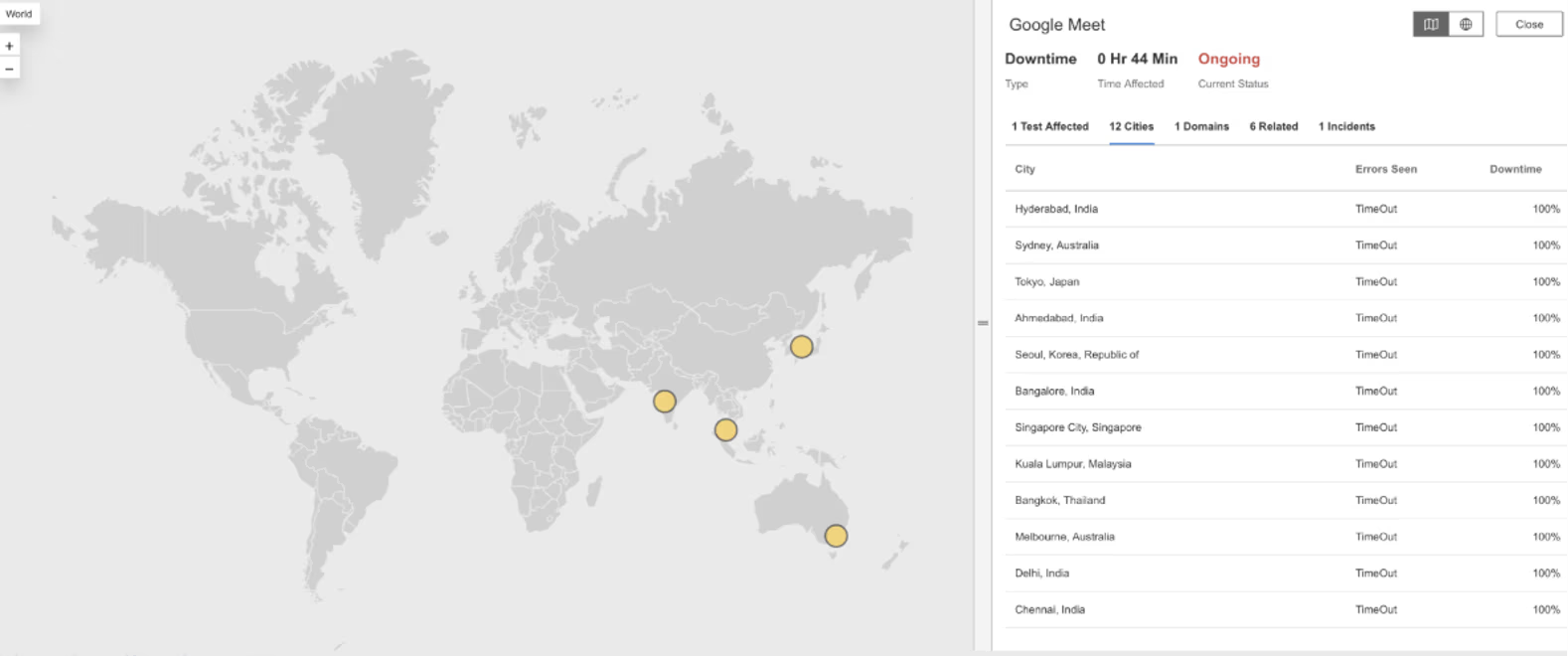

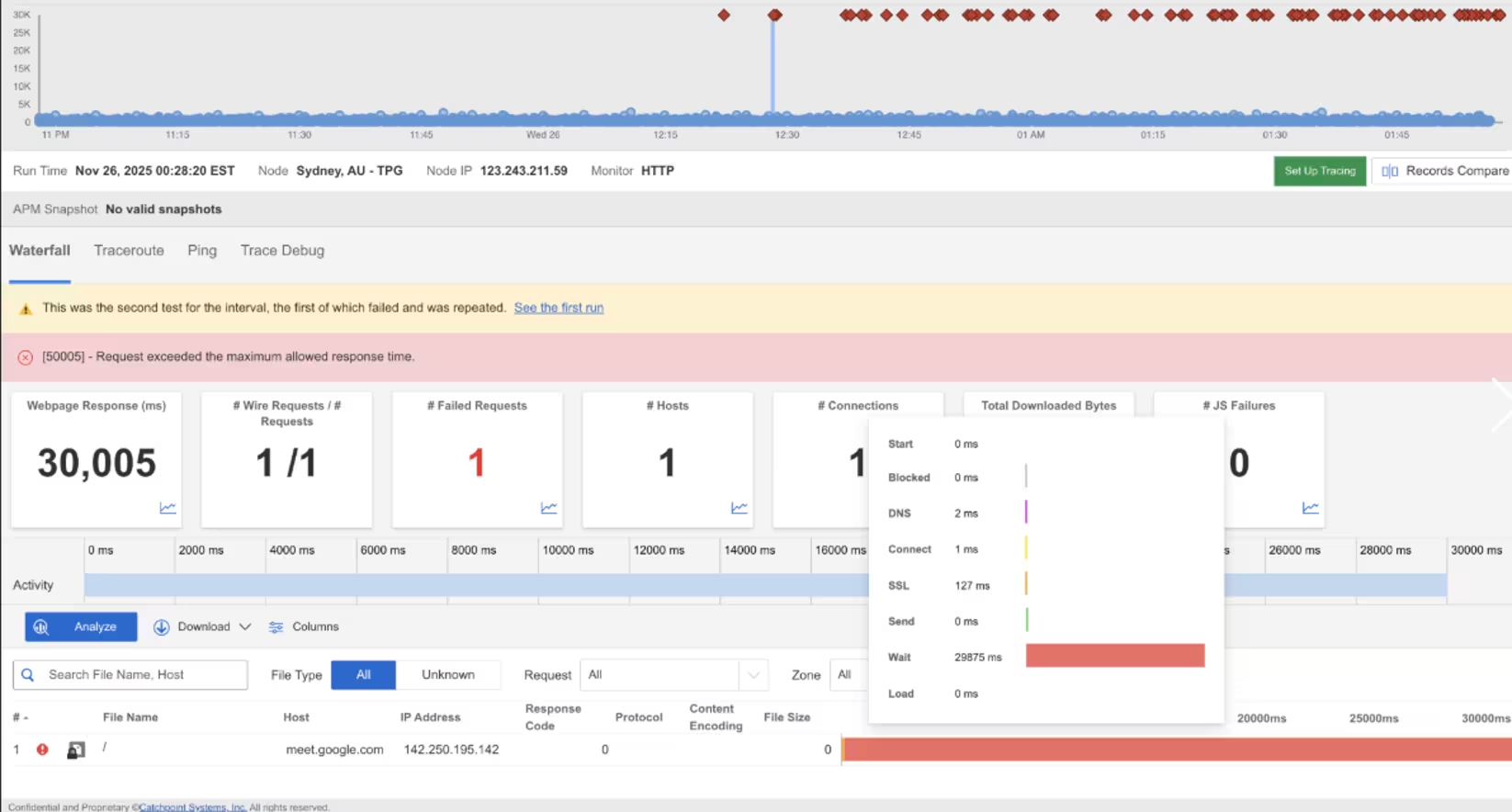

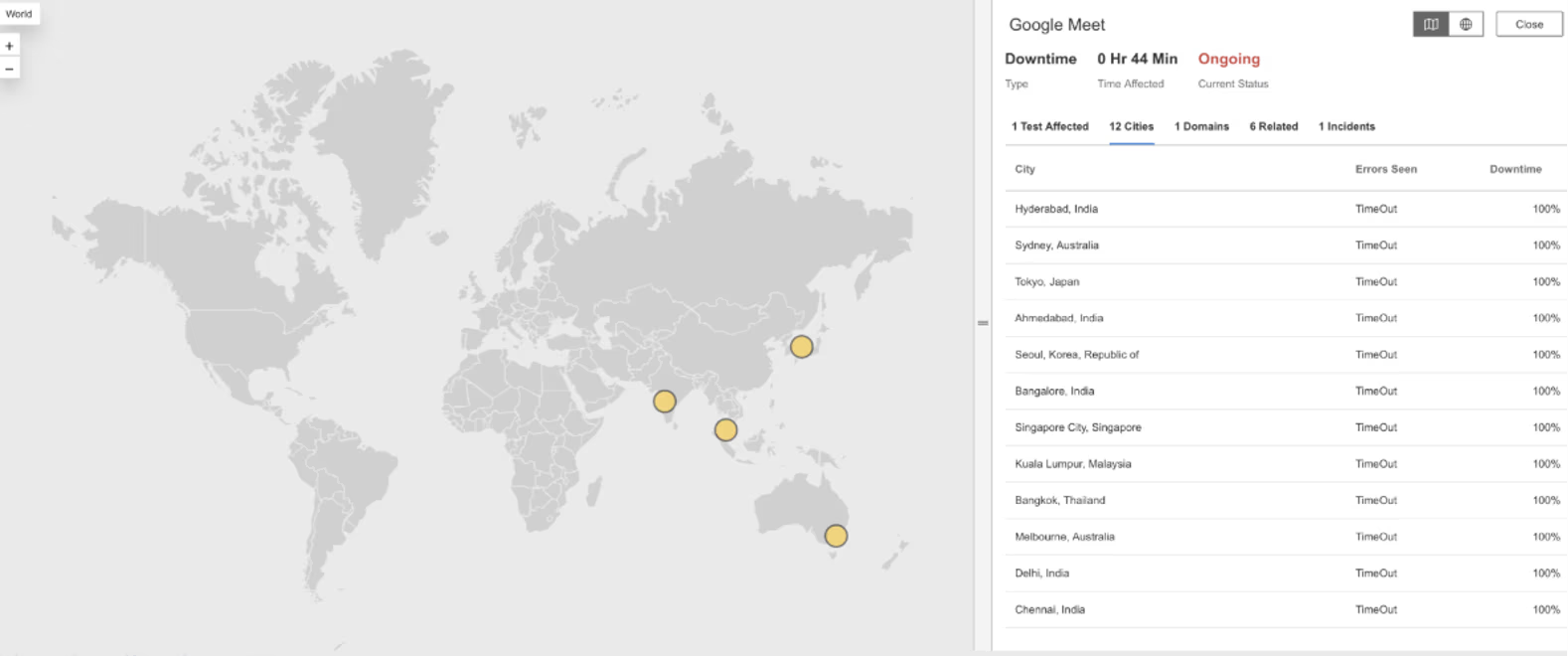

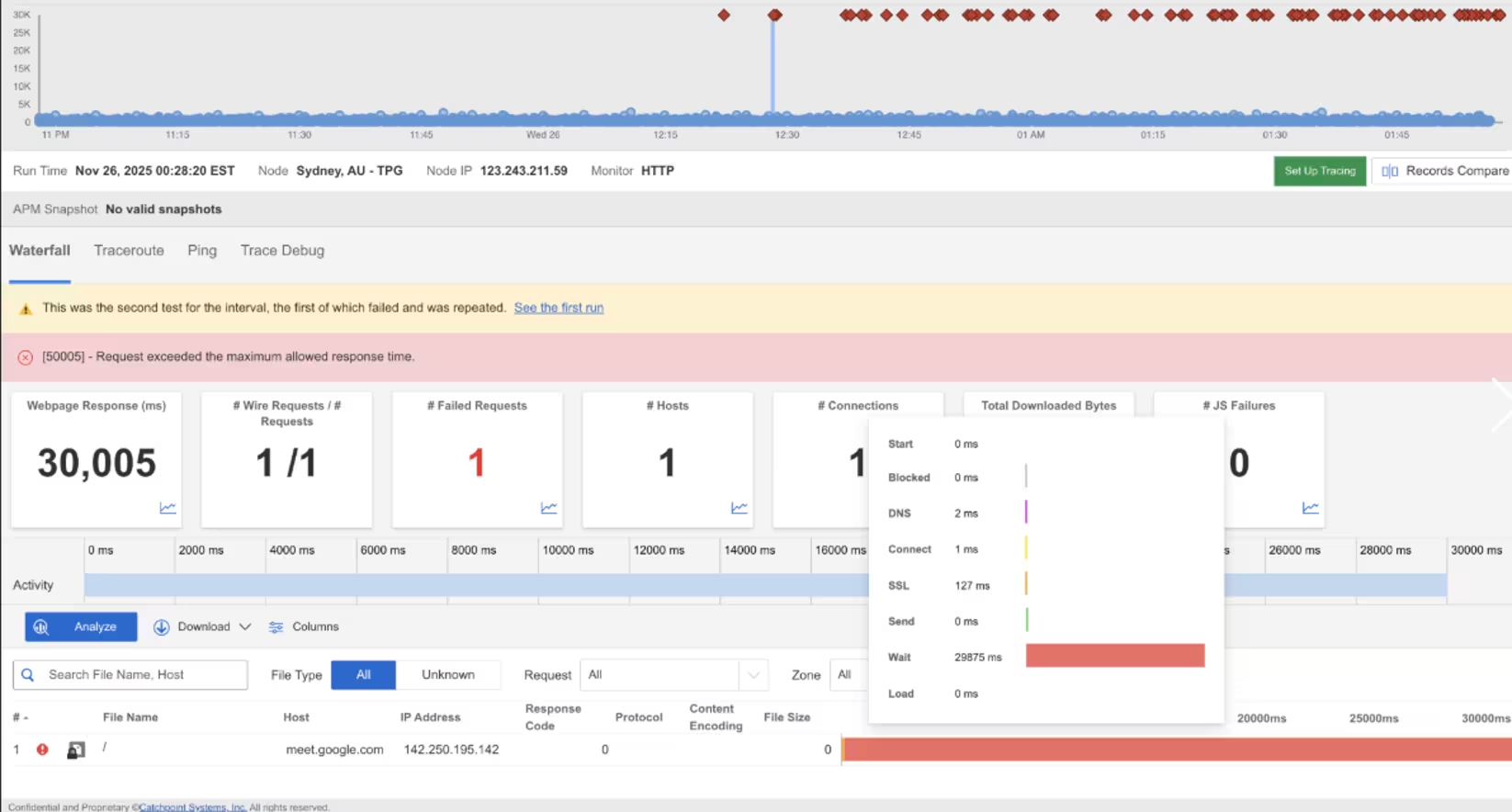

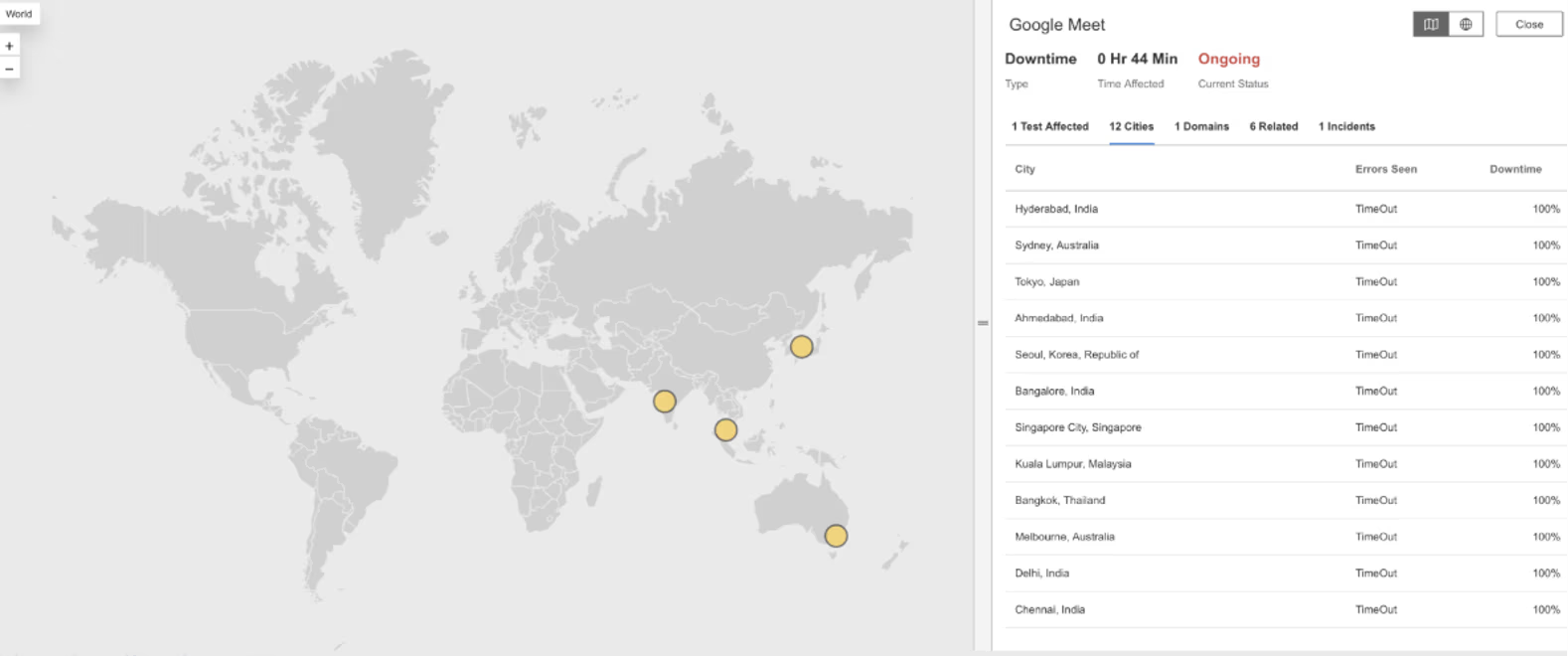

Google Meet

What happened?

On November 26, 2025, at 00:28:20 EST, Internet Sonar detected an outage affecting Google Meet in multiple regions, including Australia, India, Japan, Korea, Malaysia, Singapore, and Thailand, with requests to https://meet.google.com/ showing long wait times from multiple locations.

Takeaways

For real-time collaboration tools, latency can be as damaging as a complete outage. Even when services remain technically available, delays in connection setup or signaling can disrupt meetings and degrade audio and video quality. This incident illustrates how regional performance degradation can affect interactive applications disproportionately compared with traditional web workloads. Monitoring latency distributions and connection setup times by region is critical for catching these "brownout" states early, especially for real-time services where user tolerance for delay is extremely low and performance problems surface immediately as usability problems.

Granify

What happened?

On November 18, 2025, at 19:12:42 EST, Internet Sonar detected an outage affecting Granify in multiple regions, including the USA, the UK, Canada, Australia, and many others. Requests to matching.granify.com returned HTTP 502 (Bad Gateway: the server received an invalid response from an upstream server) from multiple locations.

Takeaways

HTTP 502 errors that appear in multiple regions at the same time point to a failure between service tiers: Granify's front-end systems remained reachable but could not communicate successfully with their upstream dependencies. This pattern more often indicates instability in shared backend services, partner integrations, or origin systems than problems with edge connectivity. Because these failures occur at the gateway boundary, they can affect all regions simultaneously even when the underlying problem sits in a single dependency. Tracing errors along the request chain helps separate upstream dependency failures from application errors and shows how tightly coupled integrations can widen the blast radius of global incidents.
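The "all regions at once" signal can be sketched as a simple scope check over per-region observations. The function below is a hypothetical helper: the 50% error-share threshold and the three labels are assumptions made for this example, not values from the incident.

```python
def failure_scope(
    status_by_region: dict[str, list[int]],
    code: int = 502,
    threshold: float = 0.5,
) -> str:
    """Classify how widely a status code is observed: none, regional, or global."""
    affected = [
        region
        for region, codes in status_by_region.items()
        if codes and sum(c == code for c in codes) / len(codes) >= threshold
    ]
    if not affected:
        return "none"
    if len(affected) == len(status_by_region):
        return "global"
    return "regional"
```

A "global" result for 502 is consistent with a shared upstream dependency failing, whereas a "regional" result would shift suspicion toward edge or network paths in the affected locations.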

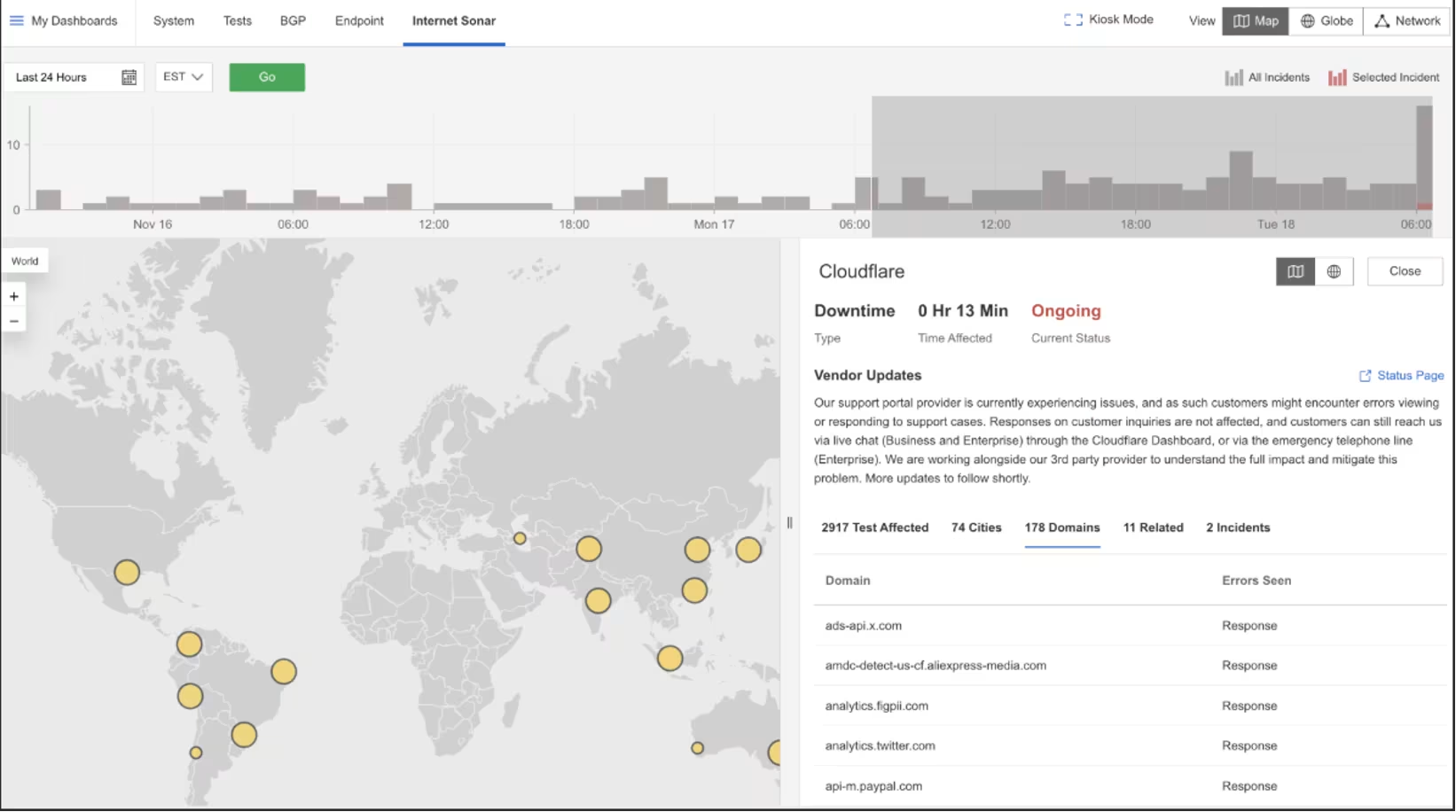

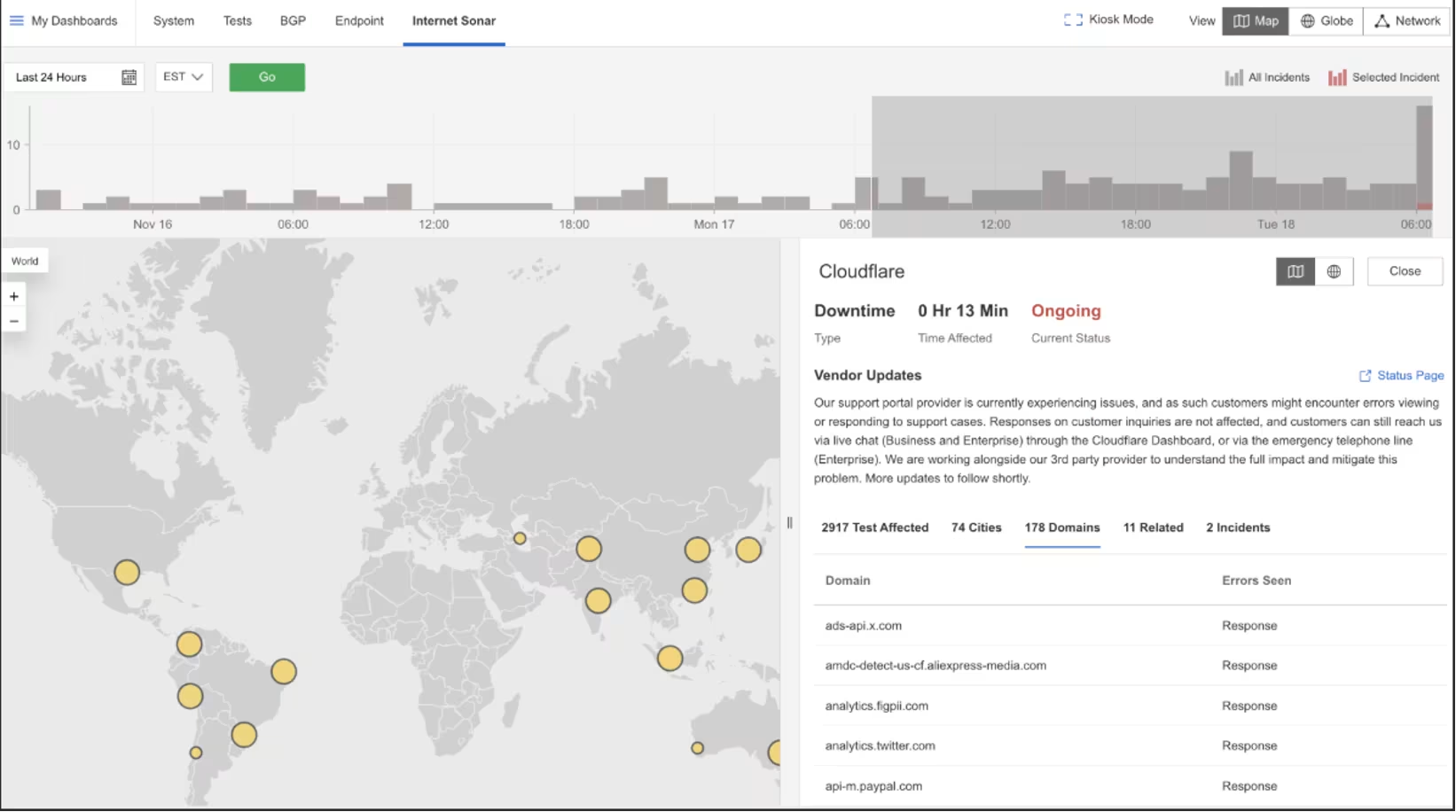

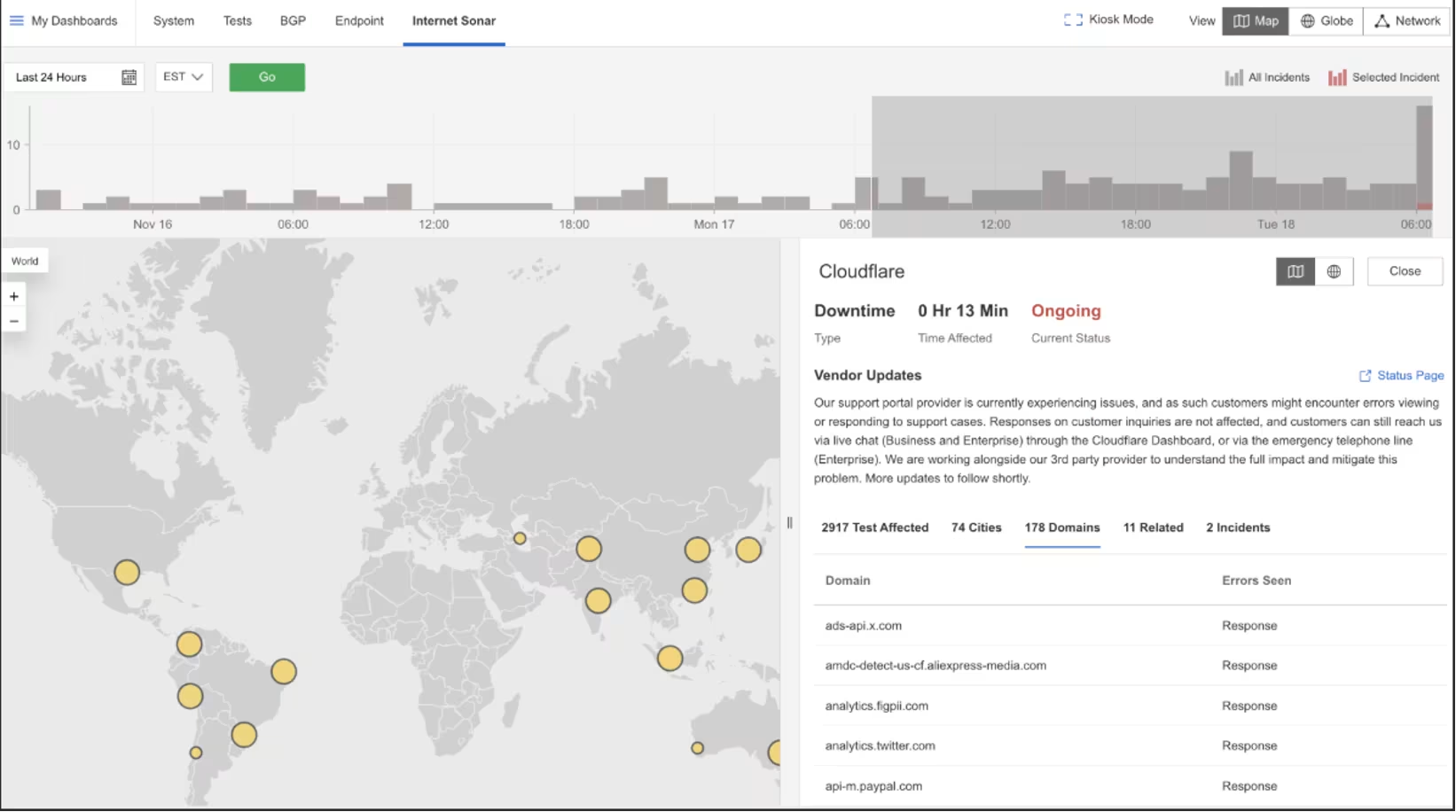

Cloudflare

What happened?

On November 18, 2025, at 06:30:40 EST, Internet Sonar detected an ongoing outage affecting Cloudflare worldwide, with multiple domains returning HTTP 500 (Internal Server Error: the server encountered an unexpected condition that prevented it from fulfilling the request).

Takeaways

HTTP 500 errors at global scale usually point to failures inside a provider's internal control plane or shared service logic rather than to problems with connectivity, routing, or origin reachability. When a core internal component fails, edge locations may remain reachable yet still be unable to serve traffic correctly. This incident shows how control-plane instability can surface externally as widespread application errors even when network paths and customer origins are intact. Distinguishing 500 errors from 502/503 responses is critical because they signal fundamentally different failure modes and help teams avoid wrongly attributing global platform problems to customer infrastructure or internet routing.
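The distinction between status codes can be kept explicit in tooling. The sketch below maps the standard 5xx codes discussed in this timeline to a short triage hint and reports the most frequent one in a sample. The hint wording and the helper name are assumptions for illustration; the code meanings follow the standard HTTP definitions.

```python
from collections import Counter
from typing import Optional

# Triage hints for standard HTTP 5xx codes (wording is illustrative).
FAILURE_HINTS = {
    500: "internal error in the serving component",
    502: "gateway received an invalid response from an upstream server",
    503: "service temporarily unable to handle requests",
    504: "gateway timed out waiting for an upstream server",
}


def dominant_failure(status_codes: list[int]) -> Optional[tuple[int, str]]:
    """Return the most frequent 5xx code and its hint, or None if there are none."""
    errors = Counter(code for code in status_codes if code >= 500)
    if not errors:
        return None
    code, _count = errors.most_common(1)[0]
    return code, FAILURE_HINTS.get(code, "unclassified server error")
```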

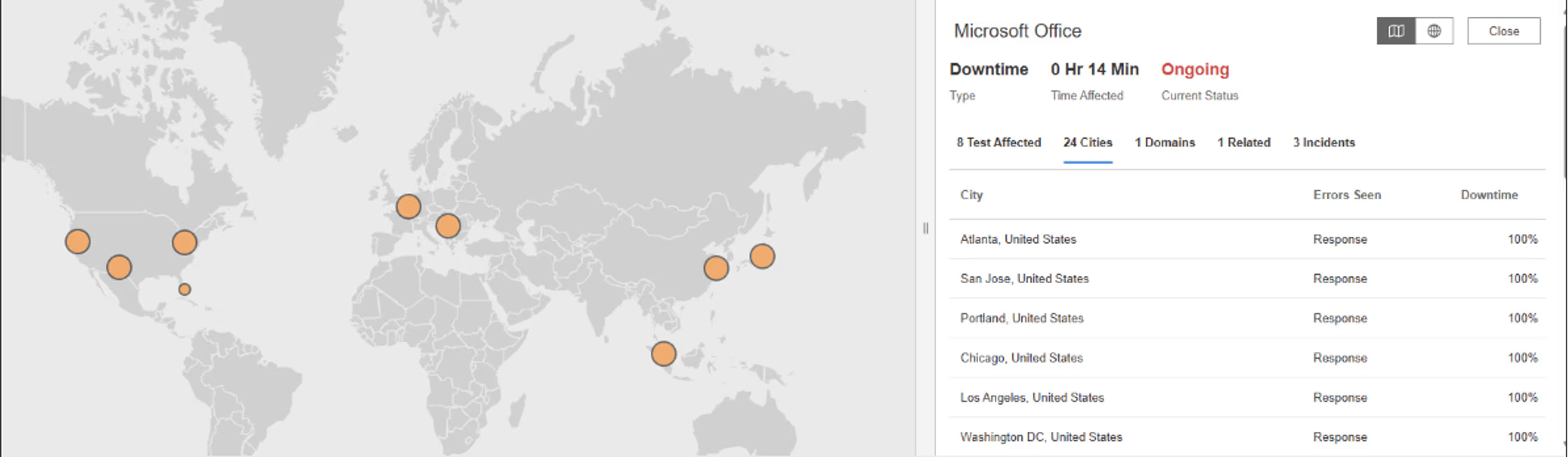

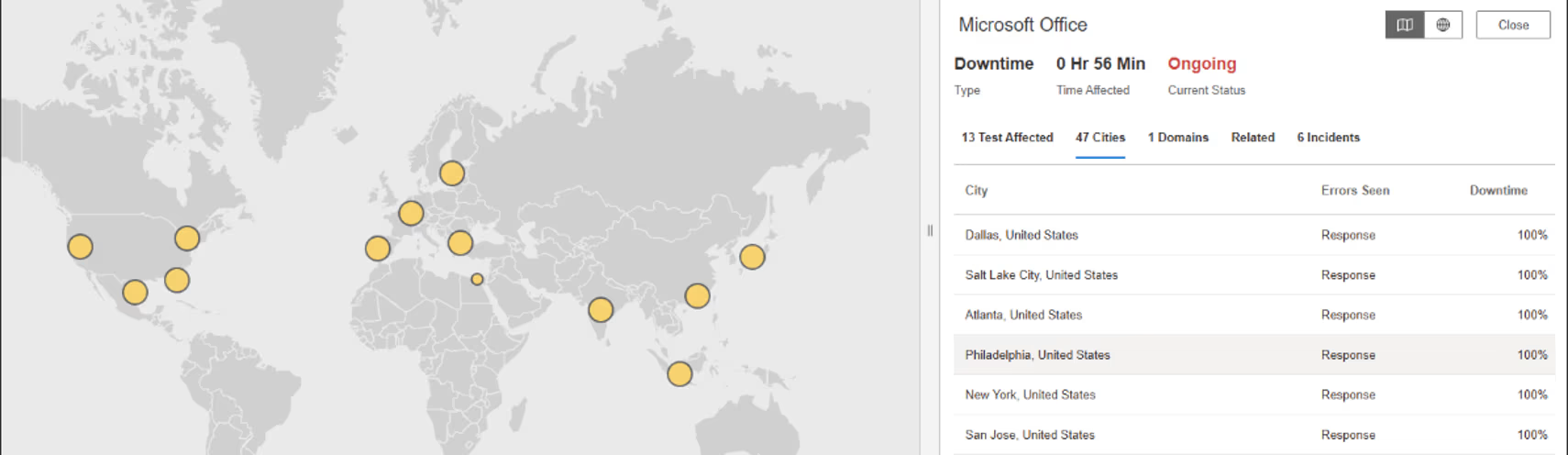

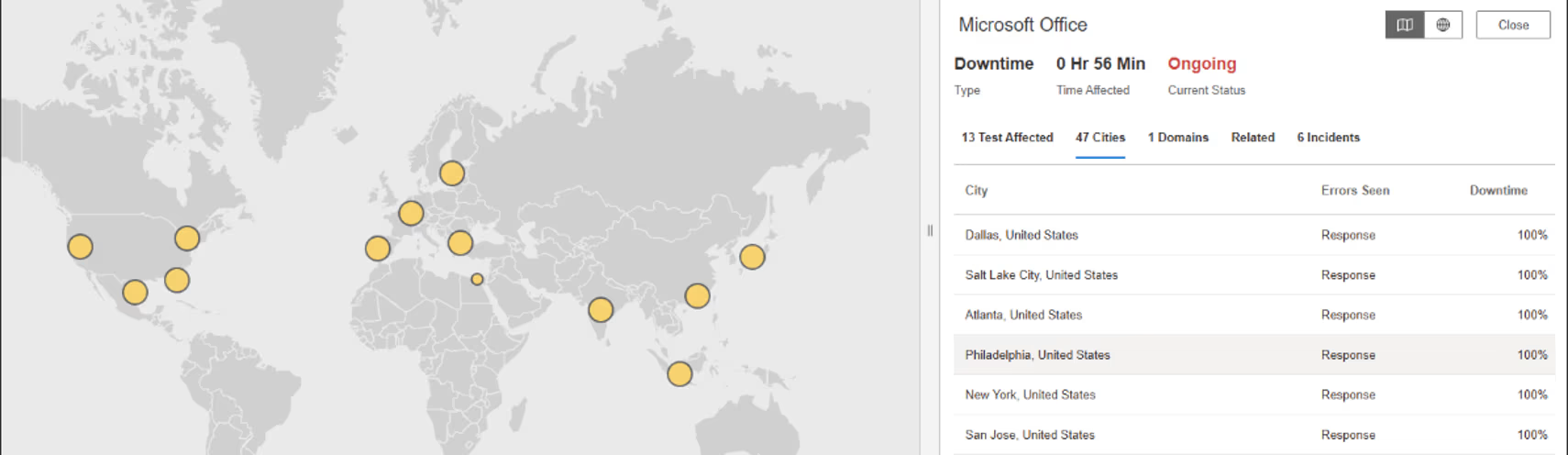

Microsoft Office

What happened?

On November 18, 2025, at 01:43:53 EST, Internet Sonar detected an outage affecting Microsoft Office in multiple regions, including the USA, Canada, Japan, and China. Requests to www.office.com returned HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests) from multiple locations.

Takeaways

Simultaneous HTTP 503 errors in multiple regions point to a failure or overload in a shared service tier rather than to isolated regional incidents. On large SaaS platforms, this often implicates dependencies such as authentication, configuration services, or internal APIs that gate access to many front-end workloads at once. Even when the infrastructure stays online, the failure of these shared components can block access for users worldwide. This incident shows how important global capacity management and dependency health are for preventing broad availability failures, and why knowing which shared services sit on the critical path is essential for limiting impact during platform-wide events.

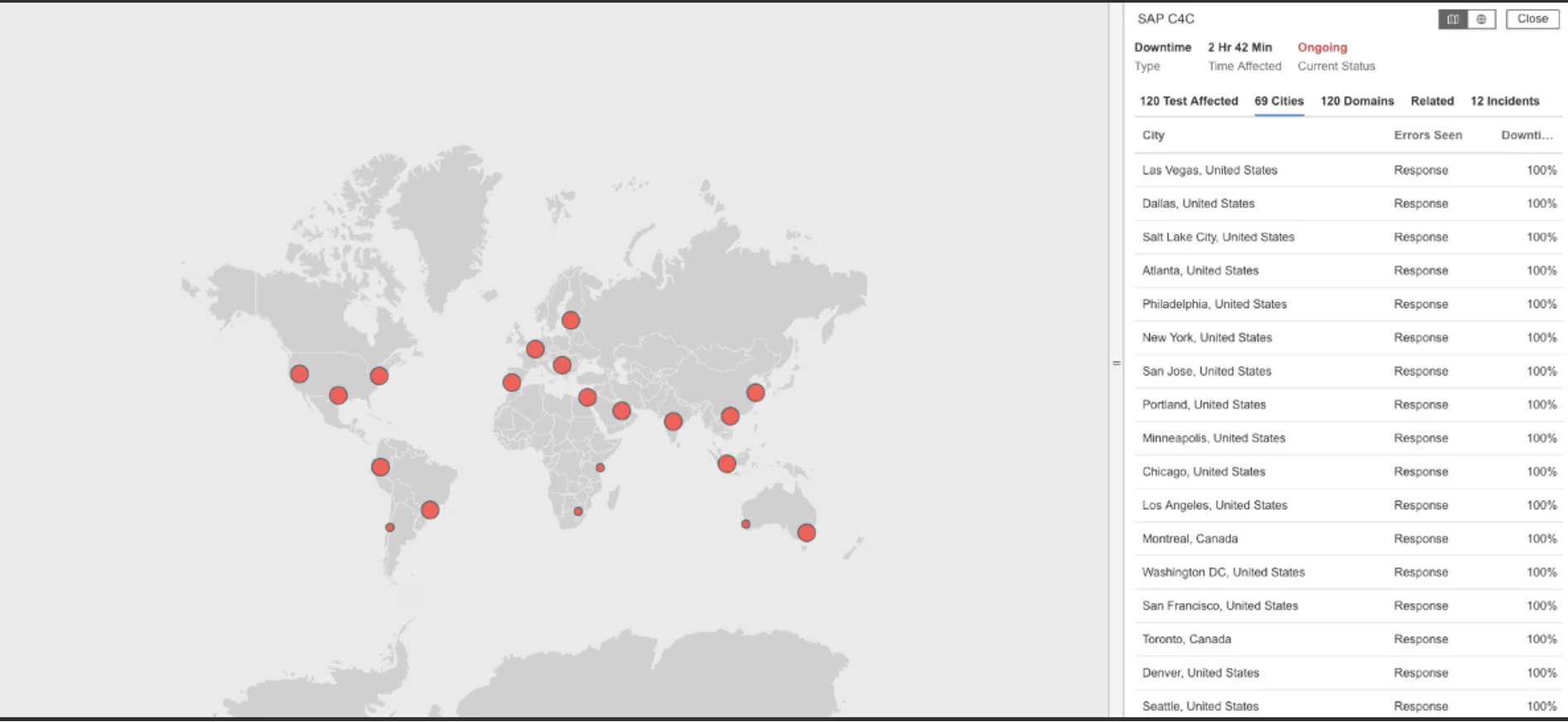

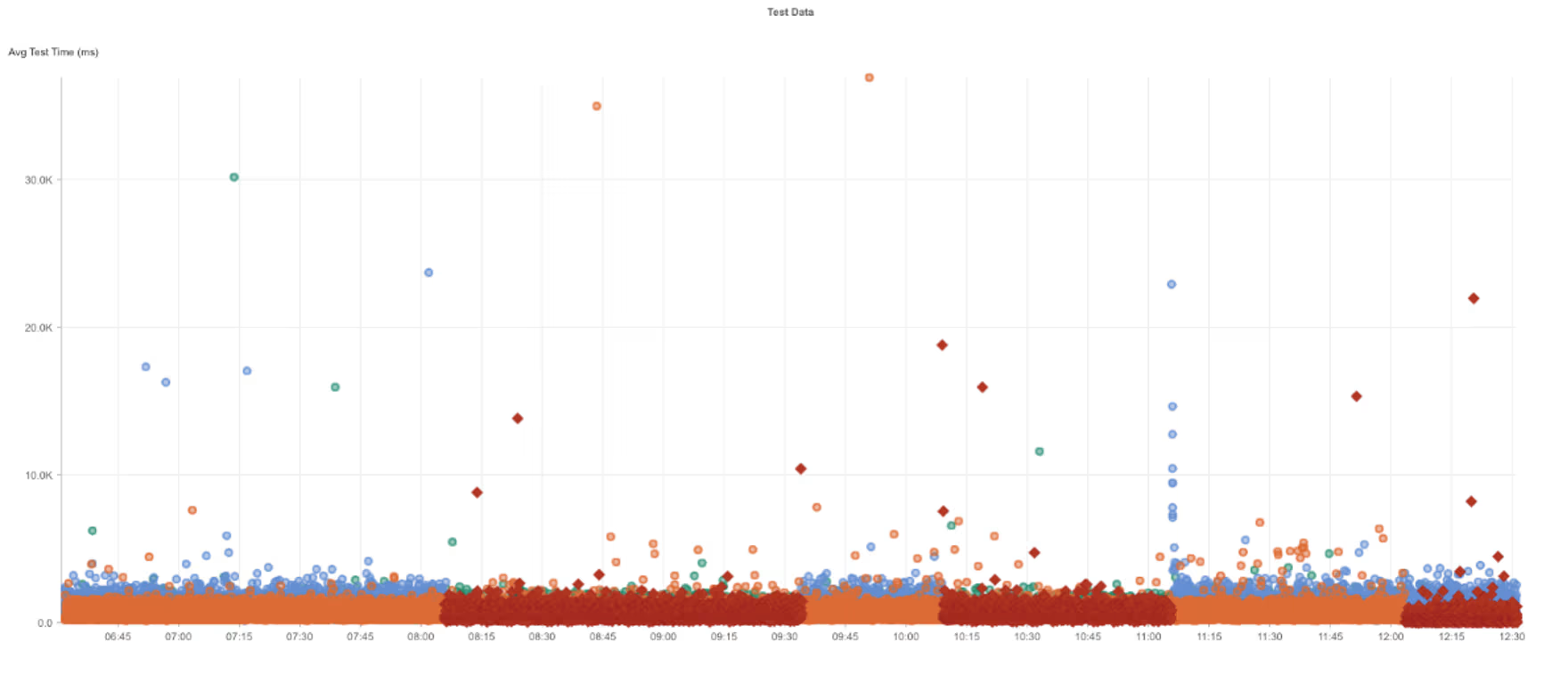

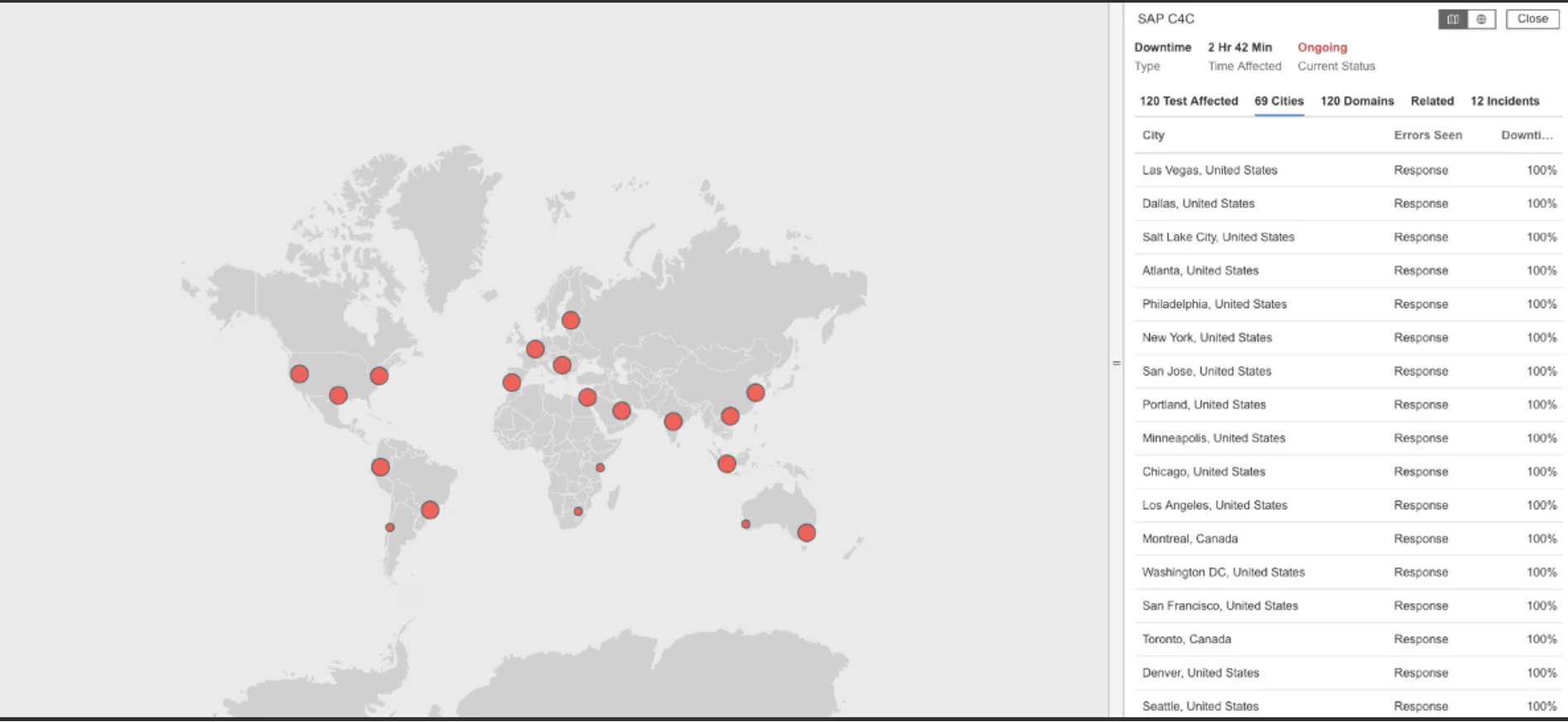

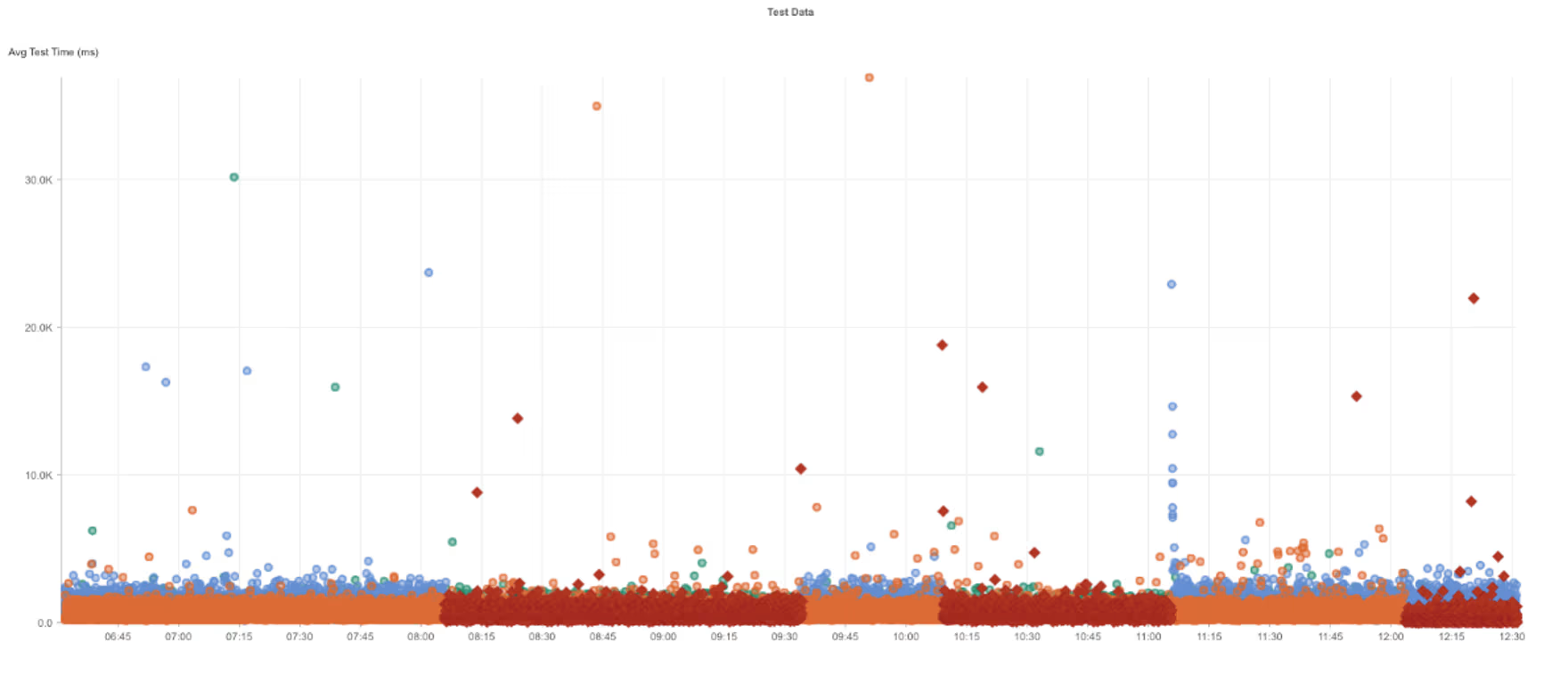

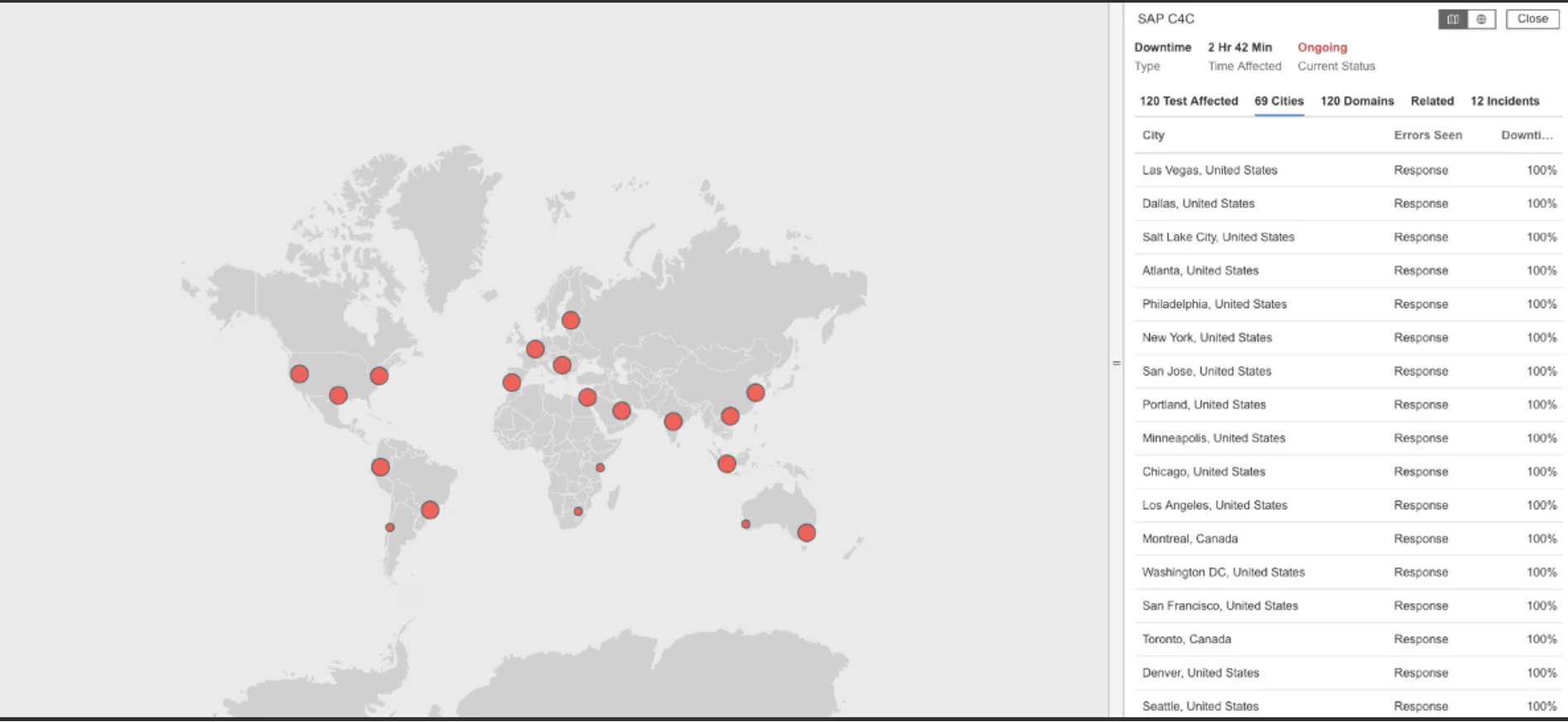

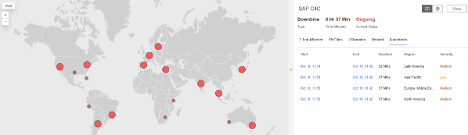

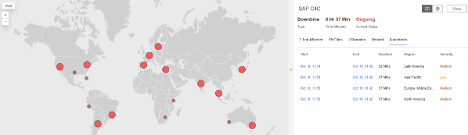

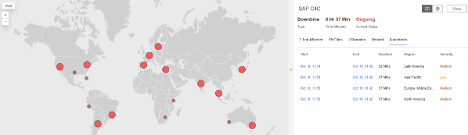

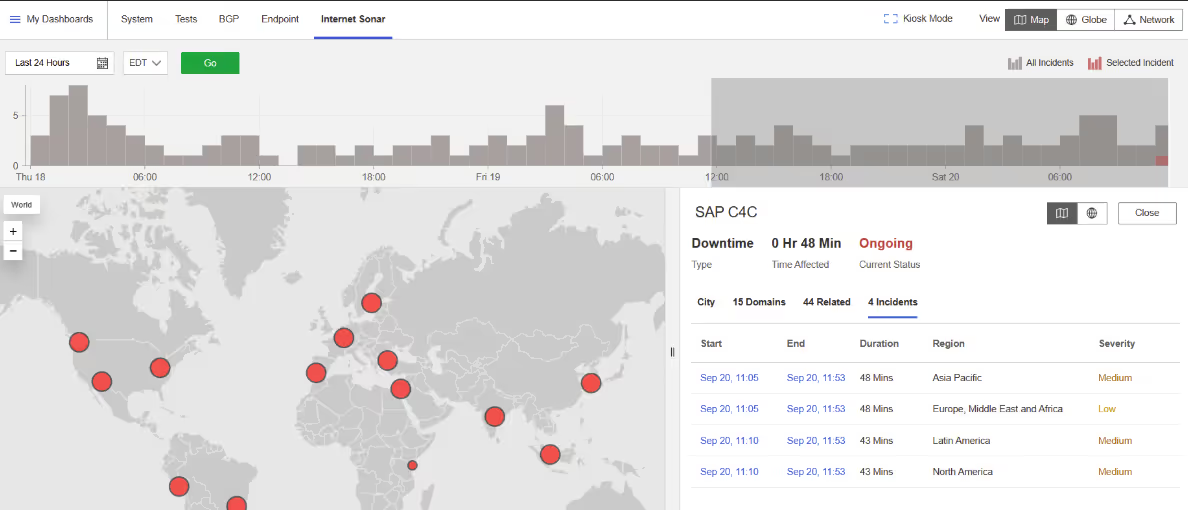

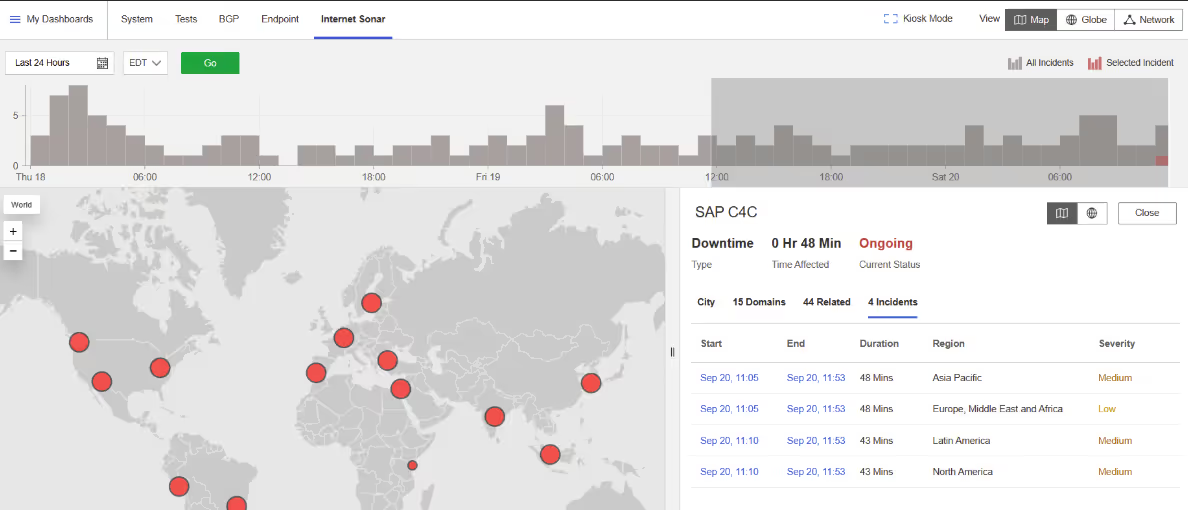

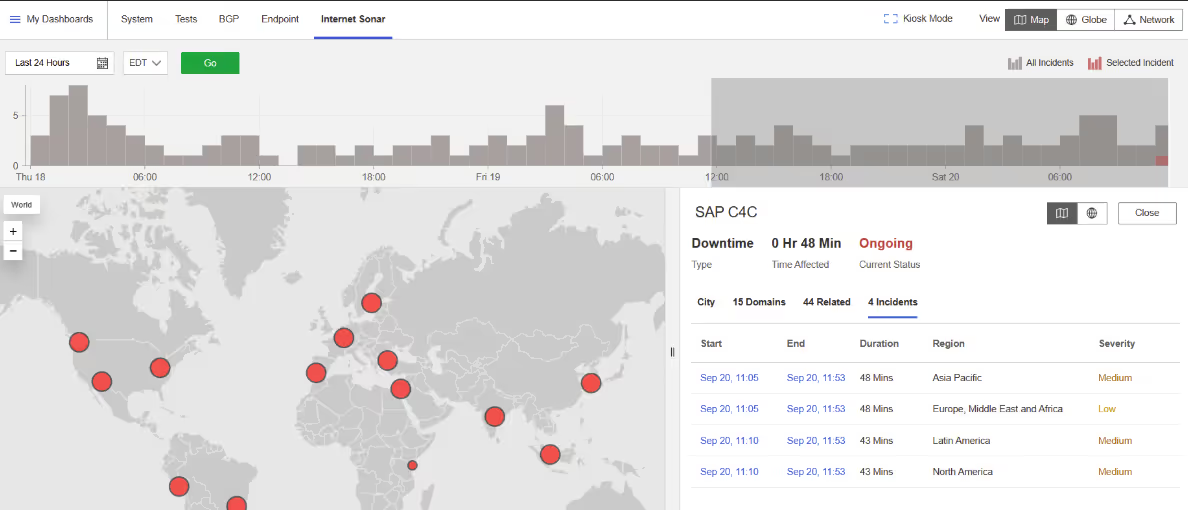

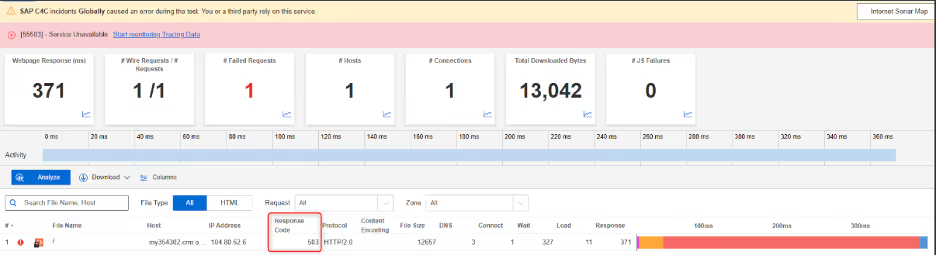

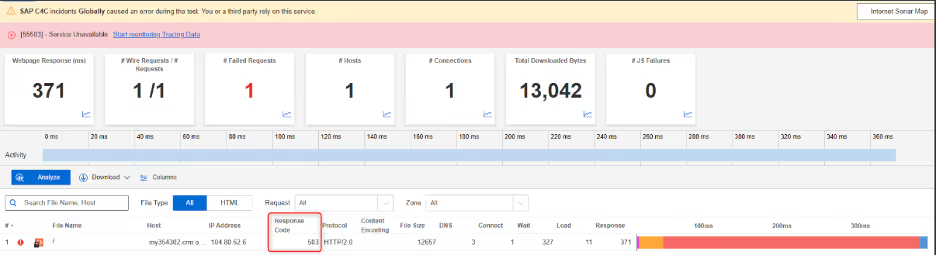

SAP C4C

What happened?

On November 15, 2025, at 08:05:49 EST, Internet Sonar detected a global outage affecting SAP C4C in multiple regions. Requests to several crm.ondemand.com domains returned HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests) from multiple locations, so users could not log in to the SAP service.

Takeaways

Login failures caused by HTTP 503 errors often point to problems in authentication or session establishment, which act as hard gates to the rest of the application. Even if core CRM functions are still working, losing the login path makes the platform effectively unusable. This incident shows that authentication services are high-impact dependencies in SaaS platforms, where a single failure can block all user access worldwide. Tracking availability separately for login flows and for post-login application paths helps distinguish authentication-layer failures from broader application errors, and speeds up root-cause isolation when users report being "locked out" rather than seeing functional errors.
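Tracking login and post-login availability separately can be as simple as tagging each synthetic check with the flow it exercises. The sketch below assumes checks arrive as `(flow, status_code)` pairs and treats any status below 400 as a success; both the flow labels and that success rule are assumptions for this example.

```python
def availability_by_flow(checks: list[tuple[str, int]]) -> dict[str, float]:
    """Return the fraction of successful checks for each flow label."""
    totals: dict[str, int] = {}
    successes: dict[str, int] = {}
    for flow, status in checks:
        totals[flow] = totals.get(flow, 0) + 1
        if status < 400:  # assumption: 2xx and 3xx count as available
            successes[flow] = successes.get(flow, 0) + 1
    return {flow: successes.get(flow, 0) / count for flow, count in totals.items()}
```

A result such as `{"login": 0.25, "post_login": 1.0}` would suggest the authentication layer is failing while the application behind it is still healthy.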

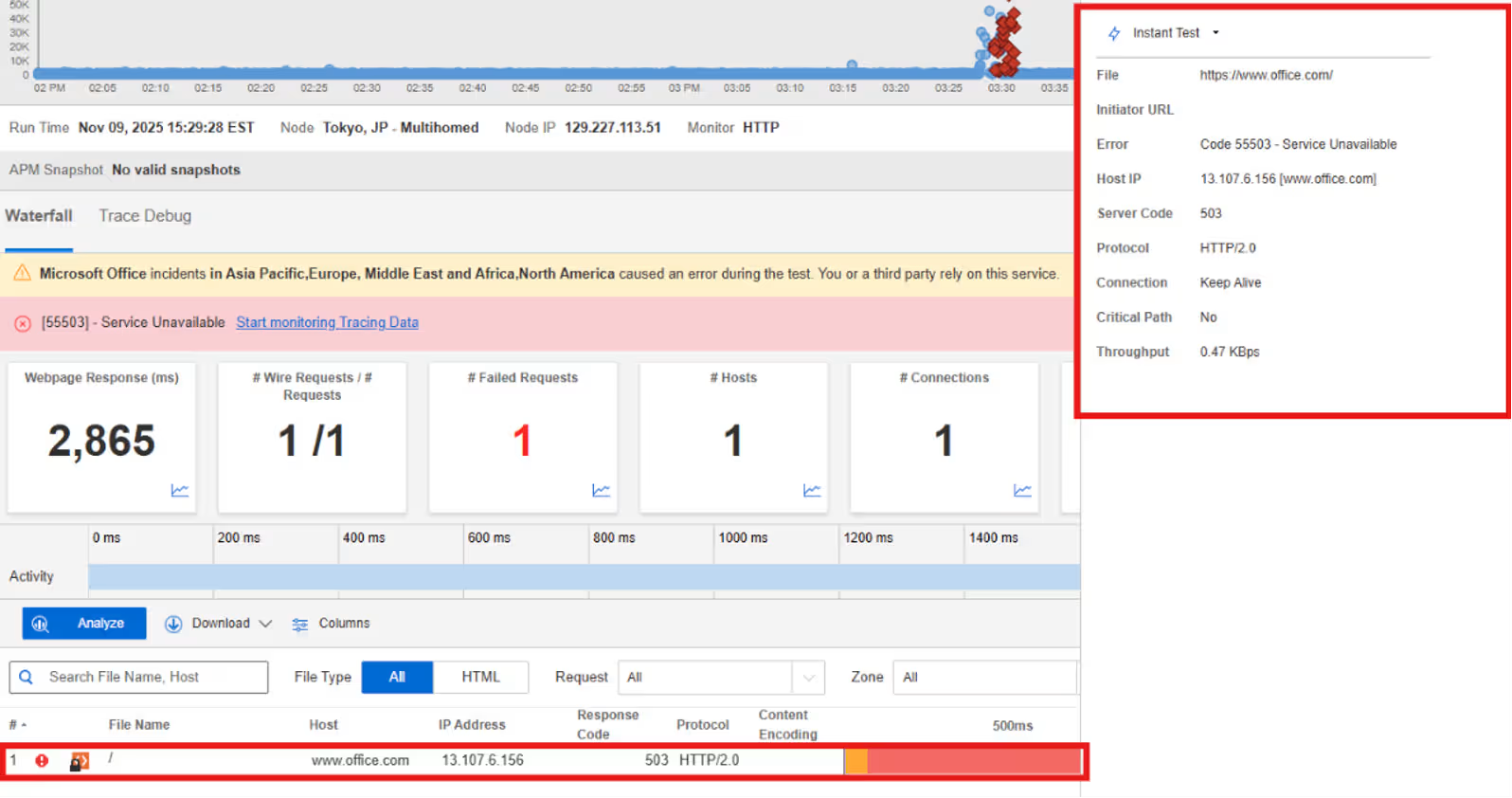

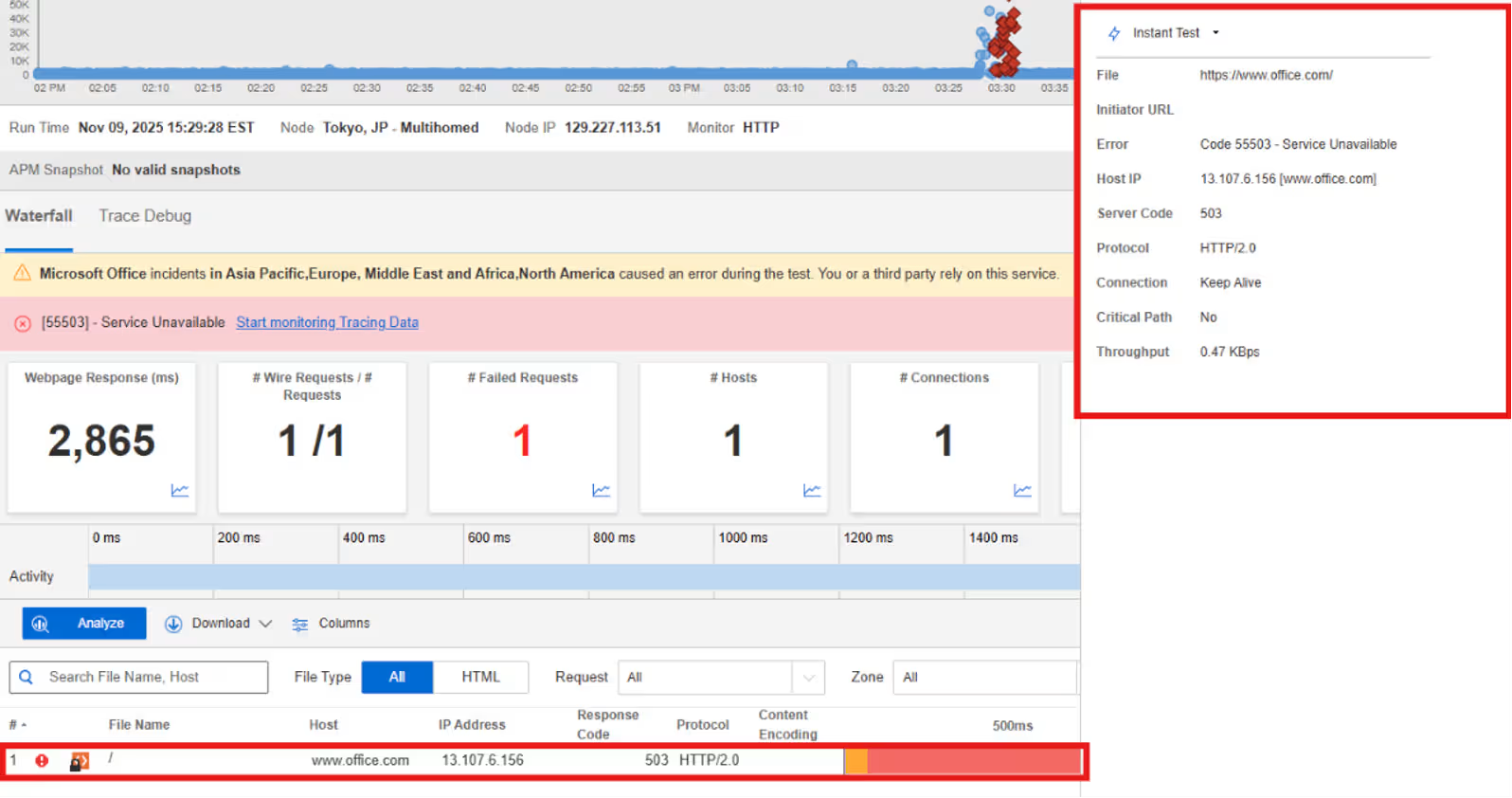

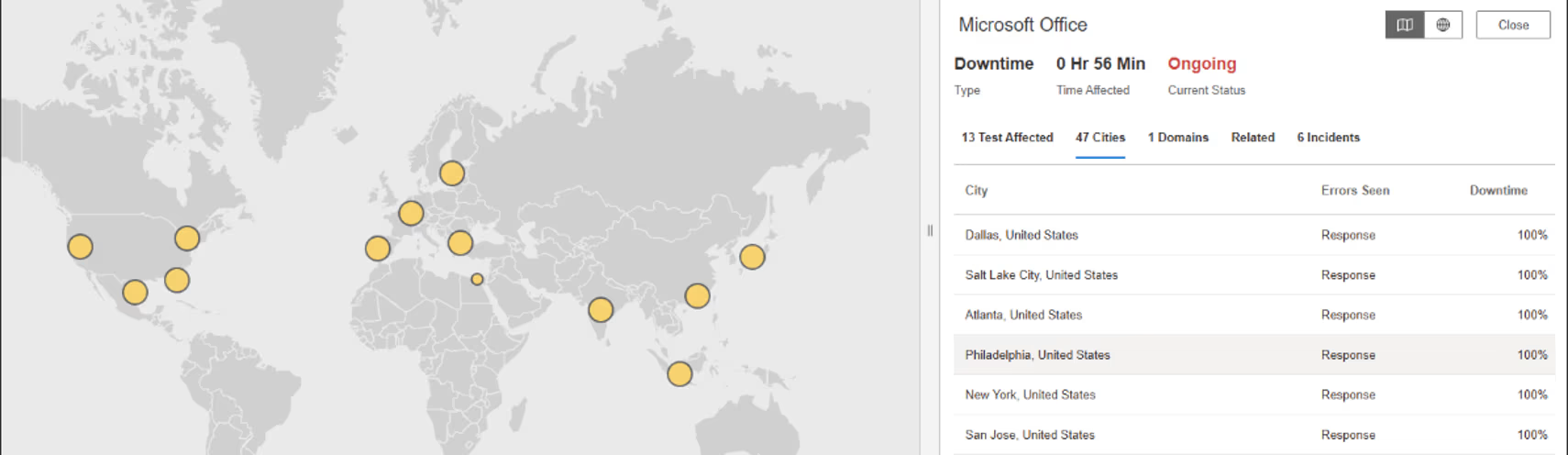

Microsoft Office

What happened?

On November 9, 2025, at 15:29:28 EST, Internet Sonar detected an outage affecting Microsoft Office in multiple regions, including the USA, Canada, Sweden, Norway, Poland, the UK, and France. Requests to www.office.com returned HTTP 503 (Service Unavailable: the server is temporarily unable to handle requests).

Takeaways

A sudden wave of HTTP 503 errors across regions often indicates that systems are hitting their readiness or admission limits, so traffic arrives successfully but cannot be safely accepted. On large productivity platforms, this can be triggered by demand spikes, backend throttling, or protective load-shedding mechanisms designed to prevent deeper failures. These safeguards help preserve overall platform stability, but from the outside they appear as downtime for users. This incident shows why it is important to understand how and when services shed load, and how traffic patterns can push global platforms past their readiness thresholds even when the infrastructure remains online.
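To illustrate what load shedding looks like from the server's side, here is a minimal admission-control sketch: requests beyond a fixed in-flight limit are rejected with 503 instead of being queued. This is a generic illustration under assumed names and limits, not a description of how Microsoft's services behave, and it is not thread-safe as written.

```python
class LoadShedder:
    """Reject new requests with 503 once too many are already in flight."""

    def __init__(self, max_in_flight: int) -> None:
        if max_in_flight < 1:
            raise ValueError("max_in_flight must be at least 1")
        self.max_in_flight = max_in_flight
        self.in_flight = 0

    def admit(self) -> int:
        """Return 200 and count the request, or 503 if the limit is reached."""
        if self.in_flight >= self.max_in_flight:
            return 503
        self.in_flight += 1
        return 200

    def release(self) -> None:
        """Mark one admitted request as finished."""
        if self.in_flight > 0:
            self.in_flight -= 1
```

From an external probe, the rejected requests are indistinguishable from an outage, which is why the traffic arrives successfully yet still shows up as 503s.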

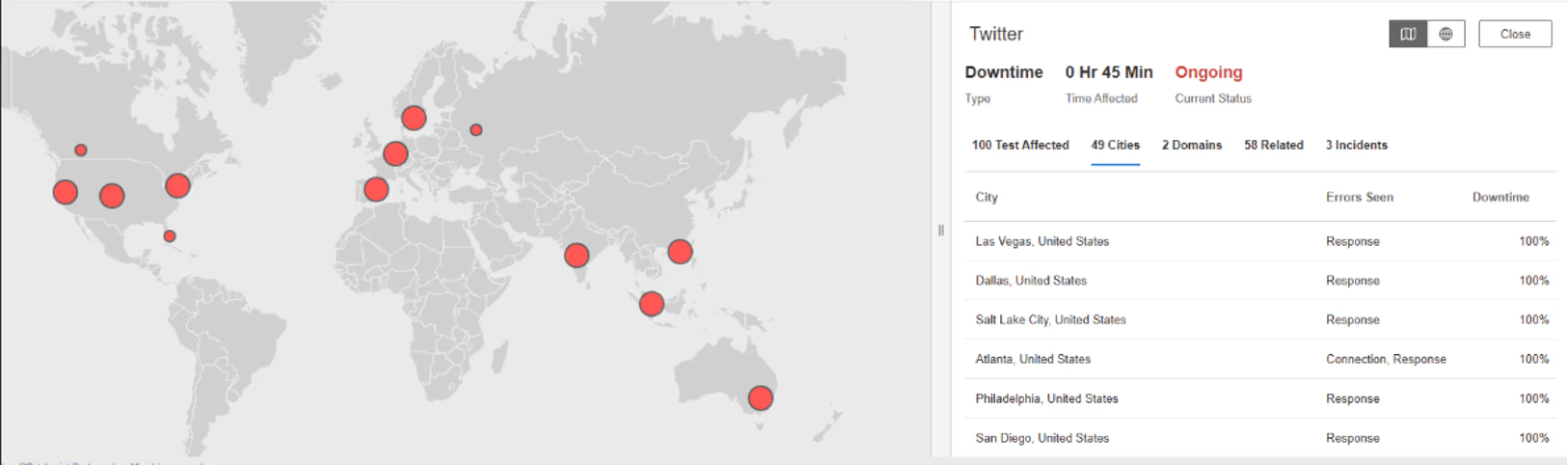

X Corp. (Twitter)

What happened?

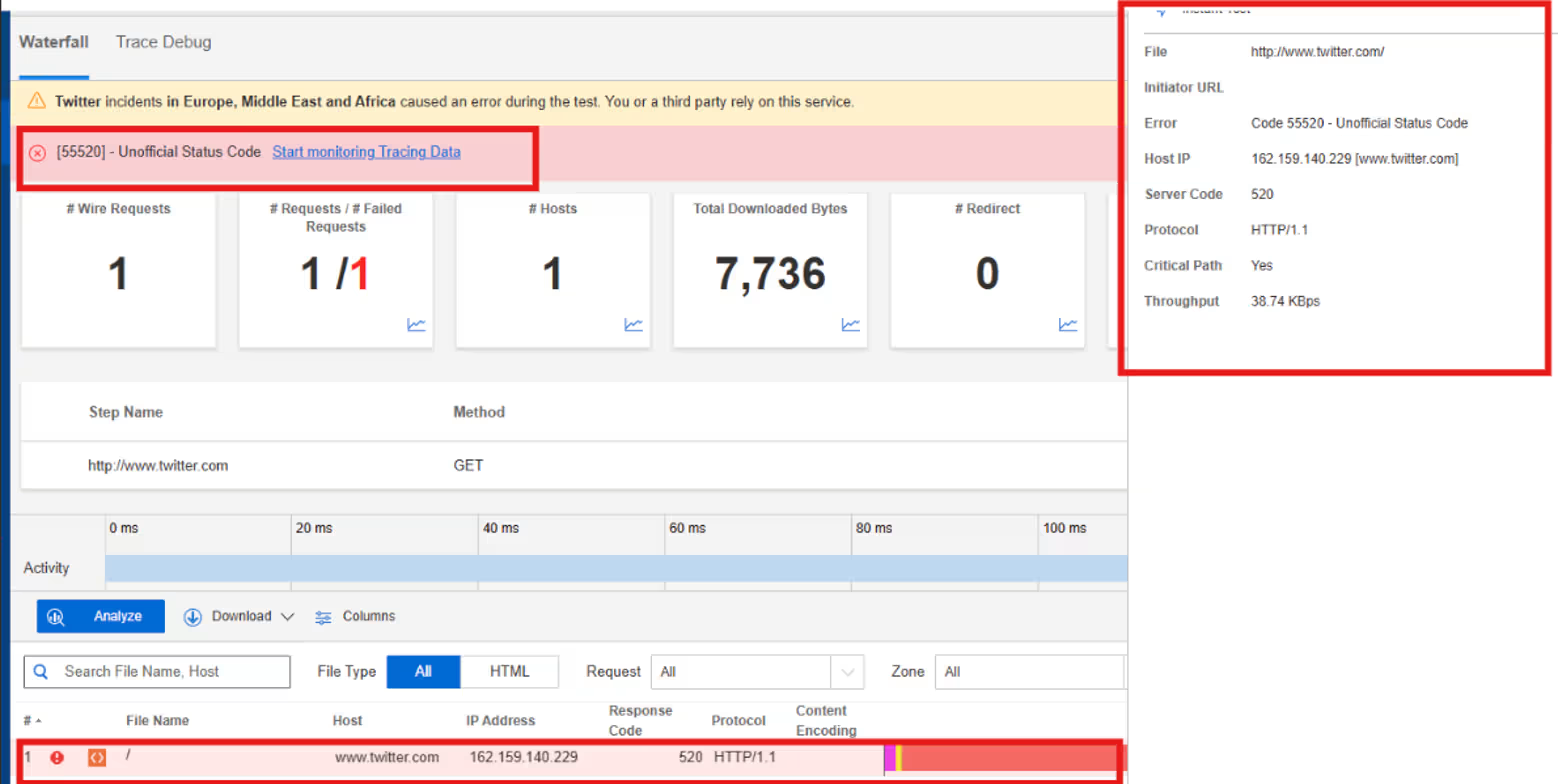

On November 6, 2025, at 01:56:11 EST, Internet Sonar detected an outage affecting X in multiple regions, including the USA, France, the UK, Germany, Singapore, Ireland, and Australia. Requests to X.com returned HTTP 520 (a server error returned when the origin gives an unexpected or invalid response) from multiple locations.

Takeaways

HTTP 520 errors occur at the boundary between edge infrastructure and origin services and signal failures that do not map cleanly to standard application or server error codes. This kind of error often appears when origin servers crash mid-response, return malformed data, or reset connections unexpectedly. Because the edge remains reachable, errors can appear globally and simultaneously even when the underlying problem is limited to a subset of origin systems. This incident shows how violations of the edge-to-origin contract can produce opaque errors that are hard to diagnose without visibility into both sides of the delivery path, and why tracking error semantics is critical for separating protocol-level failures from pure application or capacity problems.

October

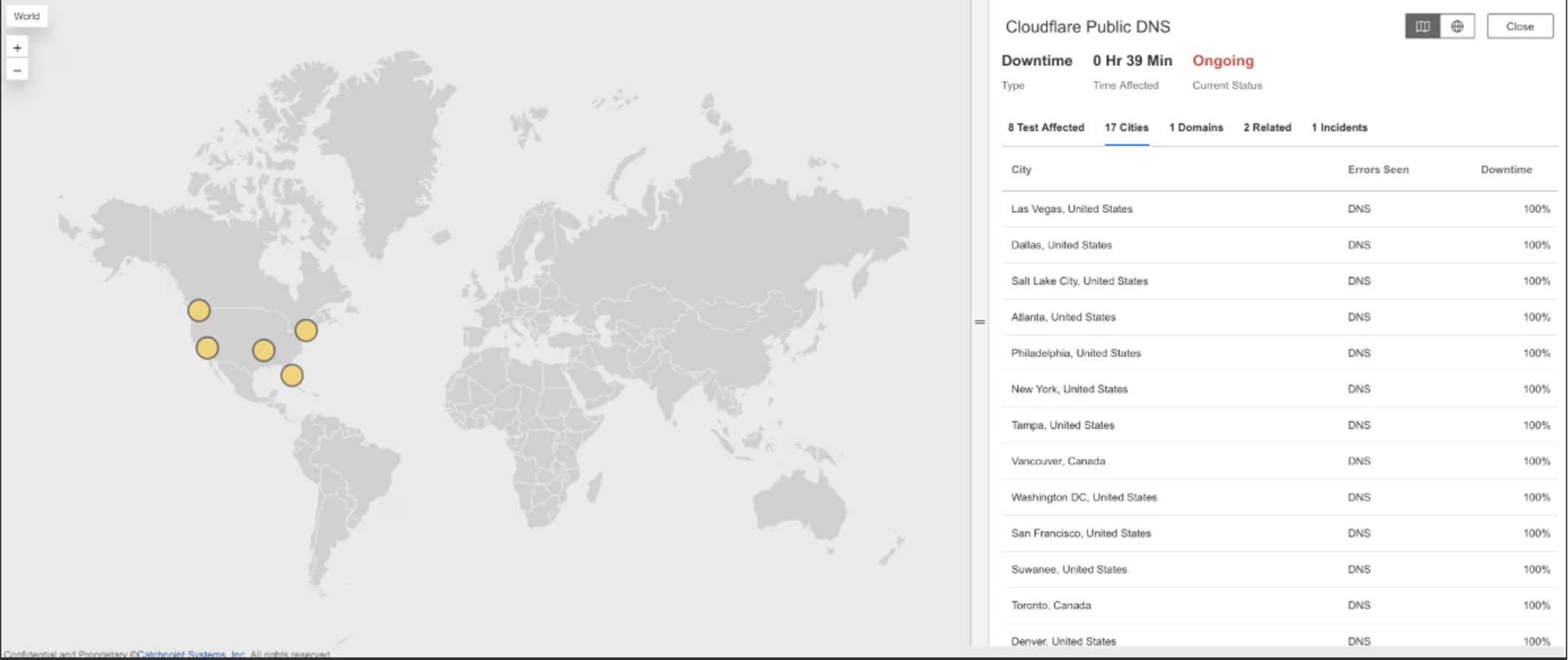

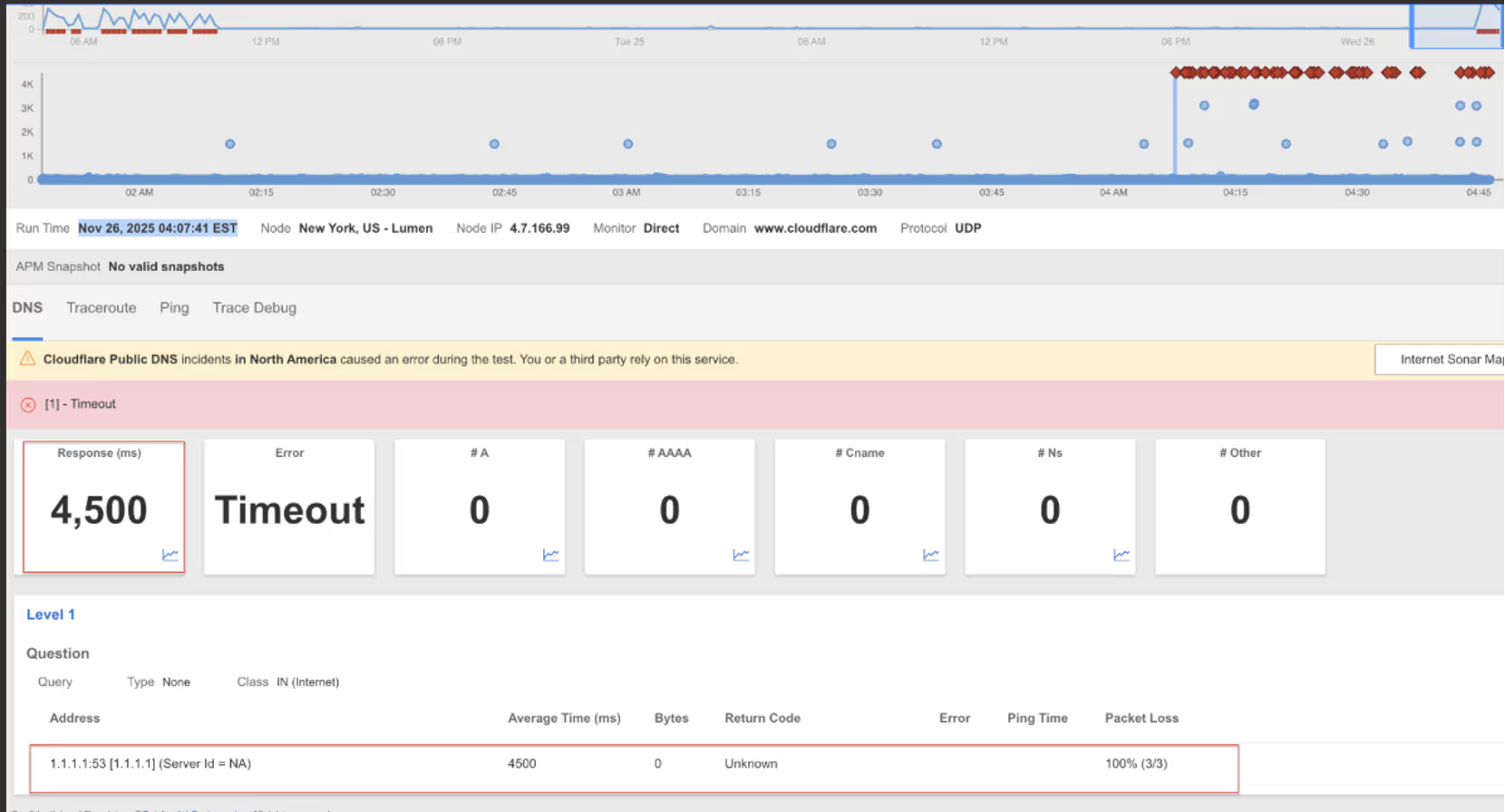

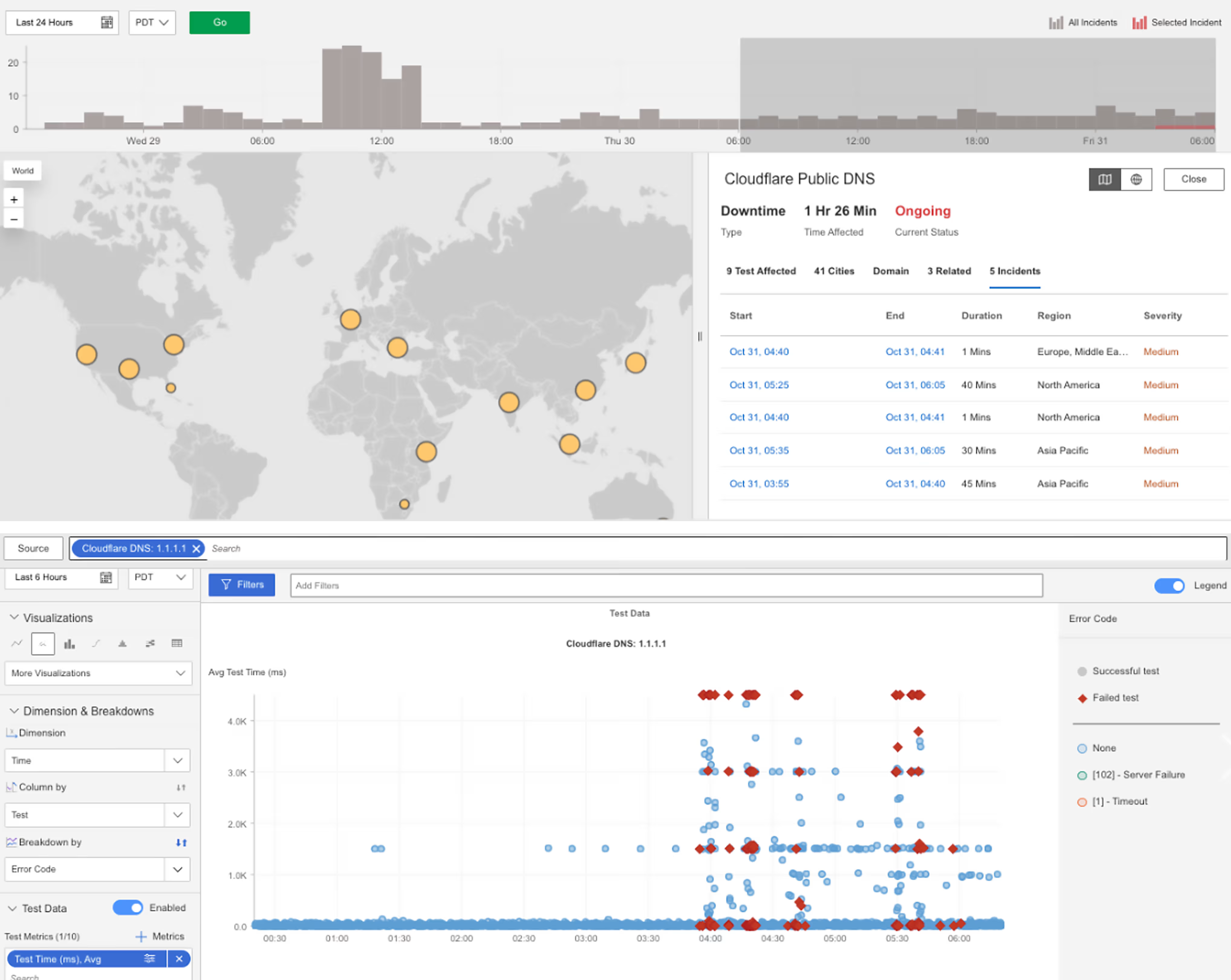

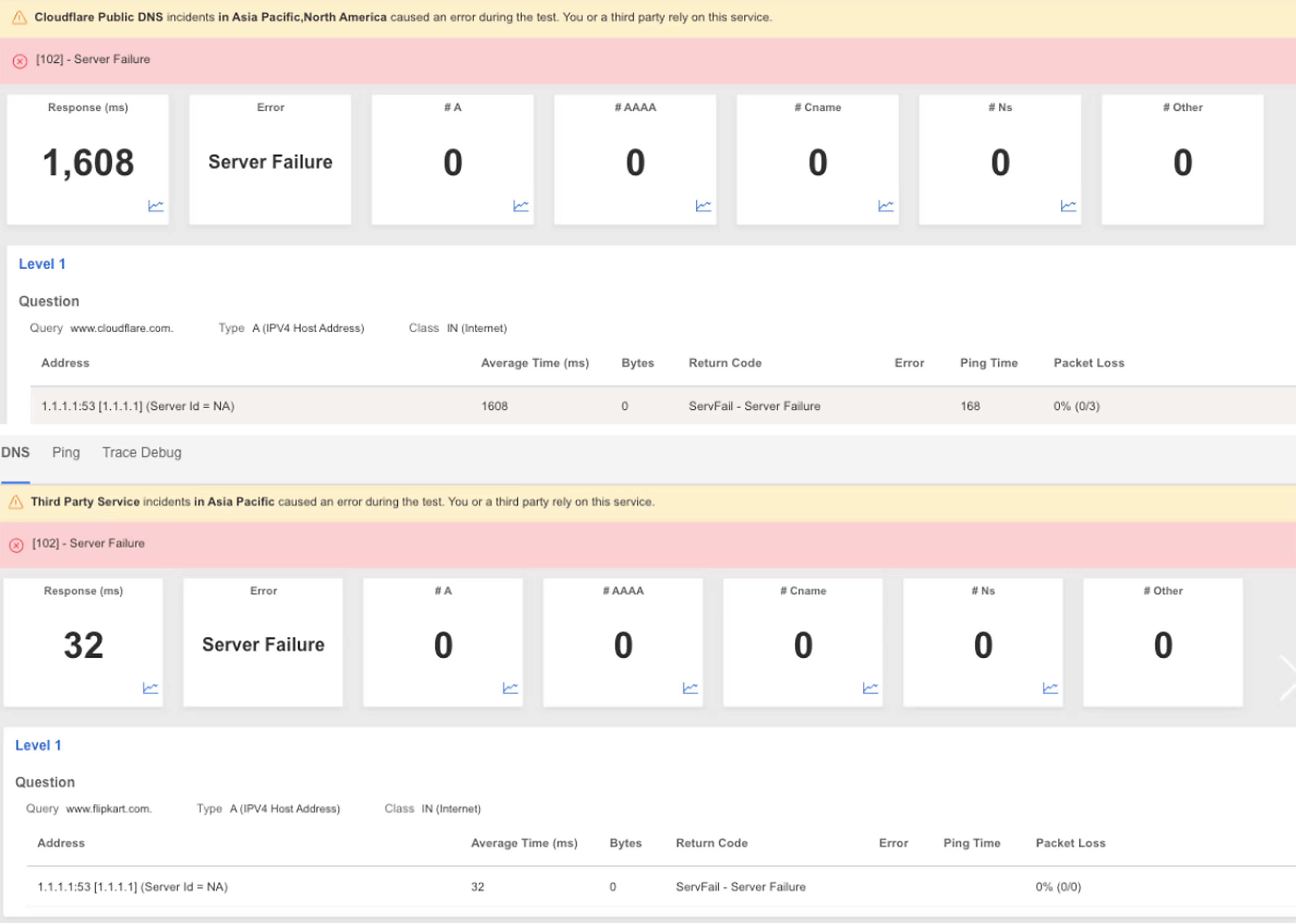

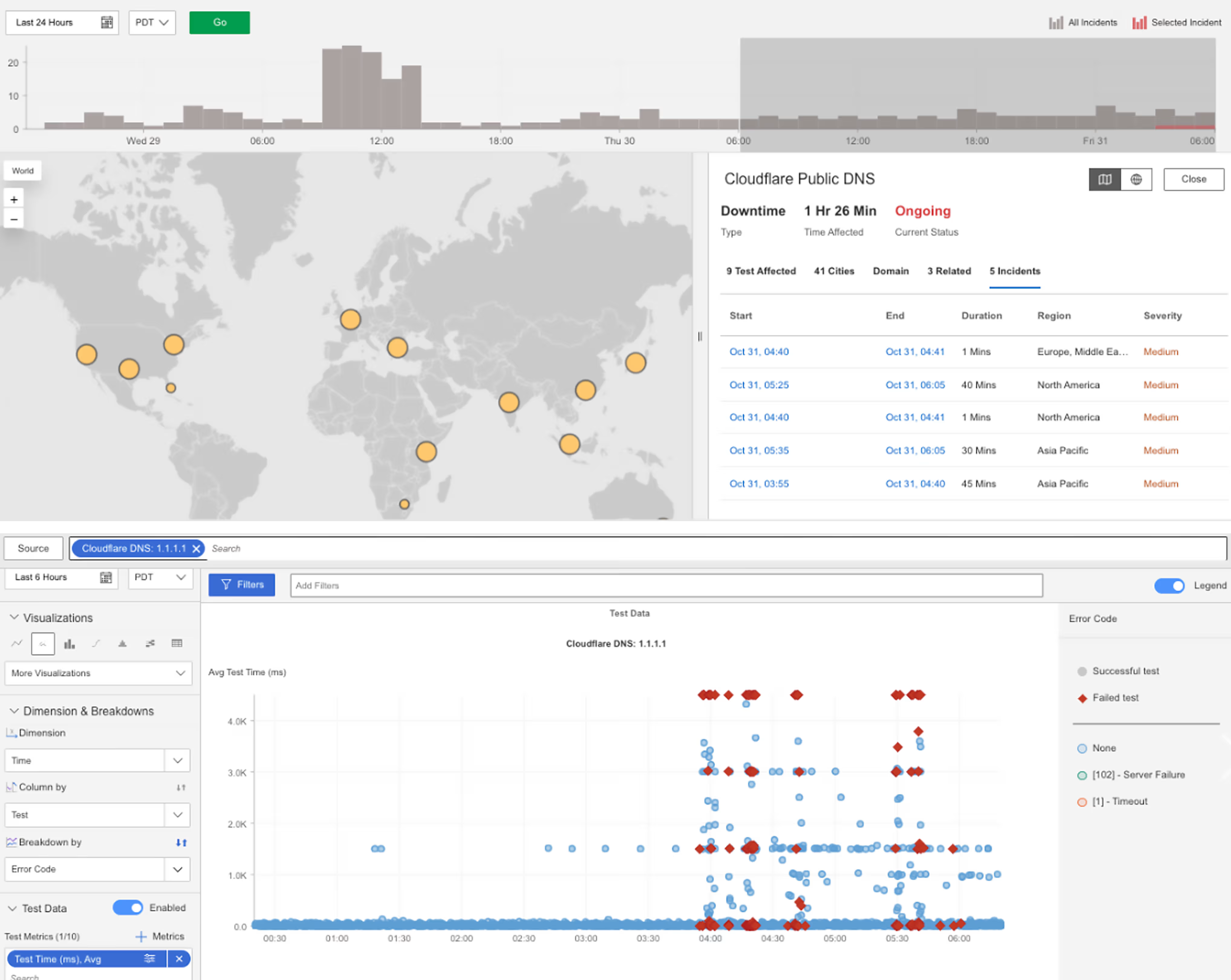

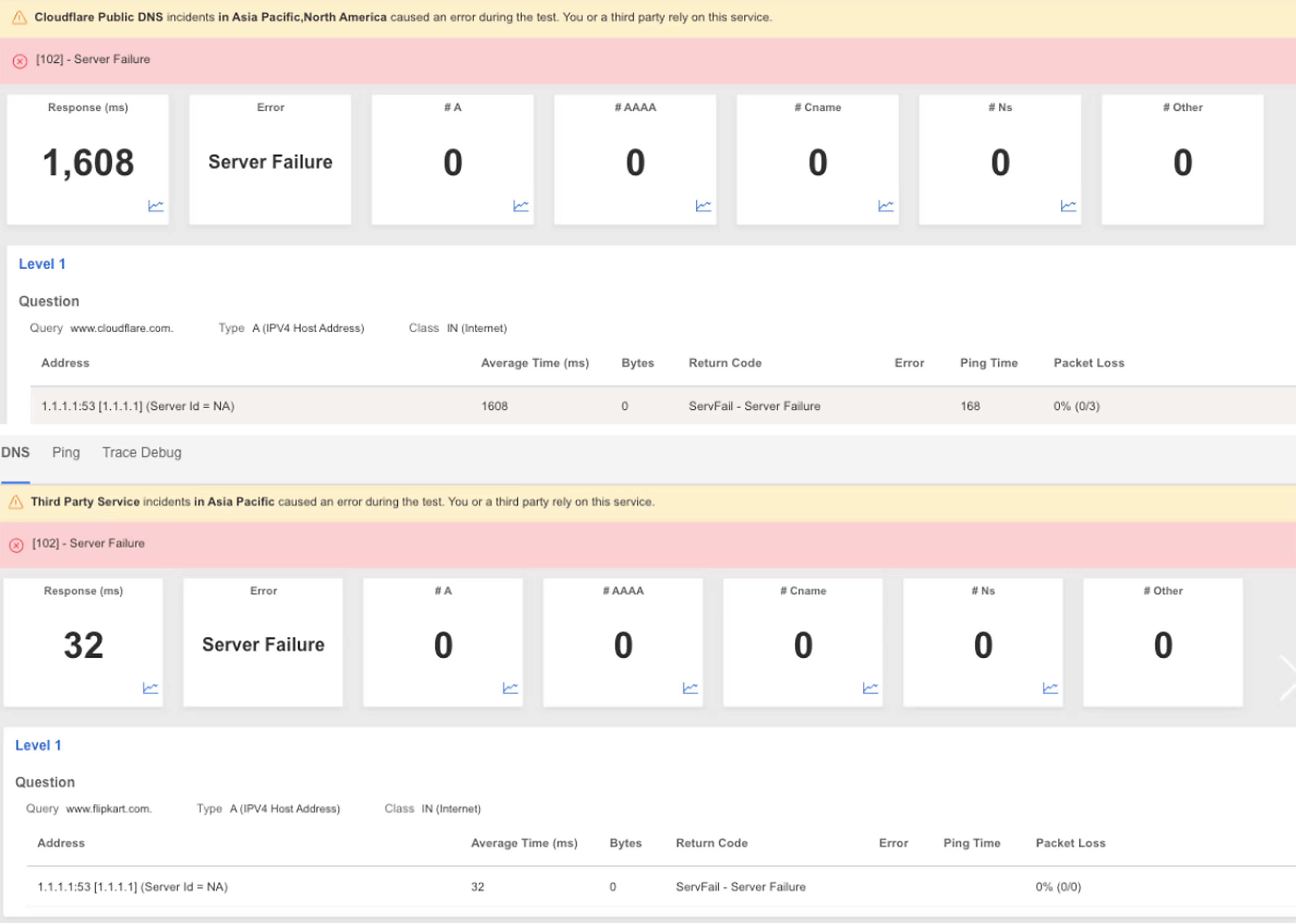

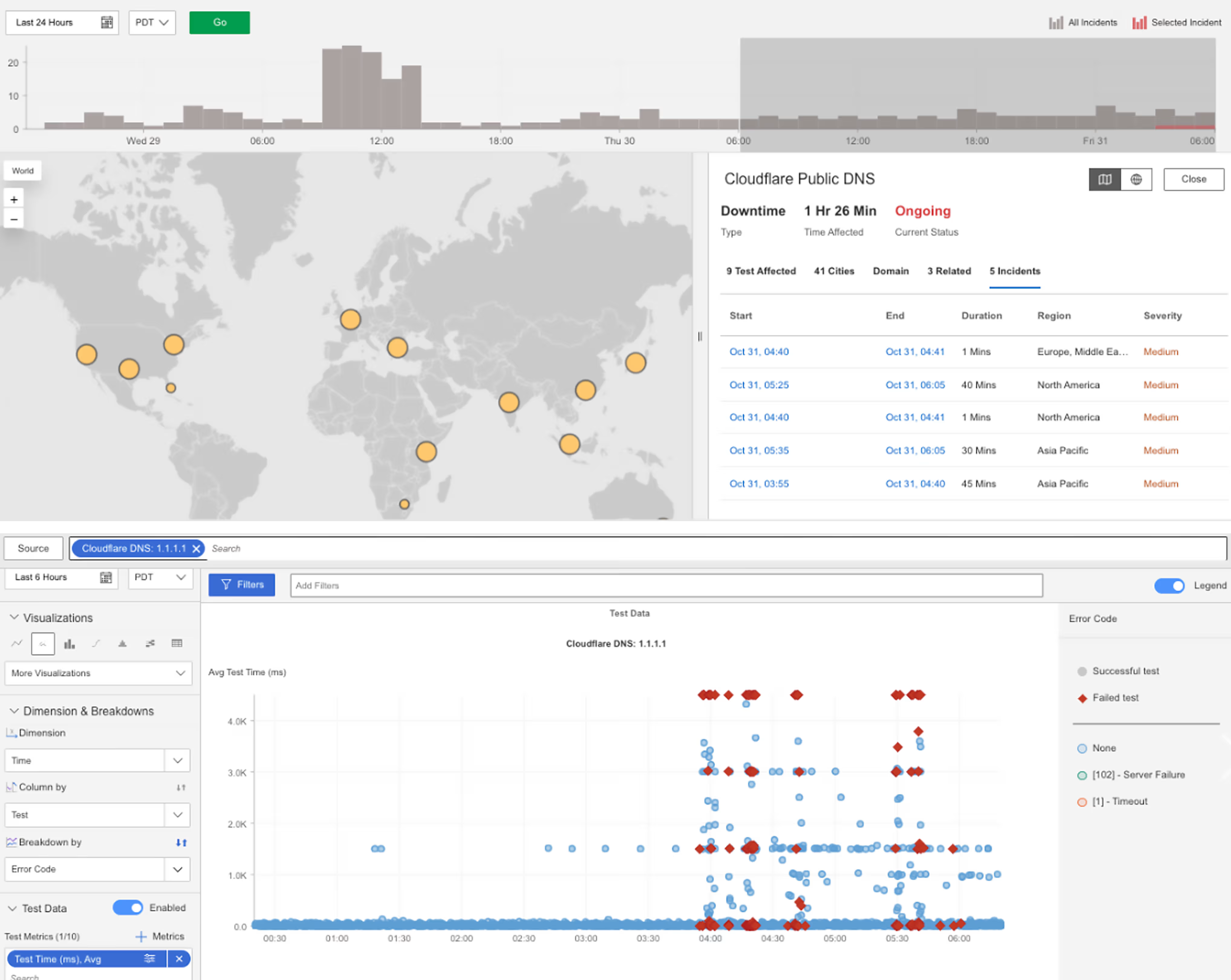

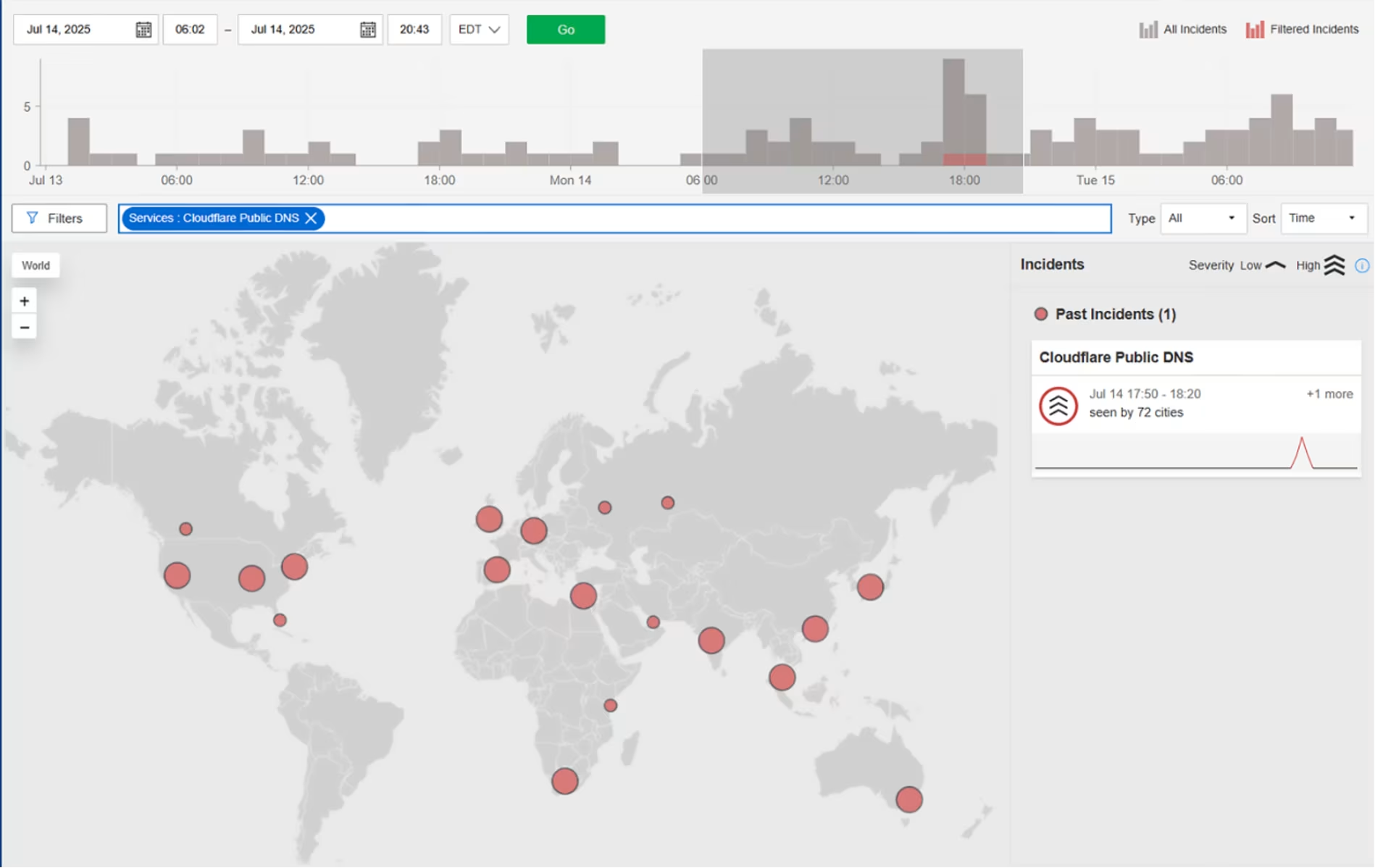

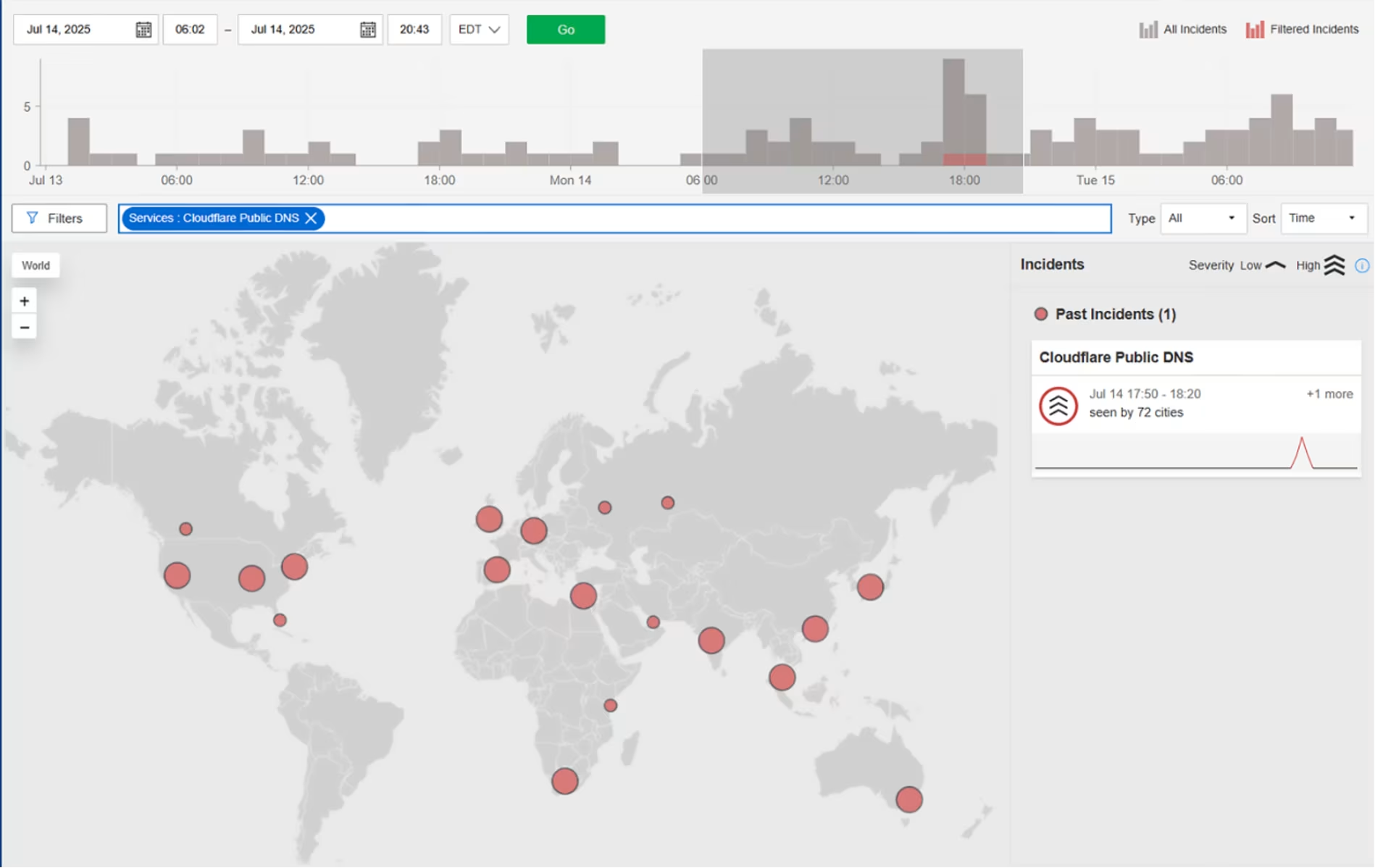

Cloudflare Public DNS

What happened?

On October 31, 2025, at 03:54 PDT, Internet Sonar detected an ongoing outage of the Cloudflare public DNS resolver (1.1.1.1). The issue affected multiple customers worldwide, and investigation showed timeouts and server failures.

Takeaways

Server failures at the recursive resolver level are especially disruptive because they block the very first step of internet communication. Unlike latency spikes or partial application errors, DNS resolver failures cause broad, cross-service impact that can look to end users like "the internet is down." This incident underlines that public recursive DNS services are a high-impact shared dependency, where instability immediately affects many unrelated applications at once. It is important to distinguish resolver timeouts from explicit server failures because they indicate different failure modes (congestion versus internal resolver errors) and help narrow down whether problems stem from capacity constraints, software defects, or failures in upstream dependencies.
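The timeout-versus-server-failure distinction can be sketched as a small classifier over probe results. The example assumes each probe yields either a DNS response code or `None` when no response arrived in time, and that "server failure" corresponds to RCODE 2 (SERVFAIL) as defined in RFC 1035. The function names are hypothetical.

```python
from collections import Counter
from typing import Optional

NOERROR = 0   # RCODE 0 in RFC 1035
SERVFAIL = 2  # RCODE 2 ("Server failure") in RFC 1035


def classify(rcode: Optional[int]) -> str:
    """Map one probe result to a coarse outcome label."""
    if rcode is None:
        return "timeout"       # no response within the probe's deadline
    if rcode == NOERROR:
        return "ok"
    if rcode == SERVFAIL:
        return "servfail"
    return "other_error"


def dominant_dns_failure(rcodes: list[Optional[int]]) -> Optional[str]:
    """Return the most common non-ok outcome, or None if every probe succeeded."""
    counts = Counter(classify(r) for r in rcodes)
    counts.pop("ok", None)
    if not counts:
        return None
    return counts.most_common(1)[0][0]
```

A sample dominated by `"timeout"` leans toward congestion or unreachable resolvers, while one dominated by `"servfail"` leans toward errors inside the resolver or its upstreams.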

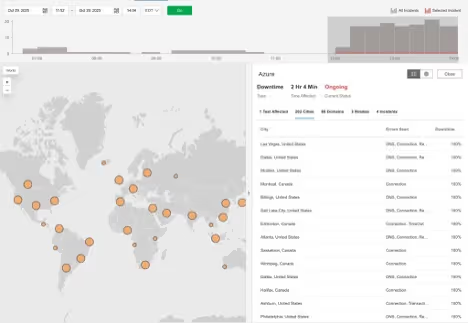

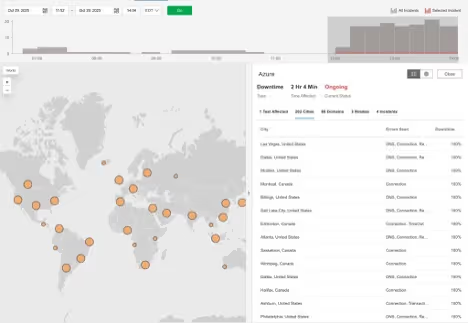

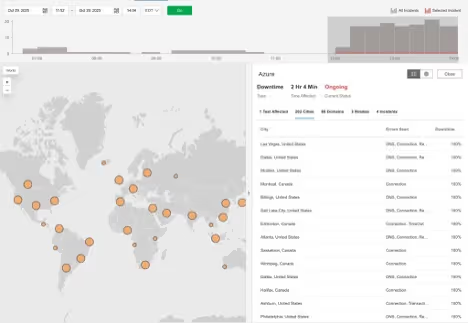

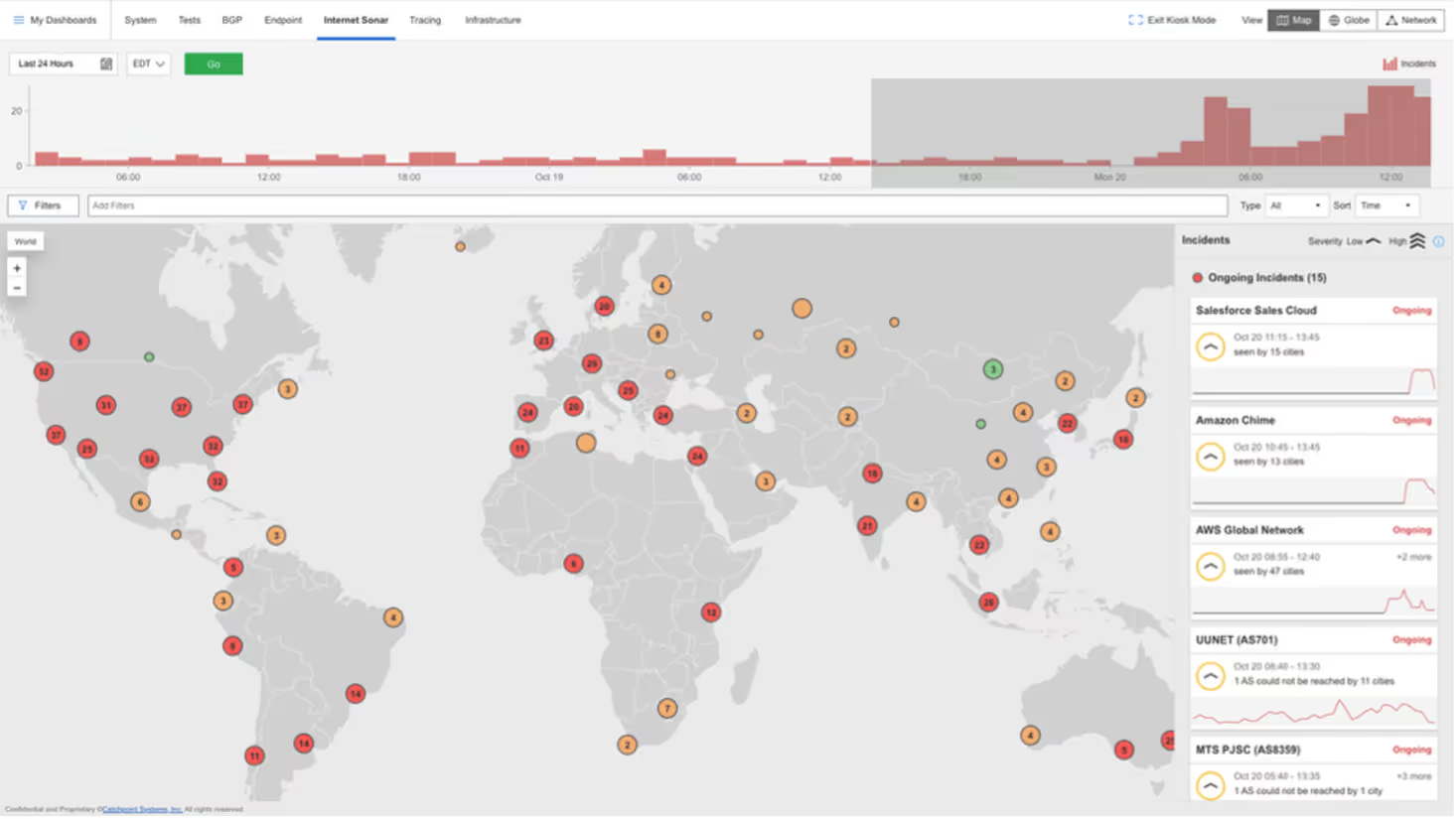

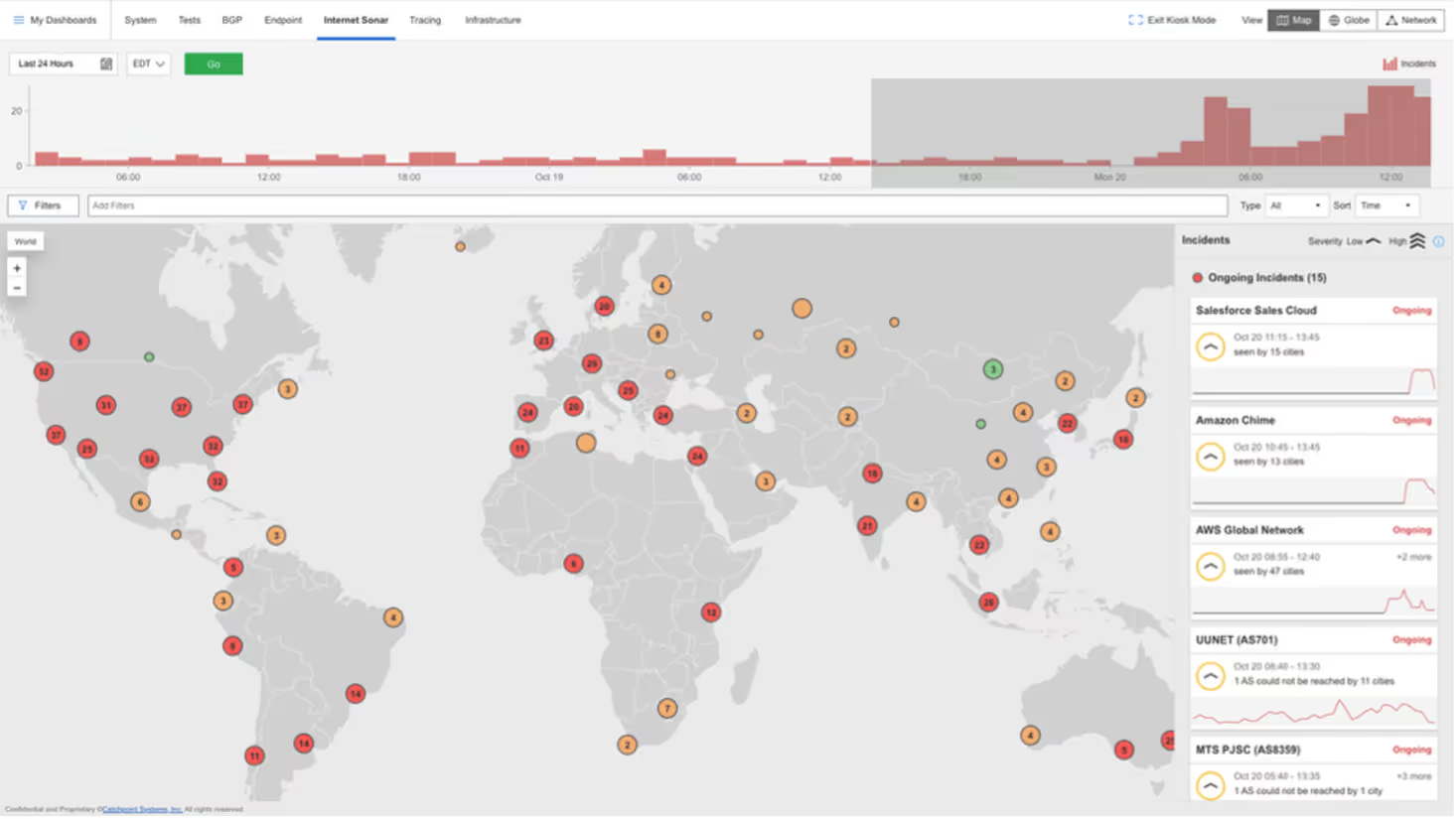

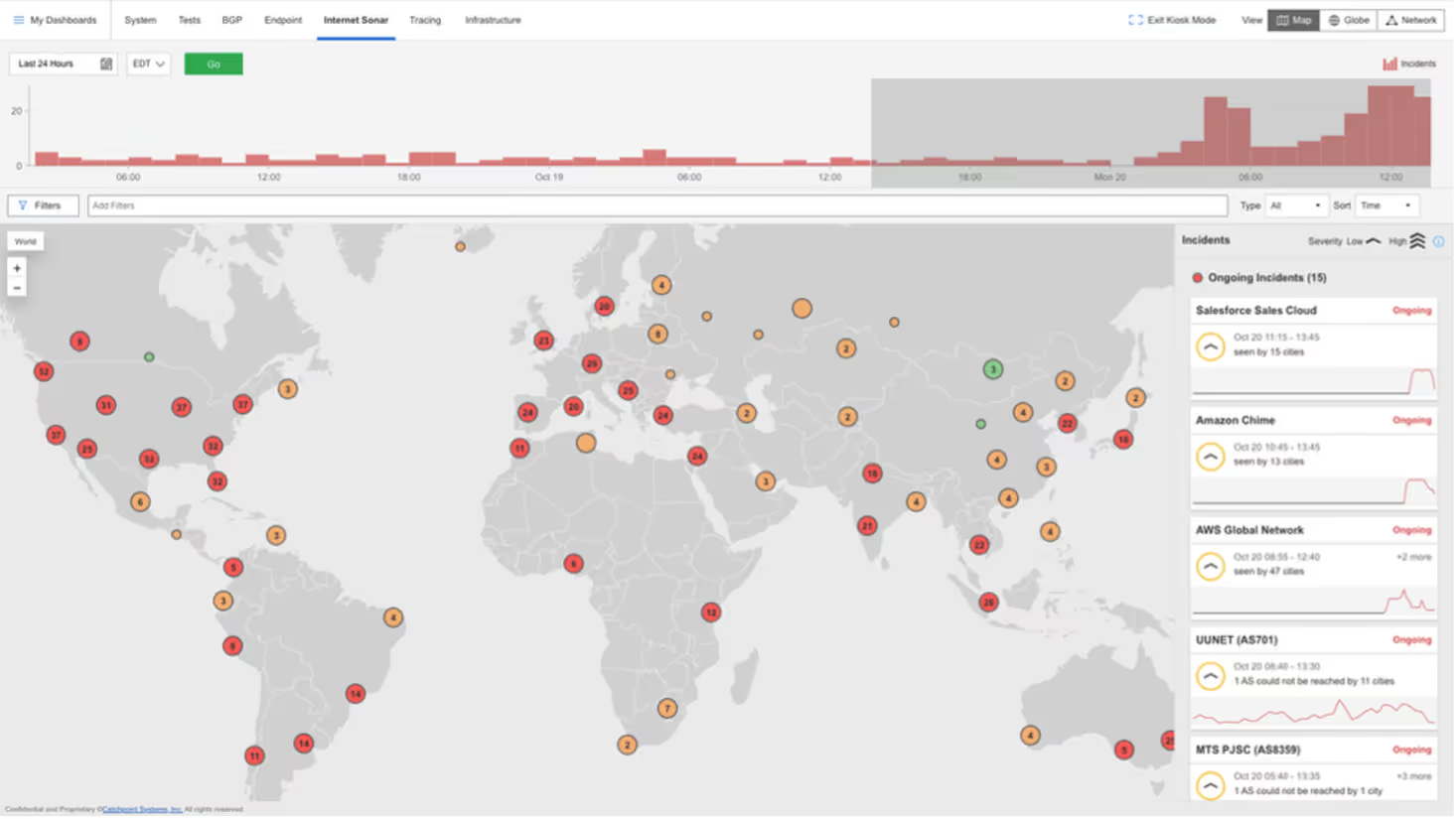

Azure

What happened?

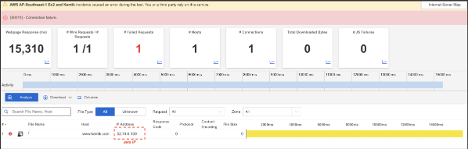

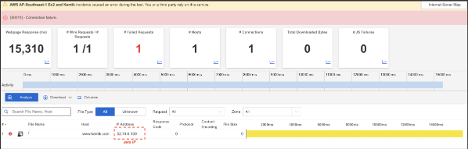

At approximately 11:41 AM EDT, Catchpoint Internet Sonar detected a widespread outage affecting Microsoft Azure services. The disruption caused elevated DNS and connection failures in Azure Front Door (AFD) availability tests in more than 200 cities worldwide. Users and applications that depend on Azure experienced slow response times, failed DNS lookups, and connection timeouts when trying to reach cloud-hosted resources.

Analysis from multiple vantage points showed that reachability of Microsoft's autonomous system (AS) was disrupted, which points to a control-plane or edge-networking problem within Azure's global infrastructure rather than a DNS resolution failure. The outage significantly degraded connectivity for enterprise services, APIs, and web applications that depend on Azure's cloud backbone.

Takeaways

This incident follows closely on the AWS disruption and shows how fragile modern cloud ecosystems remain, and how important independent, global observability is for understanding and mitigating internet-scale outages in real time.

The Azure outage underlines that even top-tier cloud providers are vulnerable to network-level disruptions. Continuous monitoring of the full internet stack, including DNS, BGP routing, and application performance, is critical for detecting and diagnosing outages beyond what the provider's own status updates report. With independent real-time visibility, organizations can determine whether connectivity problems originate in edge networks, control-plane failures, or global routing anomalies, which reduces downtime and improves service resilience.

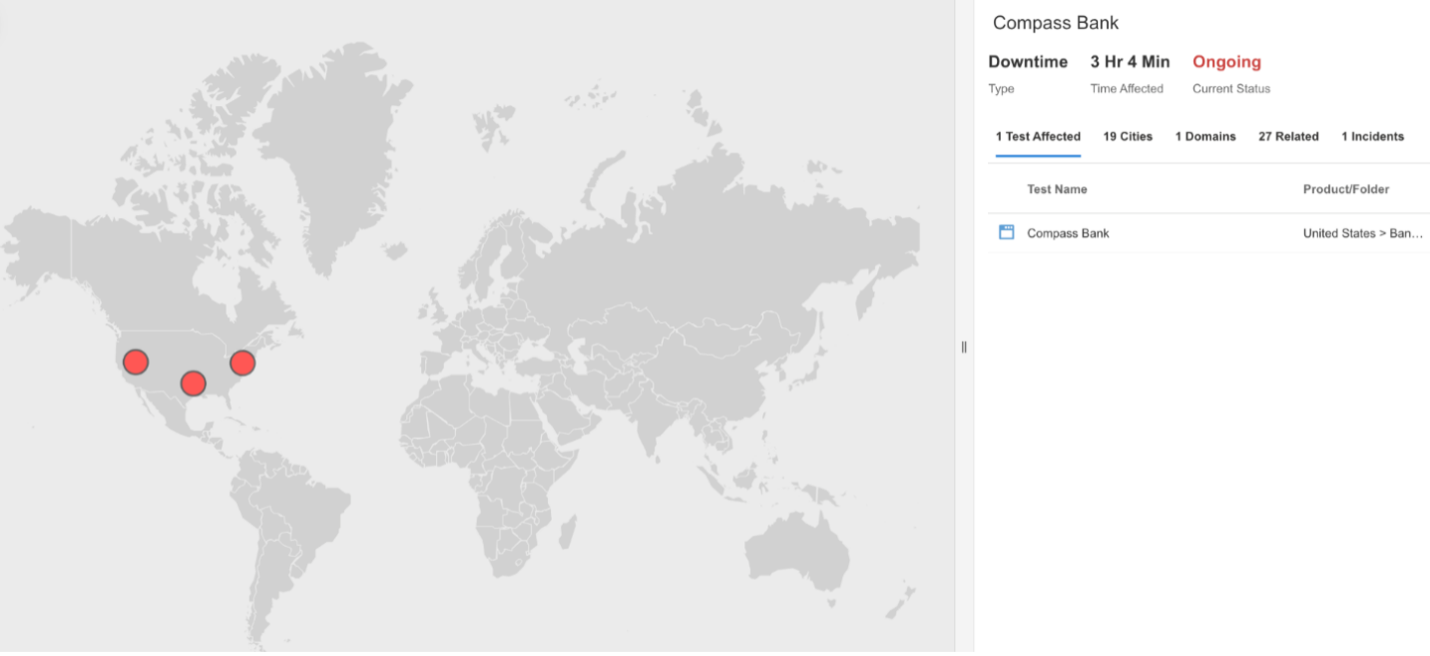

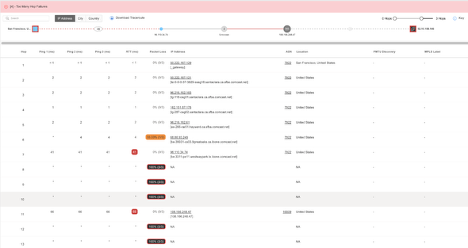

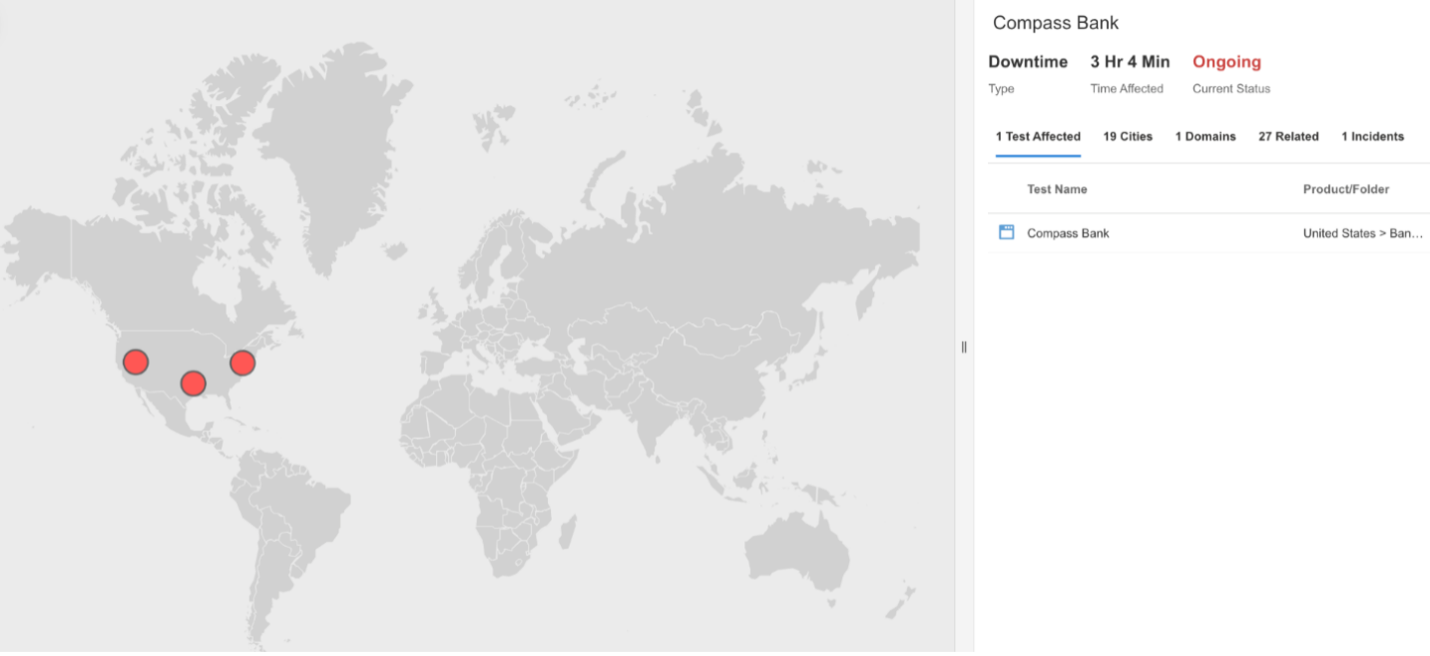

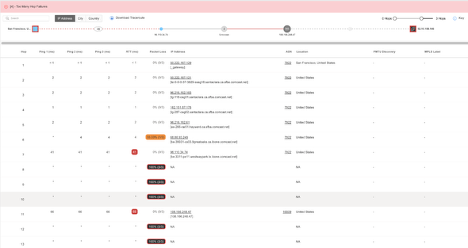

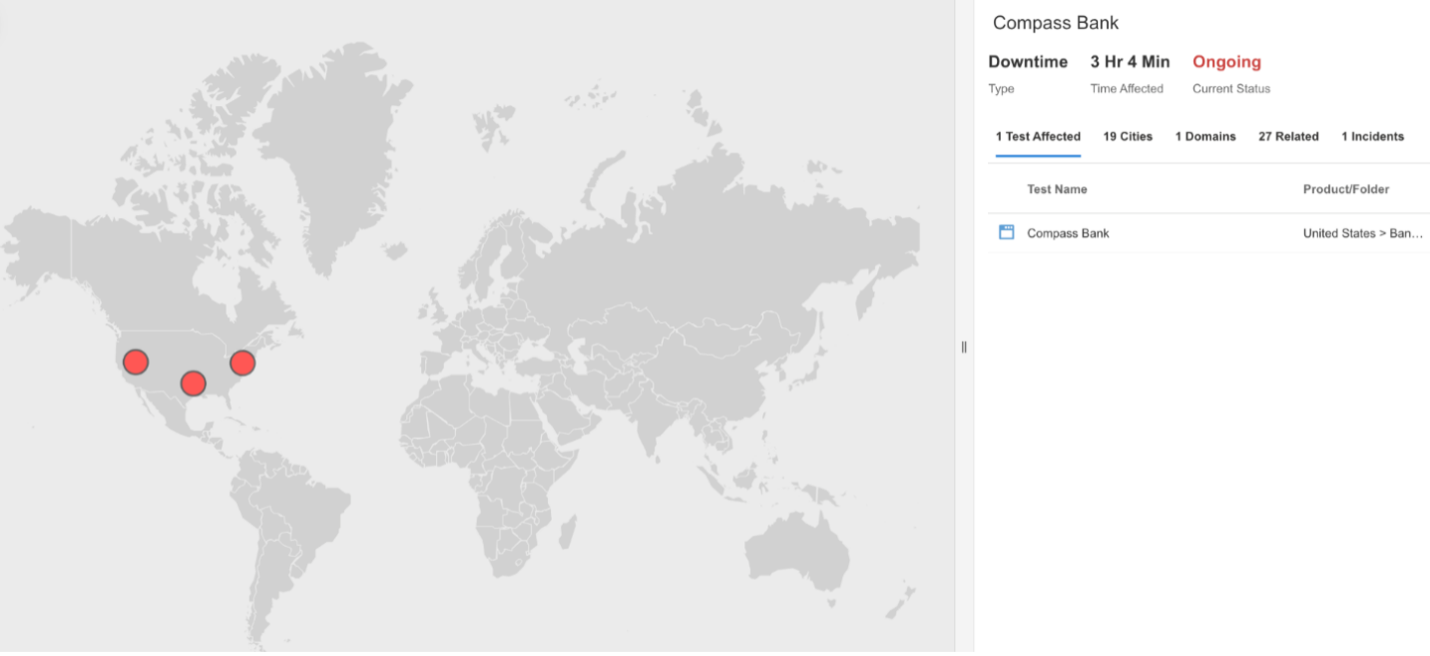

Compass Bank

What happened?

At approximately 1:28 PM EDT, Compass Bank experienced an outage that made its main website temporarily unreachable. During the incident, users could not connect to the Compass Bank website because of connection failures, which suggests that the servers were unreachable due to network or infrastructure problems. Until service was restored, customers could not access online banking functions such as account management, bill payment, and transaction history.

Takeaways

For financial institutions, even short outages can significantly affect customer trust and access to essential services. Monitoring both application availability and the underlying network connectivity helps determine whether disruptions are caused by local routing problems, server failures, or data center outages. Continuous visibility across the internet stack, from DNS to application endpoints, enables faster detection, clearer root-cause identification, and better resilience for critical banking operations.

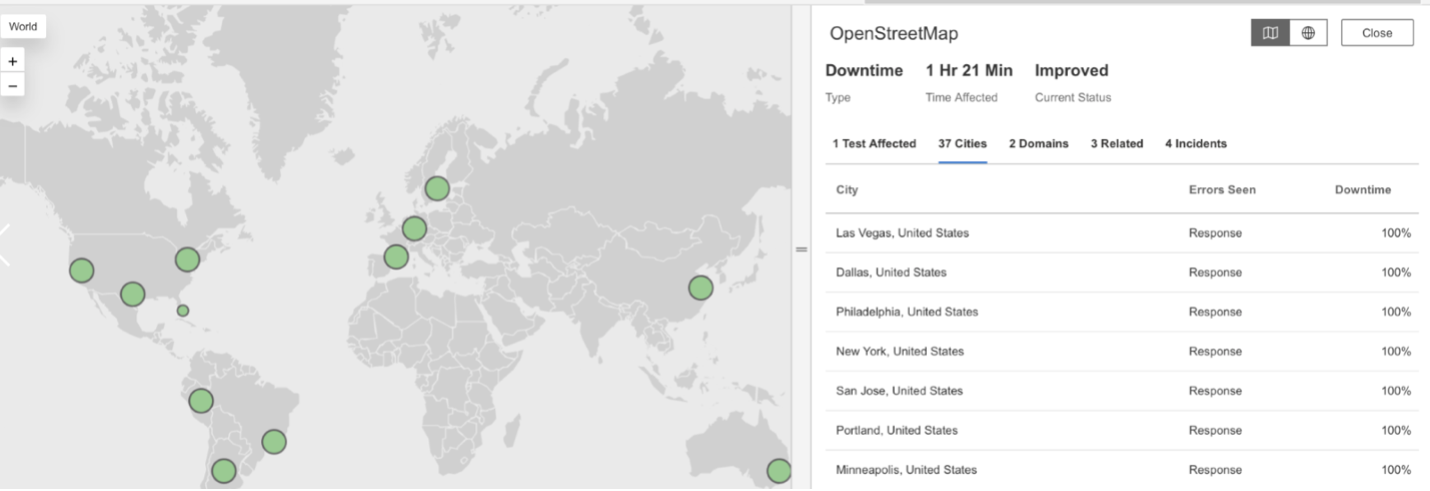

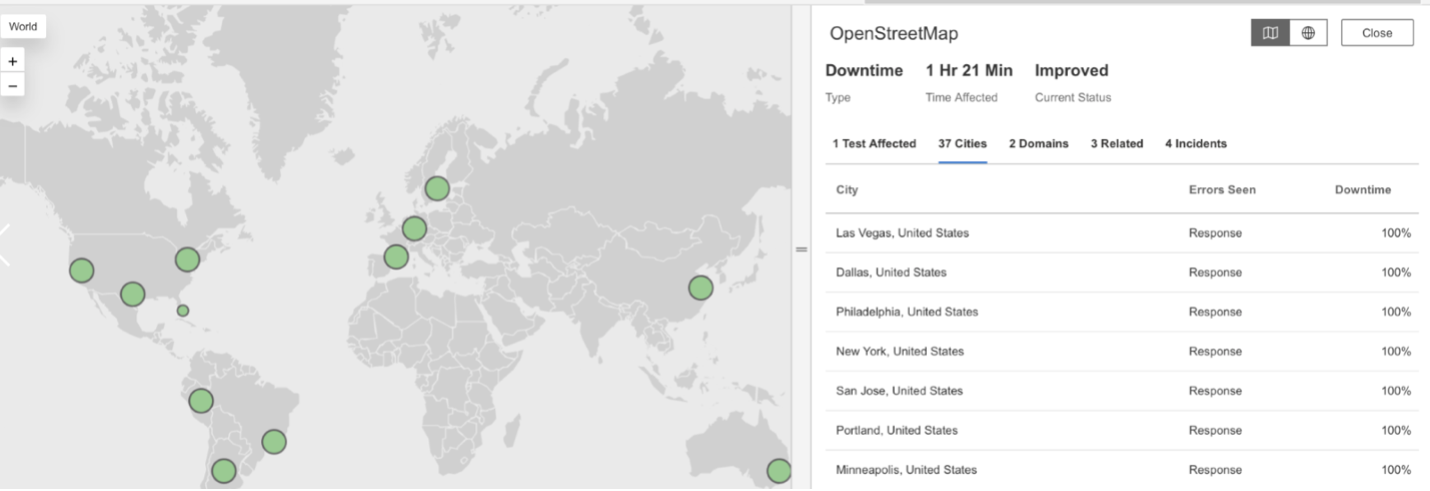

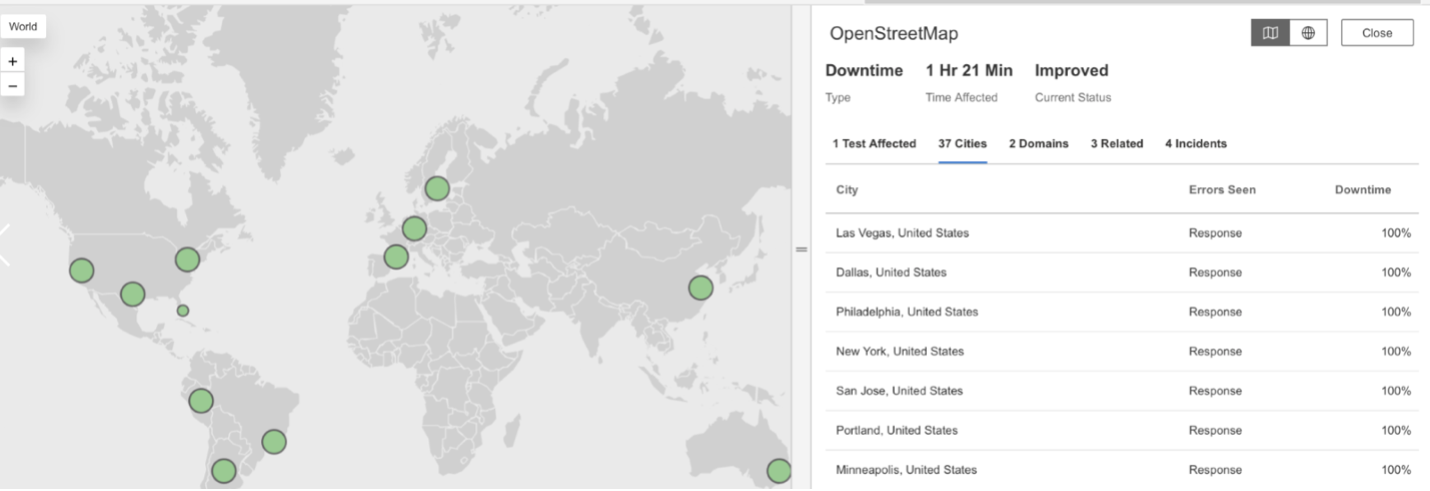

OpenStreetMap

What happened?

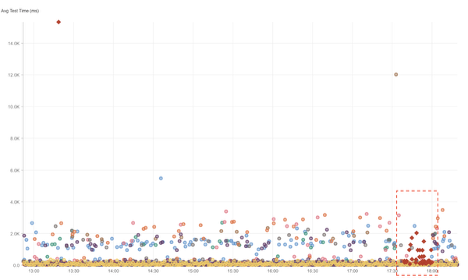

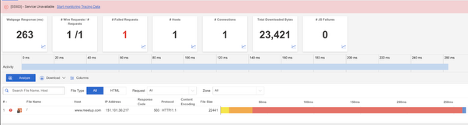

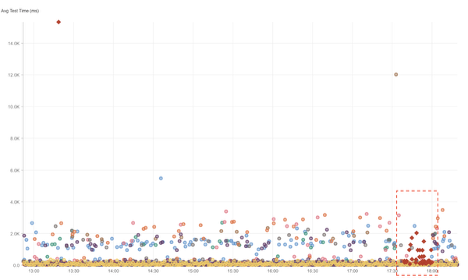

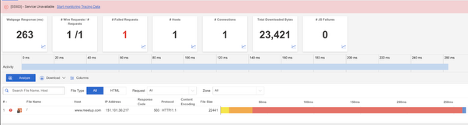

OpenStreetMap experienced two separate service disruptions: first on October 21, 2025, at 20:00 EDT, and again on October 23, 2025, at 16:09 EDT. In both incidents, requests to OpenStreetMap services returned HTTP 503 (Service Unavailable), meaning the servers were temporarily unable to process incoming traffic. During the outages, users likely saw slow map loading or could not access geographic data.

Takeaways

Repeated 503 errors within a short period can indicate server overload or resource exhaustion caused by high user demand or maintenance activity. Continuous global monitoring helps determine whether such outages stem from traffic overload, capacity limits, or upstream infrastructure problems. For widely used mapping and open-data platforms such as OpenStreetMap, monitoring server health and request handling is critical for minimizing downtime and keeping the service reliable for developers and users alike.

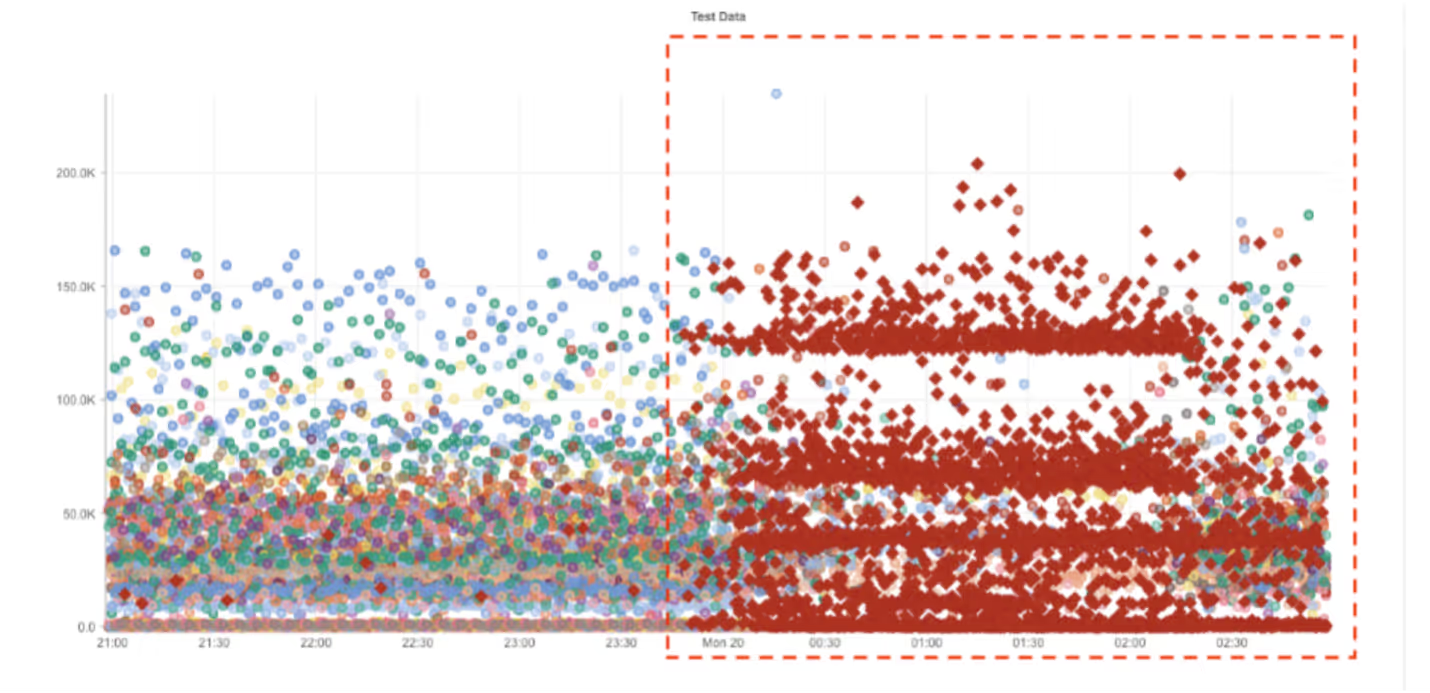

AWS

What happened?

At approximately 06:55 UTC, Catchpoint's Internet Sonar detected a large-scale outage of AWS infrastructure, 16 minutes before AWS officially updated its status page at 07:11 UTC. During the incident, multiple AWS-hosted services, including several Autodesk environments, returned HTTP 500 (Internal Server Error) and HTTP 502 (Bad Gateway) responses.

Takeaways

This outage shows that cascading failures can occur even in the most resilient cloud ecosystems. Independent global monitoring provided detection 16 minutes before AWS's own acknowledgment, which demonstrates the value of external visibility. By continuously observing the full internet stack, teams can pinpoint whether service failures originate in infrastructure layers, network routes, or upstream dependencies, enabling faster detection and mitigation during major incidents.
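The 16-minute figure is simply the gap between external detection (06:55 UTC) and the provider's status update (07:11 UTC). A small sketch of that arithmetic follows; the helper name is hypothetical, and it assumes both timestamps fall on the same day.

```python
from datetime import datetime, timedelta


def detection_lead(detected_hhmm: str, acknowledged_hhmm: str) -> timedelta:
    """Time between external detection and provider acknowledgment (same day)."""
    fmt = "%H:%M"
    detected = datetime.strptime(detected_hhmm, fmt)
    acknowledged = datetime.strptime(acknowledged_hhmm, fmt)
    return acknowledged - detected


lead = detection_lead("06:55", "07:11")
print(lead.total_seconds() / 60)  # 16.0
```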

SAP C4C

What happened?

SAP C4C services experienced a moderate outage affecting users on multiple continents. During the incident, requests to the service returned HTTP 503 (Service Unavailable), an error indicating that the servers were either overloaded or temporarily offline. The disruption likely affected access to CRM tools, sales management dashboards, and customer engagement applications that depend on SAP's cloud infrastructure.

Takeaways

Service-unavailable errors across different regions of the world point to problems in central infrastructure or to high service load. Continuous global monitoring can help distinguish local congestion from platform-wide server overload. Tracking application health and response codes across distributed endpoints enables faster root-cause identification and supports greater resilience for internet and cloud services.

AWS

What happened?

At approximately 4:02 PM EDT, AWS experienced an outage affecting the AP-Southeast-1 (Singapore) region and degrading EC2 (Elastic Compute Cloud) services. The incident affected several dependent platforms, including Kentik, Kasada, and Flowise. During the outage, the affected systems returned a mix of HTTP 502 (Bad Gateway), HTTP 504 (Gateway Timeout), and HTTP 500 (Internal Server Error) responses, indicating failed communication between services, unresponsive servers, and internal processing errors. Users experienced dropped connections and slow response times until normal service was restored.

Takeaways

This event illustrates how regional cloud outages can affect multiple dependent applications. Monitoring both the cloud infrastructure and the application layers helps determine whether disruptions stem from failed compute instances, overloaded gateways, or dependencies on upstream services. Full internet stack visibility supports faster diagnosis during multi-service incidents, improves resilience, and minimizes downtime for platforms hosted on large cloud providers such as AWS.

Azure

What happened?

At approximately 3:45 AM EDT, Microsoft Azure experienced an outage that degraded access to multiple services in multiple regions. During the event, requests to Azure content delivery and edge network endpoints, such as azureedge.net and azurefd.net, were not answered properly. The disruption likely affected applications that depend on Azure Front Door and CDN services, resulting in slow load times or unavailable web resources.

Takeaways

Edge-layer outages show how failures in global content delivery or traffic steering can disrupt dependent cloud applications. Monitoring the entire internet delivery chain, from DNS resolution and CDN behavior through to backend responses, helps determine whether the problem lies in edge caching, upstream servers, or network routing. Maintaining end-to-end visibility across a multi-region cloud infrastructure enables faster detection, more accurate diagnosis, and quicker recovery when outages occur.

Microsoft Office

What happened?

At approximately 5:21 PM EDT, Microsoft Office experienced a widespread outage affecting users on multiple continents. During the incident, requests to Office services returned HTTP 503 (Service Unavailable) responses, which occur when servers are too busy to handle new requests or are temporarily offline. The problem likely interrupted access to key productivity tools such as document editing, email, and cloud file storage.

Takeaways

Service-unavailable errors often indicate capacity or maintenance problems in large distributed systems. Continuous global monitoring helps determine whether 503 responses come from overloaded application servers, misconfigured load balancers, or temporary service throttling. Monitoring the full internet delivery path, from the client through DNS to the cloud infrastructure, provides important insight into performance degradation and helps teams restore availability faster.

X Corp. (Twitter)

What happened?

At approximately 4:10 AM EDT, X/Twitter experienced a brief service disruption affecting users across the EMEA region. During the outage, requests to the platform were delayed by high wait times and missing server responses. As a result, feeds loaded slowly or users were temporarily unable to access the site until normal operation resumed.

Takeaways

Timeout problems often point to network congestion or unresponsive backend servers. Monitoring end-to-end connection health, from DNS resolution to the application response, helps identify where latency builds up along the delivery chain. For global platforms such as X, continuous visibility across multiple countries helps ensure that local disruptions are detected and isolated quickly, before they affect a broader user base.

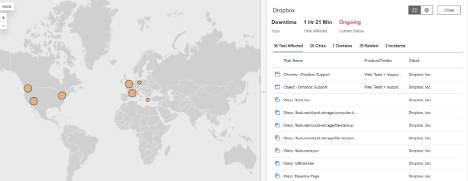

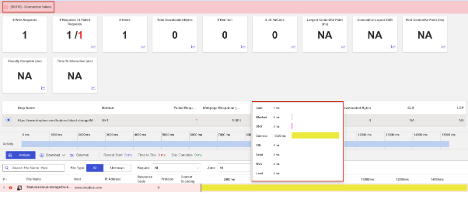

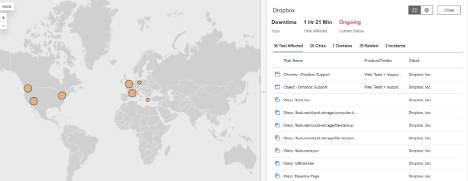

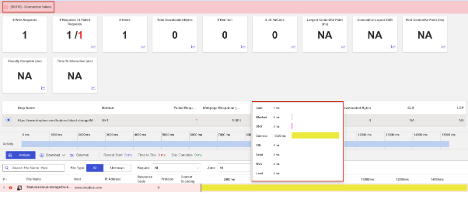

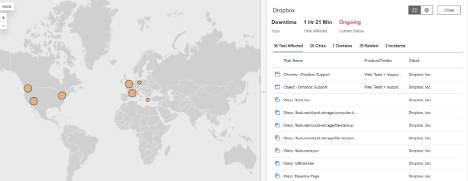

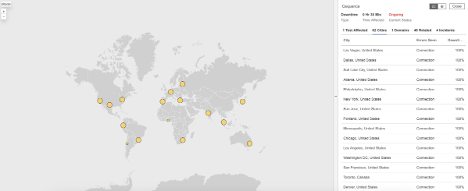

Dropbox

What happened?

At approximately 2:45 AM EDT, Dropbox experienced a minor service disruption affecting users in multiple regions. During the incident, connections to Dropbox's main service endpoint failed because of high connection times, meaning that users' devices took too long to establish a stable connection with Dropbox servers. The problem likely caused brief delays or temporary unavailability for file uploads, downloads, and account access.

Takeaways

Even small-scale outages caused by long connection times can hurt the user experience, especially for services that transfer large volumes of data. Continuous monitoring of network latency and connection performance helps determine whether delays stem from local congestion, ISP routing, or upstream infrastructure problems. Visibility across global endpoints lets teams detect and fix small problems before they grow into widespread disruptions.

Braintree

What happened?

At approximately 8:00 PM EDT, Braintree experienced a service outage that affected payment processing across North America. During the incident, requests to Braintree's web and API endpoints returned HTTP 503 (Service Unavailable) errors, indicating that the servers were either overloaded or temporarily taken offline for maintenance. The disruption likely prevented merchants from processing transactions or accessing their dashboards during the event.

Takeaways

Payment systems depend on continuous uptime to maintain customer trust and transaction flow. Monitoring API availability and response times helps detect signs of overload or maintenance misconfiguration early, before they lead to service-wide outages. Internet performance monitoring that covers DNS, application servers, and network connectivity can show where in the chain performance degrades and help teams minimize downtime and protect critical financial operations.

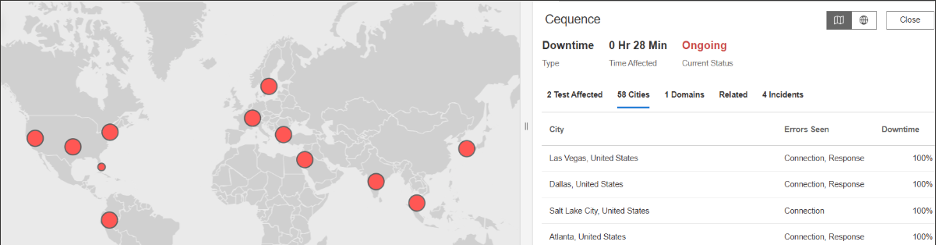

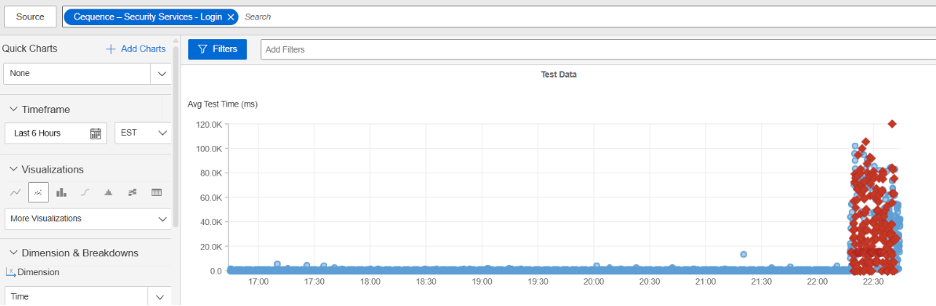

Cequence

What happened?

At approximately 11:20 PM EDT, Cequence experienced a global outage that affected user access on multiple continents. During the incident, requests to Cequence's login services returned HTTP 504 (Gateway Timeout) errors, meaning the gateway or proxy server did not receive a timely response from an upstream system. The problem was associated with high connection times that caused requests to fail. The disruption lasted about 30 minutes, and normal functionality resumed at 11:50 PM EDT.

Takeaways

Global outages like this one show how latency and timeout problems in upstream systems can have effects across regions. Monitoring connection times and response behavior across the full internet stack, from client to application, helps identify where delays occur and which systems are most affected. Comprehensive visibility lets teams distinguish server-side slowdowns, network congestion, and external dependencies, and so improve overall service resilience.

Meetup

What happened?

At approximately 5:39 PM EDT, Meetup experienced a major outage affecting users in the United States. During the incident, requests to the service returned HTTP 503 (Service Unavailable) errors, which occur when a server is temporarily unable to handle requests, often because of overload or maintenance. The disruption lasted about 20 minutes, with normal access restored at 5:59 PM EDT. During this period, users could not view or manage events, RSVP, or log in to their accounts.

Takeaways

Short but high-impact outages like this one show how quickly application-level failures can affect user trust and engagement. Synthetic monitoring from multiple locations can help determine whether 503 errors stem from overloaded servers, failed backend dependencies, or temporary maintenance problems. Maintaining insight into server health and response behavior supports faster remediation and stronger internet resilience for community-driven platforms such as Meetup.

LaunchDarkly

What happened?

At approximately 10:55 AM EDT, LaunchDarkly experienced a cross-region outage that interrupted access to key services. Users encountered a mix of connection timeouts, HTTP 502 (Bad Gateway), and HTTP 504 (Gateway Timeout) errors, indicating that servers either did not respond in time or returned invalid responses. This impaired the platform's ability to deliver feature flag updates and event tracking in real time, affecting software teams that rely on LaunchDarkly for continuous delivery and feature control.

Takeaways

This outage shows how important it is to monitor both the application endpoints and the network paths that support them. When several error types appear at the same time, it often points to problems between load balancers, upstream servers, or edge locations. End-to-end internet visibility helps determine whether slow responses, timeouts, or upstream errors originate in the provider's infrastructure or in the wider internet, which speeds up root-cause analysis and recovery.

September

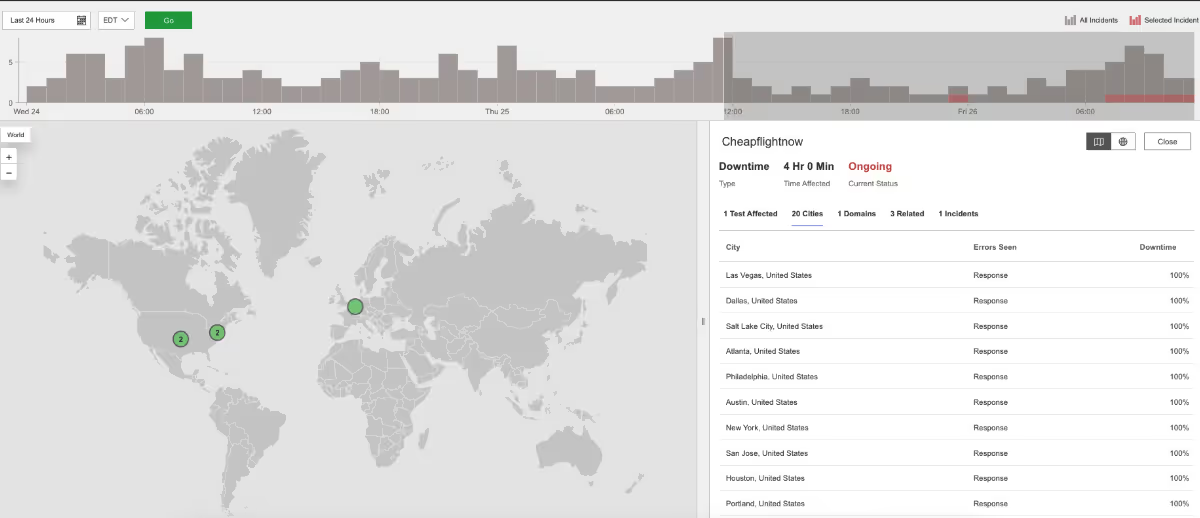

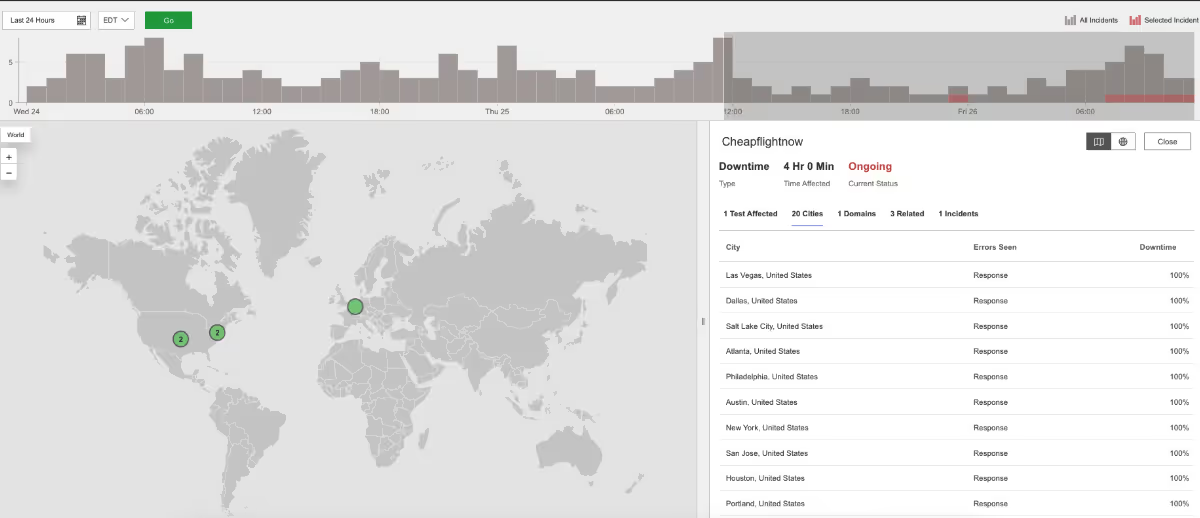

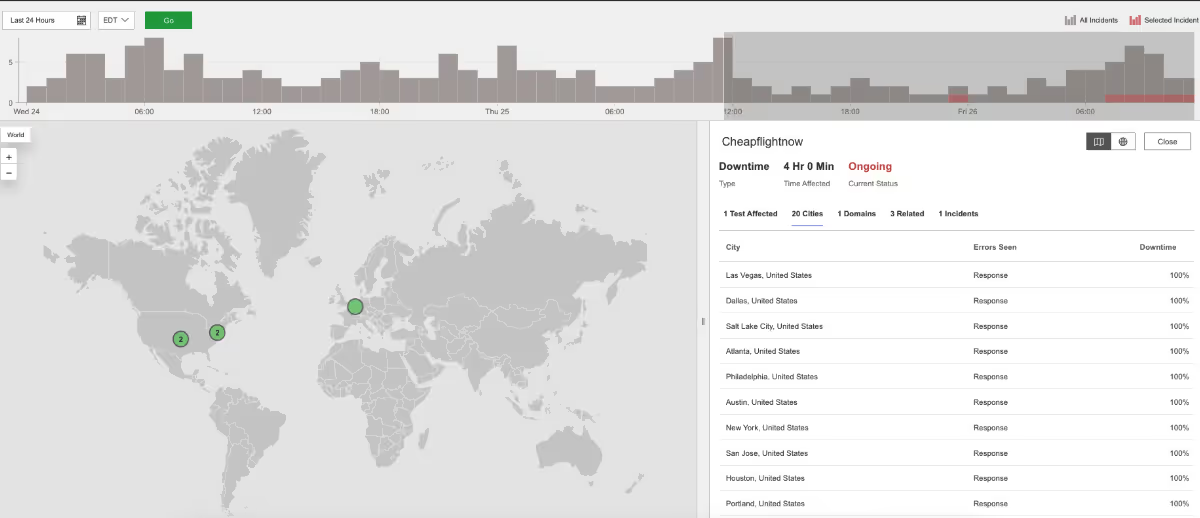

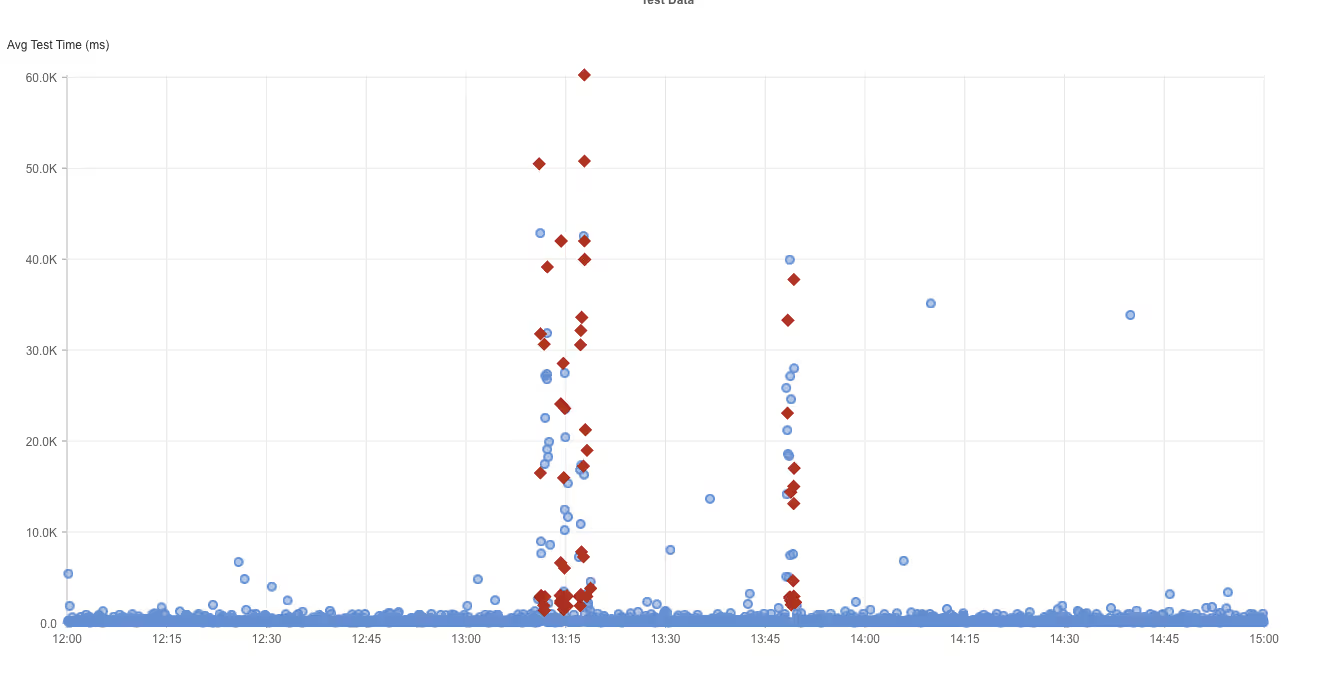

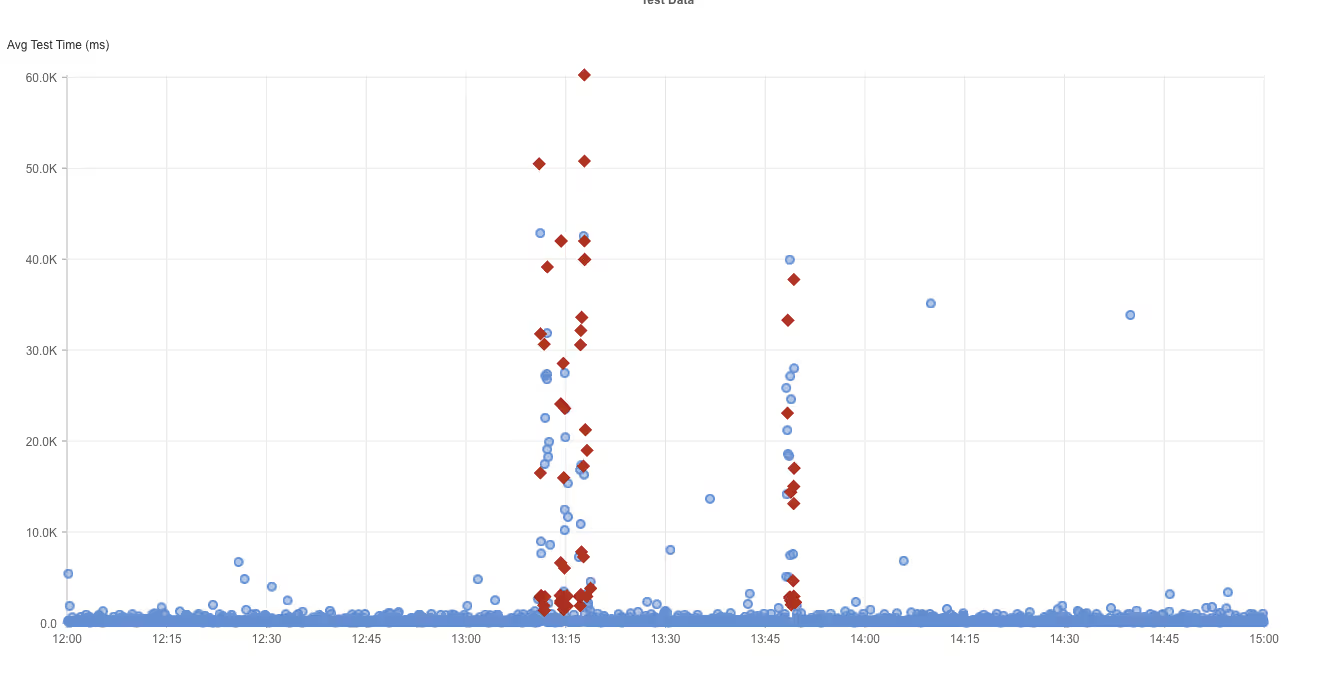

Cheapflightnow

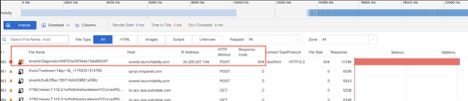

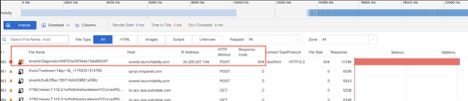

What happened?

At 7:34 AM EDT, the Cheapflightnow website stopped working in parts of the USA. Visitors saw HTTP 522 errors, which are specific to Cloudflare. This means Cloudflare was working but could not connect to Cheapflightnow's own servers, which were either down or too slow to respond.

Takeaways

Cloudflare's global network stayed up, but because Cheapflightnow's own servers did not respond, users still experienced an outage. To guard against this, companies often set up backup origin servers (additional copies of their main servers) so that traffic can be redirected to another server when one fails. The outage also shows how dependencies between CDNs and origin servers can create weak points. By monitoring the full internet stack, from DNS through the CDN to the application servers, companies can quickly see where the chain breaks and reduce downtime.
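The backup-origin idea can be sketched as an ordered failover loop. The example below is a generic illustration, not Cloudflare's or Cheapflightnow's actual configuration: the `fetch` callable stands in for whatever HTTP client is used, and the rule "any status below 500 counts as served" is an assumption made for the sketch.

```python
from typing import Callable, Sequence


def fetch_with_failover(
    origins: Sequence[str],
    fetch: Callable[[str], int],
) -> tuple[str, int]:
    """Try each origin in order and return the first (origin, status) below 500."""
    for origin in origins:
        try:
            status = fetch(origin)
        except ConnectionError:
            continue  # origin unreachable: move on to the next one
        if status < 500:
            return origin, status
    raise RuntimeError("all origins failed")
```

In practice this decision usually lives in the CDN or load balancer rather than in application code, but the ordering logic is the same: a healthy secondary origin turns an origin failure into a slower request instead of a user-visible error.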

Alaska Airlines

What happened?

At 10:27 PM EDT, the main Alaska Airlines website, www.alaskaair.com, became unreachable in many major US cities. Users saw HTTP 503 Service Unavailable and HTTP 500 Internal Server Error messages. A 503 error means the server is too busy or is down for maintenance, while a 500 error is a generic message that something went wrong inside the system.

Takeaways

When 500 and 503 errors appear together, it often means the problem is not just a single overloaded server but a deeper, system-wide issue. For airlines, outages can delay flight bookings and check-ins. Implementing proactive Internet Performance Monitoring (IPM) and building strong backup systems is therefore critical for smooth operations.

Microsoft Office

What happened?

Between 1:10 PM and 1:51 PM EDT, the Microsoft Office website, www.office.com, stopped working in several countries. During the outage, requests returned HTTP 503 (Service Unavailable).

Takeaways

This outage was likely caused by a temporary server overload or a configuration error. The quick recovery suggests that Microsoft's backup systems kicked in and restored service. The concern is that Microsoft Office is used by millions of people, so even a brief outage has wide reach. Proactive Internet Performance Monitoring (IPM) ensures that even short disruptions are detected, measured, and understood, and helps IT teams validate resilience and improve the user experience.

SAP C4C

What happened?

At 11:08 AM EDT, users of SAP C4C (a cloud-based customer management tool) were unable to access the service worldwide. Requests to ondemand.com returned HTTP 503 Service Unavailable errors, meaning the servers were unable to process the traffic.

Takeaways

Because the outage affected so many regions at once, it was likely caused by a central problem in the cloud infrastructure. Global SaaS services depend on load balancing to distribute traffic and keep performance stable. With synthetic monitoring across multiple regions, companies can confirm availability worldwide and make sure their customers are not locked out.

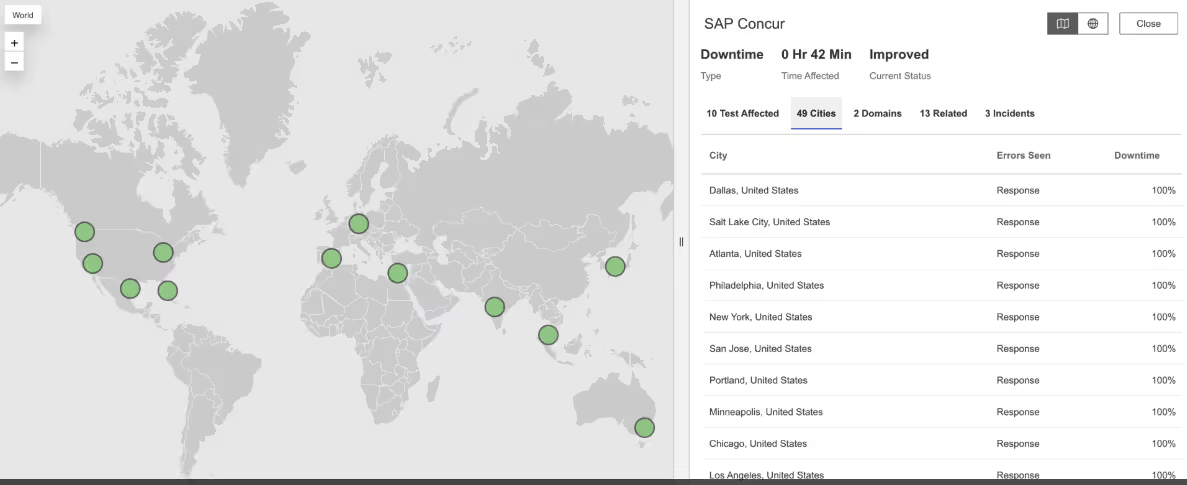

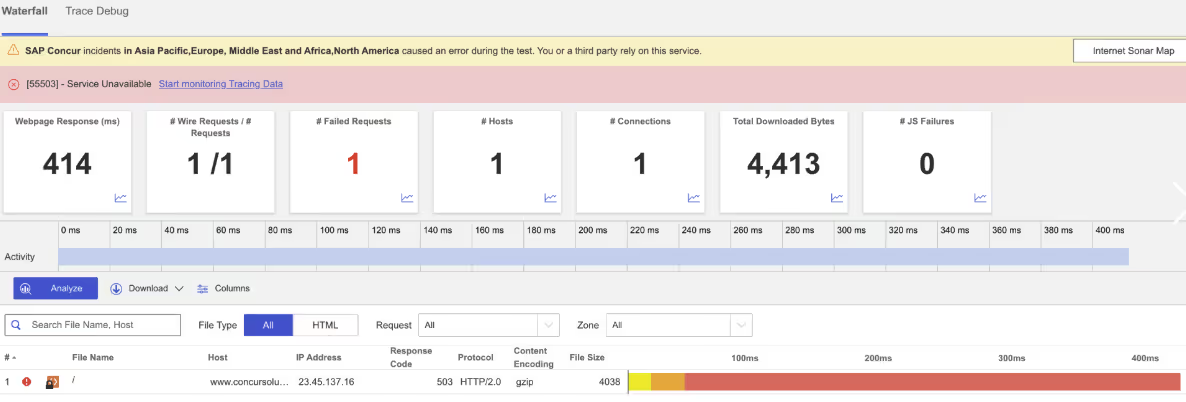

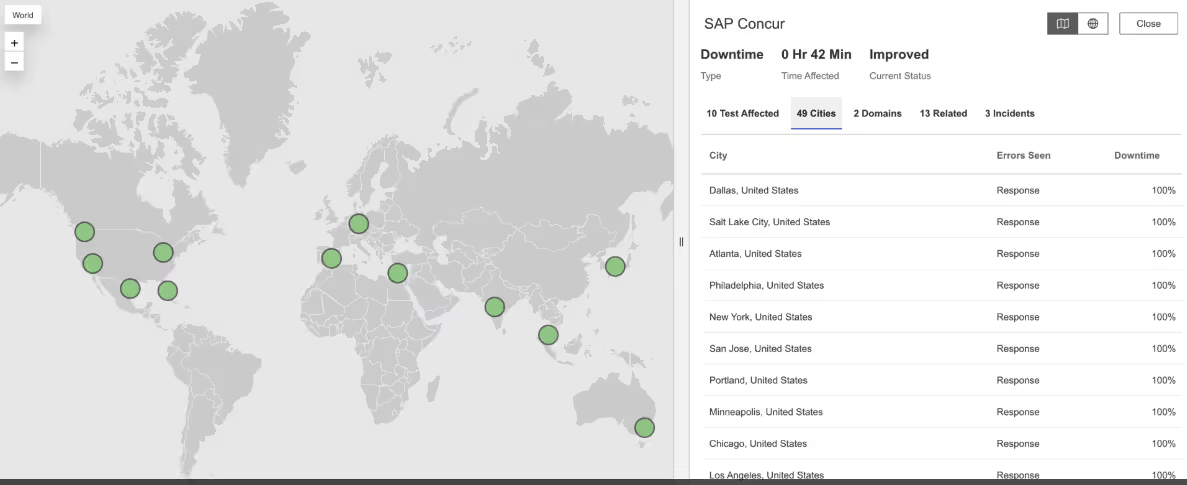

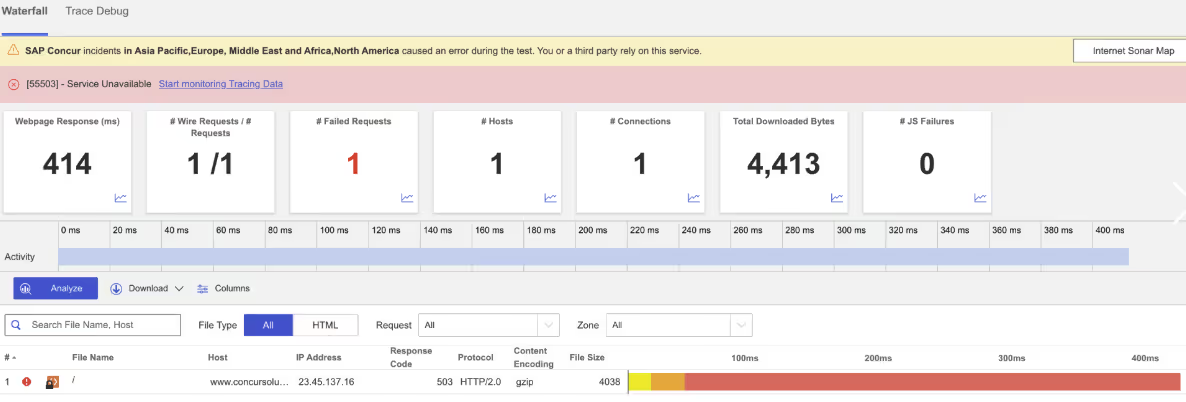

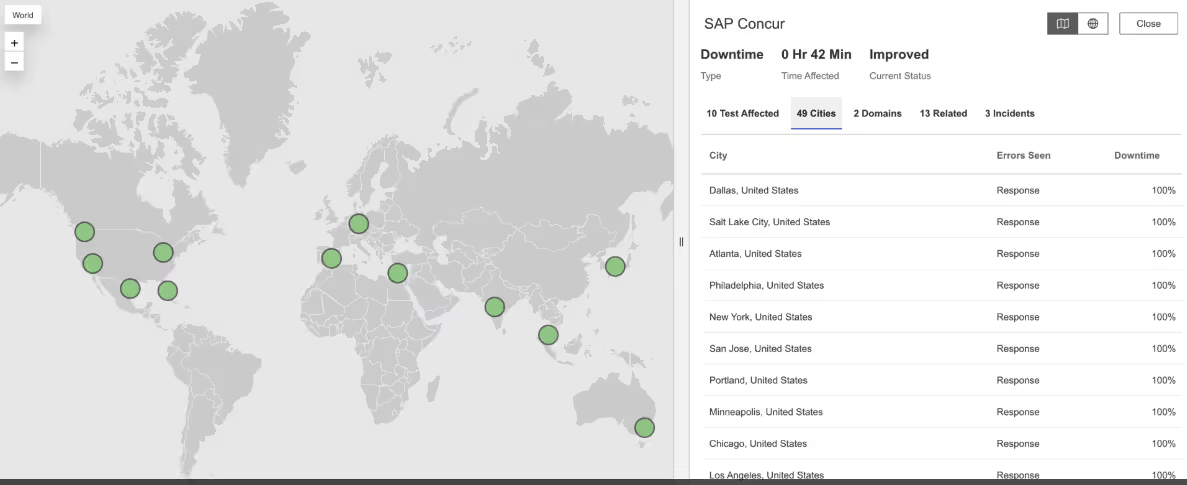

SAP Concur

What happened?

From 3:39 PM to 3:51 PM EDT, SAP Concur, which is used to manage business travel and expenses, went down in several regions. Attempts to connect to www.concursolutions.com returned HTTP 503 Service Unavailable errors.

Takeaways

Although this outage was brief, the 503 errors meant that servers could not process requests. For enterprise applications such as Concur, even a few minutes of downtime can disrupt financial operations. End-to-end monitoring, from DNS resolution to application response, helps companies verify reliability and spot weak points before they turn into visible outages.

Aleph Alpha

What happened?

At 4:20 AM EDT, the Aleph Alpha website had problems in several countries. Users faced dropped connections and very slow load times, meaning their browsers could not establish a stable connection to the servers.

Takeaways

Slow connections and failures can point to DNS problems, routing problems, or server overload. For AI providers, these disruptions undermine reliability. Monitoring DNS and BGP (the internet's routing system) makes it possible to determine quickly whether failures originate at the network layer or on the servers themselves.

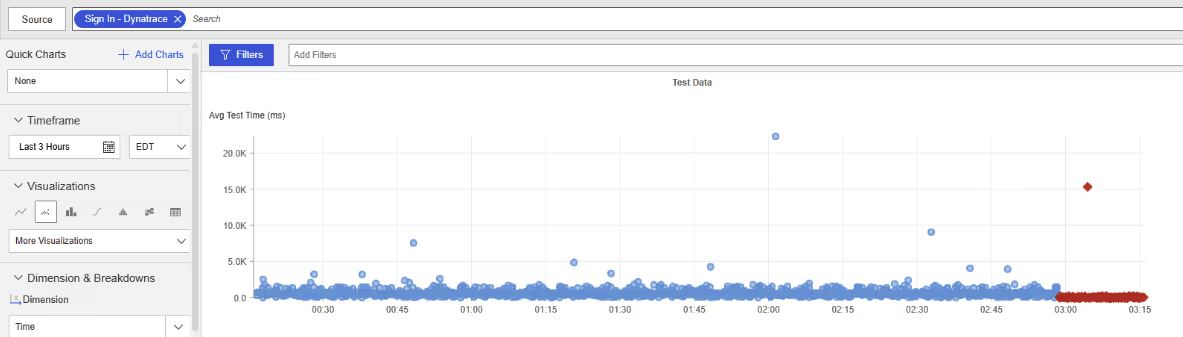

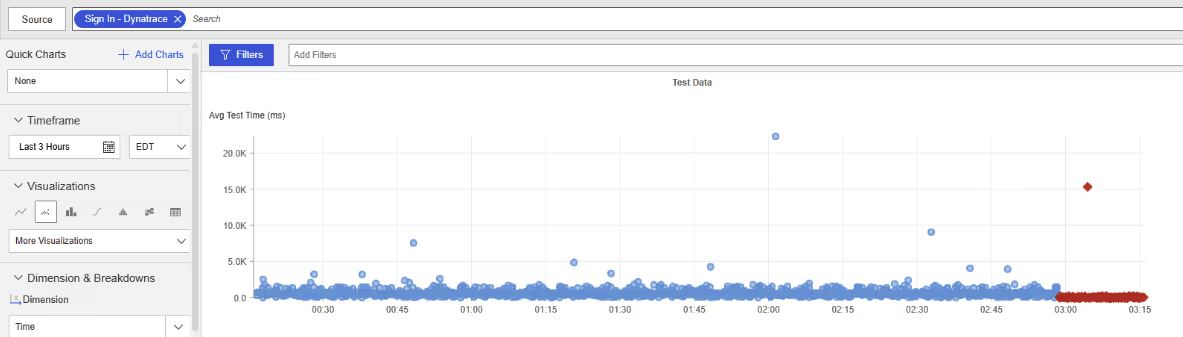

Dynatrace

What happened?

At 2:58 AM EDT, the Dynatrace login service stopped working for users in several countries, including the United Kingdom, Romania, Serbia, the Netherlands, and South Africa. Login attempts failed with connection errors, meaning users' devices could not reach the login servers at all.

Takeaways

Because the login service failed, users could not access Dynatrace even though the rest of the platform may still have been running. This highlights a particular challenge: monitoring tools themselves depend on the same internet stack they measure. Failures in DNS, routing (BGP), or authentication layers can affect monitoring platforms. Independent, external monitoring provides a safety net, essentially "monitoring the monitors", so that visibility is not lost when the tools themselves are affected.

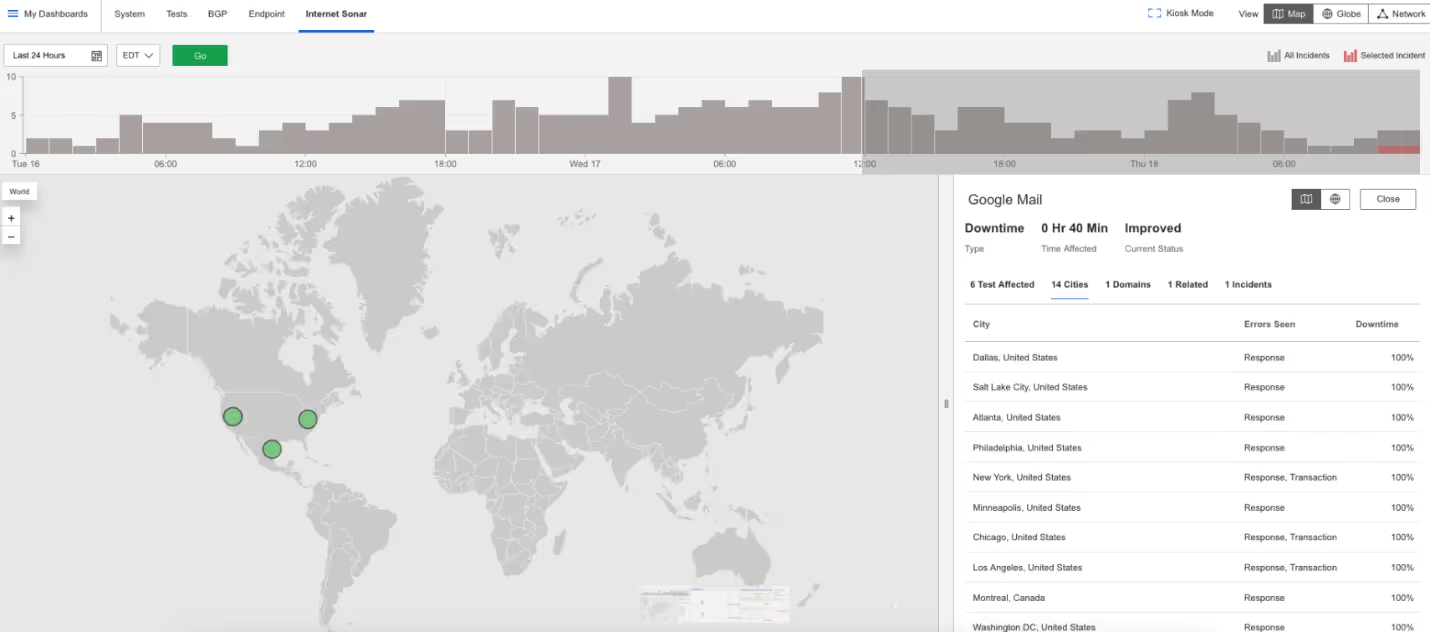

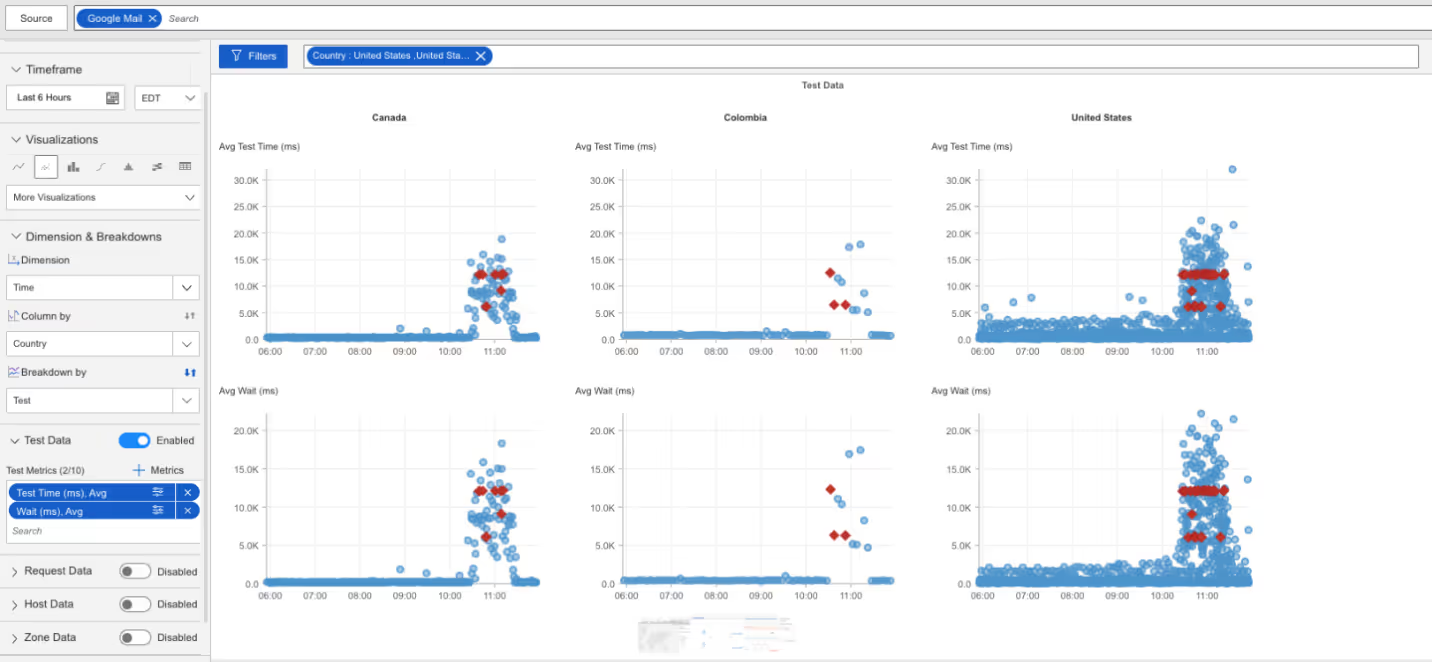

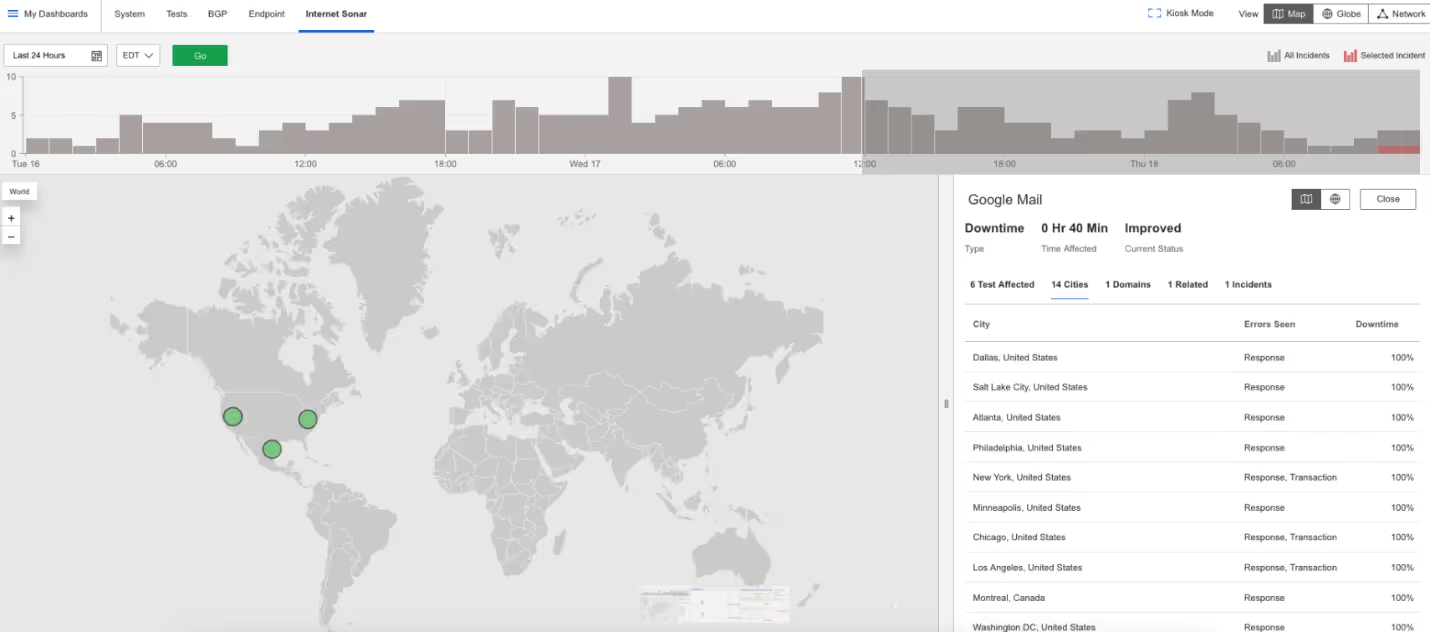

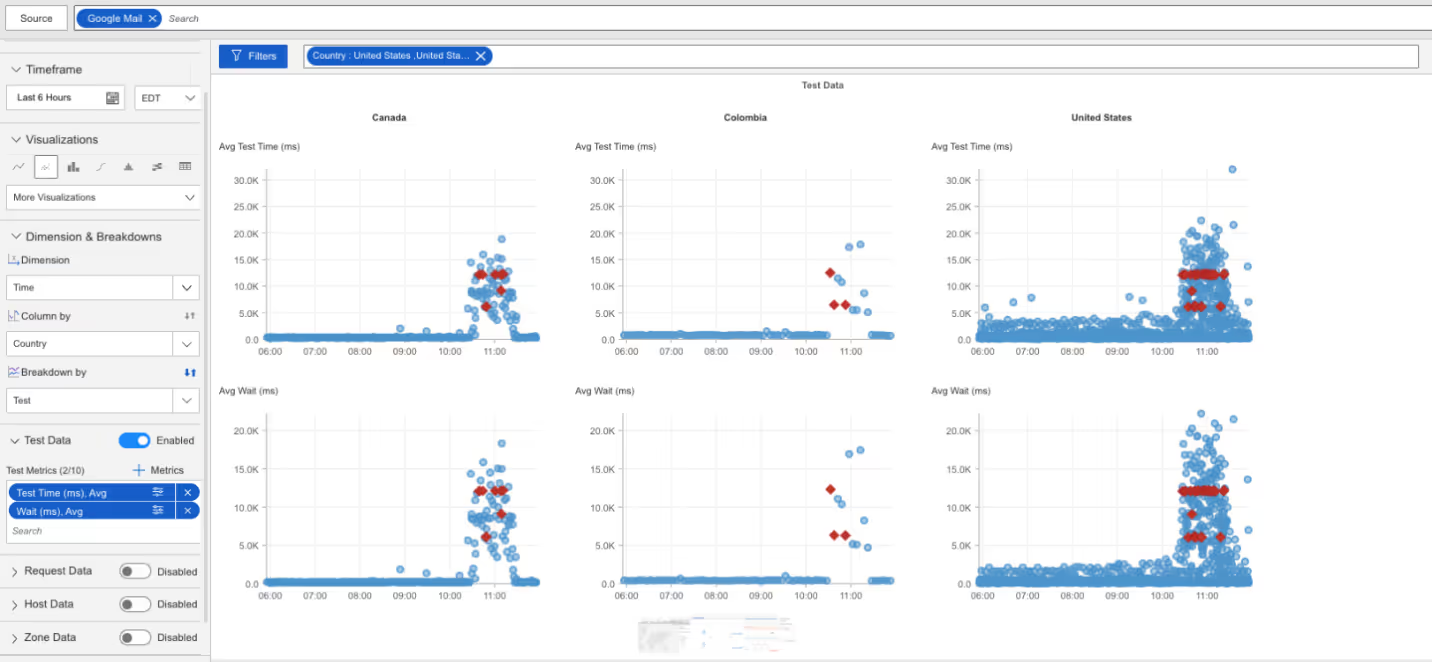

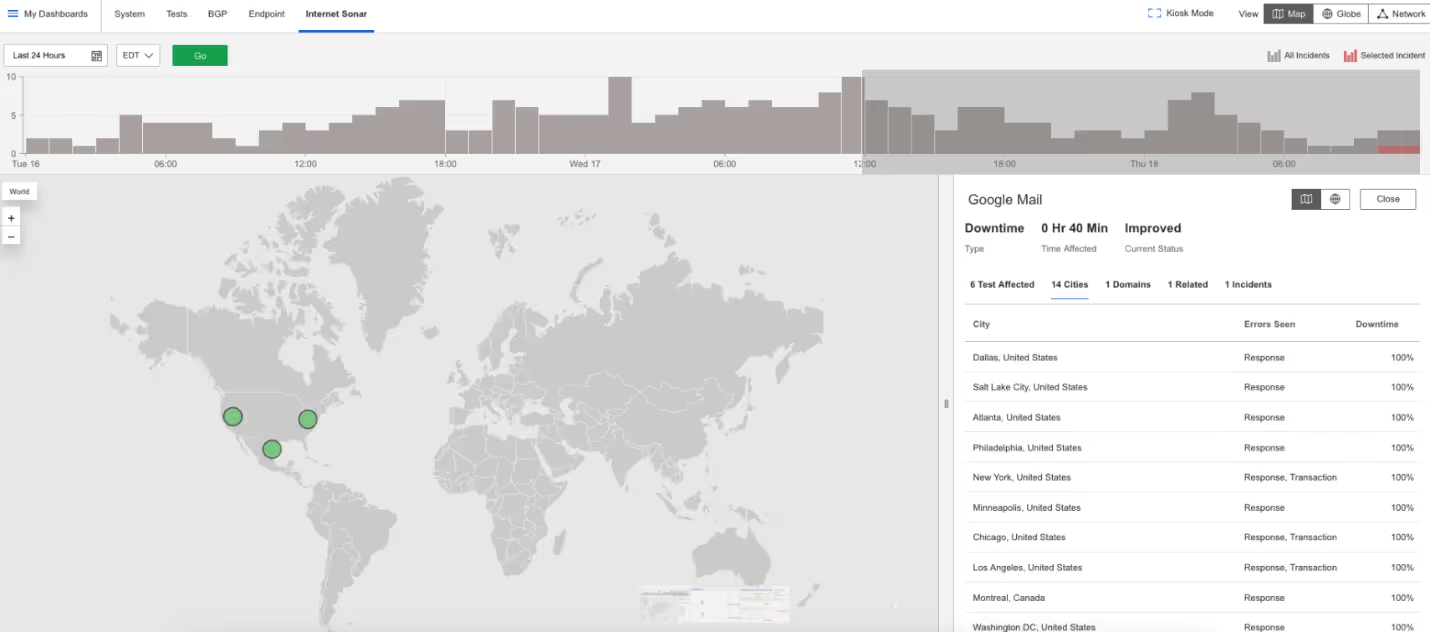

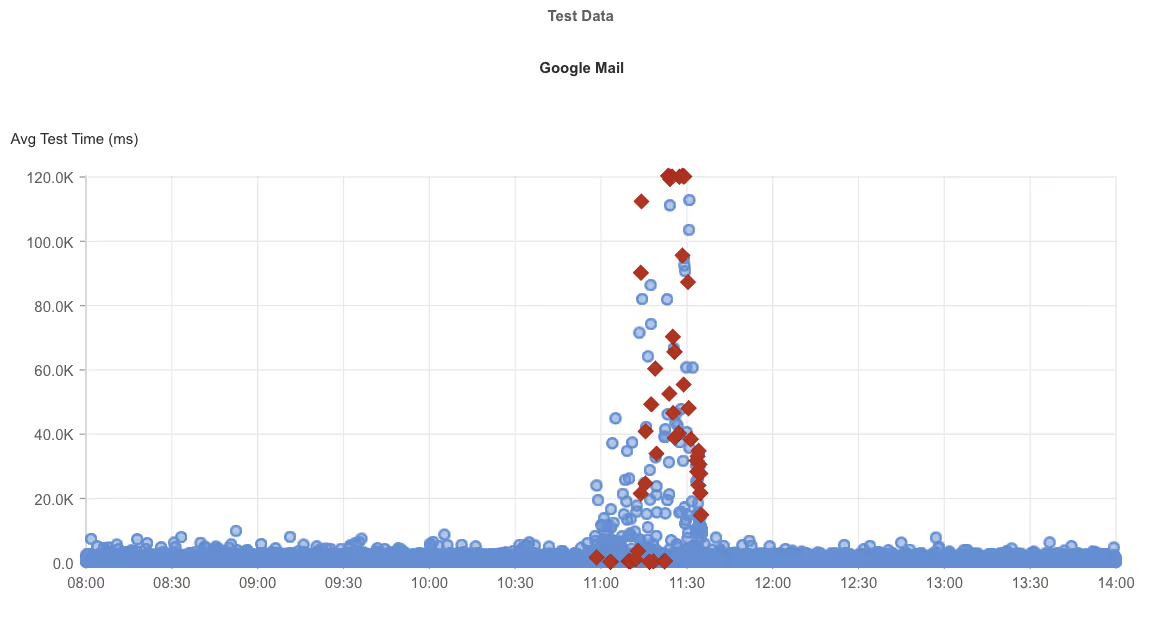

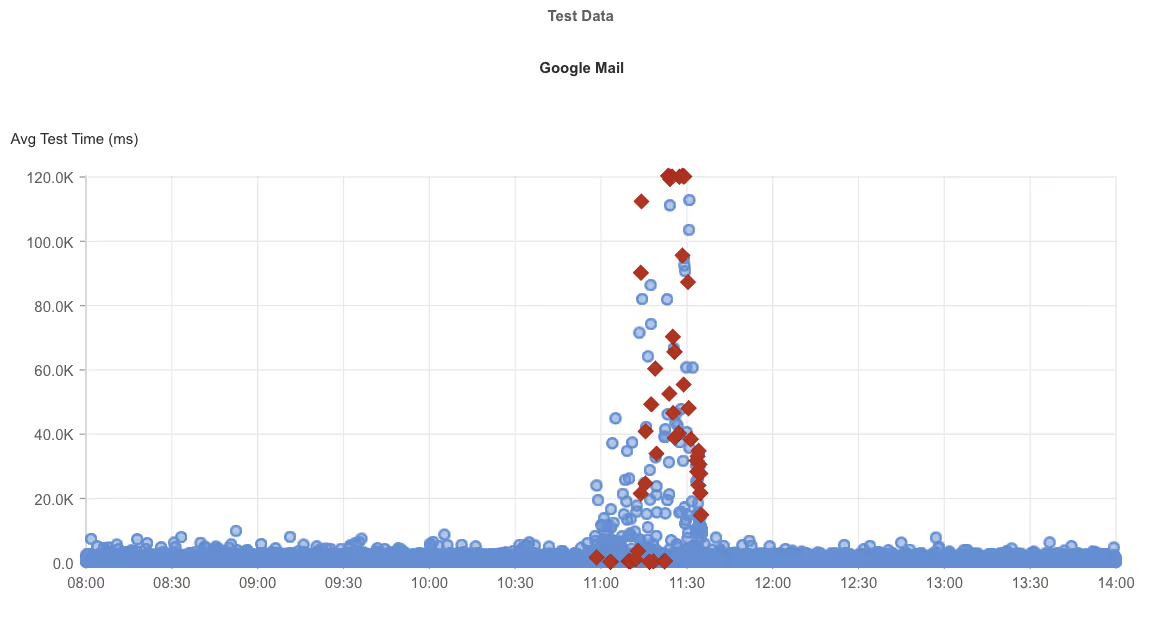

Gmail

What happened?

From 10:40 to 11:20 AM EDT, Gmail users were unable to sign in. Attempts to reach the login page returned HTTP 502 Bad Gateway errors, meaning one server received an invalid response from another. Load times had also been slower since 10:26 AM EDT.

Takeaways

Login failures are especially disruptive because they block access even when the mail servers are fine. By monitoring the entire user journey, from login to inbox, with synthetic monitoring, companies can catch these problems early and strengthen their internet resilience.
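The idea of checking a whole user journey rather than a single URL can be sketched as a sequence of named steps that stops at the first failure. The Python below is only an illustration under stated assumptions: `run_journey` and the step names are hypothetical, and real steps would wrap actual HTTP or browser actions.

```python
import time
from typing import Callable, Sequence

# A step is a (name, action) pair; the action raises an exception on failure.
Step = tuple[str, Callable[[], None]]


def run_journey(steps: Sequence[Step]) -> dict:
    """Run steps in order and stop at the first one that raises."""
    timings: dict[str, float] = {}
    for name, action in steps:
        started = time.perf_counter()
        try:
            action()
        except Exception as exc:
            # Report which step broke so the failure can be located.
            return {"ok": False, "failed_step": name,
                    "error": str(exc), "timings": timings}
        timings[name] = time.perf_counter() - started
    return {"ok": True, "failed_step": None, "error": None, "timings": timings}
```

With steps such as `load_login_page`, `submit_credentials`, and `open_inbox`, a failure at sign-in would be reported against the second step even if the mail backend behind it were healthy.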

NS1

What happened?

Between 7:08 and 7:32 EDT, NS1's DNS services failed in several regions. DNS timeouts (when the system that translates website names into IP addresses does not respond in time) interrupted access to many customer websites, including Pinterest.

Takeaways

When DNS fails, websites become unreachable even if their servers are fine. Because NS1 underpins many services, its outages have a wide reach. Independent monitoring of DNS health and internet routing (BGP) is key to detecting outages quickly and confirming their scope.

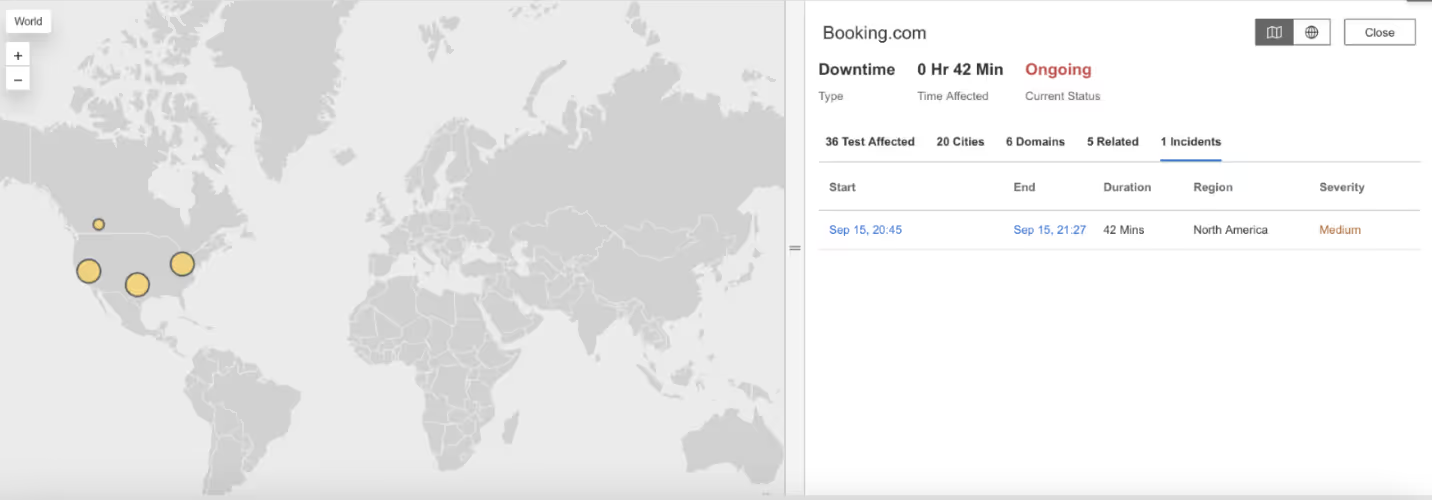

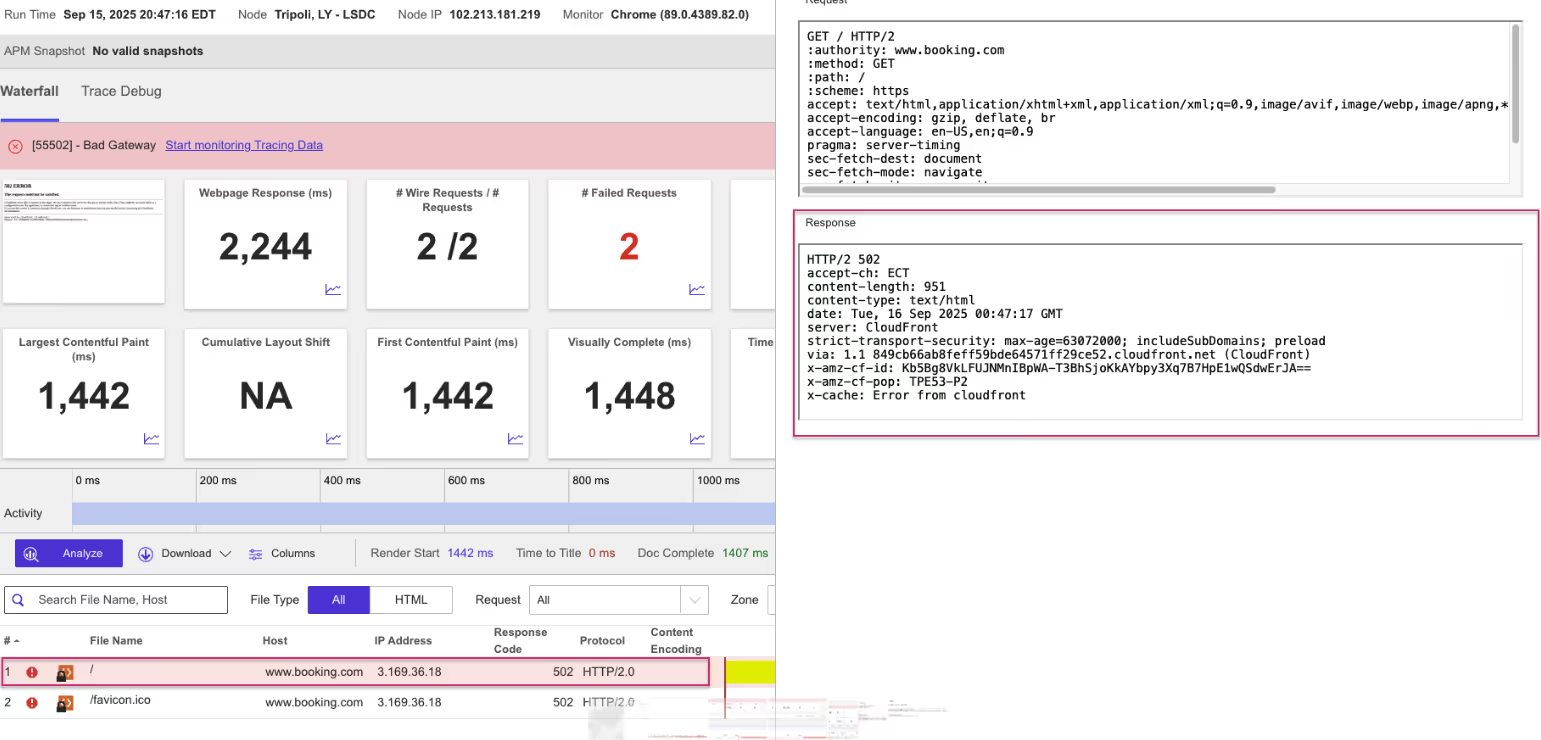

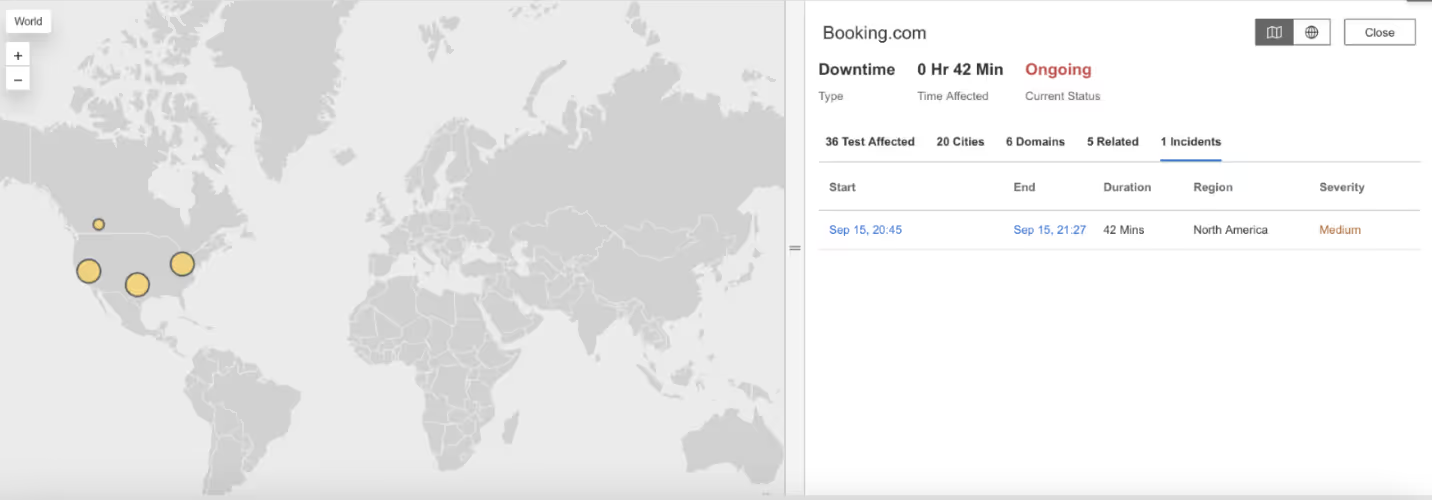

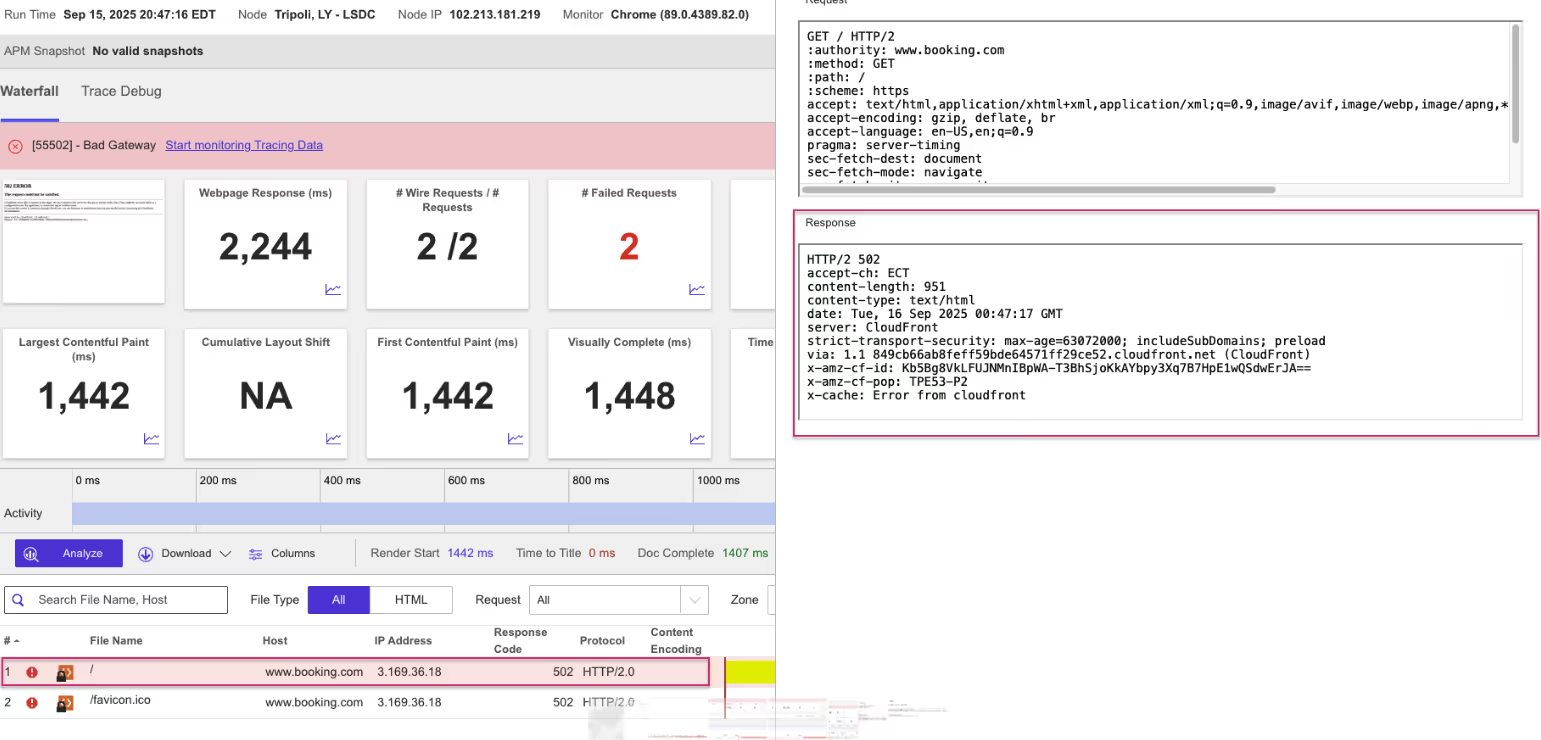

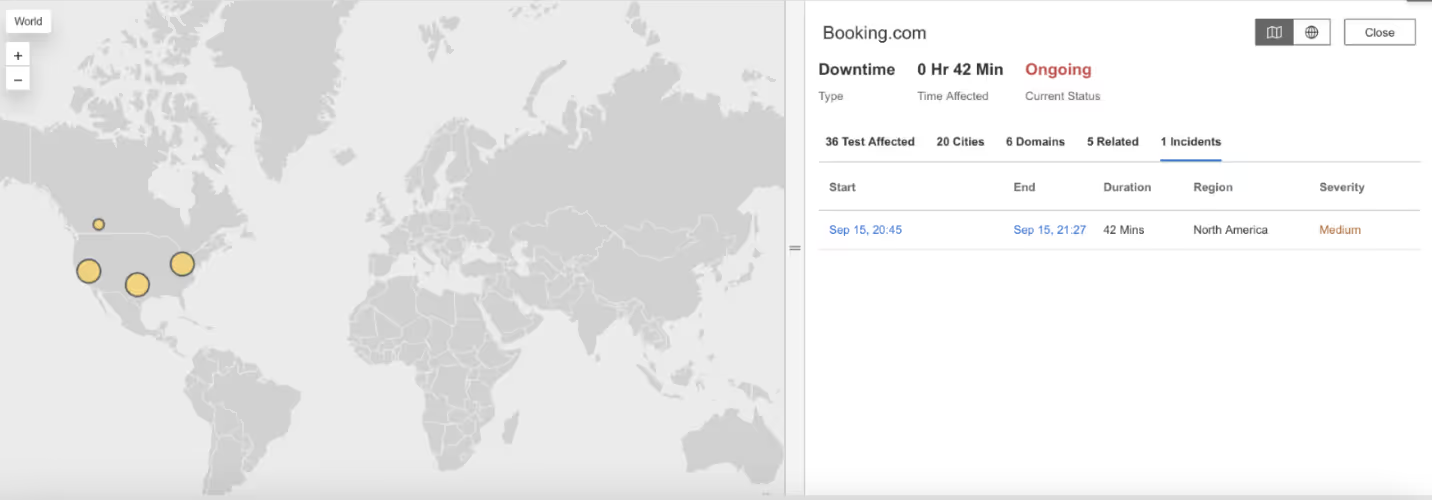

Booking.com

What happened?

At 8:00 PM EDT, Booking.com services went down in several cities. Users received HTTP 502 Bad Gateway errors, meaning the servers handling traffic could not reach the systems behind them.

Takeaways

For travel platforms, outages block bookings and damage trust. A 502 error often points to failures between load balancers and application servers. Synthetic monitoring of APIs and user transactions helps detect these failures early and shows exactly where in the service chain they occur.

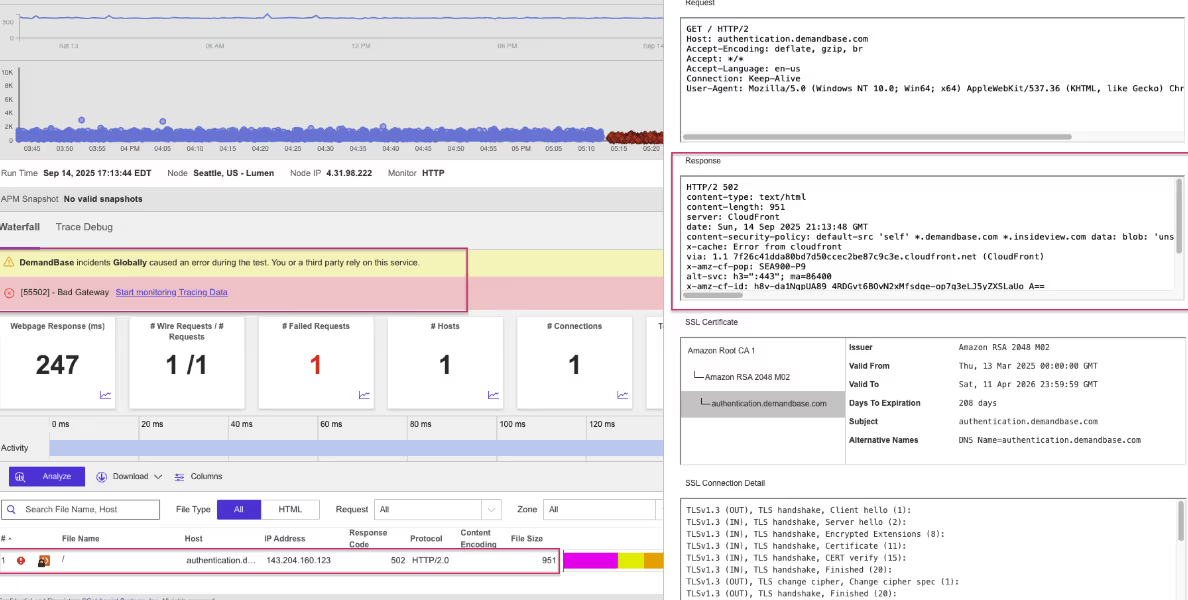

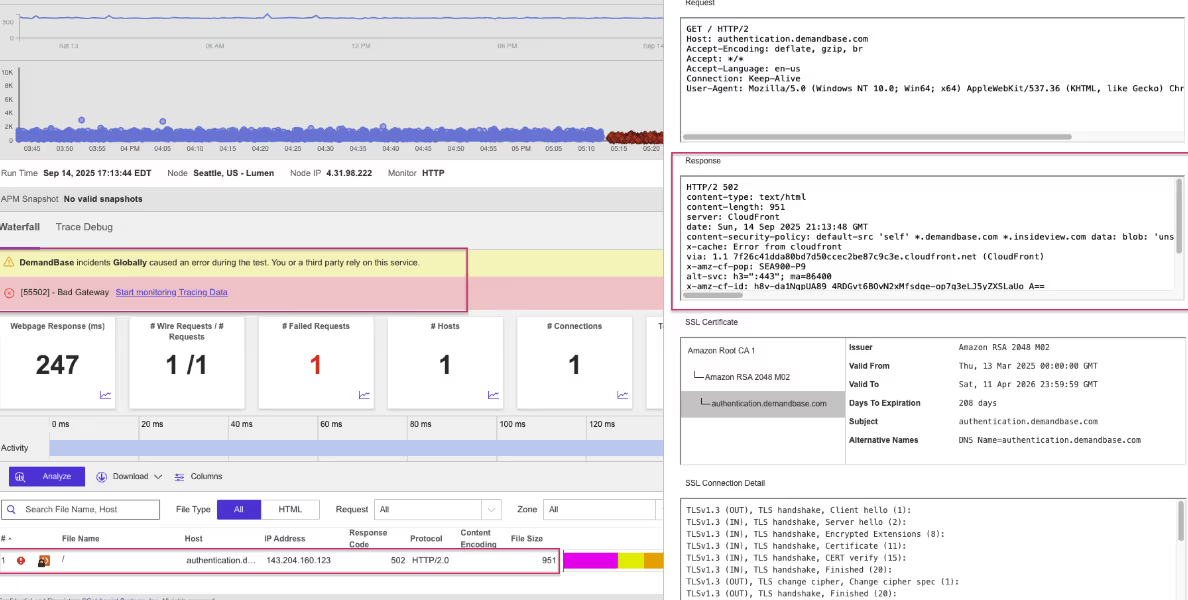

DemandBase

What happened?

From 5:13 to 6:55 PMT, DemandBase experienced a global outage. Users encountered HTTP 502 Bad Gateway errors and, later, an SSL certificate error that blocked secure connections.

Takeaways

This outage combined server connectivity problems with a security misconfiguration. Because SSL certificates are required for encrypted access, an expired or invalid certificate can lock users out completely. Monitoring SSL health alongside DNS and application performance helps prevent small oversights from turning into major global outages.
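One simple form of SSL health monitoring is tracking how many days remain before a certificate expires. The sketch below uses only Python's standard `ssl` and `socket` modules; the helper names `days_until_expiry` and `fetch_not_after` are made up for this example, and any alert threshold is left to the reader.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter timestamp.

    `not_after` uses the format returned by SSLSocket.getpeercert(),
    for example "Jan  5 09:34:43 2018 GMT".
    """
    expires_at = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires_at - current) / 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Read notAfter from a live server (needs network access).

    With the default context, an expired or invalid certificate makes the
    handshake raise ssl.SSLCertVerificationError, which is itself an alert.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

A scheduled job could call `days_until_expiry(fetch_not_after("example.com"))` and warn when the value drops below a chosen number of days; the hostname here is a placeholder.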

Azure

What happened?

At 3:33 PM EDT, Microsoft's Azure cloud platform went down in several regions. Users saw HTTP 500 Internal Server Error and HTTP 503 Service Unavailable responses. A 500 error means the server failed while processing the request, while a 503 error means it is overloaded or unavailable.

Takeaways

Azure is critical infrastructure for businesses, so outages affect countless dependent services. Monitoring the entire internet stack, from cloud infrastructure to DNS and CDNs, helps companies understand whether the problem lies with the cloud provider or elsewhere.

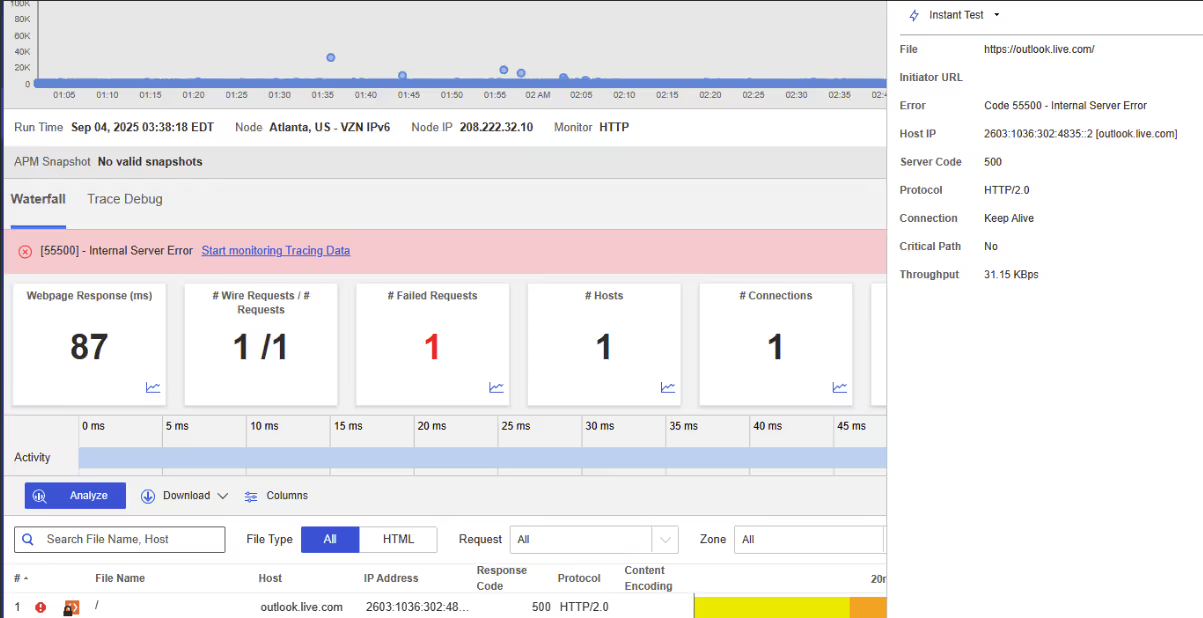

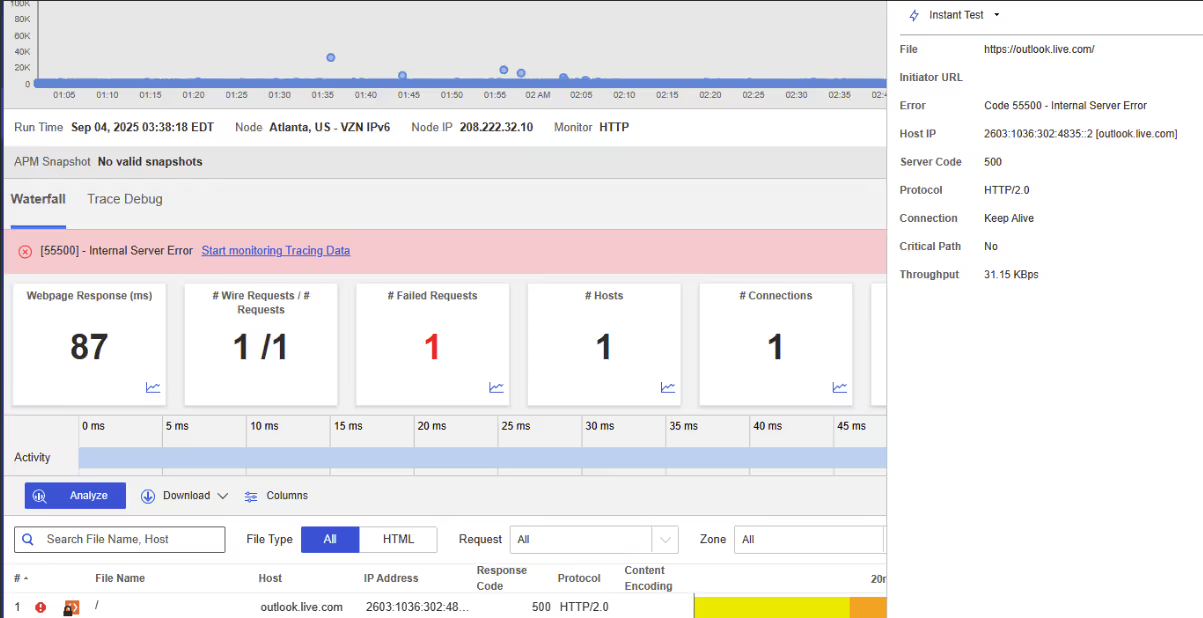

Microsoft Outlook

What happened?

At 3:38 AM EDT, Outlook suffered an outage across North America. Users received HTTP 500 Internal Server Error responses, indicating that the problem was on Microsoft's servers.

Takeaways

Email is critical for businesses and government agencies alike. Even short outages disrupt communication. Synthetic monitoring of SaaS services gives organizations an independent way to detect disruptions early and determine whether the problem lies with the provider or with the internet itself.

Twitter

What happened?

At 5:33 PM EDT, Twitter went down in several countries. Users saw HTTP 500 Internal Server Error responses, meaning requests reached Twitter's servers but failed because of internal problems.

Takeaways

Social media outages are highly visible. Internal server errors often trace back to backend failures or overload. Monitoring from a global perspective is critical for determining whether an outage is regional or global, which lets providers respond faster.

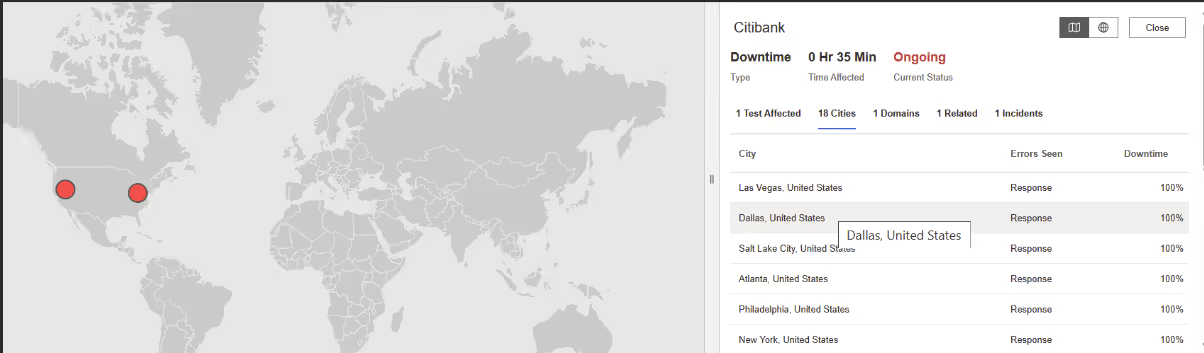

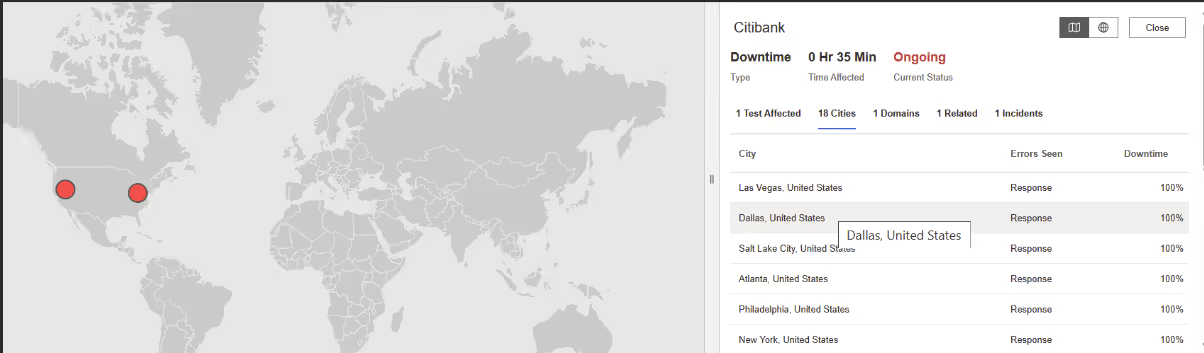

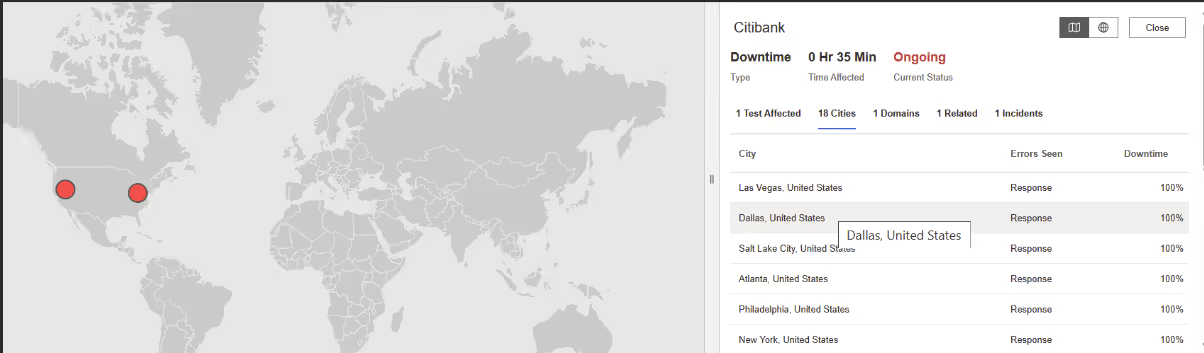

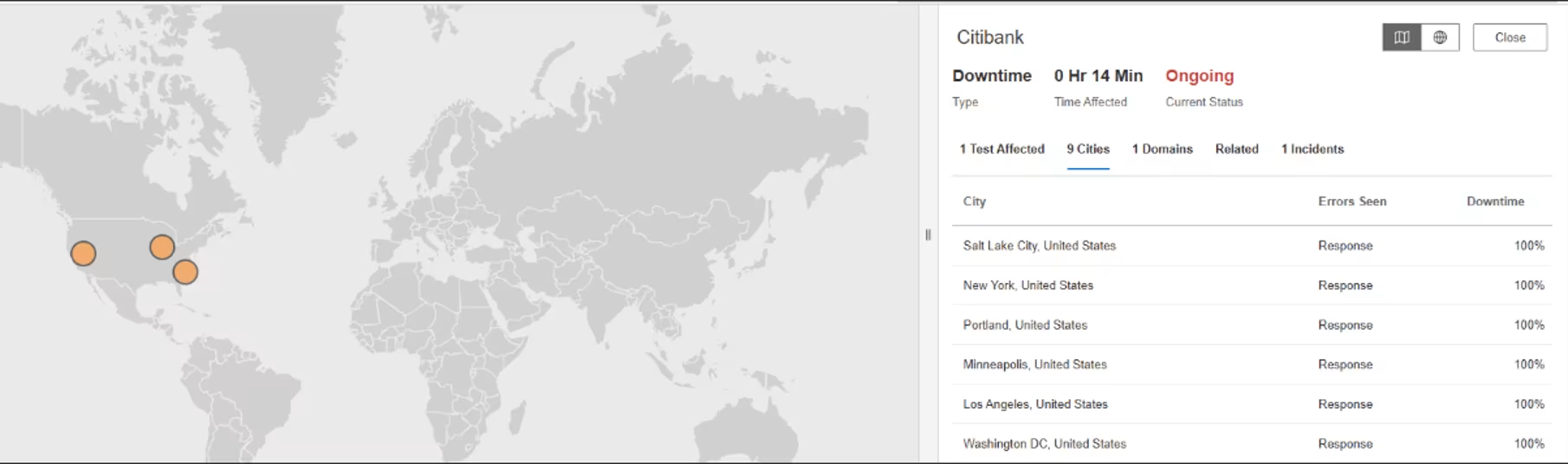

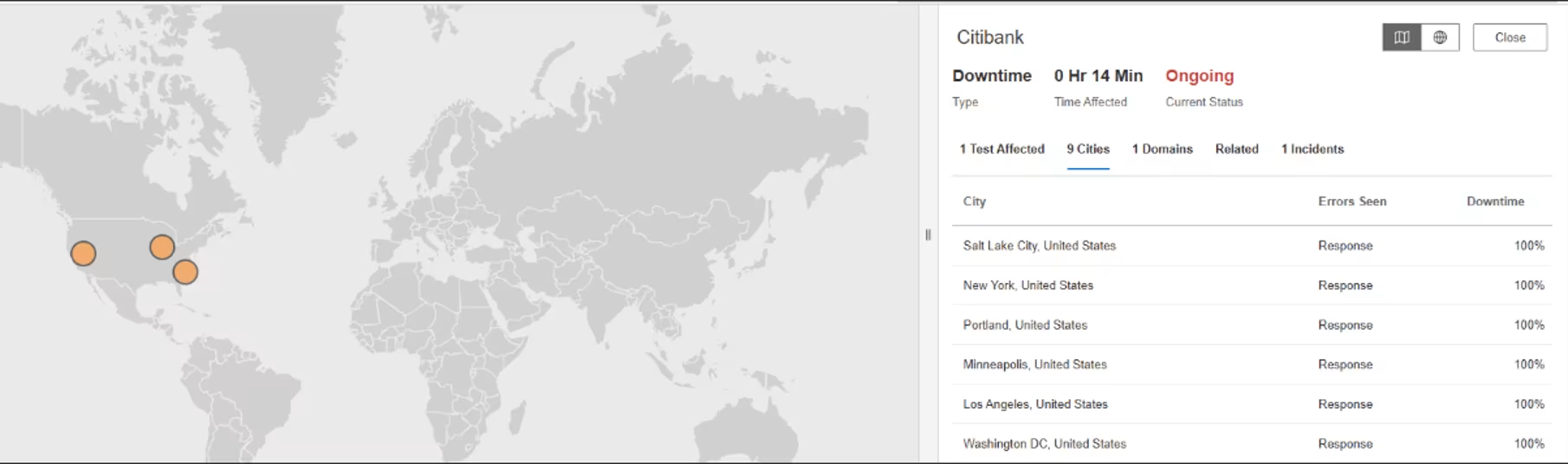

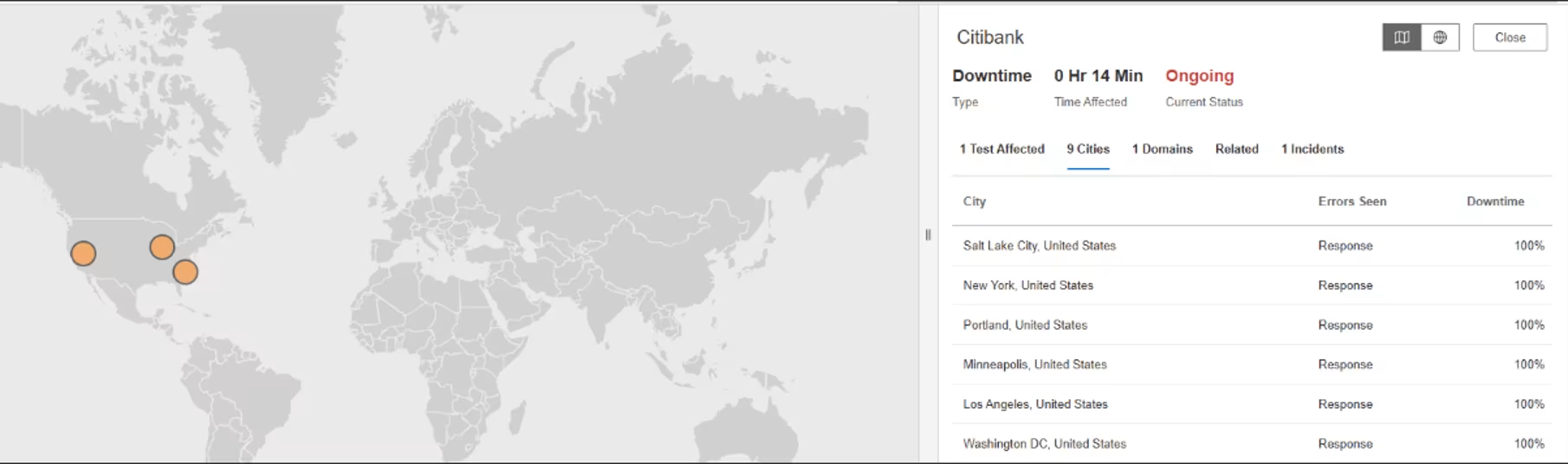

Citibank

What happened?

From 3:10 to 5:04 EDT, Citibank services were disrupted across North America. Customers encountered HTTP 502 Bad Gateway errors, indicating that the front-end servers could not reach the banking systems behind them.

Takeaways

For financial institutions, downtime blocks important transactions and erodes trust. Monitoring the DNS, TLS, and application performance layers provides early warning and helps keep banking services stable.

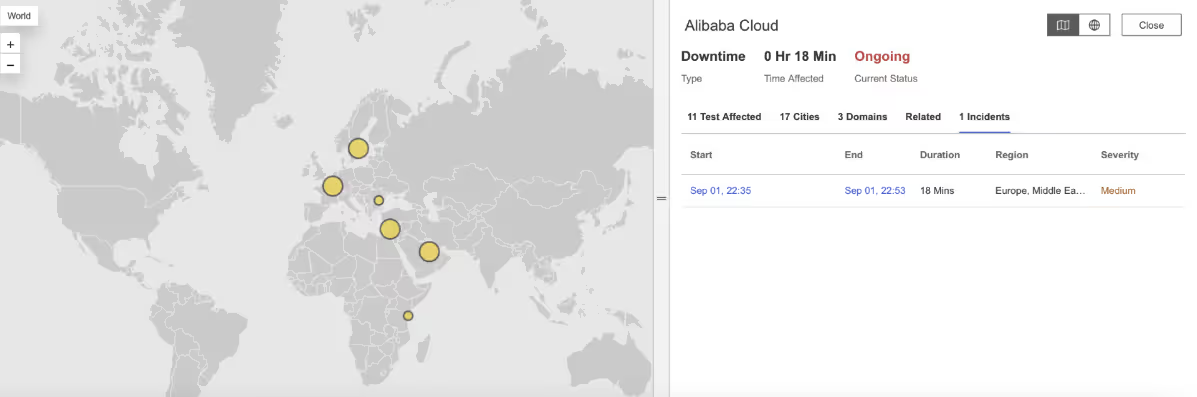

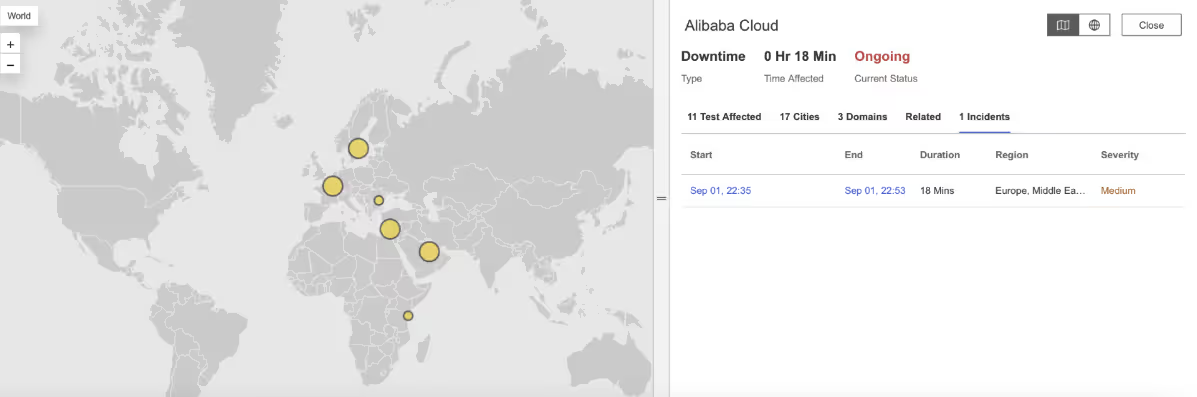

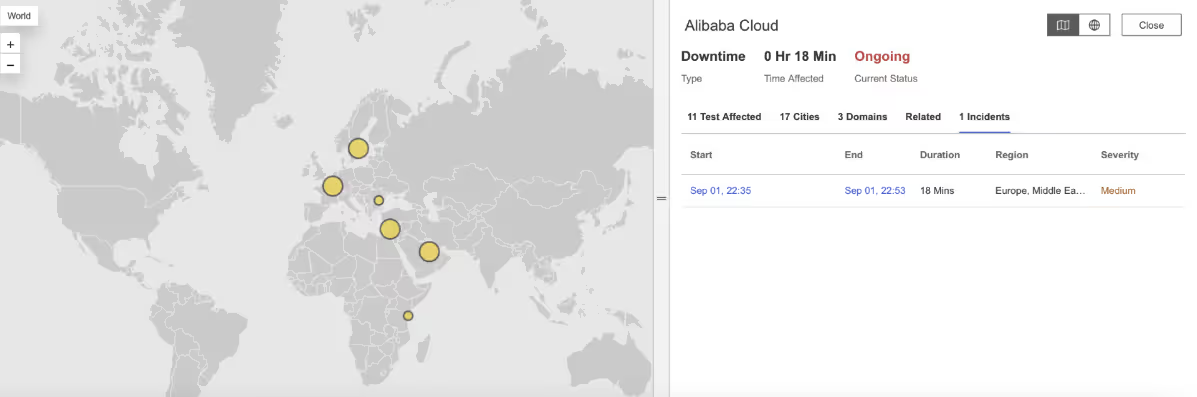

Alibaba Cloud

What happened?

From 10:35 to 10:50 EDT, Alibaba Cloud experienced a regional outage. Users saw slow responses and a range of errors: HTTP 500 Internal Server Error, 502 Bad Gateway, 504 Gateway Timeout, and 413 Payload Too Large.

Takeaways

The mix of errors points to stress in different parts of the Alibaba Cloud infrastructure, from server overload to limits on request handling. With synthetic monitoring from distributed locations, companies can confirm the scope of an outage and redirect workloads to unaffected regions to maintain service availability.
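To show how a mix of status codes might be read as a hint about which layer is under stress, here is a small Python sketch. The `LAYER_HINTS` mapping and its labels are a simplification assumed for this example, loosely following the interpretations used in this timeline; they are not a diagnostic standard.

```python
from collections import Counter
from typing import Iterable

# Assumed, simplified mapping from status code to the layer it hints at.
LAYER_HINTS = {
    500: "application",     # server failed while handling the request
    502: "upstream",        # gateway received an invalid backend response
    503: "capacity",        # server overloaded or temporarily unavailable
    504: "upstream",        # gateway timed out waiting for a backend
    413: "request-limits",  # request body exceeded a configured size limit
}


def summarize_errors(status_codes: Iterable[int]) -> dict:
    """Count error responses (4xx/5xx) by the layer they hint at."""
    counts = Counter(
        LAYER_HINTS.get(code, "other")
        for code in status_codes
        if code >= 400
    )
    return dict(counts)
```

A sample dominated by "upstream" would suggest trouble between gateways and backends, whereas a spread across several labels would fit the broader infrastructure stress described above.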

August

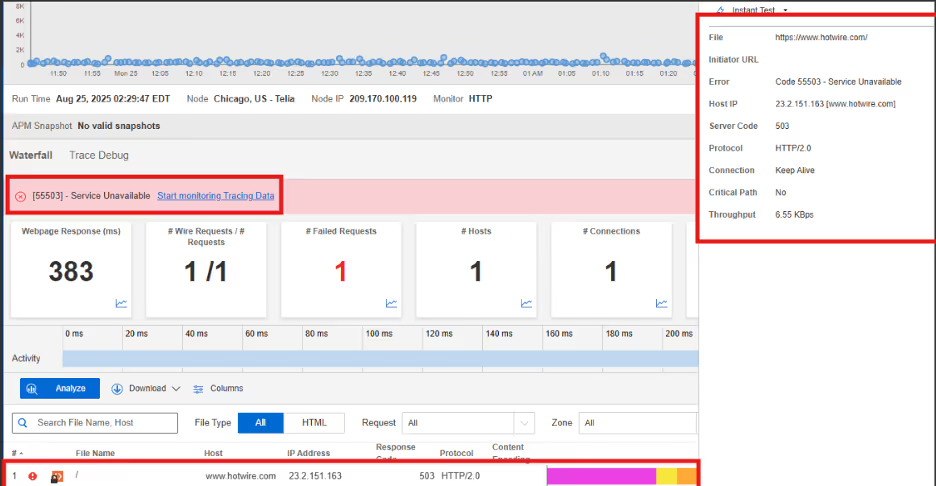

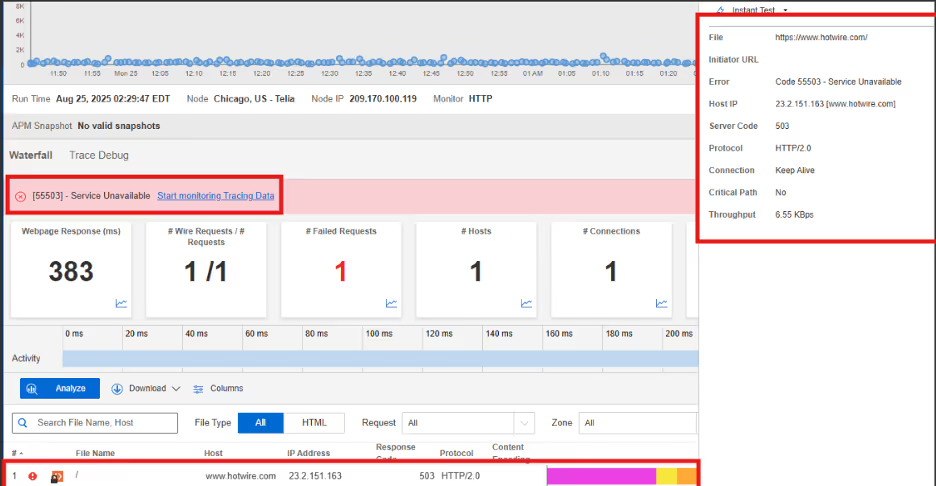

Hotwire

What happened?

On August 25, 2025, at 2:09 AM EDT, Internet Sonar detected an outage affecting Hotwire services in several regions, including the US and Canada. During the incident, requests to https://www.hotwire.com/ from multiple locations returned HTTP 504 (Gateway Timeout) and HTTP 503 (Service Unavailable) responses.

Takeaways

Service unavailability and gateway timeouts occurring together suggest both exhausted server-side resources and upstream communication problems. The impact across multiple regions indicates that this was not a localized failure but a broader service disruption. Continuous monitoring from distributed vantage points was key to quickly establishing the scope and nature of the failures.

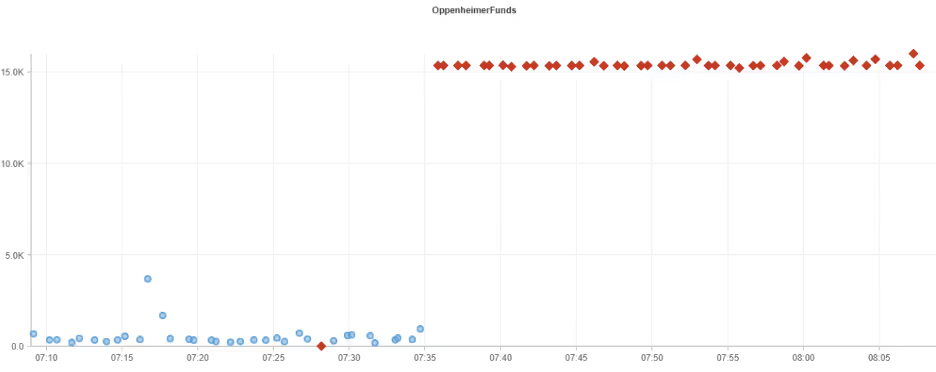

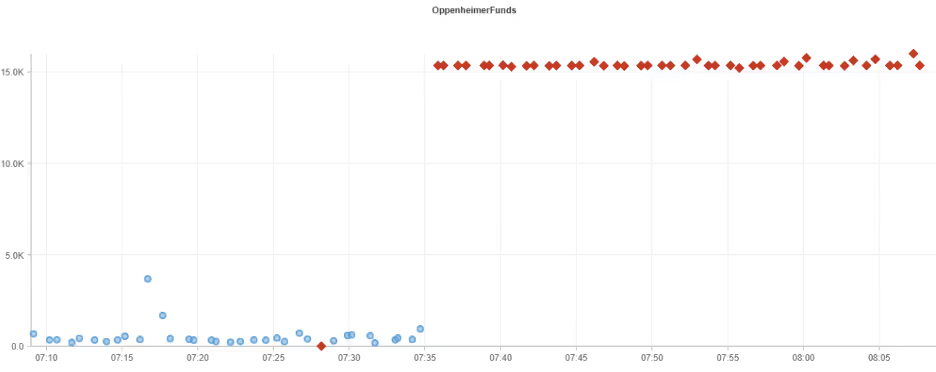

Oppenheimer Funds

What happened?

On August 23, 2025, at 7:35 AM EDT, Internet Sonar detected an outage affecting Oppenheimer Funds services across North America. During the incident, high connect times were observed for requests to the domain https://www.oppenheimerfunds.com/.

Takeaways

Unlike hard outages, latency-related disruptions often show up as slower page loads, transaction delays, and possible session failures. Companies should watch not only for service outages but also for performance degradation, as it can be an early warning sign of infrastructure stress or misconfigured dependencies. Proactive performance monitoring and capacity planning are critical to minimizing the business impact of such problems.
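Watching for degradation rather than only hard failures can be as simple as comparing recent connect times against a baseline. The following Python sketch assumes a median comparison with a multiplier of 2.0; both the function name `is_degraded` and the multiplier are illustrative choices, not values from the source.

```python
from statistics import median
from typing import Sequence


def is_degraded(baseline_ms: Sequence[float],
                recent_ms: Sequence[float],
                factor: float = 2.0) -> bool:
    """Flag degradation when the recent median exceeds factor x baseline median.

    The median is used so that one slow or fast outlier does not decide
    the result on its own.
    """
    if not baseline_ms or not recent_ms:
        raise ValueError("both sample sets must be non-empty")
    return median(recent_ms) > factor * median(baseline_ms)
```

An alert based on a check like this could fire while requests are still succeeding, which is the early-warning behavior the takeaway describes.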

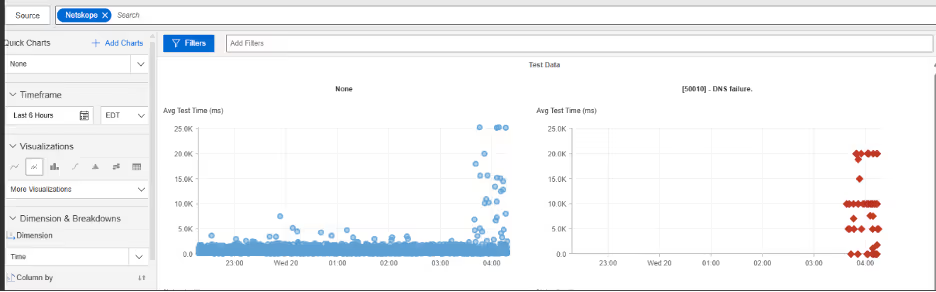

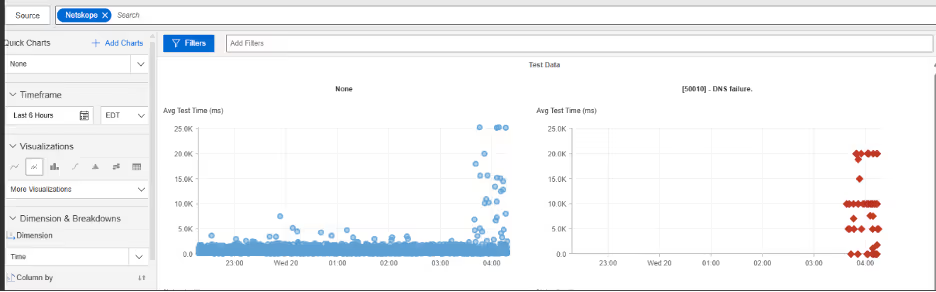

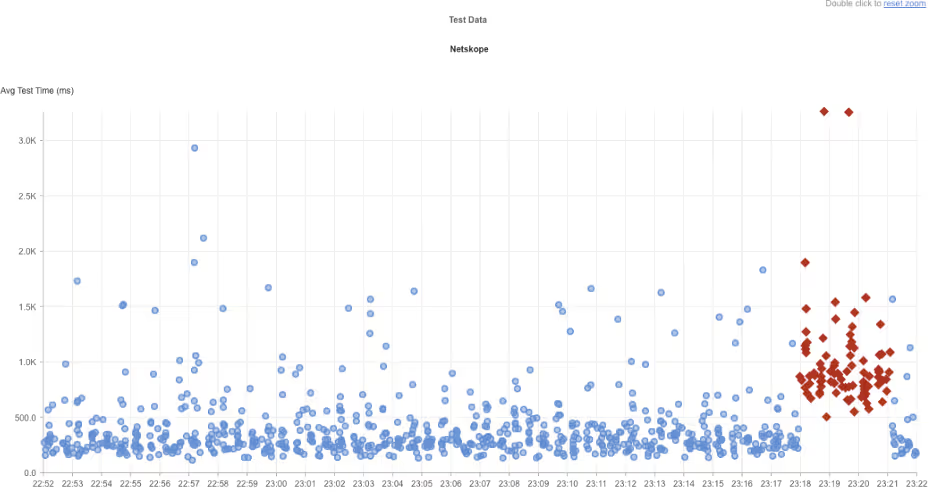

Netskope

What happened?

On August 20, 2025, at 3:38 AM EDT, Internet Sonar detected an outage affecting Netskope in several regions of the United States. During the outage, DNS resolution failures were observed for the domain https://www.netskope.com/ from multiple US locations, starting at 03:38:16 EDT. Queries to level 2 nameservers returned "unknown" responses due to 100% packet loss.

Takeaways

Even when application servers are healthy, failures at the nameserver level can make services inaccessible to users. The 100% packet loss points to a systemic problem rather than a local impairment, suggesting either a misconfiguration on the provider's side or a broader infrastructure disruption. To reduce risk, companies should consider implementing redundant DNS providers, monitoring resolution paths from different locations, and developing failover strategies that minimize user impact when primary nameservers fail.
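The failover idea can be sketched as trying several independent resolvers in order and recording which ones failed. In the Python below, `resolve_with_failover` and the resolver labels are hypothetical; only `system_resolver` touches a real API (`socket.getaddrinfo`), and querying a specific DNS provider would need a resolver function supplied by the reader.

```python
import socket
from typing import Callable, Sequence

# A resolver takes a hostname and returns a list of IP address strings.
Resolver = Callable[[str], list[str]]


def system_resolver(hostname: str) -> list[str]:
    """Resolve through the operating system's configured resolver."""
    infos = socket.getaddrinfo(hostname, None, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def resolve_with_failover(hostname: str,
                          resolvers: Sequence[tuple[str, Resolver]]):
    """Try each (label, resolver) in order; return the first usable answer.

    Returns (label, addresses, errors), where errors maps the label of each
    resolver that failed to a short reason. socket.gaierror and TimeoutError
    are both subclasses of OSError, so one except clause covers them.
    """
    errors: dict[str, str] = {}
    for label, resolver in resolvers:
        try:
            addresses = resolver(hostname)
        except OSError as exc:
            errors[label] = str(exc)
            continue
        if addresses:
            return label, addresses, errors
        errors[label] = "empty answer"
    return None, [], errors
```

The `errors` mapping doubles as monitoring data: a primary provider that keeps appearing there is failing even while users are still being served through the secondary.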

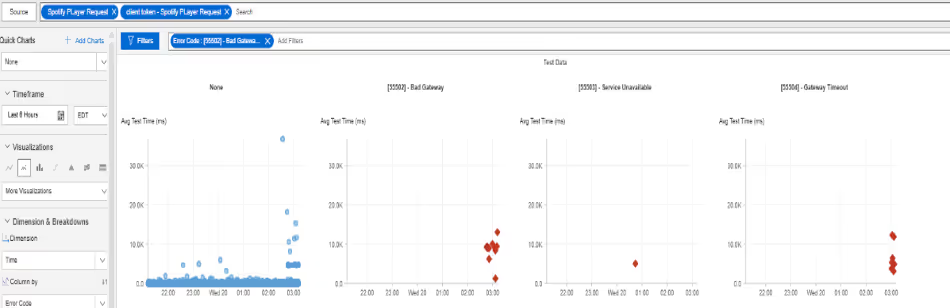

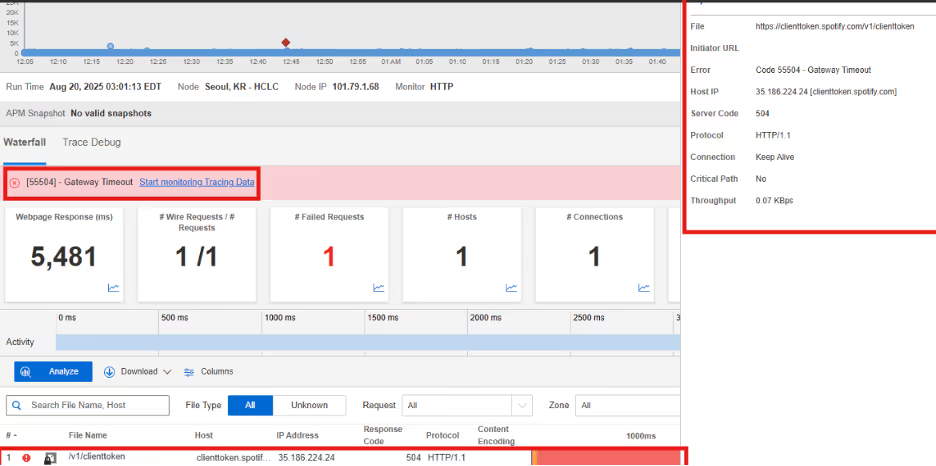

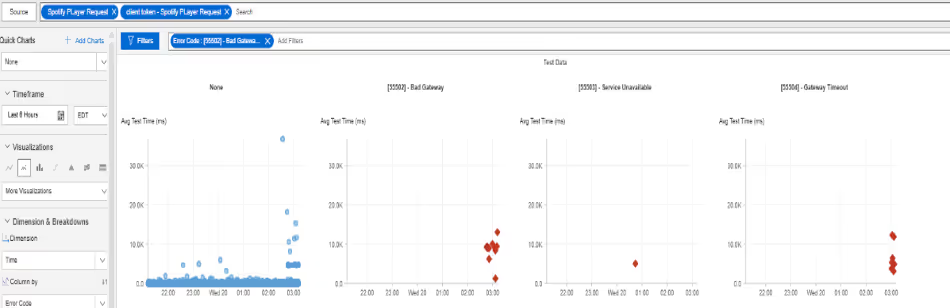

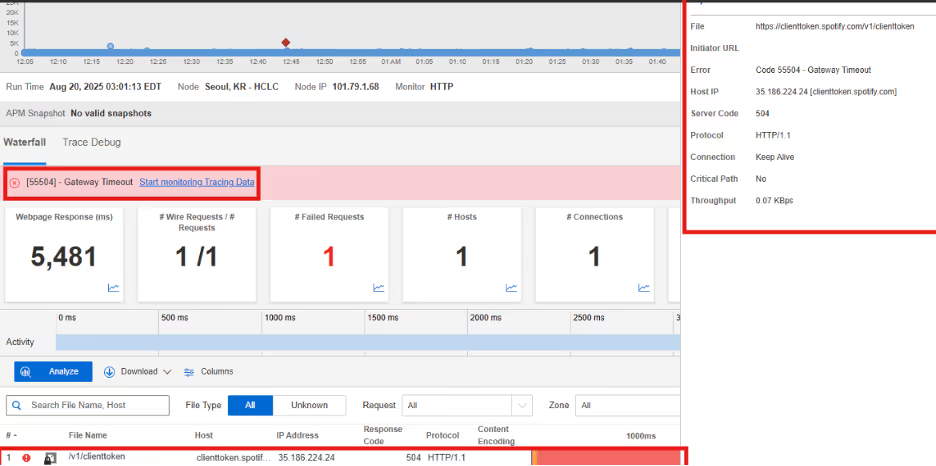

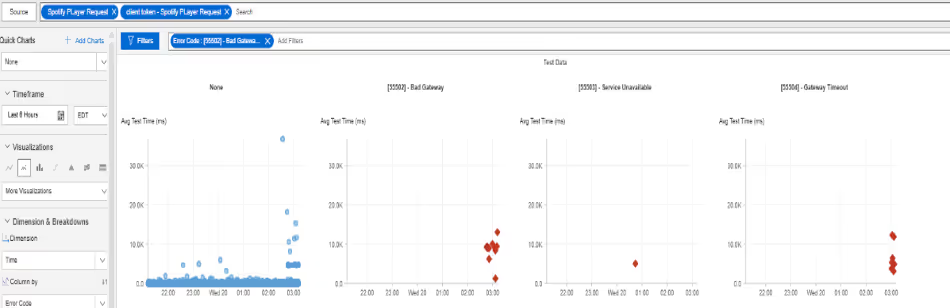

Spotify

What happened?

On August 20, 2025, at 2:45 AM EDT, Internet Sonar detected an outage affecting Spotify services at several locations in the Asia-Pacific region. During the incident, requests to clienttoken.spotify.com and apresolve.spotify.com returned HTTP 502 (Bad Gateway) and HTTP 504 (Gateway Timeout) responses.

Takeaways

This outage highlights the fragility of token authentication and service resolution endpoints, both of which are critical to seamless user access and playback. Failures at these layers often prevent session validation and break connectivity between client applications and core infrastructure. Companies operating globally should implement robust redundancy for authentication and service discovery components, along with proactive health checks, to detect and fix gateway errors quickly before they grow into regional outages.

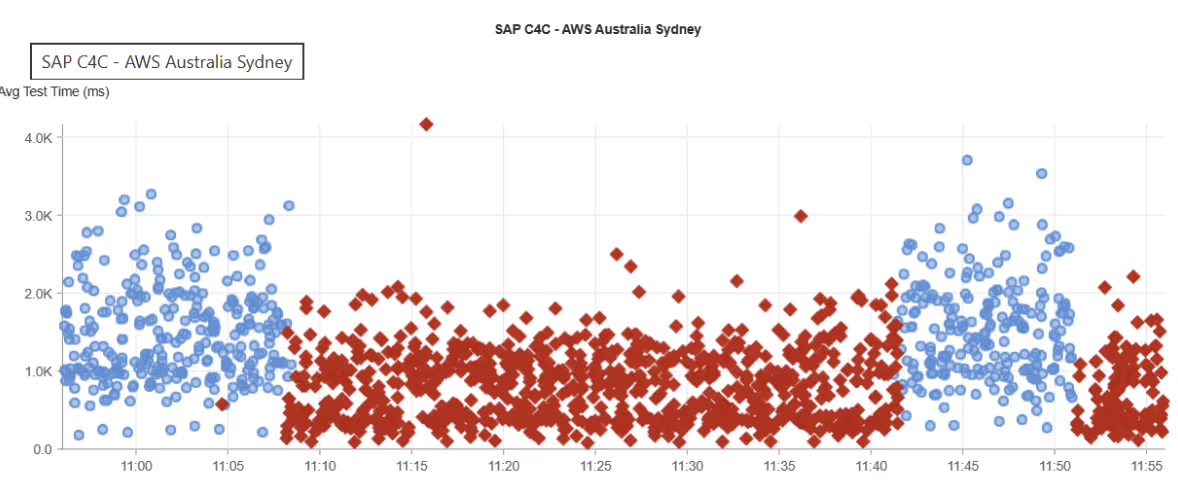

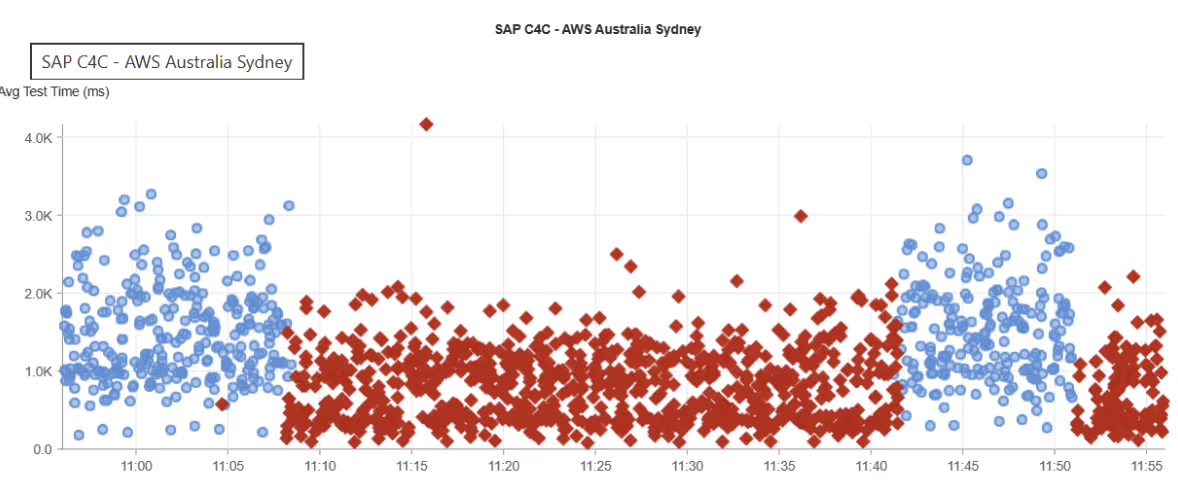

SAP C4C

What happened?

On August 16, 2025, at 9:06 AM EDT, Internet Sonar detected an outage affecting SAP C4C services in several regions, including Asia Pacific, Europe, the Middle East and Africa, Latin America, and North America. During the incident, requests to https://my354302.crm.ondemand.com returned HTTP 503 Service Unavailable responses.

Takeaways

This widespread outage shows how service-layer failures can spread globally when central infrastructure is disrupted. The uniform 503 errors point to resource exhaustion or unavailable backend systems rather than isolated network problems. For cloud-based CRM platforms that support distributed enterprises, such incidents can severely affect business continuity. To reduce the risk, providers should ensure adequate load balancing, geographic redundancy, and capacity headroom to maintain availability in every region they serve.

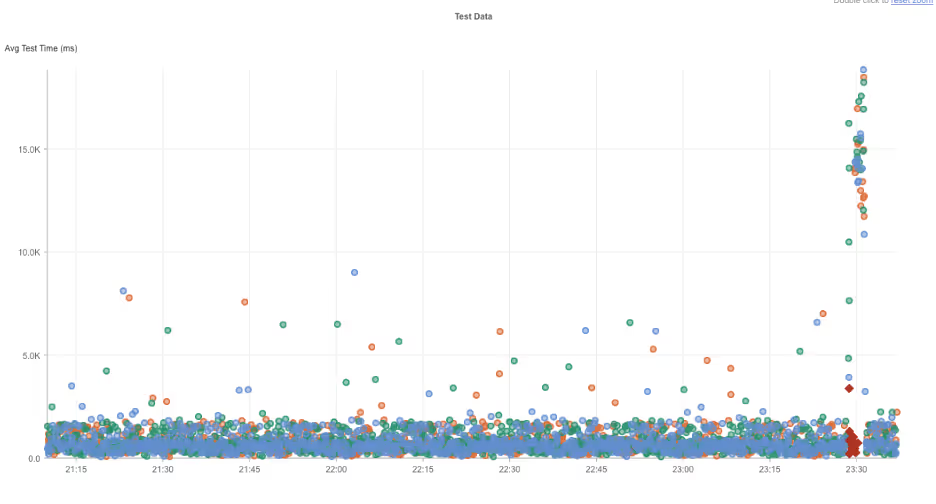

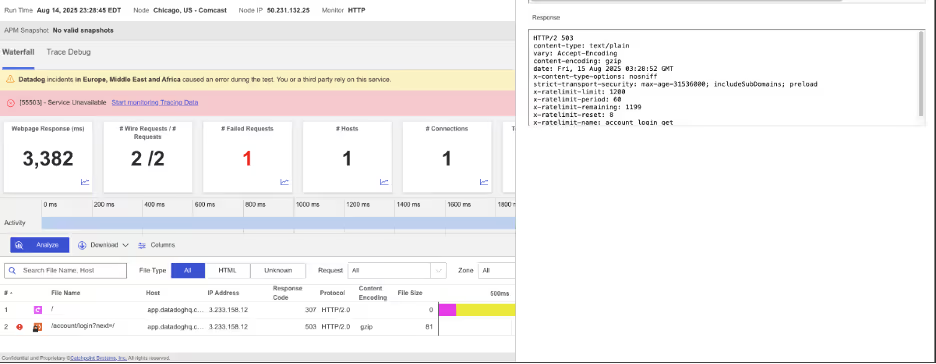

Datadog

What happened?

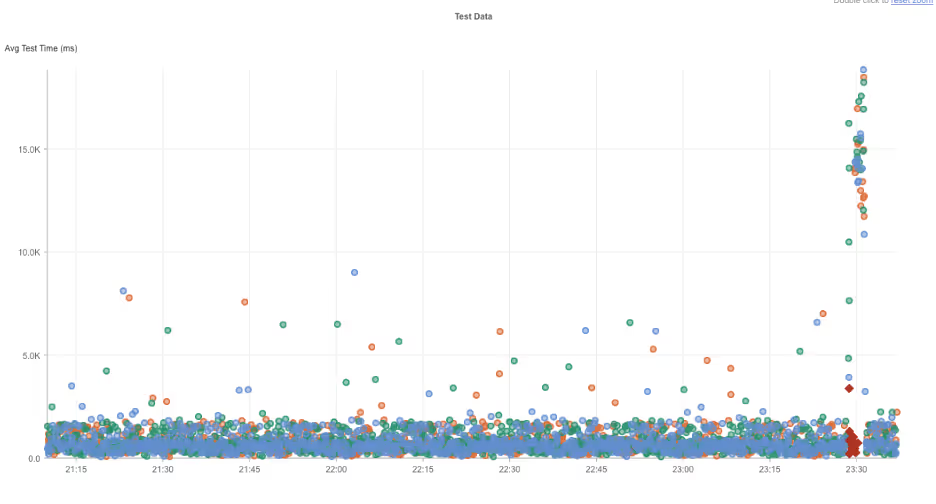

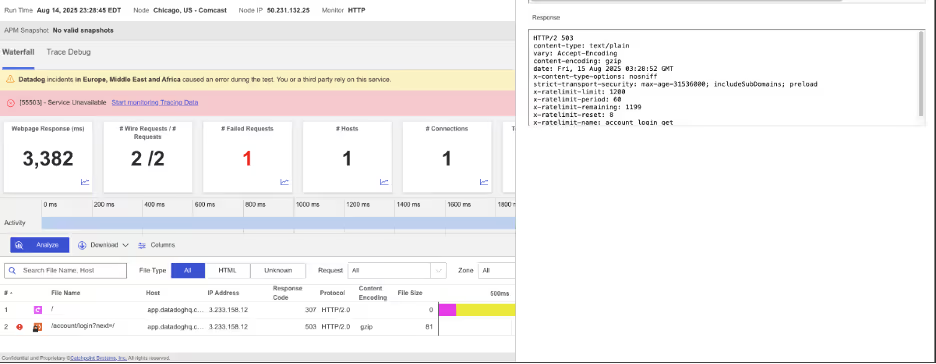

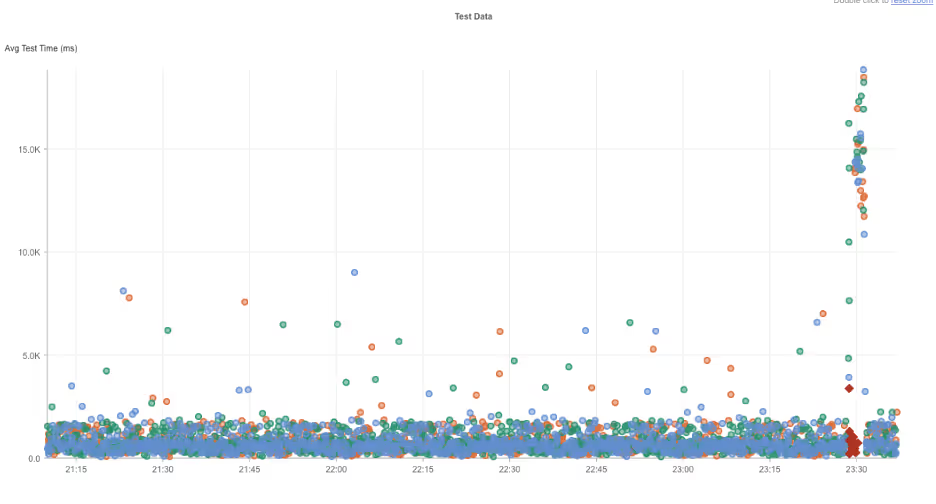

On August 14, 2025, at 11:25 PM EDT, Internet Sonar detected an outage affecting Datadog services in several regions, including Europe, the Middle East, Africa, and North America. During the incident, requests to several Datadog domains, including app.datadoghq.com, logs.datadoghq.com, and synthetics.datadoghq.com, returned HTTP 503 Service Unavailable responses. The service disruption lasted about 5 minutes and was resolved at 11:30 PM EDT.

Takeaways

Although this outage was short-lived, it shows the operational impact of simultaneous failures across several critical service areas. For a platform like Datadog, which provides observability and monitoring at scale, even brief interruptions can have cascading effects on customers' ability to track application health, detect incidents, and respond to ongoing problems. This event underscores the importance of service segmentation, robust failover mechanisms, and proactive incident communication in reducing the business impact of availability failures across multiple domains.

It also raises the question: "Who monitors the monitors?" Because Datadog is hosted in the cloud, any disruption of the underlying hosting environment directly affects both its own services and customers' visibility into their systems. This underscores the value of a robust monitoring strategy with multiple independent vantage points that ensures continuous insight even when a primary monitoring provider fails.

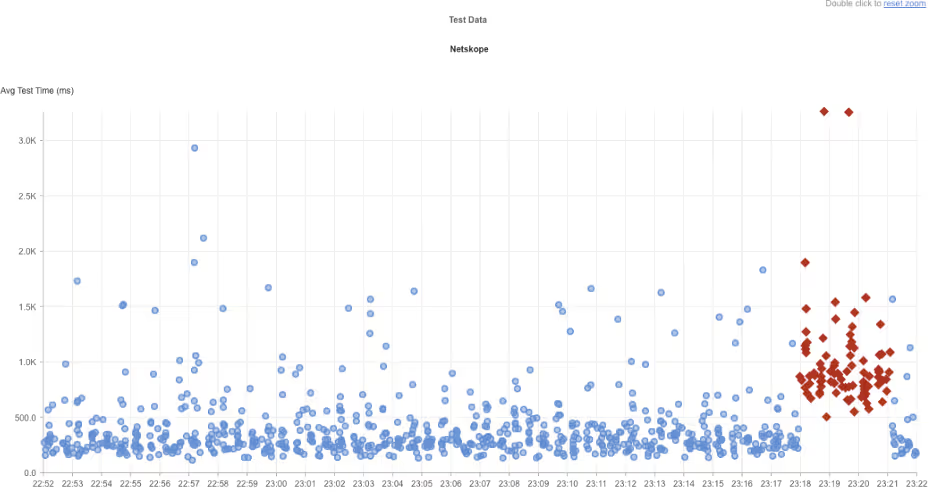

Netskope

What happened?

On August 14, 2025, at 11:17 PM EDT, Internet Sonar detected an ongoing outage affecting Netskope services in several regions, including Asia Pacific, North America, Europe, the Middle East, and Africa. Since the start of the incident, requests to www.netskope.com from multiple global locations have returned HTTP 500 Internal Server Error responses, indicating a widespread server-side problem.

Takeaways

This incident highlights the risks posed by central server-side failures, when the application infrastructure can no longer process requests globally. HTTP 500 errors usually point to misconfigurations, software bugs, or overloaded backend systems, problems that can spread quickly across a cloud-delivered platform like Netskope. The simultaneous global impact underscores the importance of resilient deployment strategies, such as distributed service clusters, failover mechanisms, and staged rollouts, to minimize widespread disruption.

For security and cloud access providers, outages of this kind can be especially disruptive because they affect the very services companies rely on for secure connectivity. Proactive monitoring across multiple regions is essential for fast detection and faster root-cause isolation. Although this outage was short-lived, it shows the operational impact of simultaneous failures across several critical service areas.

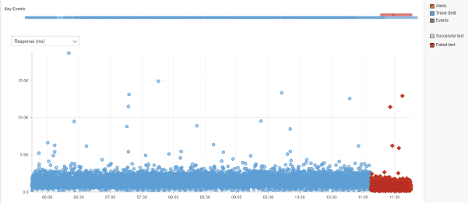

TikTok

What happened?

On August 14, 2025, at 4:36 PM EDT, Internet Sonar detected an outage affecting TikTok services in North America. During the incident, requests to https://www.tiktok.com/en/ returned HTTP 504 Gateway Timeout responses. The outage lasted about 11 minutes, and services were available again at 4:47 PM EDT.

Takeaways

This brief but disruptive outage shows how gateway-level failures can quickly affect availability for a large user base. HTTP 504 errors often point to problems with upstream services or overloaded edge infrastructure that prevent requests from being routed or processed properly. For consumer-facing platforms like TikTok, even short downtime can noticeably degrade the user experience at scale. Redundancy at the gateway layer and fast failover mechanisms are important steps toward minimizing the impact of such time-critical interruptions.

Aurus Credit Processing

What happened?

On August 14, 2025, at 1:23 AM EDT, Internet Sonar detected an outage affecting Aurus Credit Processing services in several regions, including Asia Pacific and North America. During the outage, requests to www.aurusinc.com experienced connection failures and elevated connect times. The disruption was brief, and services were available again at 1:30 AM EDT.

Takeaways

Even short outages of payment and credit processing services can have serious consequences, because they disrupt transaction flow and erode customer trust. In this case, elevated connect times combined with outright failures point to temporary strain on the network or infrastructure rather than a complete service failure. Such incidents show why latency should be monitored as closely as availability, since early performance degradation often precedes larger outages. Implementing redundancy in payment gateways and ensuring fast failover mechanisms are key to minimizing interruptions to business-critical financial services.

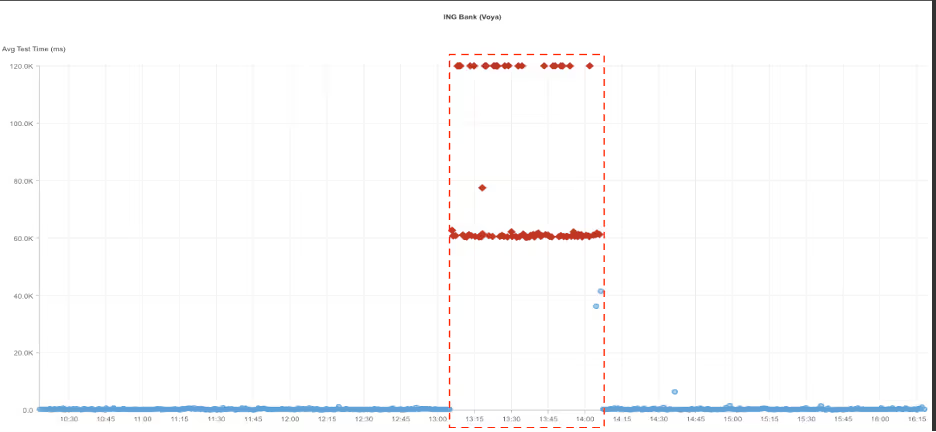

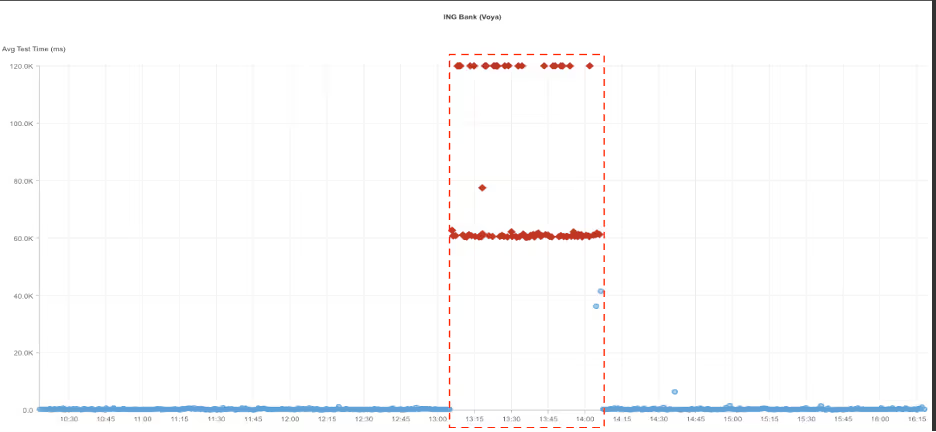

ING Bank (Voya)

What happened?